Ouwen Huang

ReXGradient-160K: A Large-Scale Publicly Available Dataset of Chest Radiographs with Free-text Reports

May 01, 2025

Abstract:We present ReXGradient-160K, representing the largest publicly available chest X-ray dataset to date in terms of the number of patients. This dataset contains 160,000 chest X-ray studies with paired radiological reports from 109,487 unique patients across 3 U.S. health systems (79 medical sites). This comprehensive dataset includes multiple images per study and detailed radiology reports, making it particularly valuable for the development and evaluation of AI systems for medical imaging and automated report generation models. The dataset is divided into training (140,000 studies), validation (10,000 studies), and public test (10,000 studies) sets, with an additional private test set (10,000 studies) reserved for model evaluation on the ReXrank benchmark. By providing this extensive dataset, we aim to accelerate research in medical imaging AI and advance the state-of-the-art in automated radiological analysis. Our dataset will be open-sourced at https://huggingface.co/datasets/rajpurkarlab/ReXGradient-160K.

Emulating Clinical Quality Muscle B-mode Ultrasound Images from Plane Wave Images Using a Two-Stage Machine Learning Model

Dec 07, 2024

Abstract:Research ultrasound scanners such as the Verasonics Vantage often lack the advanced image processing algorithms used by clinical systems. Image quality is even lower in plane wave imaging - often used for shear wave elasticity imaging (SWEI) - which sacrifices spatial resolution for temporal resolution. As a result, delay-and-summed images acquired from SWEI have limited interpretability. In this project, a two-stage machine learning model was trained to enhance single plane wave images of muscle acquired with a Verasonics Vantage system. The first stage of the model consists of a U-Net trained to emulate plane wave compounding, histogram matching, and unsharp masking using paired images. The second stage consists of a CycleGAN trained to emulate clinical muscle B-modes using unpaired images. This two-stage model was implemented on the Verasonics Vantage research ultrasound scanner, and its ability to provide high-speed image formation at a frame rate of 28.5 +/- 0.6 FPS from a single plane wave transmit was demonstrated. A reader study with two physicians demonstrated that these processed images had significantly greater structural fidelity and less speckle than the original plane wave images.
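For readers unfamiliar with unsharp masking, one of the classical operations the first-stage U-Net is trained to emulate, the following is a minimal sketch of the textbook technique. It is not the authors' implementation: the 3x3 box blur (a Gaussian blur is more common), the `amount` parameter, and the function names `box_blur` and `unsharp_mask` are all assumptions chosen for illustration.

```python
def box_blur(img):
    """3x3 mean filter with edge clamping (assumed blur; illustrative only)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            total = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    # Clamp neighbor coordinates so border pixels reuse edge values.
                    rr = min(max(r + dr, 0), h - 1)
                    cc = min(max(c + dc, 0), w - 1)
                    total += img[rr][cc]
            out[r][c] = total / 9.0
    return out


def unsharp_mask(img, amount=1.0):
    """Sharpen by adding back the detail removed by blurring:
    sharpened = original + amount * (original - blurred)."""
    blurred = box_blur(img)
    return [
        [p + amount * (p - b) for p, b in zip(row, blurred_row)]
        for row, blurred_row in zip(img, blurred)
    ]


# Hypothetical example: a vertical step edge from 0 to 100.
step = [[0.0, 0.0, 100.0, 100.0] for _ in range(3)]
sharpened = unsharp_mask(step)
```

In this sketch, flat regions are left unchanged while values on either side of an edge overshoot, which is what makes boundaries appear crisper.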

ReXrank: A Public Leaderboard for AI-Powered Radiology Report Generation

Nov 22, 2024

Abstract:AI-driven models have demonstrated significant potential in automating radiology report generation for chest X-rays. However, there is no standardized benchmark for objectively evaluating their performance. To address this, we present ReXrank, https://rexrank.ai, a public leaderboard and challenge for assessing AI-powered radiology report generation. Our framework incorporates ReXGradient, the largest test dataset consisting of 10,000 studies, and three public datasets (MIMIC-CXR, IU-Xray, CheXpert Plus) for report generation assessment. ReXrank employs 8 evaluation metrics and separately assesses models capable of generating only findings sections and those providing both findings and impressions sections. By providing this standardized evaluation framework, ReXrank enables meaningful comparisons of model performance and offers crucial insights into their robustness across diverse clinical settings. Beyond its current focus on chest X-rays, ReXrank's framework sets the stage for comprehensive evaluation of automated reporting across the full spectrum of medical imaging.

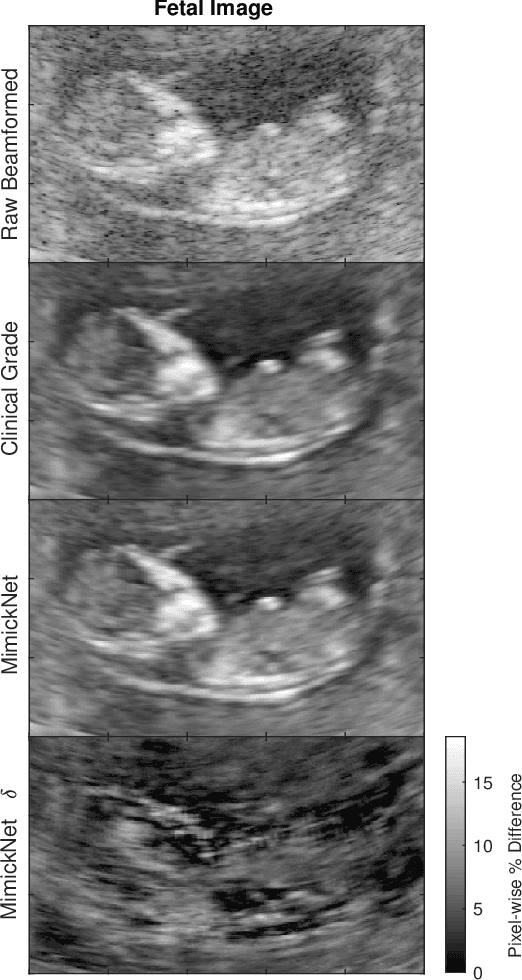

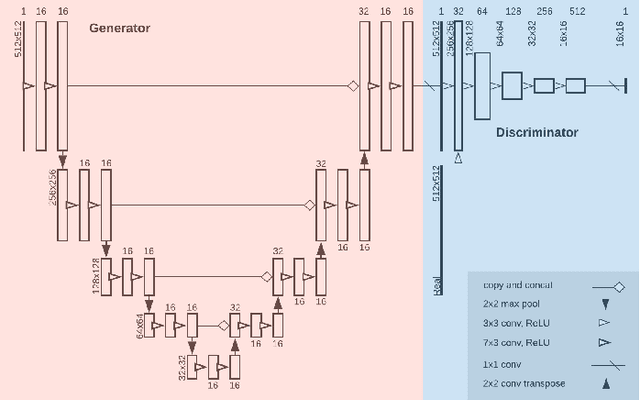

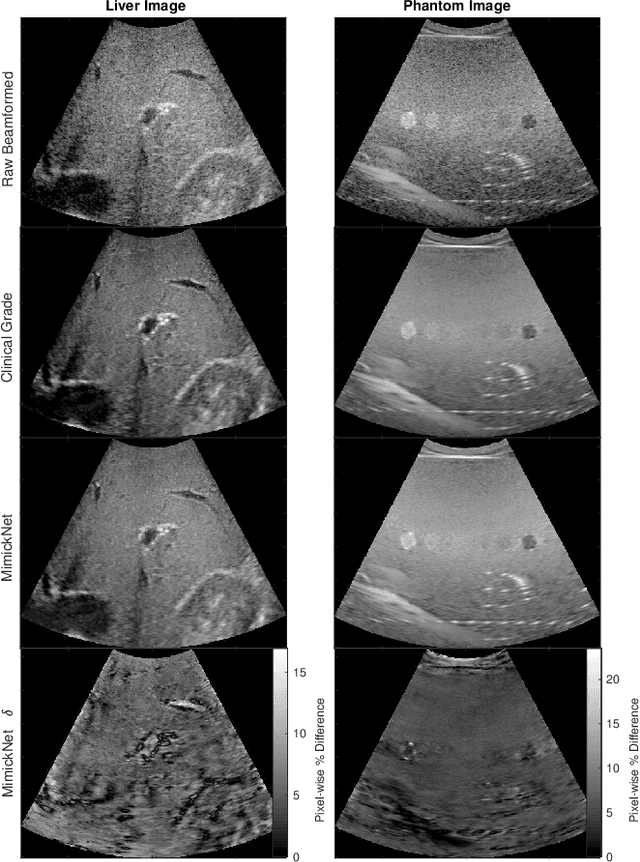

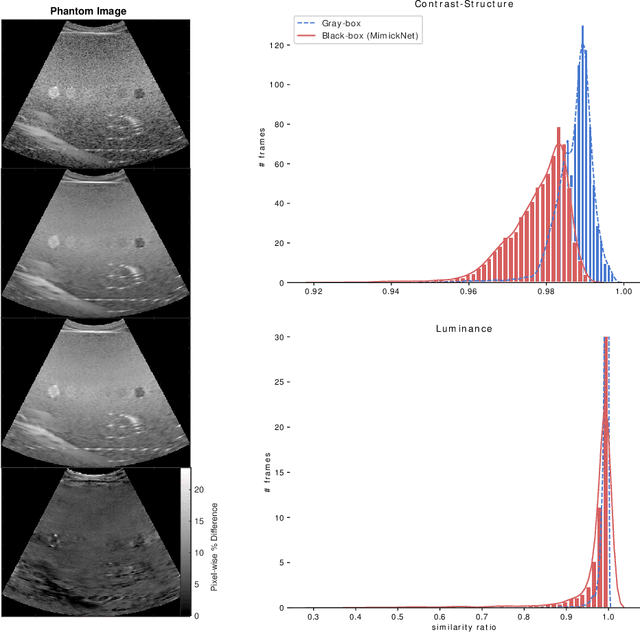

MimickNet, Matching Clinical Post-Processing Under Realistic Black-Box Constraints

Aug 15, 2019

Abstract:Image post-processing is used in clinical-grade ultrasound scanners to improve image quality (e.g., reduce speckle noise and enhance contrast). These post-processing techniques vary across manufacturers and are generally kept proprietary, which presents a challenge for researchers looking to match current clinical-grade workflows. We introduce a deep learning framework, MimickNet, that transforms raw conventional delay-and-summed (DAS) beams into the approximate post-processed images found on clinical-grade scanners. Training MimickNet only requires post-processed image samples from a scanner of interest without the need for explicit pairing to raw DAS data. This flexibility allows it to hypothetically approximate any manufacturer's post-processing without access to the pre-processed data. MimickNet generates images with an average structural similarity index measure (SSIM) of 0.930$\pm$0.0892 on a 300 cineloop test set, and it generalizes to cardiac cineloops outside of our train-test distribution, achieving an SSIM of 0.967$\pm$0.002. We also explore the theoretical SSIM achievable by evaluating MimickNet performance when trained under gray-box constraints (i.e., when both pre-processed and post-processed images are available). To our knowledge, this is the first work to establish deep learning models that closely approximate current clinical-grade ultrasound post-processing under realistic black-box constraints, where paired data from before and after post-processing are unavailable. MimickNet serves as a clinical post-processing baseline for future works in ultrasound image formation to compare against. To this end, we have made the MimickNet software open source.
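As a rough illustration of the SSIM metric reported above, here is a minimal sketch that computes SSIM over a single global window. It is a simplification and not the evaluation code used for MimickNet: standard SSIM averages the same statistic over local sliding windows, and the function name `global_ssim`, the flat-list input format, and the default `data_range` of 255 are assumptions. The constants `k1=0.01` and `k2=0.03` are the conventional defaults.

```python
def global_ssim(x, y, data_range=255.0, k1=0.01, k2=0.03):
    """Single-window SSIM for two equally sized flat pixel sequences.

    Simplified sketch: real SSIM is usually the mean of this quantity
    computed over local windows rather than over the whole image.
    """
    if len(x) != len(y) or len(x) == 0:
        raise ValueError("inputs must be non-empty and the same length")
    n = len(x)
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    # Small constants stabilize the ratio when means or variances are near zero.
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


# Hypothetical pixel values for illustration only.
reference = [0.0, 50.0, 100.0, 150.0, 200.0, 250.0]
inverted = list(reversed(reference))
same_score = global_ssim(reference, reference)
inverted_score = global_ssim(reference, inverted)
```

Identical inputs score 1 (up to floating-point rounding), and the score drops as luminance, contrast, or structure diverge, which is why values such as 0.930 and 0.967 indicate close agreement with the clinical post-processed images.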
