Orion Campbell

Prioritized Kinematic Control of Joint-Constrained Head-Eye Robots using the Intermediate Value Approach

Sep 24, 2018

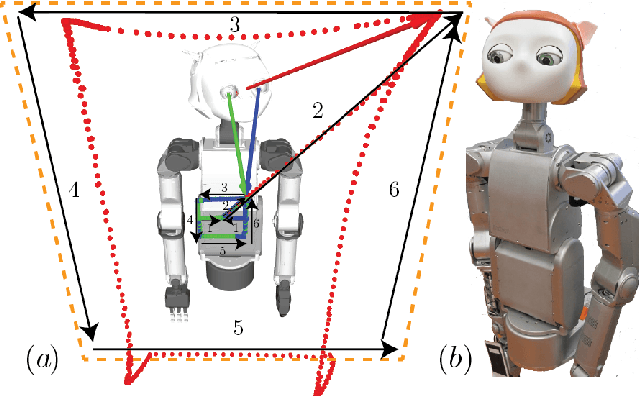

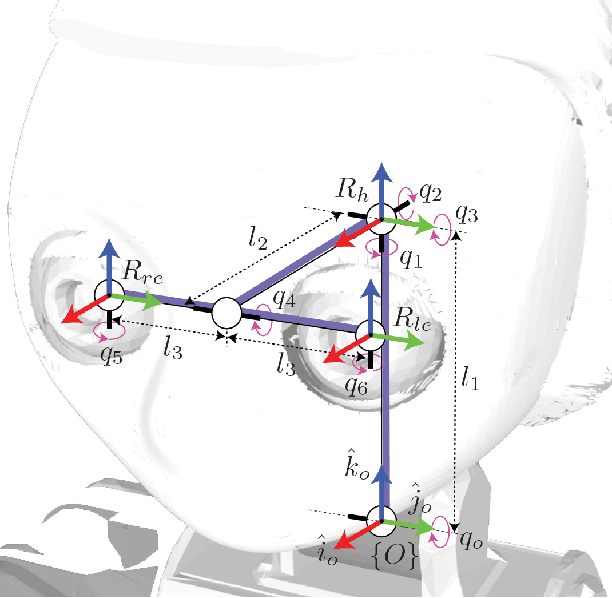

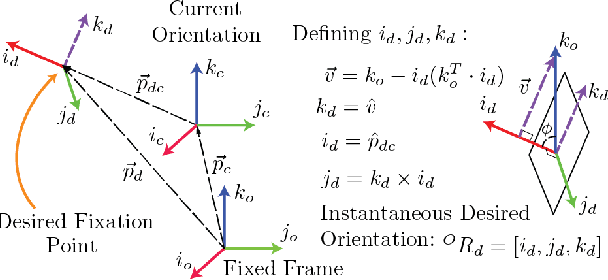

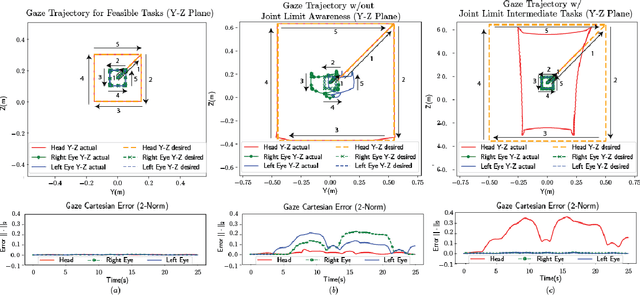

Abstract: Existing gaze controllers for head-eye robots can only handle single fixation points. Here, we present a generic controller for head-eye robots that can execute simultaneous, prioritized fixation trajectories in Cartesian space. This enables the specification of multiple operational-space behaviors with priorities, such that the execution of a low-priority head orientation task does not disturb the satisfaction of a higher-priority eye gaze task. Through our approach, the head-eye robot inherently gains the biomimetic vestibulo-ocular reflex (VOR), which is the ability to stabilize gaze during self-generated movements. The described controller uses recursive null-space projections to encode joint limit constraints and task priorities. To handle the solution discontinuity that occurs when joint limit tasks are inserted or removed as constraints, the Intermediate Desired Value (IDV) approach is applied. Experimental validation of the controller's properties is demonstrated on the Dreamer humanoid robot. Our contributions are (1) the formulation of a desired gaze task as an operational-space orientation task, (2) the application details of the IDV approach for the prioritized head-eye robot controller that can handle intermediate joint constraints, and (3) a minimum-jerk specification for behavior and trajectory generation in Cartesian space.
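
Item (3) above refers to a minimum-jerk specification. As a rough illustration only, and not the authors' implementation, the standard rest-to-rest minimum-jerk profile can be evaluated per Cartesian coordinate as below. The function name `minimum_jerk` is hypothetical, and interpolating an orientation (as a gaze task would need) would require a method on SO(3) that this sketch does not cover.

```python
def minimum_jerk(start, goal, duration, t):
    """Point on a rest-to-rest minimum-jerk path from start to goal.

    start, goal: sequences of Cartesian coordinates of equal length.
    duration: total motion time T > 0; t: query time.
    """
    # Normalized time, clamped so the path holds at its endpoints.
    s = min(max(t / duration, 0.0), 1.0)
    # Quintic blend 10s^3 - 15s^4 + 6s^5 has zero velocity and
    # zero acceleration at s = 0 and s = 1.
    blend = 10 * s**3 - 15 * s**4 + 6 * s**5
    return [a + (b - a) * blend for a, b in zip(start, goal)]


# Usage: halfway through a 2 s motion, the point sits at the midpoint.
print(minimum_jerk([0.0, 0.0, 1.0], [0.2, 0.1, 1.0], 2.0, 1.0))
```

The quintic is the closed-form minimizer of integrated squared jerk for a rest-to-rest move, which is why no numerical optimization is needed per query.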

Exploiting the Natural Dynamics of Series Elastic Robots by Actuator-Centered Sequential Linear Programming

Jul 17, 2018

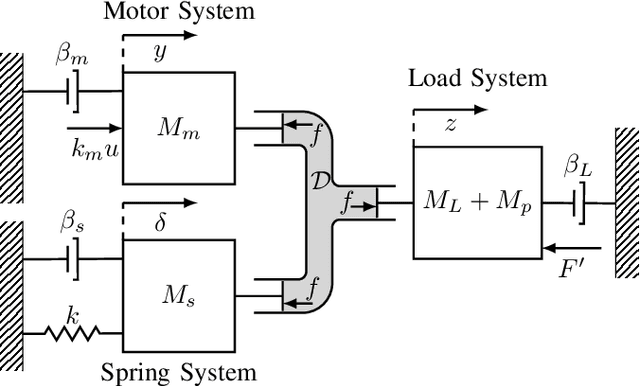

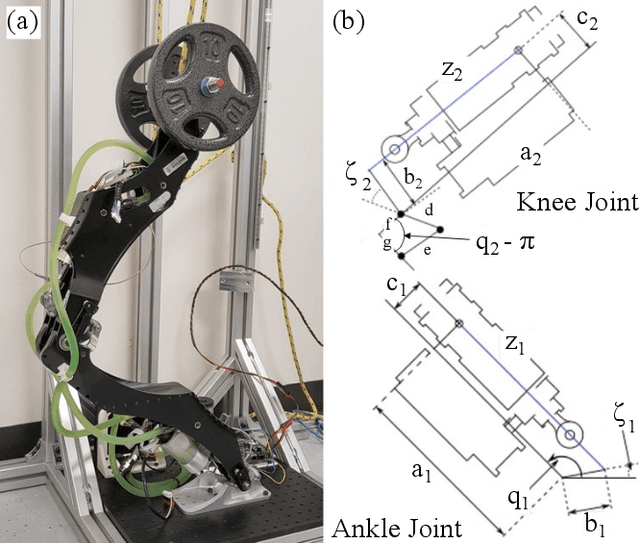

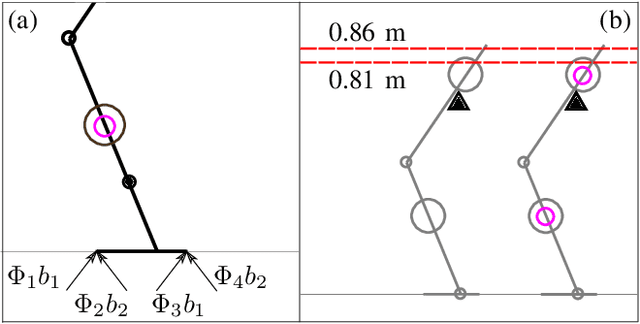

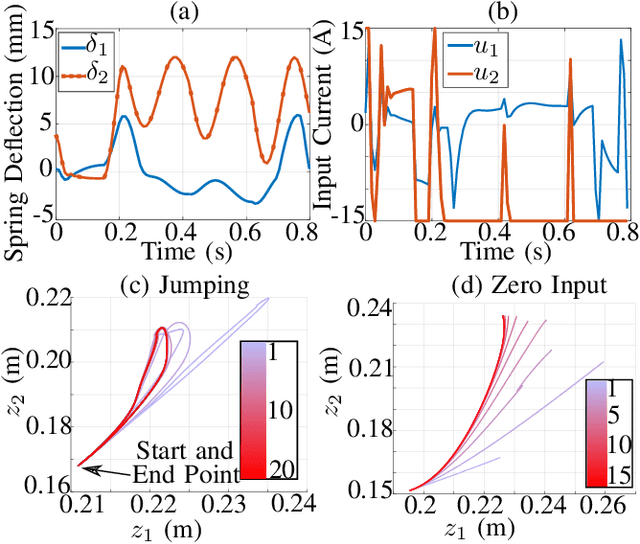

Abstract: Series elastic robots are best able to follow trajectories that obey the limitations of their actuators, since they cannot instantly change their joint forces. In fact, the performance of series elastic actuators can surpass that of ideal force-source actuators by storing and releasing energy. In this paper, we formulate the trajectory optimization problem for series elastic robots in a novel way based on sequential linear programming. Our framework is unique in separating the actuator dynamics from the rest of the dynamics and in using a tunable pseudo-mass parameter that improves the discretization accuracy of our approach. The actuator dynamics are truly linear, which allows them to be excluded from the trust-region mechanics. As a result, our algorithm has similar run times with and without the actuator dynamics. We demonstrate our optimization algorithm by tuning high-performance behaviors for a single-leg robot in simulation and for a single-degree-of-freedom actuator testbed on hardware. The results show that compliance allows for faster motions while requiring a similar amount of computation time.

Fast Kinodynamic Bipedal Locomotion Planning with Moving Obstacles

Jul 09, 2018

Abstract: We present a sampling-based kinodynamic planning framework for a bipedal robot in complex environments. Unlike other footstep planners, which typically plan footstep locations and the biped dynamics in separate steps, we handle both simultaneously. Three advantages of this approach are (1) the ability to differentiate alternate routes while selecting footstep locations based on the temporal duration of the route, as determined by the Linear Inverted Pendulum Model dynamics, (2) the ability to perform collision checking through time, so that collisions with moving obstacles are prevented without avoiding their entire trajectory, and (3) the ability to specify a minimum forward velocity for the biped. To generate a dynamically consistent description of the walking behavior, we exploit the Phase Space Planner (PSP). To plan a collision-free route toward the goal, we adapt planning strategies from non-holonomic wheeled robots to gather a sequence of inputs for the PSP. This allows us to efficiently approximate dynamic and kinematic constraints on bipedal motion, to apply sampling-based planning algorithms, and to use the Dubins path as the steering method to connect two points in the configuration space. The results of the algorithm are sent to a Whole Body Controller to generate full-body dynamic walking behavior.
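
The abstract relies on Linear Inverted Pendulum Model dynamics to determine how long each step takes. As a hedged sketch of the standard textbook relations (not the paper's Phase Space Planner code), the sagittal center-of-mass (CoM) motion over a fixed foot at constant height has the closed form below, and its orbital energy is conserved along that motion. The function names and the 9.81 m/s² gravity constant are our assumptions.

```python
import math

GRAVITY = 9.81  # m/s^2, assumed constant


def lipm_state(x0, v0, foot, height, t):
    """CoM position and velocity after time t over a fixed foot.

    Solves x'' = (g / height) * (x - foot) in closed form.
    """
    omega = math.sqrt(GRAVITY / height)
    dx = x0 - foot
    x = foot + dx * math.cosh(omega * t) + (v0 / omega) * math.sinh(omega * t)
    v = dx * omega * math.sinh(omega * t) + v0 * math.cosh(omega * t)
    return x, v


def orbital_energy(x, v, foot, height):
    """Quantity conserved by the LIPM while the foot stays fixed."""
    omega_sq = GRAVITY / height
    return 0.5 * v**2 - 0.5 * omega_sq * (x - foot) ** 2


# Usage: CoM 0.1 m behind the foot, moving forward at 0.5 m/s, 1 m high.
x, v = lipm_state(-0.1, 0.5, 0.0, 1.0, 0.4)
```

A positive orbital energy here means the CoM has enough speed to pass over the foot; phase-space planners typically use such conserved quantities to pick step timing.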

Computationally-Robust and Efficient Prioritized Whole-Body Controller with Contact Constraints

Jul 03, 2018

Abstract: In this paper, we devise methods for the multi-objective control of humanoid robots, a.k.a. prioritized whole-body controllers, that achieve efficiency and robustness in the algorithmic computations. We use a very general form of whole-body controller that incorporates centroidal momentum dynamics, operational task priorities, contact reaction forces, and internal force constraints. First, we achieve efficiency by solving a quadratic program that involves only the floating-base dynamics and the reaction forces. Second, we achieve computational robustness by relaxing task accelerations so that they comply with friction cone constraints. Finally, we incorporate methods for smooth contact transitions to enhance the control of dynamic locomotion behaviors. The proposed methods are demonstrated both in simulation and in real experiments using a passive-ankle bipedal robot.
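
Friction cone constraints like those mentioned above are commonly linearized so that they fit in a quadratic program. A minimal sketch under that assumption (a four-sided pyramid inscribed in the Coulomb cone, which is not necessarily the paper's formulation) builds the inequality rows of A f ≤ 0 for one contact force f = (fx, fy, fz) expressed in the contact frame, with z along the surface normal. The function names are hypothetical.

```python
import math


def friction_pyramid_rows(mu, inscribed=True):
    """Rows of A in the linear inequality A @ [fx, fy, fz] <= 0.

    With inscribed=True the pyramid lies inside the true cone
    sqrt(fx^2 + fy^2) <= mu * fz, so any accepted force is feasible.
    """
    m = mu / math.sqrt(2.0) if inscribed else mu
    return [
        [1.0, 0.0, -m],   #  fx <= m * fz
        [-1.0, 0.0, -m],  # -fx <= m * fz
        [0.0, 1.0, -m],   #  fy <= m * fz
        [0.0, -1.0, -m],  # -fy <= m * fz
        [0.0, 0.0, -1.0],  # unilateral contact: fz >= 0
    ]


def satisfies_friction_pyramid(force, mu, inscribed=True, tol=1e-9):
    """Check a contact force against the linearized friction cone."""
    rows = friction_pyramid_rows(mu, inscribed)
    return all(sum(a * f for a, f in zip(row, force)) <= tol for row in rows)


# Usage: a mostly normal force passes; a large tangential one does not.
print(satisfies_friction_pyramid([0.1, 0.1, 1.0], mu=0.5))
print(satisfies_friction_pyramid([0.6, 0.0, 1.0], mu=0.5))
```

In a QP these rows would be stacked per contact as linear inequality constraints on the reaction-force variables.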

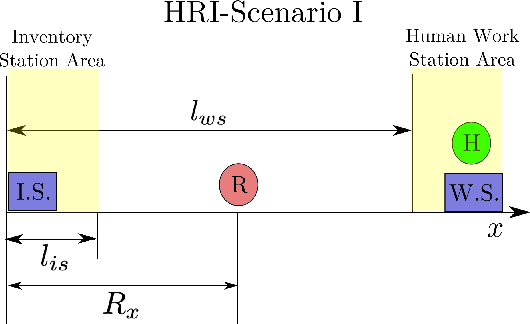

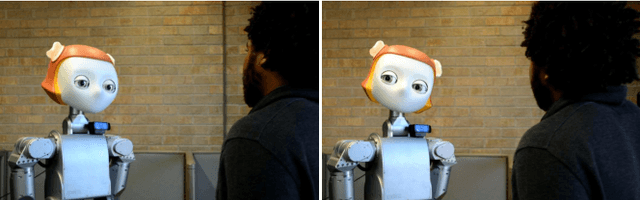

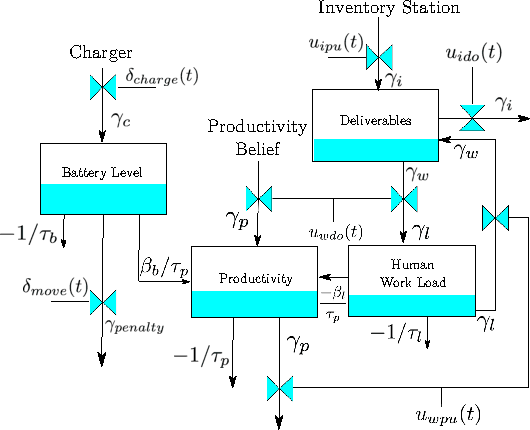

Exploring Model Predictive Control to Generate Optimal Control Policies for HRI Dynamical Systems

Jan 13, 2017

Abstract: We model Human-Robot Interaction (HRI) scenarios as linear dynamical systems and use Model Predictive Control (MPC) with mixed-integer constraints to generate human-aware control policies. We motivate the approach with two scenarios. The first involves an assistive robot that aims to maximize productivity while minimizing the human's workload; the second involves a listening humanoid robot that manages its eye contact behavior to maximize "connection" and minimize social "awkwardness" with the human during the interaction. Our simulation results show that the robot generates useful behaviors as it finds control policies that minimize the specified cost function. Further, we implement the second scenario on a humanoid robot and test it with 48 human participants to demonstrate and evaluate the desired controller behavior. The humanoid generated 25% more eye contact when it was told to maximize connection than when it was told to maximize awkwardness. However, despite showing the desired behavior, there was no statistically significant difference in the participants' perceived connection with the humanoid between the two conditions.
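
To make the receding-horizon idea concrete, here is a toy sketch that is not the paper's model: a hypothetical scalar linear system x[k+1] = a x[k] + b u[k] with a binary input u[k] (for example, eye contact off or on), where exhaustive enumeration over the horizon stands in for a mixed-integer solver. Only the first input of the best sequence is applied before re-planning. The names, dynamics, and cost weights are all assumptions.

```python
from itertools import product


def mpc_binary_step(x0, a, b, target, horizon, input_cost):
    """Return the first input of the cheapest binary input sequence.

    Enumerates all 2**horizon sequences, so it is only practical for
    short horizons; a mixed-integer solver would replace this loop.
    """
    best_cost, best_first = float("inf"), 0
    for seq in product((0, 1), repeat=horizon):
        x, cost = x0, 0.0
        for u in seq:
            x = a * x + b * u  # linear dynamics x[k+1] = a x[k] + b u[k]
            cost += (x - target) ** 2 + input_cost * u
        if cost < best_cost:
            best_cost, best_first = cost, seq[0]
    return best_first


def run_closed_loop(x0, steps, **params):
    """Receding horizon: apply only the first input, then re-plan."""
    x, inputs = x0, []
    for _ in range(steps):
        u = mpc_binary_step(x, **params)
        inputs.append(u)
        x = params["a"] * x + params["b"] * u
    return x, inputs


# Usage: drive the hypothetical state from 0 toward a target of 1.
x_final, inputs = run_closed_loop(
    0.0, steps=10, a=0.9, b=0.2, target=1.0, horizon=4, input_cost=0.01
)
```

The cost here is a quadratic tracking term plus a small penalty on using the input; trading such terms off against each other is what shapes the resulting policy.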
