Travis Llado

Prioritized Kinematic Control of Joint-Constrained Head-Eye Robots using the Intermediate Value Approach

Sep 24, 2018

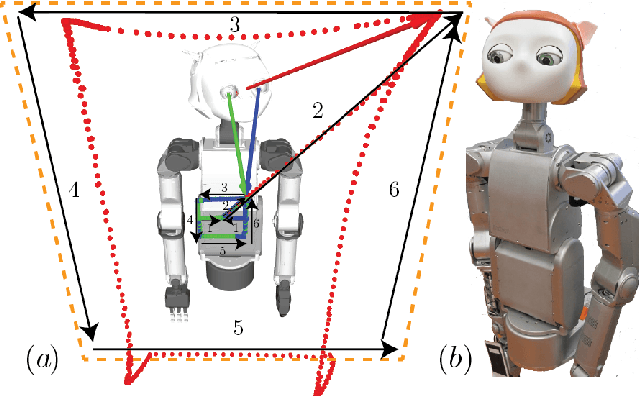

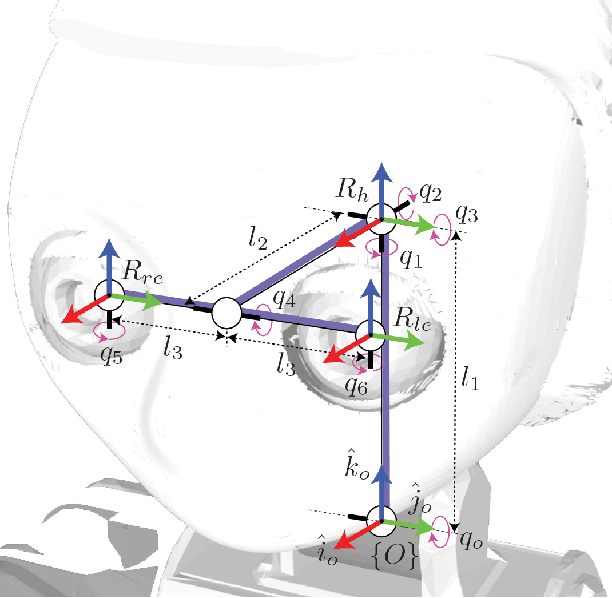

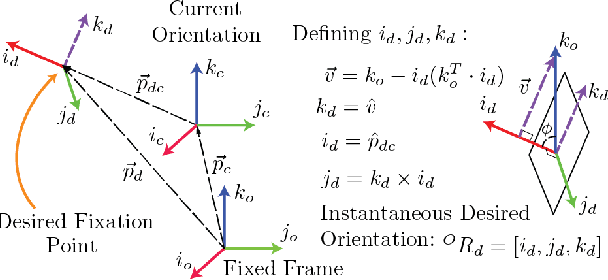

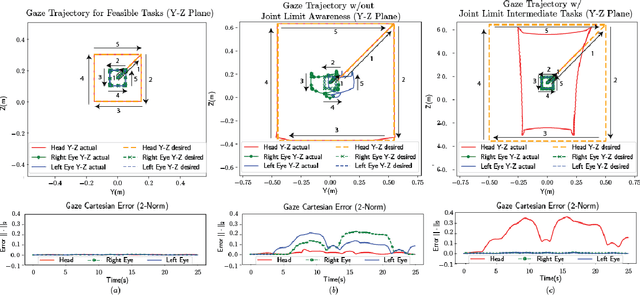

Abstract: Existing gaze controllers for head-eye robots can only handle single fixation points. Here, we present a generic controller for head-eye robots that can execute simultaneous, prioritized fixation trajectories in Cartesian space. This lets multiple operational-space behaviors be specified with priorities, so that executing a lower-priority head orientation task does not disturb a higher-priority eye gaze task. Through our approach, the head-eye robot inherently gains the biomimetic vestibulo-ocular reflex (VOR), which is the ability to stabilize gaze during self-generated movements. The controller uses recursive null-space projections to encode joint limit constraints and task priorities. To handle the solution discontinuity that occurs when joint limit tasks are inserted or removed as constraints, the Intermediate Desired Value (IDV) approach is applied. We validate the controller's properties experimentally on the Dreamer humanoid robot. Our contributions are (1) the formulation of a desired gaze task as an operational-space orientation task, (2) the application details of the IDV approach for a prioritized head-eye robot controller that can handle intermediate joint constraints, and (3) a minimum-jerk specification for behavior and trajectory generation in Cartesian space.
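
To make the priority mechanism concrete, here is a minimal sketch (not the paper's controller) of two-level prioritized velocity resolution with a null-space projection. It assumes a hypothetical 3-joint chain and two scalar tasks whose Jacobians are 1x3 rows, so each pseudo-inverse reduces to j^T / (j j^T). Joint-limit tasks and the IDV blending described above are deliberately left out, and all function names are illustrative.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def row_pinv(j, eps=1e-9):
    """Pseudo-inverse of a 1xn row Jacobian, returned as the n-vector j^T / (j j^T)."""
    n2 = dot(j, j)
    if n2 < eps:  # singular row: return zero instead of dividing by ~0
        return [0.0] * len(j)
    return [x / n2 for x in j]


def null_space_projector(j):
    """N = I - j^+ j (n x n): projects onto joint motions that leave task j unchanged."""
    jp = row_pinv(j)
    n = len(j)
    return [[(1.0 if r == c else 0.0) - jp[r] * j[c] for c in range(n)]
            for r in range(n)]


def row_times_matrix(j, m):
    """Row vector j (1xn) times matrix m (nxn)."""
    n = len(j)
    return [sum(j[k] * m[k][c] for k in range(n)) for c in range(n)]


def prioritized_velocities(j1, xdot1, j2, xdot2):
    """Joint velocities that meet task 1 and pursue task 2 only inside N1."""
    qdot = [x * xdot1 for x in row_pinv(j1)]               # minimum-norm task-1 solution
    j2n = row_times_matrix(j2, null_space_projector(j1))   # J2 N1
    residual = xdot2 - dot(j2, qdot)                        # what task 2 still needs
    # (J2 N1)^+ lies in the range of the symmetric projector N1,
    # so this correction does not disturb task 1.
    correction = [x * residual for x in row_pinv(j2n)]
    return [a + b for a, b in zip(qdot, correction)]


if __name__ == "__main__":
    # Hypothetical Jacobians: task 1 stands in for a gaze coordinate,
    # task 2 for a head-orientation coordinate.
    print(prioritized_velocities([1.0, 1.0, 0.0], 1.0, [1.0, 0.0, 1.0], 2.0))
    print(prioritized_velocities([1.0, 1.0, 0.0], 1.0, [1.0, 1.0, 0.0], 3.0))
```

In the first call the two tasks are compatible and both are met. In the second, task 2 asks for a velocity that conflicts with task 1, its Jacobian vanishes inside N1, and only the higher-priority task is satisfied. When J2 N1 is small but nonzero the plain pseudo-inverse grows large; damped least squares is a common remedy.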

Exploring Model Predictive Control to Generate Optimal Control Policies for HRI Dynamical Systems

Jan 13, 2017

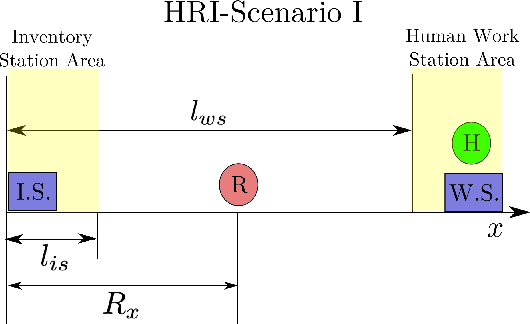

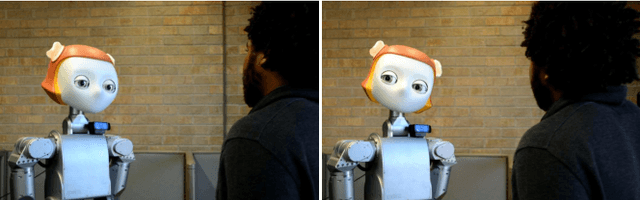

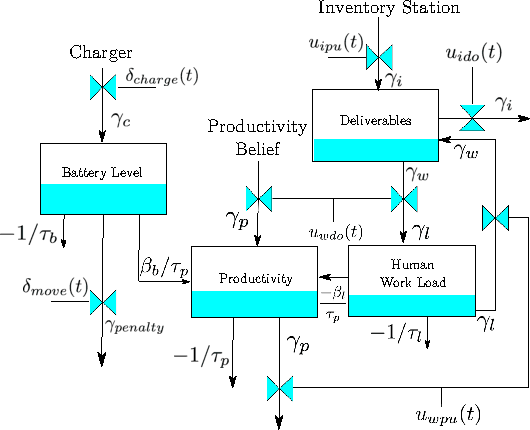

Abstract: We model Human-Robot Interaction (HRI) scenarios as linear dynamical systems and use Model Predictive Control (MPC) with mixed-integer constraints to generate human-aware control policies. We motivate the approach with two scenarios. In the first, an assistive robot aims to maximize productivity while minimizing the human's workload; in the second, a listening humanoid robot manages its eye contact behavior to maximize "connection" and minimize social "awkwardness" with the human during the interaction. Our simulation results show that the robot generates useful behaviors as it finds control policies that minimize the specified cost function. We further implement the second scenario on a humanoid robot and test it with 48 human participants to demonstrate and evaluate the desired controller behavior. The humanoid made 25% more eye contact when instructed to maximize connection than when instructed to maximize awkwardness. However, despite showing the desired behavior, there was no statistically significant difference in participants' perceived connection with the humanoid between the two conditions.
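
As a hedged illustration of receding-horizon control with integer-valued inputs (not the paper's HRI models, which the abstract does not specify), the sketch below uses a hypothetical scalar linear system x[k+1] = a x[k] + b u[k] with a binary input u[k] in {0, 1}, which could stand for "look at the person" versus "look away". At each step it finds the best input sequence over a short horizon by exhaustive enumeration and applies only the first input. In practice one would typically hand this problem to a mixed-integer solver; enumeration grows as 2^N and is only workable for short horizons.

```python
from itertools import product


def mpc_binary_step(x0, a, b, ref, horizon):
    """Solve one finite-horizon problem by enumerating every binary input sequence.

    Dynamics: x[k+1] = a * x[k] + b * u[k], with u[k] in {0, 1}.
    Cost: sum of squared tracking errors (x[k+1] - ref)^2 over the horizon.
    Returns (first input of the best sequence, its cost).
    """
    best_cost, best_seq = float("inf"), None
    for seq in product((0, 1), repeat=horizon):
        x, cost = x0, 0.0
        for u in seq:
            x = a * x + b * u
            cost += (x - ref) ** 2
        if cost < best_cost:  # strict '<' keeps the first optimum found on ties
            best_cost, best_seq = cost, seq
    return best_seq[0], best_cost


def run_closed_loop(x0, a, b, ref, horizon, steps):
    """Receding horizon: re-plan at every step and apply only the first input."""
    xs, us = [x0], []
    x = x0
    for _ in range(steps):
        u, _ = mpc_binary_step(x, a, b, ref, horizon)
        x = a * x + b * u
        us.append(u)
        xs.append(x)
    return xs, us


if __name__ == "__main__":
    xs, us = run_closed_loop(x0=0.0, a=0.8, b=1.0, ref=2.5, horizon=4, steps=20)
    print("inputs:", us)
    print("states:", [round(x, 2) for x in xs])
```

With a = 0.8, b = 1, and a reference of 2.5, neither constant input works (u = 1 drives x toward 5, u = 0 toward 0), so the controller has to switch between the two discrete actions to hold the state near the target.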

Full-Body Collision Detection and Reaction with Omnidirectional Mobile Platforms: A Step Towards Safe Human-Robot Interaction

Jan 20, 2015

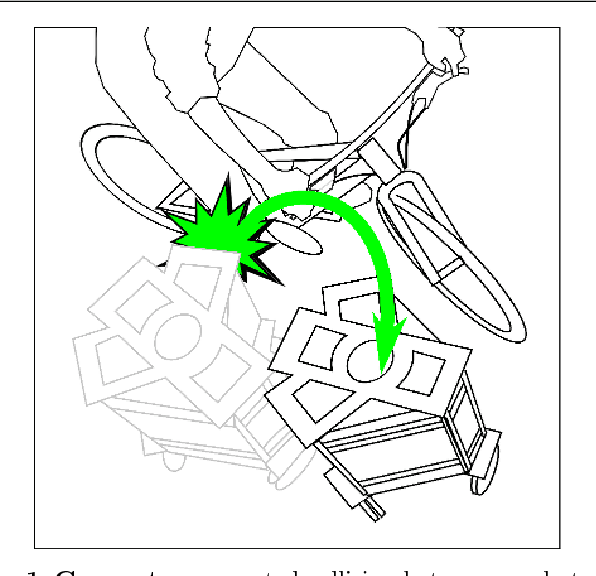

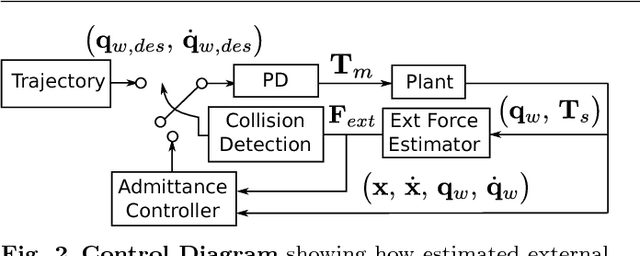

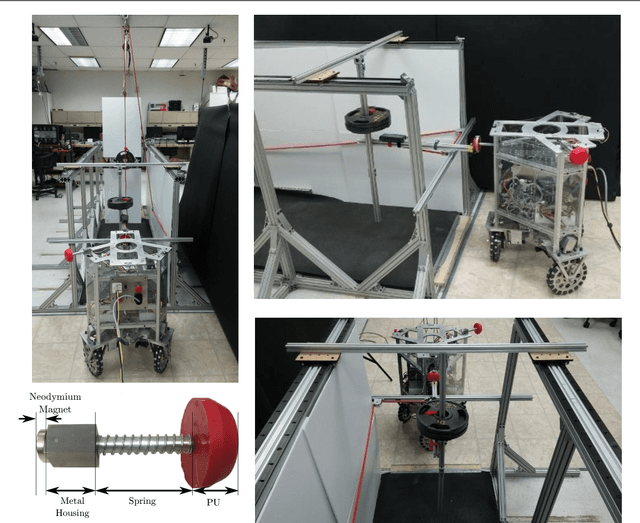

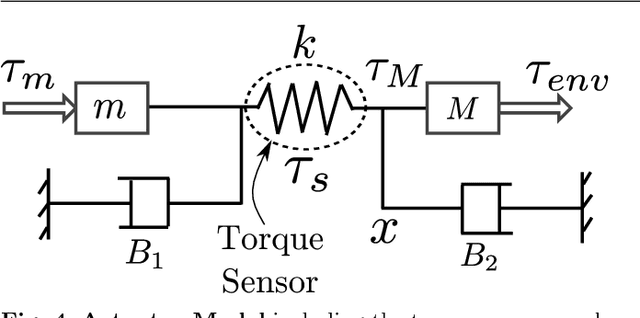

Abstract: In this paper, we develop estimation and control methods for reacting quickly to collisions between omnidirectional mobile platforms and their environment. To enable full-body detection of external forces, we use torque sensors located in the robot's drivetrain. Using model-based techniques, we estimate the location, direction, and magnitude of collision forces with good precision, and we develop an admittance controller that achieves a low effective mass in reaction to them. For experimental testing, we use a facility containing a calibrated collision dummy and our holonomic mobile platform. We then explore two cases: the dummy colliding against a stationary base, and the base colliding against a stationary dummy. Overall, we achieve fast reaction times and reduced impact forces. A proof-of-concept experiment shows various parts of the mobile platform, including the wheels, colliding safely with humans.
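
A minimal one-dimensional sketch of the admittance idea, under stated assumptions: the external force (given directly here) drives a virtual mass-damper M_d dv/dt + D_d v = F_ext, and the resulting velocity would be commanded to the base. The parameter values, the force pulse, the 1 kHz rate, and the explicit-Euler integration are hypothetical choices for illustration; the paper's force estimation from drivetrain torque sensors and its multi-axis holonomic model are not reproduced.

```python
def simulate_admittance(forces, m_d, d_d, dt):
    """Integrate M_d * dv/dt + D_d * v = F_ext with explicit Euler.

    forces: external force samples in N (hypothetical input standing in for
    the estimated collision force). Returns commanded velocities in m/s.
    """
    v = 0.0
    velocities = []
    for f in forces:
        accel = (f - d_d * v) / m_d  # virtual mass-damper dynamics
        v += accel * dt
        velocities.append(v)
    return velocities


if __name__ == "__main__":
    dt = 0.001                  # assumed 1 kHz control loop
    pulse = [50.0] * 100        # hypothetical 50 N push lasting 0.1 s
    light = simulate_admittance(pulse, m_d=5.0, d_d=20.0, dt=dt)
    heavy = simulate_admittance(pulse, m_d=50.0, d_d=20.0, dt=dt)
    print(f"after 0.1 s: light {light[-1]:.3f} m/s, heavy {heavy[-1]:.3f} m/s")
```

Comparing a 5 kg and a 50 kg virtual mass under the same pulse shows why a low effective mass matters: the lighter setting yields to the push much faster, which is the property the abstract relies on to reduce impact forces.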
