Omid Ardakanian

CLOAK: Contrastive Guidance for Latent Diffusion-Based Data Obfuscation

Dec 12, 2025

Abstract:Data obfuscation is a promising technique for mitigating attribute inference attacks by semi-trusted parties with access to time-series data emitted by sensors. Recent advances leverage conditional generative models together with adversarial training or mutual information-based regularization to balance data privacy and utility. However, these methods often require modifying the downstream task, struggle to achieve a satisfactory privacy-utility trade-off, or are computationally intensive, making them impractical for deployment on resource-constrained mobile IoT devices. We propose Cloak, a novel data obfuscation framework based on latent diffusion models. In contrast to prior work, we employ contrastive learning to extract disentangled representations, which guide the latent diffusion process to retain useful information while concealing private information. This approach enables users with diverse privacy needs to navigate the privacy-utility trade-off with minimal retraining. Extensive experiments on four public time-series datasets, spanning multiple sensing modalities, and a dataset of facial images demonstrate that Cloak consistently outperforms state-of-the-art obfuscation techniques and is well-suited for deployment in resource-constrained settings.

Forecasting Multivariate Urban Data via Decomposition and Spatio-Temporal Graph Analysis

May 28, 2025

Abstract:The forecasting of multivariate urban data presents a complex challenge due to the intricate dependencies between various urban metrics such as weather, air pollution, carbon intensity, and energy demand. This paper introduces a novel multivariate time-series forecasting model that utilizes advanced Graph Neural Networks (GNNs) to capture spatial dependencies among different time-series variables. The proposed model incorporates a decomposition-based preprocessing step, isolating trend, seasonal, and residual components to enhance the accuracy and interpretability of forecasts. By leveraging the dynamic capabilities of GNNs, the model effectively captures interdependencies and improves the forecasting performance. Extensive experiments on real-world datasets, including electricity usage, weather metrics, carbon intensity, and air pollution data, demonstrate the effectiveness of the proposed approach across various forecasting scenarios. The results highlight the potential of the model to optimize smart infrastructure systems, contributing to energy-efficient urban development and enhanced public well-being.
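
The decomposition step mentioned in this abstract can be illustrated with a classical additive decomposition. The sketch below is not the paper's preprocessing pipeline; it assumes a single series with a known, fixed seasonal period, and the function name `decompose_additive` and the synthetic data are hypothetical.

```python
import numpy as np

def decompose_additive(series, period):
    """Split a 1-D series into trend, seasonal, and residual parts (additive model)."""
    x = np.asarray(series, dtype=float)
    n = x.size
    # Centered moving-average kernel spanning one full period.
    # For an even period, the two end points get half weight.
    if period % 2 == 1:
        kernel = np.full(period, 1.0 / period)
    else:
        kernel = np.full(period + 1, 1.0 / period)
        kernel[0] = kernel[-1] = 0.5 / period
    half = kernel.size // 2
    # Edge-pad so the smoothed trend has the same length as the input.
    padded = np.pad(x, half, mode="edge")
    trend = np.convolve(padded, kernel, mode="valid")
    # Seasonal component: mean detrended value at each phase, centered to sum to zero.
    detrended = x - trend
    phase_means = np.array([detrended[i::period].mean() for i in range(period)])
    phase_means -= phase_means.mean()
    seasonal = np.tile(phase_means, n // period + 1)[:n]
    # Whatever is left after removing trend and seasonality.
    residual = x - trend - seasonal
    return trend, seasonal, residual

# Synthetic example (assumed data): linear trend plus a daily cycle sampled hourly.
t = np.arange(24 * 14)
demand = 0.05 * t + 3.0 * np.sin(2 * np.pi * t / 24)
trend, seasonal, residual = decompose_additive(demand, period=24)
```

Each component can then be modeled separately, which is the general motivation for decomposition-based preprocessing; how the paper combines the components with the GNN is not specified in the abstract.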

Knowing When to Stop Matters: A Unified Algorithm for Online Conversion under Horizon Uncertainty

Feb 06, 2025

Abstract:This paper investigates the online conversion problem, which involves sequentially trading a divisible resource (e.g., energy) under dynamically changing prices to maximize profit. A key challenge in online conversion is managing decisions under horizon uncertainty, where the duration of trading is either known, revealed partway, or entirely unknown. We propose a unified algorithm that achieves optimal competitive guarantees across these horizon models, accounting for practical constraints such as box constraints, which limit the maximum allowable trade per step. Additionally, we extend the algorithm to a learning-augmented version, leveraging horizon predictions to adaptively balance performance: achieving near-optimal results when predictions are accurate while maintaining strong guarantees when predictions are unreliable. These results advance the understanding of online conversion under various degrees of horizon uncertainty and provide more practical strategies to address real-world constraints.
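
To make the problem setting concrete, the sketch below shows a classic fixed reservation-price baseline for online selling with a per-step box constraint. It is not the unified algorithm proposed in the paper. It assumes prices are known to lie in `[low, high]`, and it deliberately leaves open what happens to unsold resource when trading ends, which is the horizon question the paper studies. The function name and parameters are hypothetical.

```python
import math

def reservation_price_trade(prices, low, high, capacity=1.0, max_per_step=1.0):
    """Sell a divisible resource whenever the price reaches sqrt(low * high).

    Assumes every price lies in [low, high]. At most `max_per_step` units are
    sold per step (the box constraint). Returns (revenue, remaining, trades).
    """
    threshold = math.sqrt(low * high)  # classic reservation price for online search
    remaining = capacity
    revenue = 0.0
    trades = []
    for price in prices:
        if price >= threshold and remaining > 0.0:
            # Box constraint: never sell more than max_per_step in one step.
            amount = min(max_per_step, remaining)
        else:
            amount = 0.0
        revenue += amount * price
        remaining -= amount
        trades.append(amount)
    return revenue, remaining, trades

# Assumed price stream with bounds low=1 and high=4, so the threshold is 2.
revenue, remaining, trades = reservation_price_trade(
    [1.0, 3.0, 2.0, 4.0], low=1.0, high=4.0, capacity=1.0, max_per_step=0.4
)
```

With a tight box constraint, a policy like this can reach the end of trading with resource left over if good prices arrive too late, which suggests why knowing (or predicting) the horizon matters.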

Guided Diffusion Model for Sensor Data Obfuscation

Dec 19, 2024

Abstract:Sensor data collected by Internet of Things (IoT) devices carries detailed information about individuals in their vicinity. Sharing this data with a semi-trusted service provider may compromise the individuals' privacy, as sensitive information can be extracted by powerful machine learning models. Data obfuscation empowered by generative models is a promising approach to generate synthetic sensor data such that the useful information contained in the original data is preserved and the sensitive information is obscured. This newly generated data will then be shared with the service provider instead of the original sensor data. In this work, we propose PrivDiffuser, a novel data obfuscation technique based on a denoising diffusion model that attains a superior trade-off between data utility and privacy through effective guidance techniques. Specifically, we extract latent representations that contain information about public and private attributes from sensor data to guide the diffusion model, and impose mutual information-based regularization when learning the latent representations to alleviate the entanglement of public and private attributes, thereby increasing the effectiveness of guidance. Evaluation on three real-world datasets containing different sensing modalities reveals that PrivDiffuser yields a better privacy-utility trade-off than the state-of-the-art obfuscation model, decreasing the utility loss by up to $1.81\%$ and the privacy loss by up to $3.42\%$. Moreover, we show that users with diverse privacy needs can use PrivDiffuser to protect their privacy without having to retrain the model.

Grey-box Bayesian Optimization for Sensor Placement in Assisted Living Environments

Sep 11, 2023

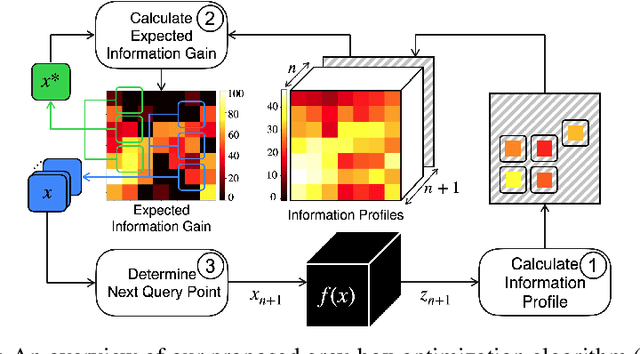

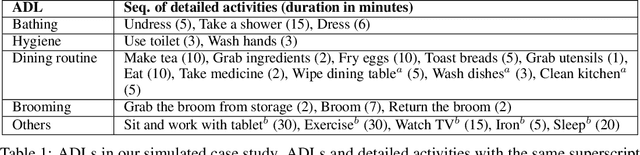

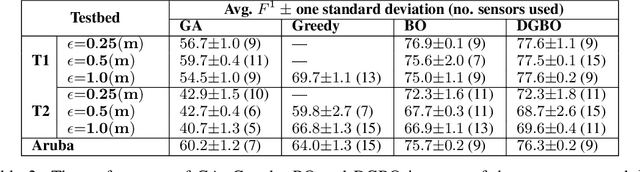

Abstract:Optimizing the configuration and placement of sensors is crucial for reliable fall detection, indoor localization, and activity recognition in assisted living spaces. We propose a novel, sample-efficient approach to find a high-quality sensor placement in an arbitrary indoor space based on grey-box Bayesian optimization and simulation-based evaluation. Our key technical contribution lies in capturing domain-specific knowledge about the spatial distribution of activities and incorporating it into the iterative selection of query points in Bayesian optimization. Considering two simulated indoor environments and a real-world dataset containing human activities and sensor triggers, we show that our proposed method outperforms state-of-the-art black-box optimization techniques in identifying high-quality sensor placements, leading to accurate activity recognition in terms of F1-score, while also requiring significantly fewer (51.3% on average) expensive function queries.

Robust Multimodal Fusion for Human Activity Recognition

Mar 08, 2023

Abstract:The proliferation of IoT and mobile devices equipped with heterogeneous sensors has enabled new applications that rely on the fusion of time-series data generated by multiple sensors with different modalities. While there are promising deep neural network architectures for multimodal fusion, their performance falls apart quickly in the presence of consecutive missing data and noise across multiple modalities/sensors, issues that are prevalent in real-world settings. We propose Centaur, a multimodal fusion model for human activity recognition (HAR) that is robust to these data quality issues. Centaur combines a data cleaning module, which is a denoising autoencoder with convolutional layers, and a multimodal fusion module, which is a deep convolutional neural network with the self-attention mechanism to capture cross-sensor correlation. We train Centaur using a stochastic data corruption scheme and evaluate it on three datasets that contain data generated by multiple inertial measurement units. Centaur's data cleaning module outperforms 2 state-of-the-art autoencoder-based models and its multimodal fusion module outperforms 4 strong baselines. Compared to 2 related robust fusion architectures, Centaur is more robust, achieving 11.59-17.52% higher accuracy in HAR, especially in the presence of consecutive missing data in multiple sensor channels.

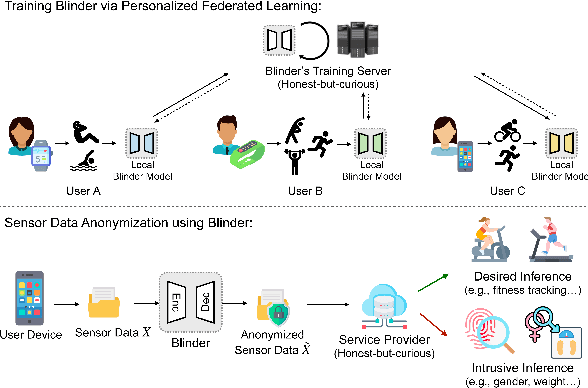

Blinder: End-to-end Privacy Protection in Sensing Systems via Personalized Federated Learning

Sep 27, 2022

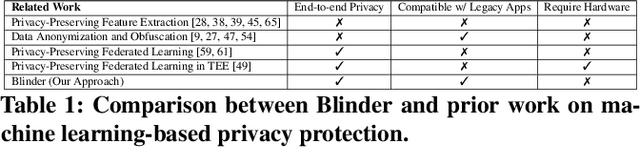

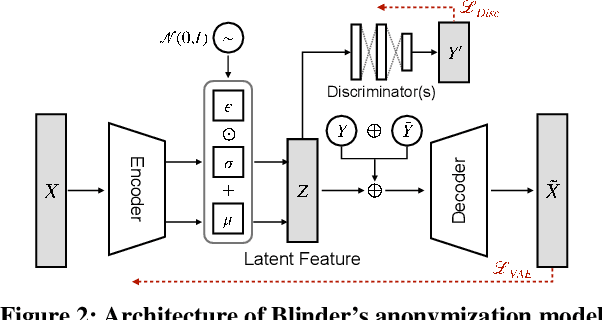

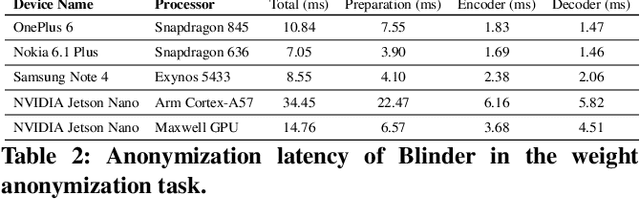

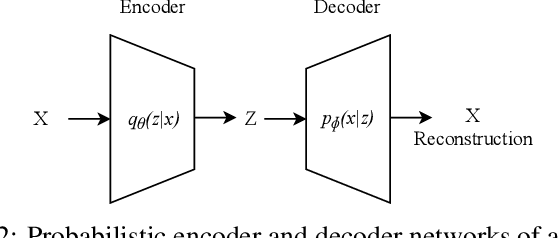

Abstract:This paper proposes a sensor data anonymization model that is trained on decentralized data and strikes a desirable trade-off between data utility and privacy, even in heterogeneous settings where the collected sensor data have different underlying distributions. Our anonymization model, dubbed Blinder, is based on a variational autoencoder and discriminator networks trained in an adversarial fashion. We use the model-agnostic meta-learning framework to adapt the anonymization model trained via federated learning to each user's data distribution. We evaluate Blinder under different settings and show that it provides end-to-end privacy protection at the cost of increasing privacy loss by up to 4.00% and decreasing data utility by up to 4.24%, compared to the state-of-the-art anonymization model trained on centralized data. Our experiments confirm that Blinder can obscure multiple private attributes at once, and has sufficiently low power consumption and computational overhead for it to be deployed on edge devices and smartphones to perform real-time anonymization of sensor data.

Privacy-preserving Data Analysis through Representation Learning and Transformation

Nov 16, 2020

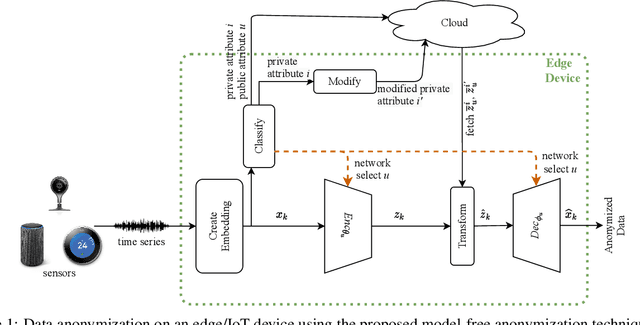

Abstract:The abundance of data from the sensors embedded in mobile and Internet of Things (IoT) devices and the remarkable success of deep neural networks in uncovering hidden patterns in time series data have led to mounting privacy concerns in recent years. In this paper, we aim to navigate the trade-off between data utility and privacy by learning low-dimensional representations that are useful for data anonymization. We propose probabilistic transformations in the latent space of a variational autoencoder to synthesize time series data such that intrusive inferences are prevented while desired inferences can still be made with a satisfactory level of accuracy. We compare our technique with state-of-the-art autoencoder-based anonymization techniques and additionally show that it can anonymize data in real time on resource-constrained edge devices.

On Identification of Distribution Grids

Nov 05, 2017

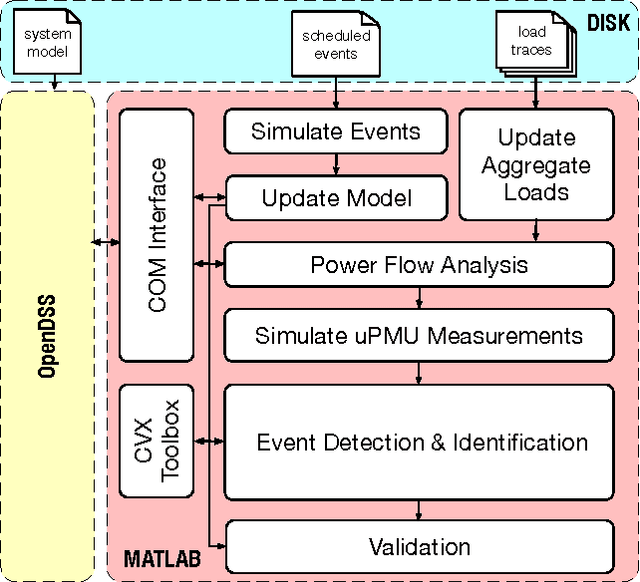

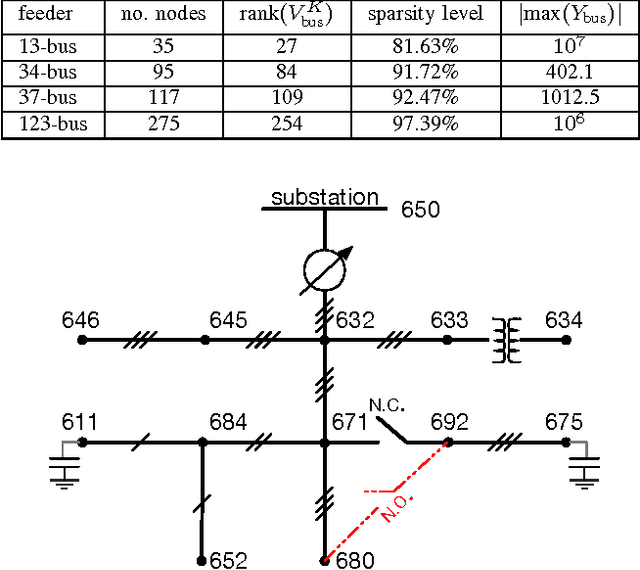

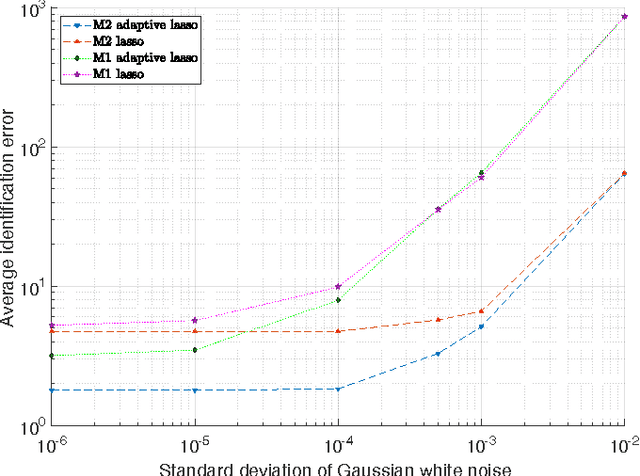

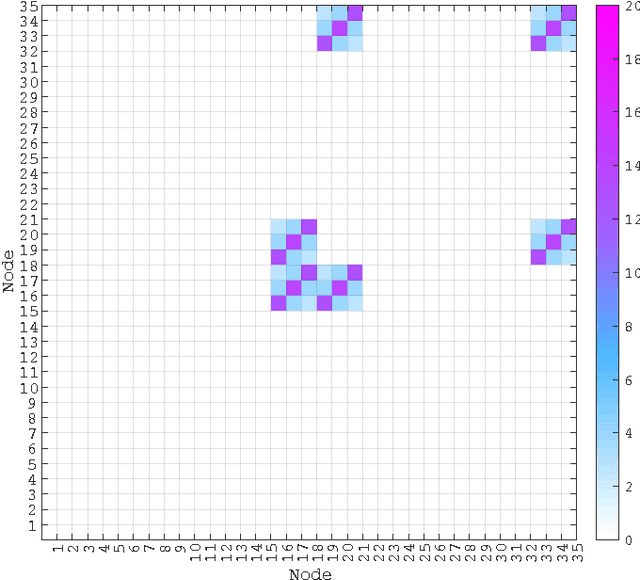

Abstract:Large-scale integration of distributed energy resources into residential distribution feeders necessitates careful control of their operation through power flow analysis. While the knowledge of the distribution system model is crucial for this type of analysis, it is often unavailable or outdated. The recent introduction of synchrophasor technology in low-voltage distribution grids has created an unprecedented opportunity to learn this model from high-precision, time-synchronized measurements of voltage and current phasors at various locations. This paper focuses on joint estimation of model parameters (admittance values) and operational structure of a poly-phase distribution network from the available telemetry data via the lasso, a method for regression shrinkage and selection. We propose tractable convex programs capable of tackling the low rank structure of the distribution system and develop an online algorithm for early detection and localization of critical events that induce a change in the admittance matrix. The efficacy of these techniques is corroborated through power flow studies on four three-phase radial distribution systems serving real household demands.
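
The idea of recovering admittance values and network structure with the lasso can be sketched on a toy problem. The example below is not the paper's convex programs or its online algorithm. It assumes a real-valued, noiseless linear model in which the current at one node equals the voltage measurements times a sparse row of the admittance matrix, and it solves the lasso with plain proximal gradient descent (ISTA). All names and data are hypothetical.

```python
import numpy as np

def soft_threshold(z, tau):
    """Proximal operator of tau * ||.||_1 (elementwise shrinkage toward zero)."""
    return np.sign(z) * np.maximum(np.abs(z) - tau, 0.0)

def lasso_ista(X, y, lam, n_iter=500):
    """Minimize (1/(2n)) * ||y - X w||^2 + lam * ||w||_1 with ISTA."""
    n, p = X.shape
    # Step size 1/L, where L = sigma_max(X)^2 / n is the gradient's Lipschitz constant.
    step = n / np.linalg.norm(X, 2) ** 2
    w = np.zeros(p)
    for _ in range(n_iter):
        grad = X.T @ (X @ w - y) / n
        w = soft_threshold(w - step * grad, step * lam)
    return w

# Synthetic telemetry (assumed): 200 voltage snapshots at 10 nodes.
rng = np.random.default_rng(0)
voltages = rng.normal(size=(200, 10))
# One row of a sparse admittance matrix: the node connects to nodes 1 and 4 only.
admittance_row = np.zeros(10)
admittance_row[[1, 4]] = [2.0, -1.5]
currents = voltages @ admittance_row

estimate = lasso_ista(voltages, currents, lam=0.01)
# Nonzero entries of the estimate indicate which nodes are electrically connected.
connected = np.flatnonzero(np.abs(estimate) > 1e-3)
```

The L1 penalty drives coefficients of unconnected nodes to zero, so the same regression yields both parameter estimates and the operational structure. Real phasor data is complex-valued and noisy, which this sketch does not model.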
