Yanzhao Wang

Knowing When to Stop Matters: A Unified Algorithm for Online Conversion under Horizon Uncertainty

Feb 06, 2025

Abstract: This paper investigates the online conversion problem, which involves sequentially trading a divisible resource (e.g., energy) under dynamically changing prices to maximize profit. A key challenge in online conversion is managing decisions under horizon uncertainty, where the duration of trading is either known, revealed partway, or entirely unknown. We propose a unified algorithm that achieves optimal competitive guarantees across these horizon models, accounting for practical constraints such as box constraints, which limit the maximum allowable trade per step. Additionally, we extend the algorithm to a learning-augmented version that leverages horizon predictions to adaptively balance performance: it achieves near-optimal results when predictions are accurate while maintaining strong guarantees when predictions are unreliable. These results advance the understanding of online conversion under various degrees of horizon uncertainty and provide more practical strategies to address real-world constraints.

MAVOT: Memory-Augmented Video Object Tracking

Nov 26, 2017

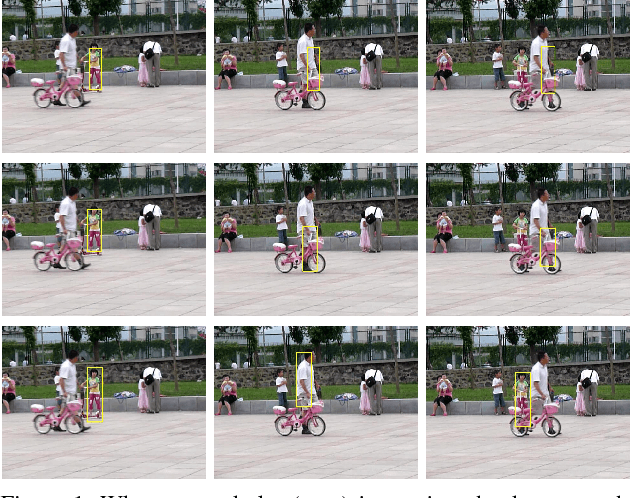

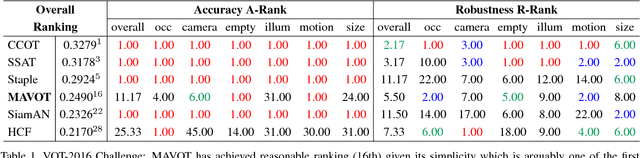

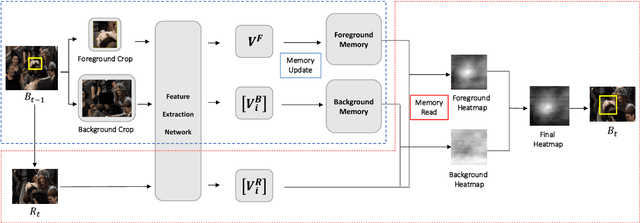

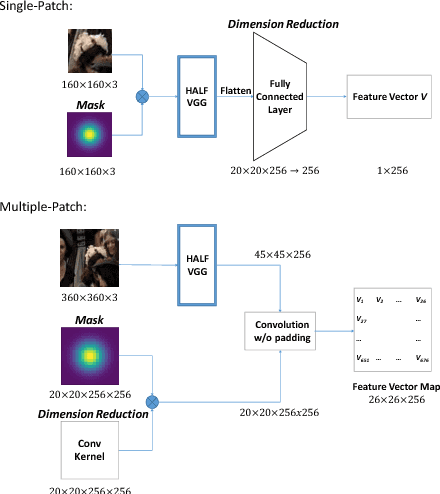

Abstract: We introduce a one-shot learning approach for video object tracking. The proposed algorithm requires seeing the object to be tracked only once, and employs an external memory to store and remember the evolving features of the foreground object as well as backgrounds over time during tracking. With the relevant memory retrieved and updated at each tracking step, our tracking model is capable of maintaining long-term memory of the object, and thus can naturally deal with hard tracking scenarios including partial and total occlusion, motion changes, and large scale and shape variations. In our experiments, we train on the ImageNet ILSVRC2015 video detection dataset and use the VOT-2016 benchmark to test and compare our Memory-Augmented Video Object Tracking (MAVOT) model. From the results, we conclude that given its one-shot property and simplicity in design, MAVOT is an attractive approach in visual tracking because it shows good performance on the VOT-2016 benchmark and is among the top 5 performers in accuracy and robustness under occlusion, motion changes, and empty target.
