Oleg V. Komogortsev

Ocular Authentication: Fusion of Gaze and Periocular Modalities

May 26, 2025

Abstract: This paper investigates the feasibility of fusing two eye-centric authentication modalities, eye movements and periocular images, within a calibration-free authentication system. While each modality has independently shown promise for user authentication, their combination within a unified gaze-estimation pipeline has not been thoroughly explored at scale. In this report, we propose a multimodal authentication system and evaluate it using a large-scale in-house dataset comprising 9202 subjects with an eye tracking (ET) signal quality equivalent to a consumer-facing virtual reality (VR) device. Our results show that the multimodal approach consistently outperforms both unimodal systems across all scenarios, surpassing the FIDO benchmark. The integration of a state-of-the-art machine learning architecture contributed significantly to the overall authentication performance at scale, driven by the model's ability to capture authentication representations and the complementary discriminative characteristics of the fused modalities.

Filtering Eye-Tracking Data From an EyeLink 1000: Comparing Heuristic, Savitzky-Golay, IIR and FIR Digital Filters

Mar 03, 2023

Abstract: In a previous report (Raju et al., 2023) we concluded that, if the goal was to preserve events such as saccades, microsaccades, and smooth pursuit in eye-tracking recordings, data with sine wave frequencies less than 100 Hz (-3 dB) were the signal and data above 100 Hz were noise. We compare 5 filters in their ability to preserve signal and remove noise. Specifically, we compared the proprietary STD and EXTRA heuristic filters provided by our EyeLink 1000 (SR-Research, Ottawa, Canada), a Savitzky-Golay (SG) filter, an infinite impulse response (IIR) filter (low-pass Butterworth), and a finite impulse response (FIR) filter. For each of the non-heuristic filters, we systematically searched for optimal parameters. Both the IIR and the FIR filters were zero-phase filters. Mean frequency response profiles and amplitude spectra for all 5 filters are provided. In addition, we examined the effect of our filters on a noisy recording. Our FIR filter had the sharpest roll-off of any filter. Therefore, it maintained the signal and removed noise more effectively than any other filter. On this basis, we recommend the use of our FIR filter. Several reports have shown that filtering increases the temporal autocorrelation of a signal. To address this, the present filters were also evaluated in terms of autocorrelation (specifically the first 3 lags). Of all our filters, the STD filter introduced the least amount of autocorrelation.
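As a rough illustration of what a zero-phase FIR low-pass filter looks like, the sketch below builds a Hamming-windowed sinc kernel and applies it as a centred convolution. It is not the filter from the report: the 1000 Hz sampling rate, the 101-tap length, the window choice, and the function names `lowpass_fir_kernel`, `filter_zero_phase`, and `gain_at` are all assumptions made for this example, and the report's optimized parameters are not given in the abstract.

```python
import cmath
import math


def lowpass_fir_kernel(cutoff_hz, fs_hz, num_taps):
    """Hamming-windowed sinc low-pass kernel (odd length, symmetric, unit DC gain)."""
    if num_taps < 3 or num_taps % 2 == 0:
        raise ValueError("num_taps must be odd and at least 3")
    fc = cutoff_hz / fs_hz  # normalised cutoff in cycles per sample
    mid = num_taps // 2
    taps = []
    for n in range(num_taps):
        k = n - mid
        # Ideal (infinite) low-pass impulse response, evaluated at offset k.
        ideal = 2 * fc if k == 0 else math.sin(2 * math.pi * fc * k) / (math.pi * k)
        window = 0.54 - 0.46 * math.cos(2 * math.pi * n / (num_taps - 1))
        taps.append(ideal * window)
    total = sum(taps)
    return [t / total for t in taps]  # normalise so a constant passes unchanged


def filter_zero_phase(signal, taps):
    """Centred convolution with a symmetric kernel, so no phase delay is added.

    Edges are handled by repeating the first and last sample.
    """
    mid = len(taps) // 2
    last = len(signal) - 1
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for j, t in enumerate(taps):
            idx = min(max(i + j - mid, 0), last)
            acc += t * signal[idx]
        out.append(acc)
    return out


def gain_at(taps, freq_hz, fs_hz):
    """Magnitude of the kernel's frequency response at freq_hz."""
    w = 2 * math.pi * freq_hz / fs_hz
    return abs(sum(t * cmath.exp(-1j * w * n) for n, t in enumerate(taps)))


# Assumed values for illustration only: 1000 Hz sampling, 100 Hz cutoff, 101 taps.
taps = lowpass_fir_kernel(cutoff_hz=100.0, fs_hz=1000.0, num_taps=101)
passband_gain = gain_at(taps, 10.0, 1000.0)   # well inside the passband
stopband_gain = gain_at(taps, 300.0, 1000.0)  # well inside the stopband
```

Lengthening the kernel narrows the transition band, which is one way to obtain a sharper roll-off at the cost of more samples affected by edge handling.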

Benefits of temporal information for appearance-based gaze estimation

May 24, 2020

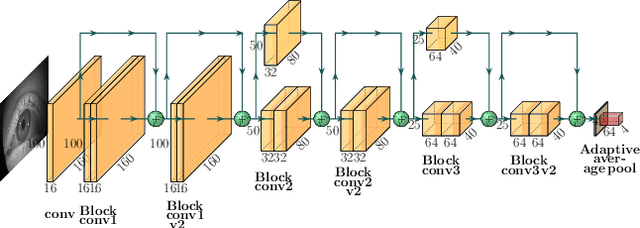

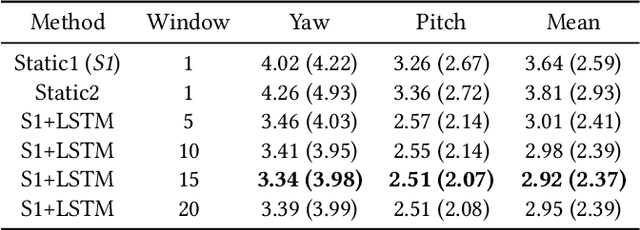

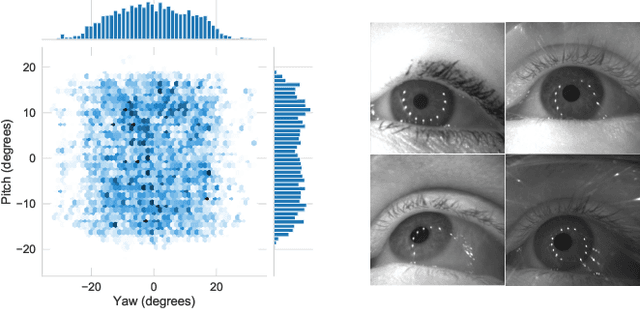

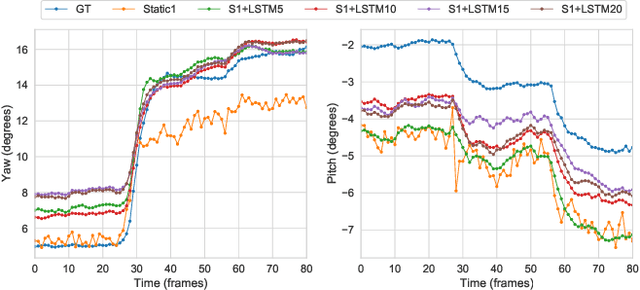

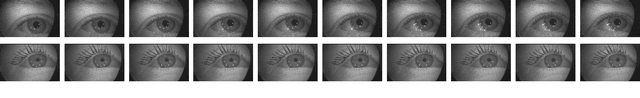

Abstract: State-of-the-art appearance-based gaze estimation methods, usually based on deep learning techniques, mainly rely on static features. However, the temporal trace of eye gaze contains useful information for estimating a given gaze point. For example, approaches leveraging sequential eye gaze information when applied to remote or low-resolution image scenarios with off-the-shelf cameras are showing promising results. The magnitude of the contribution from the temporal gaze trace is as yet unclear for higher resolution/frame rate imaging systems, in which more detailed information about an eye is captured. In this paper, we investigate whether temporal sequences of eye images, captured using a high-resolution, high-frame rate head-mounted virtual reality system, can be leveraged to enhance the accuracy of an end-to-end appearance-based deep-learning model for gaze estimation. Performance is compared against a static-only version of the model. Results demonstrate statistically significant benefits of temporal information, particularly for the vertical component of gaze.

OpenEDS2020: Open Eyes Dataset

May 08, 2020

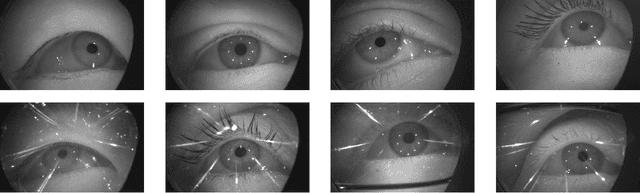

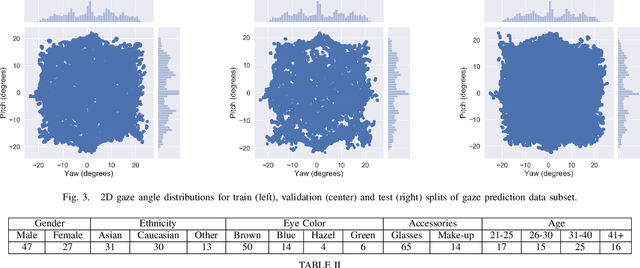

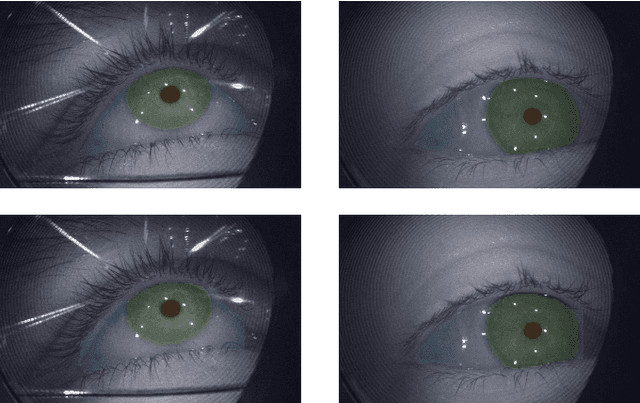

Abstract: We present the second edition of the OpenEDS dataset, OpenEDS2020, a novel dataset of eye-image sequences captured at a frame rate of 100 Hz under controlled illumination, using a virtual-reality head-mounted display fitted with two synchronized eye-facing cameras. The dataset, which is anonymized to remove any personally identifiable information on participants, consists of 80 participants of varied appearance performing several gaze-elicited tasks, and is divided into two subsets: 1) Gaze Prediction Dataset, with up to 66,560 sequences containing 550,400 eye-images and respective gaze vectors, created to foster research in spatio-temporal gaze estimation and prediction approaches; and 2) Eye Segmentation Dataset, consisting of 200 sequences sampled at 5 Hz, with up to 29,500 images, of which 5% contain a semantic segmentation label, devised to encourage the use of temporal information to propagate labels to contiguous frames. Baseline experiments have been evaluated on OpenEDS2020, one for each task, with an average angular error of 5.37 degrees when performing gaze prediction on 1 to 5 frames into the future, and a mean intersection over union score of 84.1% for semantic segmentation. As with its predecessor, the OpenEDS dataset, we anticipate that this new dataset will continue creating opportunities for researchers in the eye tracking, machine learning and computer vision communities to advance the state of the art for virtual reality applications. The dataset is available for download upon request at http://research.fb.com/programs/openeds-2020-challenge/.
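The two baseline metrics named above can be sketched as follows. This is a minimal illustration using the standard definitions of angular error between 3D vectors and mean intersection over union over class labels; the function names and the choice to skip classes absent from both prediction and ground truth are assumptions, and the abstract does not specify the exact averaging conventions used in the benchmark.

```python
import math


def angular_error_deg(pred, truth):
    """Angle in degrees between a predicted and a ground-truth 3D gaze vector."""
    dot = sum(p * t for p, t in zip(pred, truth))
    norm = math.sqrt(sum(p * p for p in pred)) * math.sqrt(sum(t * t for t in truth))
    cos_angle = max(-1.0, min(1.0, dot / norm))  # clamp against rounding error
    return math.degrees(math.acos(cos_angle))


def mean_iou(pred_labels, true_labels, num_classes):
    """Mean over classes of |intersection| / |union| for flat label sequences.

    Classes that appear in neither sequence are skipped (an assumption).
    """
    ious = []
    for c in range(num_classes):
        inter = sum(1 for p, t in zip(pred_labels, true_labels) if p == c and t == c)
        union = sum(1 for p, t in zip(pred_labels, true_labels) if p == c or t == c)
        if union:
            ious.append(inter / union)
    return sum(ious) / len(ious)


# Toy inputs, not taken from the dataset.
err = angular_error_deg((1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
miou = mean_iou([0, 1, 1, 2], [0, 1, 2, 2], num_classes=3)
```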

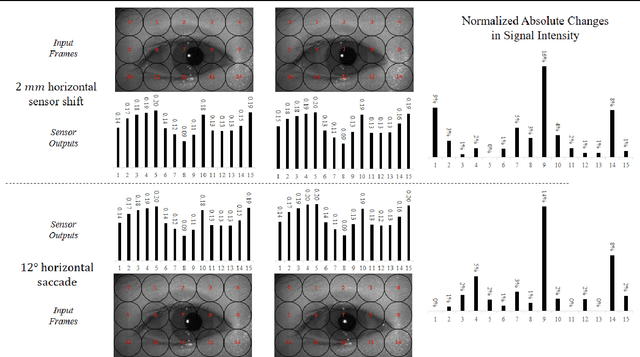

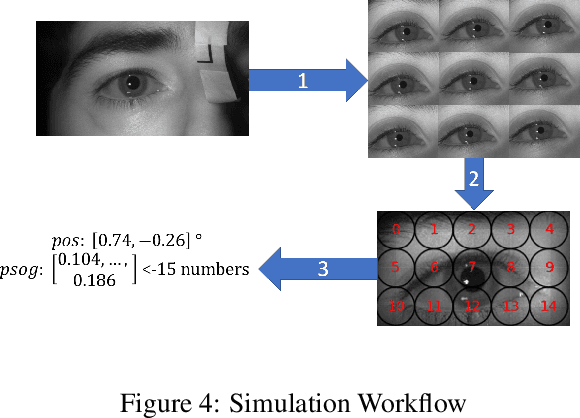

Assessment of Shift-Invariant CNN Gaze Mappings for PS-OG Eye Movement Sensors

Sep 04, 2019

Abstract: Photosensor oculography (PS-OG) eye movement sensors offer desirable performance characteristics for integration within wireless head-mounted devices (HMDs), including low power consumption and high sampling rates. To address the known performance degradation of these sensors due to HMD shifts, various machine learning techniques have been proposed for mapping sensor outputs to gaze location. This paper advances the understanding of a recently introduced convolutional neural network designed to provide shift-invariant gaze mapping within a specified range of sensor translations. Performance is assessed for shift training examples which better reflect the distribution of values that would be generated through manual repositioning of the HMD during a dedicated collection of training data. The network is shown to exhibit comparable accuracy for this realistic shift distribution versus a previously considered rectangular grid, thereby enhancing the feasibility of in-field set-up. In addition, this work further demonstrates the practical viability of the proposed initialization process by showing robust mapping performance versus training data scale. The ability to maintain reasonable accuracy for shifts extending beyond those introduced during training is also demonstrated.

Method to Detect Eye Position Noise from Video-Oculography when Detection of Pupil or Corneal Reflection Position Fails

Sep 08, 2017

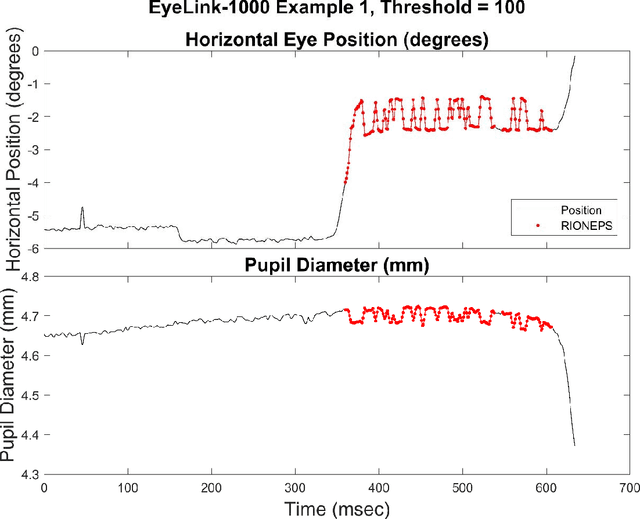

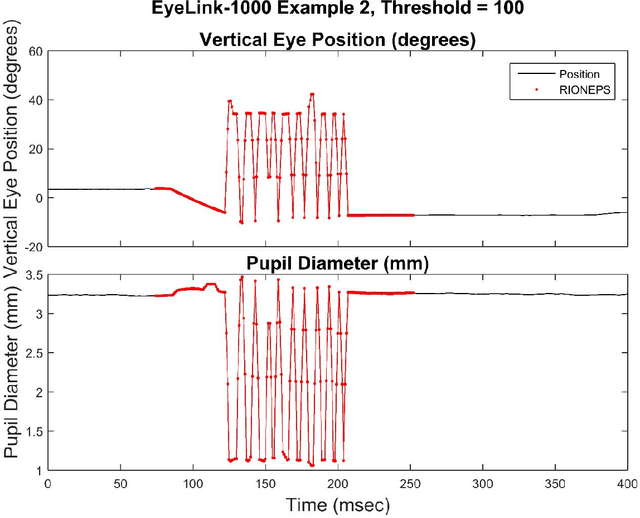

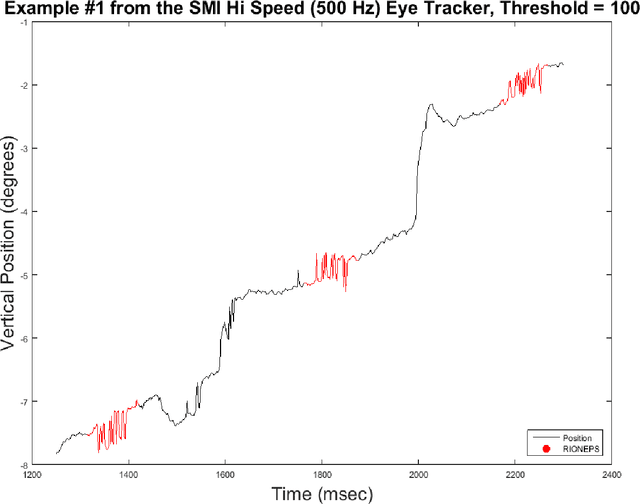

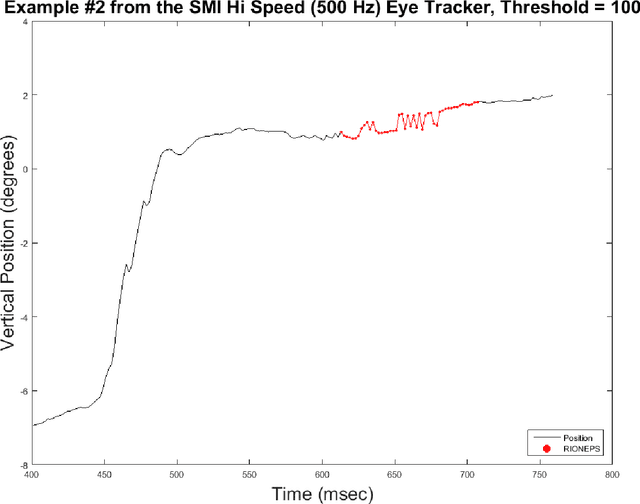

Abstract: We present software to detect noise in eye position signals from video-based eye-tracking systems that depend on accurate pupil and corneal reflection position estimation. When such systems transiently fail to properly detect the pupil or the corneal reflection due to occlusion from eyelids, eyelashes or various shadows, the estimated gaze position is false. This produces an artifactual signal in the position trace that oscillates rapidly and irregularly between true and false gaze positions. We refer to this noise as RIONEPS (Rapid Irregularly Oscillating Noise of the Eye Position Signal). Our method for detecting these periods automatically is based on an estimate of the relative inefficiency of the eye position signal. We look for RIONEPS in the horizontal and vertical traces separately, and although we typically use the method offline, it is suitable for adaptation to real-time use. This method requires a threshold to be set, and although we provide some guidance, thresholds will have to be estimated empirically.

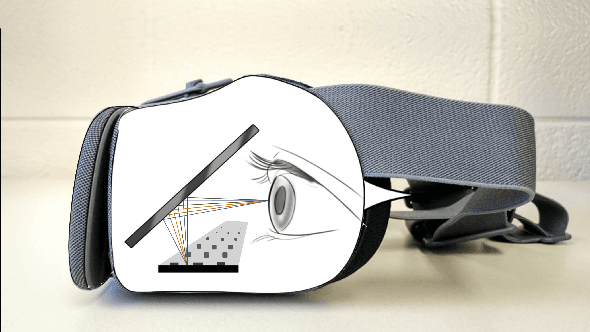

Hybrid PS-V Technique: A Novel Sensor Fusion Approach for Fast Mobile Eye-Tracking with Sensor-Shift Aware Correction

Jul 19, 2017

Abstract: This paper introduces and evaluates a hybrid technique that efficiently fuses the eye-tracking principles of photosensor oculography (PSOG) and video oculography (VOG). The main concept of this novel approach is to use a few fast and power-economic photosensors as the core mechanism for performing high-speed eye-tracking while, in parallel, using a video sensor operating at a low sampling rate (snapshot mode) to perform dead-reckoning error correction when sensor movements occur. In order to evaluate the proposed method, we simulate the functional components of the technique and present our results in experimental scenarios involving various combinations of horizontal and vertical eye and sensor movements. Our evaluation shows that the developed technique can be used to provide robustness to sensor shifts that otherwise could induce error larger than 5 deg. Our analysis suggests that the technique can potentially enable high-speed eye-tracking at low power profiles, making it suitable for use in emerging head-mounted devices, e.g. AR/VR headsets.
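The general idea of correcting a fast signal with occasional slow reference samples can be sketched in a few lines. This is a deliberately simplified, one-dimensional illustration and not the technique evaluated in the paper: it assumes the shift-induced error is a constant additive offset between snapshots, and the function name `fuse_with_snapshots` and the toy numbers are hypothetical.

```python
def fuse_with_snapshots(fast_gaze, snapshots):
    """Correct a high-rate gaze trace using sparse reference samples.

    fast_gaze: high-rate photosensor gaze estimates in degrees.
    snapshots: dict mapping a sample index to the low-rate video gaze
               estimate (degrees) taken at that index.
    Each snapshot updates an additive offset that is applied to every
    fast sample until the next snapshot arrives.
    """
    offset = 0.0
    corrected = []
    for i, gaze in enumerate(fast_gaze):
        if i in snapshots:
            offset = snapshots[i] - gaze  # error observed at this snapshot
        corrected.append(gaze + offset)
    return corrected


# Toy trace: the eye sweeps 0..5 deg; a sensor shift at index 3 adds 5 deg of bias.
fast = [0.0, 1.0, 2.0, 8.0, 9.0, 10.0]
video = {0: 0.0, 4: 4.0}  # snapshots available only at indices 0 and 4
corrected = fuse_with_snapshots(fast, video)
```

In this toy trace the sample at index 3 stays biased because no snapshot has arrived since the shift, which shows how the snapshot rate bounds the duration of uncorrected error.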

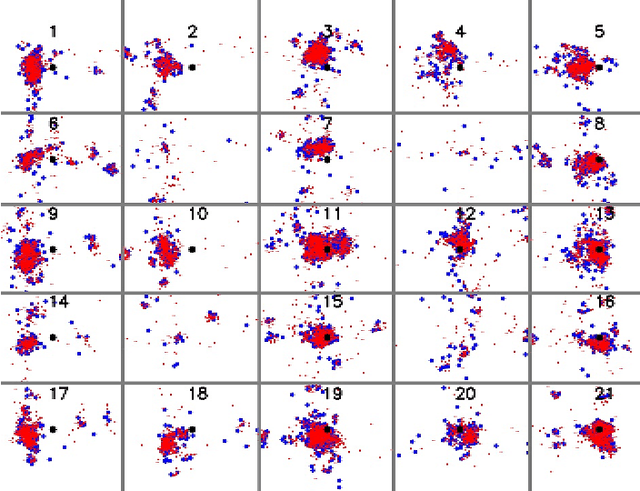

Photosensor Oculography: Survey and Parametric Analysis of Designs using Model-Based Simulation

Jul 19, 2017

Abstract: This paper presents a renewed overview of photosensor oculography (PSOG), an eye-tracking technique based on the principle of using simple photosensors to measure the amount of reflected (usually infrared) light when the eye rotates. Photosensor oculography can provide measurements with high precision, low latency and reduced power consumption, and thus it appears to be an attractive option for performing eye-tracking in emerging head-mounted interaction devices, e.g. augmented and virtual reality (AR/VR) headsets. In our current work we employ an adjustable simulation framework as a common basis for performing an exploratory study of the eye-tracking behavior of different photosensor oculography designs. With the performed experiments we explore the effects of varying some basic design parameters on the resulting accuracy and cross-talk, which are crucial characteristics for the seamless operation of human-computer interaction applications based on eye-tracking. Our experimental results reveal the design trade-offs that need to be adopted to tackle the competing conditions that lead to optimum performance of different eye-tracking characteristics. We also present the transformations that arise in the eye-tracking output when sensor shifts occur, and assess the resulting degradation in accuracy for different combinations of eye movements and sensor shifts.

Method to Assess the Temporal Persistence of Potential Biometric Features: Application to Oculomotor, and Gait-Related Databases

Sep 13, 2016

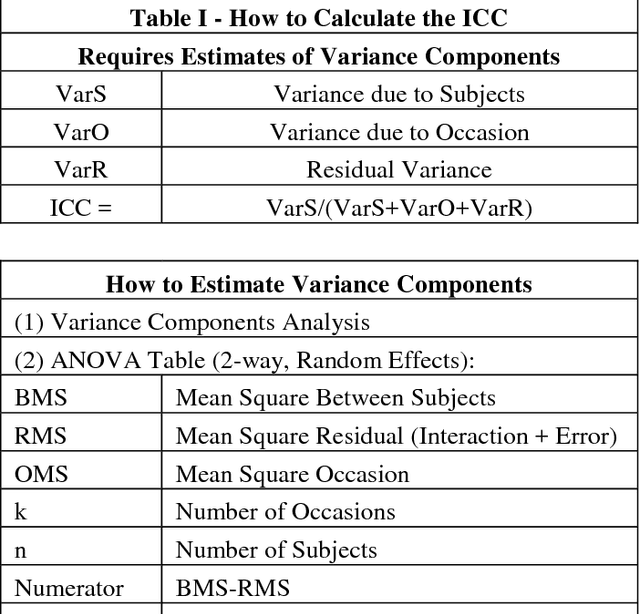

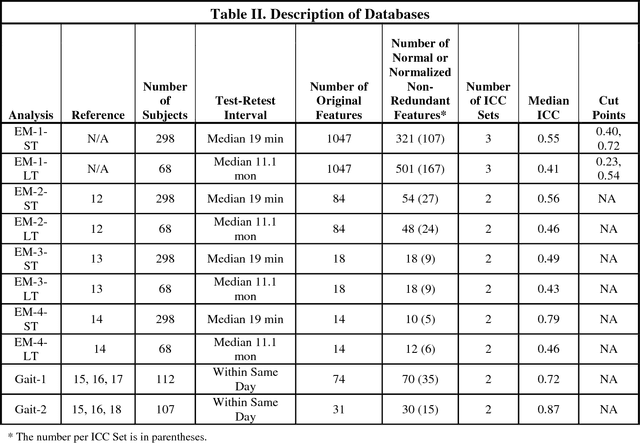

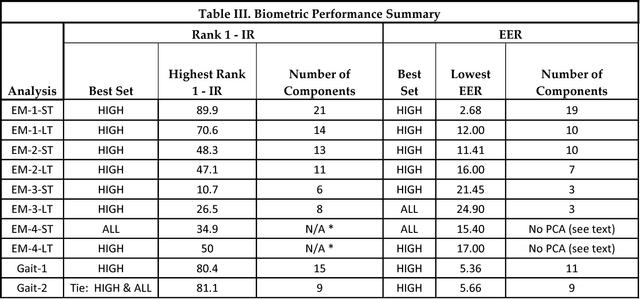

Abstract: Although temporal persistence, or permanence, is a well-understood requirement for optimal biometric features, there is no general agreement on how to assess temporal persistence. We suggest that the best way to assess temporal persistence is to perform a test-retest study, and assess test-retest reliability. For ratio-scale features that are normally distributed, this is best done using the Intraclass Correlation Coefficient (ICC). For 10 distinct data sets (8 eye-movement related, and 2 gait related), we calculated the test-retest reliability ('Temporal persistence') of each feature, and compared biometric performance of high-ICC features to lower ICC features, and to the set of all features. We demonstrate that using a subset of only high-ICC features produced superior Rank-1-Identification Rate (Rank-1-IR) performance in 9 of 10 databases (p = 0.01, one-tailed). For Equal Error Rate (EER), using a subset of only high-ICC features produced superior performance in 8 of 10 databases (p = 0.055, one-tailed). In general, then, prescreening potential biometric features, and choosing only highly reliable features, will yield better performance than lower ICC features or than the set of all features combined. We hypothesize that this would likely be the case for any biometric modality where the features can be expressed as quantitative values on an interval or ratio scale, assuming an adequate number of relatively independent features.
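A test-retest prescreening step of this kind might look like the sketch below. The abstract does not say which ICC variant was used, so the one-way random-effects form, ICC(1,1) = (MSB - MSW) / (MSB + (k - 1) MSW), is an assumption here, as are the function names, the toy feature values, and the 0.7 threshold.

```python
def icc_one_way(scores):
    """One-way random-effects ICC(1,1) for scores[subject][session].

    Requires at least two subjects and the same number (>= 2) of
    sessions for every subject.
    """
    n = len(scores)
    k = len(scores[0]) if n else 0
    if n < 2 or k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need >= 2 subjects with the same number (>= 2) of sessions")
    grand = sum(sum(row) for row in scores) / (n * k)
    means = [sum(row) / k for row in scores]
    # Between-subject and within-subject mean squares.
    ms_between = k * sum((m - grand) ** 2 for m in means) / (n - 1)
    ms_within = sum(
        (x - m) ** 2 for row, m in zip(scores, means) for x in row
    ) / (n * (k - 1))
    denom = ms_between + (k - 1) * ms_within
    if denom == 0:
        raise ValueError("all scores identical; ICC undefined")
    return (ms_between - ms_within) / denom


def select_reliable(features, threshold):
    """Return names of features whose ICC meets the threshold."""
    return [name for name, scores in features.items()
            if icc_one_way(scores) >= threshold]


# Toy data: three subjects, two sessions each (values are made up).
features = {
    "stable": [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]],
    "noisy": [[1.0, 2.0], [2.0, 1.0], [1.5, 1.5]],
}
kept = select_reliable(features, threshold=0.7)  # threshold is hypothetical
```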
