Dillon Lohr

Ocular Authentication: Fusion of Gaze and Periocular Modalities

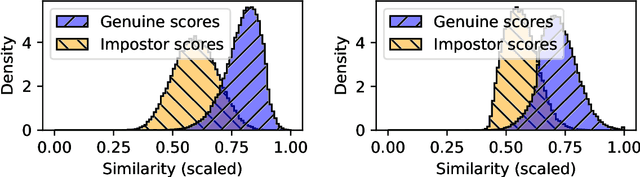

May 26, 2025

Abstract: This paper investigates the feasibility of fusing two eye-centric authentication modalities, eye movements and periocular images, within a calibration-free authentication system. While each modality has independently shown promise for user authentication, their combination within a unified gaze-estimation pipeline has not been thoroughly explored at scale. In this report, we propose a multimodal authentication system and evaluate it using a large-scale in-house dataset comprising 9202 subjects with an eye tracking (ET) signal quality equivalent to a consumer-facing virtual reality (VR) device. Our results show that the multimodal approach consistently outperforms both unimodal systems across all scenarios, surpassing the FIDO benchmark. The integration of a state-of-the-art machine learning architecture contributed significantly to the overall authentication performance at scale, driven by the model's ability to capture authentication representations and the complementary discriminative characteristics of the fused modalities.

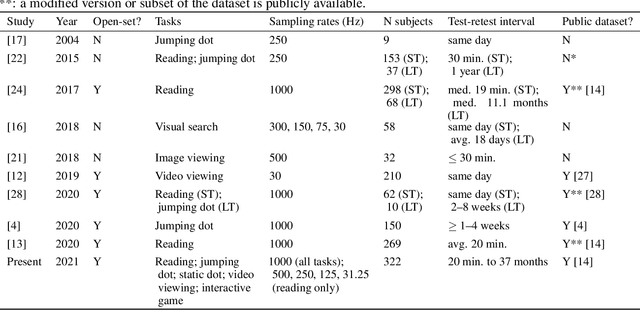

Establishing a Baseline for Gaze-driven Authentication Performance in VR: A Breadth-First Investigation on a Very Large Dataset

Apr 17, 2024

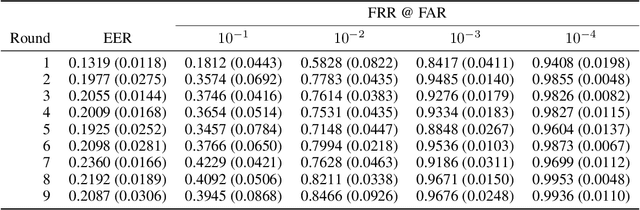

Abstract: This paper performs the crucial work of establishing a baseline for gaze-driven authentication performance to begin answering fundamental research questions using a very large dataset of gaze recordings from 9202 people with a level of eye tracking (ET) signal quality equivalent to modern consumer-facing virtual reality (VR) platforms. The size of the employed dataset is at least an order of magnitude larger than any other dataset from previous related work. Binocular estimates of the optical and visual axes of the eyes and a minimum duration for enrollment and verification are required for our model to achieve a false rejection rate (FRR) of below 3% at a false acceptance rate (FAR) of 1 in 50,000. In terms of identification accuracy, which decreases with gallery size, we estimate that our model would fall below chance-level accuracy for gallery sizes of 148,000 or more. Our major findings indicate that gaze authentication can be as accurate as required by the FIDO standard when driven by a state-of-the-art machine learning architecture and a sufficiently large training dataset.
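The operating point quoted in this abstract (FRR at a fixed FAR) can be illustrated with a minimal sketch. The function name `frr_at_far`, the convention that a higher score means a better match, and the strict `score > threshold` acceptance rule are all assumptions made for illustration; the paper's own evaluation code is not shown on this page.

```python
import numpy as np

def frr_at_far(genuine, impostor, target_far):
    """Hypothetical helper: pick the lowest threshold whose empirical FAR
    does not exceed target_far, then report the FRR at that threshold.

    Assumes higher scores mean "more likely the same person" and that an
    attempt is accepted when score > threshold.
    """
    genuine = np.asarray(genuine, dtype=float)
    impostor = np.sort(np.asarray(impostor, dtype=float))  # ascending

    # Number of impostor attempts we are allowed to accept.
    k = int(np.floor(target_far * impostor.size))
    k = min(k, impostor.size - 1)  # keep the index below in range

    # Threshold is the (k+1)-th largest impostor score, so at most k
    # impostor scores are strictly greater than it.
    threshold = impostor[impostor.size - k - 1]

    # Genuine attempts at or below the threshold are falsely rejected.
    frr = float(np.mean(genuine <= threshold))
    return float(threshold), frr


# Toy usage with made-up scores (not data from the paper).
threshold, frr = frr_at_far([4, 6, 8, 12], list(range(10)), target_far=0.5)
print(threshold, frr)
```

Note that resolving a FAR of 1 in 50,000 empirically needs at least 50,000 impostor comparisons; with fewer, this sketch falls back to the largest impostor score as the threshold.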

Eye Know You Too: A DenseNet Architecture for End-to-end Biometric Authentication via Eye Movements

Jan 05, 2022

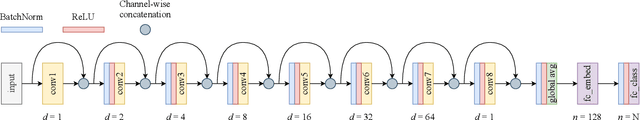

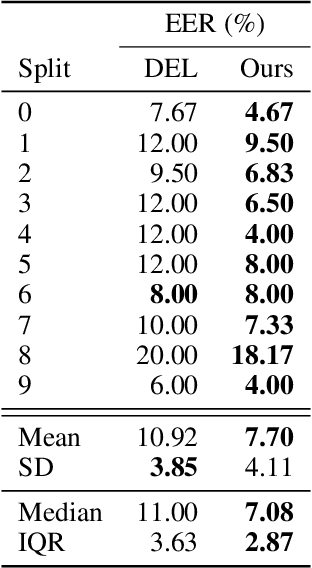

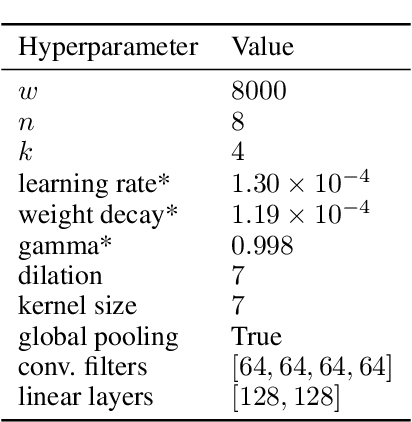

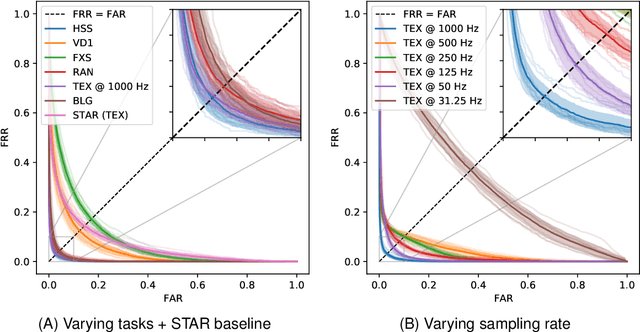

Abstract: Plain convolutional neural networks (CNNs) have been used to achieve state-of-the-art performance in various domains in the past years, including biometric authentication via eye movements. There have been many relatively recent improvements to plain CNNs, including residual networks (ResNets) and densely connected convolutional networks (DenseNets). Although these networks primarily target image processing domains, they can be easily modified to work with time series data. We employ a DenseNet architecture for end-to-end biometric authentication via eye movements. We compare our model against the most relevant prior works including the current state-of-the-art. We find that our model achieves state-of-the-art performance for all considered training conditions and data sets.

Eye Know You: Metric Learning for End-to-end Biometric Authentication Using Eye Movements from a Longitudinal Dataset

Apr 21, 2021

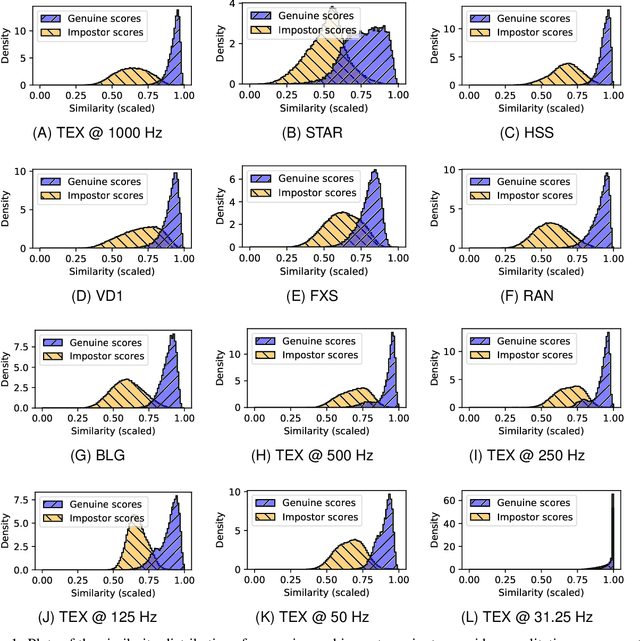

Abstract: While numerous studies have explored eye movement biometrics since the modality's inception in 2004, the permanence of eye movements remains largely unexplored as most studies utilize datasets collected within a short time frame. This paper presents a convolutional neural network for authenticating users using their eye movements. The network is trained with an established metric learning loss function, multi-similarity loss, which seeks to form a well-clustered embedding space and directly enables the enrollment and authentication of out-of-sample users. Performance measures are computed on GazeBase, a task-diverse and publicly available dataset collected over a 37-month period. This study includes an exhaustive analysis of the effects of training on various tasks and downsampling from 1000 Hz to several lower sampling rates. Our results reveal that reasonable authentication accuracy may be achieved even during a low-cognitive-load task or at low sampling rates. Moreover, we find that eye movements are quite resilient against template aging after 3 years.
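To make the idea of a loss that "seeks to form a well-clustered embedding space" concrete, here is a rough NumPy sketch of the basic multi-similarity loss on cosine similarities. It is an assumption-laden illustration, not the paper's training code: it omits the pair-mining step usually paired with this loss, and the hyperparameter values `alpha`, `beta`, and `lam` are placeholders rather than the settings used in the study.

```python
import numpy as np

def multi_similarity_loss(embeddings, labels, alpha=2.0, beta=50.0, lam=0.5):
    """Sketch of multi-similarity loss without pair mining.

    alpha, beta, and lam are illustrative values, not taken from the paper.
    Assumes every embedding has non-zero norm.
    """
    x = np.asarray(embeddings, dtype=float)
    x = x / np.linalg.norm(x, axis=1, keepdims=True)  # unit-length rows
    sim = x @ x.T                                     # cosine similarities

    labels = np.asarray(labels)
    same = labels[:, None] == labels[None, :]
    pos = same & ~np.eye(len(labels), dtype=bool)     # same user, not self
    neg = ~same                                       # different users

    # Positive pairs are penalized when their similarity is low ...
    pos_term = np.log1p(np.sum(np.exp(-alpha * (sim - lam)) * pos, axis=1)) / alpha
    # ... and negative pairs are penalized when their similarity is high.
    neg_term = np.log1p(np.sum(np.exp(beta * (sim - lam)) * neg, axis=1)) / beta
    return float(np.mean(pos_term + neg_term))


# Toy usage: the same four embeddings score lower when labels match clusters.
emb = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
print(multi_similarity_loss(emb, [0, 0, 1, 1]))  # clustered by user
print(multi_similarity_loss(emb, [0, 1, 0, 1]))  # users mixed across clusters
```

Because the loss depends only on pairwise similarities between embeddings, a trained network can embed recordings from users it never saw during training, which is what allows enrollment and verification of out-of-sample users.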
