Henry Griffith

Eye Know You: Metric Learning for End-to-end Biometric Authentication Using Eye Movements from a Longitudinal Dataset

Apr 21, 2021

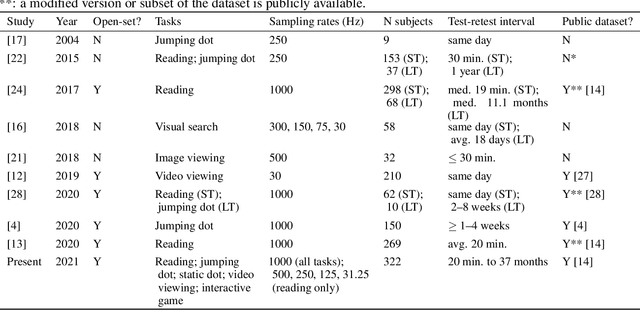

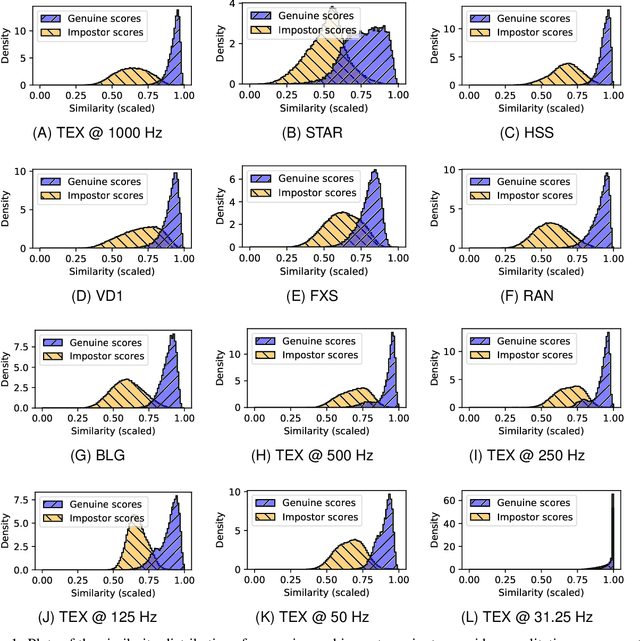

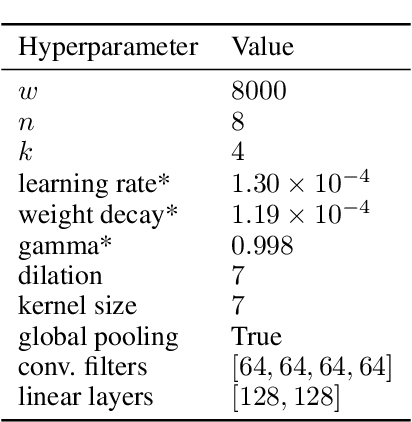

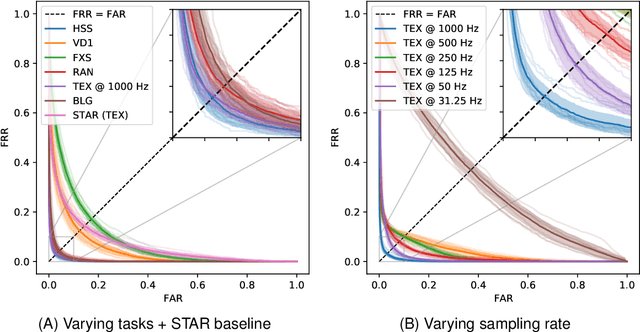

Abstract: While numerous studies have explored eye movement biometrics since the modality's inception in 2004, the permanence of eye movements remains largely unexplored as most studies utilize datasets collected within a short time frame. This paper presents a convolutional neural network for authenticating users using their eye movements. The network is trained with an established metric learning loss function, multi-similarity loss, which seeks to form a well-clustered embedding space and directly enables the enrollment and authentication of out-of-sample users. Performance measures are computed on GazeBase, a task-diverse and publicly-available dataset collected over a 37-month period. This study includes an exhaustive analysis of the effects of training on various tasks and downsampling from 1000 Hz to several lower sampling rates. Our results reveal that reasonable authentication accuracy may be achieved even during a low-cognitive-load task or at low sampling rates. Moreover, we find that eye movements are quite resilient against template aging after 3 years.
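
To illustrate how a well-clustered embedding space can support enrollment and authentication of users unseen during training, the following is a minimal sketch rather than the paper's implementation. It assumes the network has already mapped each eye movement recording to a fixed-length vector; the function names (`enroll`, `authenticate`), the use of cosine similarity, the averaging of enrollment embeddings, the threshold of 0.8, and the toy vectors are all hypothetical choices made for illustration and are not taken from the abstract.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def enroll(embeddings):
    """Average several embeddings of one user into a single template.

    Assumption: averaging is one simple enrollment strategy; the paper
    may use a different one.
    """
    n = len(embeddings)
    return [sum(column) / n for column in zip(*embeddings)]

def authenticate(template, probe, threshold=0.8):
    """Accept the probe if it is similar enough to the enrolled template.

    Assumption: the 0.8 threshold is arbitrary and chosen for this toy data.
    """
    return cosine_similarity(template, probe) >= threshold

# Toy 3-dimensional vectors standing in for network outputs (hypothetical).
enrolled = enroll([[0.9, 0.1, 0.0], [1.0, 0.0, 0.1]])
genuine_probe = [0.95, 0.05, 0.05]   # close to the enrolled user's cluster
impostor_probe = [0.0, 1.0, 0.2]     # far from the enrolled user's cluster

genuine_accepted = authenticate(enrolled, genuine_probe)
impostor_accepted = authenticate(enrolled, impostor_probe)
```

Because the decision depends only on distances between embeddings, a new user can be enrolled without retraining the network, which is the property the abstract attributes to metric learning.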
