Ioannis Rigas

Hybrid PS-V Technique: A Novel Sensor Fusion Approach for Fast Mobile Eye-Tracking with Sensor-Shift Aware Correction

Jul 19, 2017

Abstract: This paper introduces and evaluates a hybrid technique that efficiently fuses the eye-tracking principles of photosensor oculography (PSOG) and video oculography (VOG). The main concept of this novel approach is to use a few fast, power-efficient photosensors as the core mechanism for high-speed eye-tracking while, in parallel, using a video sensor operating at a low sampling rate (snapshot mode) to perform dead-reckoning error correction when sensor movements occur. To evaluate the proposed method, we simulate the functional components of the technique and present our results for experimental scenarios involving various combinations of horizontal and vertical eye and sensor movements. Our evaluation shows that the developed technique can provide robustness to sensor shifts that could otherwise induce errors larger than 5 deg. Our analysis suggests that the technique can potentially enable high-speed eye-tracking at low power, making it suitable for emerging head-mounted devices, e.g. AR/VR headsets.
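
The abstract describes the PSOG/VOG fusion only at a high level. As a rough illustration, the sketch below assumes a single (horizontal) gaze channel and models a sensor shift as a constant additive offset on the PSOG output, which each low-rate VOG snapshot re-estimates. The function name `fuse_psog_vog` and this offset model are hypothetical simplifications, not necessarily the correction procedure used in the paper.

```python
from typing import List, Optional, Sequence


def fuse_psog_vog(psog: Sequence[float], vog: Sequence[Optional[float]]) -> List[float]:
    """Correct high-rate PSOG gaze estimates with sparse VOG snapshots.

    psog[i]: photosensor-based horizontal gaze angle (deg) at sample i.
    vog[i]:  video-based gaze angle (deg) if a snapshot was taken at
             sample i, otherwise None.
    Simplifying assumption (hypothetical): a sensor shift adds a constant
    offset to the PSOG output, valid until the next snapshot.
    """
    if len(psog) != len(vog):
        raise ValueError("psog and vog must have the same length")
    offset = 0.0  # current dead-reckoning correction (deg)
    fused: List[float] = []
    for p, v in zip(psog, vog):
        if v is not None:
            # Snapshot available: re-estimate the offset caused by sensor shift.
            offset = v - p
        fused.append(p + offset)
    return fused


if __name__ == "__main__":
    # True gaze is 0, 1, 2, 3, 4 deg; a sensor shift before sample 3 adds
    # +5 deg to the PSOG output. VOG snapshots are taken at samples 0 and 3.
    psog = [0.0, 1.0, 2.0, 8.0, 9.0]
    vog = [0.0, None, None, 3.0, None]
    print(fuse_psog_vog(psog, vog))  # [0.0, 1.0, 2.0, 3.0, 4.0]
```

Between snapshots the fused output simply carries the last offset forward, so a shift that occurs after a snapshot stays uncorrected until the next one arrives.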

Photosensor Oculography: Survey and Parametric Analysis of Designs using Model-Based Simulation

Jul 19, 2017

Abstract: This paper presents a renewed overview of photosensor oculography (PSOG), an eye-tracking technique based on the principle of using simple photosensors to measure the amount of reflected (usually infrared) light as the eye rotates. Photosensor oculography can provide measurements with high precision, low latency and reduced power consumption, which makes it an attractive option for eye-tracking in emerging head-mounted interaction devices, e.g. augmented and virtual reality (AR/VR) headsets. In this work, we employ an adjustable simulation framework as a common basis for an exploratory study of the eye-tracking behavior of different photosensor oculography designs. Through these experiments, we explore how varying some basic design parameters affects the resulting accuracy and cross-talk, which are crucial characteristics for the seamless operation of human-computer interaction applications based on eye-tracking. Our experimental results reveal the design trade-offs needed to balance the competing conditions that lead to optimal performance for different eye-tracking characteristics. We also present the transformations that arise in the eye-tracking output when sensor shifts occur, and assess the resulting degradation in accuracy for different combinations of eye movements and sensor shifts.
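
To make the PSOG principle concrete, here is a minimal sketch of one simple way to turn reflected-light readings into a gaze angle. It assumes a hypothetical pair of photosensors on opposite sides of the eye whose normalized difference varies roughly linearly with horizontal rotation over a small range, and fits that relation by least squares during calibration. The names `differential_signal` and `fit_linear_calibration` are illustrative; the paper's simulation framework models the designs in far more detail.

```python
from typing import Sequence, Tuple


def differential_signal(left: float, right: float) -> float:
    """Normalized difference of two opposing photosensor readings."""
    total = left + right
    if total == 0:
        raise ValueError("sensor readings sum to zero")
    return (right - left) / total


def fit_linear_calibration(signals: Sequence[float], angles: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of angle = gain * signal + bias."""
    n = len(signals)
    if n != len(angles) or n < 2:
        raise ValueError("need at least two paired calibration points")
    mean_s = sum(signals) / n
    mean_a = sum(angles) / n
    sxx = sum((s - mean_s) ** 2 for s in signals)
    if sxx == 0:
        raise ValueError("calibration signals must not all be equal")
    sxy = sum((s - mean_s) * (a - mean_a) for s, a in zip(signals, angles))
    gain = sxy / sxx
    return gain, mean_a - gain * mean_s


if __name__ == "__main__":
    # Hypothetical readings while the subject fixates targets at -10, 0, +10 deg.
    readings = [(0.6, 0.4), (0.5, 0.5), (0.4, 0.6)]
    signals = [differential_signal(left, right) for left, right in readings]
    gain, bias = fit_linear_calibration(signals, [-10.0, 0.0, 10.0])
    # Map a new reading to a gaze angle with the fitted model.
    print(round(gain * differential_signal(0.45, 0.55) + bias, 3))
```

The normalized difference is used so that a uniform change in illumination, which scales both readings by the same factor, cancels out of the signal.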

A Study on the Extraction and Analysis of a Large Set of Eye Movement Features during Reading

Mar 27, 2017

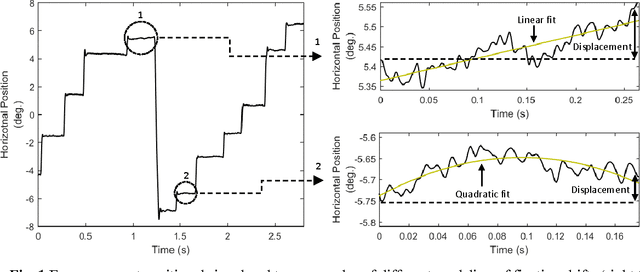

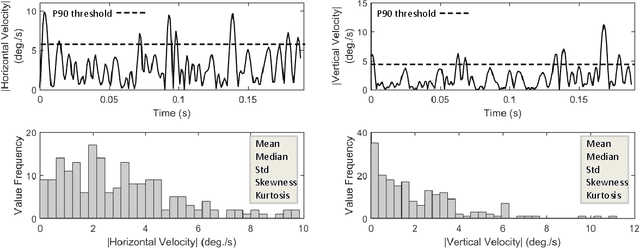

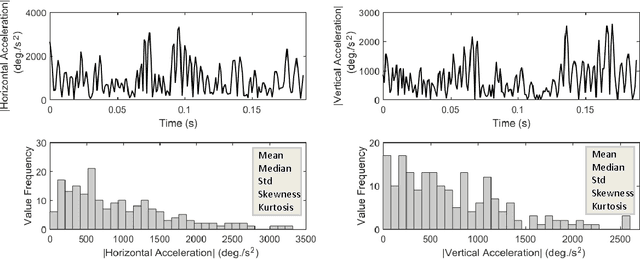

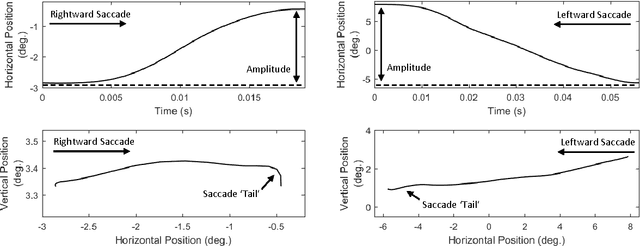

Abstract: This work presents a study on the extraction and analysis of a set of 101 categories of eye movement features from three types of eye movement events: fixations, saccades, and post-saccadic oscillations. The eye movements were recorded during a reading task. For feature categories with multiple instances in a recording, we extract corresponding feature subtypes by calculating descriptive statistics on the distributions of these instances. A unified framework of detailed descriptions and mathematical formulas is provided for the extraction of the feature set. The feature values are analyzed using a large database of eye movement recordings from a normative population of 298 subjects. We demonstrate the central tendency and overall variability of feature values across the experimental population and, more importantly, quantify the test-retest reliability (repeatability) of each separate feature. The described methods and analysis can provide valuable tools in fields that study eye movements, such as behavioral studies, attention and cognition research, medical research, biometric recognition, and human-computer interaction.
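
Features that occur many times in one recording (for example, the duration of every fixation) are reduced to subtypes via descriptive statistics. A minimal sketch using Python's standard `statistics` module; the statistics chosen here (mean, median, standard deviation, interquartile range) and the `name_stat` naming scheme are assumptions for illustration, not the paper's exact subtype list.

```python
import statistics
from typing import Dict, Sequence


def feature_subtypes(name: str, instances: Sequence[float]) -> Dict[str, float]:
    """Summarize all instances of one feature within a single recording."""
    if len(instances) < 2:
        raise ValueError("need at least two instances to compute subtypes")
    # Quartiles with linear interpolation between sorted data points.
    q1, _, q3 = statistics.quantiles(instances, n=4, method="inclusive")
    return {
        f"{name}_mean": statistics.fmean(instances),
        f"{name}_median": statistics.median(instances),
        f"{name}_sd": statistics.stdev(instances),  # sample standard deviation
        f"{name}_iqr": q3 - q1,
    }


if __name__ == "__main__":
    # Hypothetical fixation durations (ms) from one reading recording.
    durations = [200.0, 250.0, 300.0, 350.0]
    print(feature_subtypes("fix_duration", durations))
```

Robust statistics such as the median and IQR are included alongside the mean and SD because per-recording distributions of event features are often skewed by a few unusually long or short events.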

Method to Assess the Temporal Persistence of Potential Biometric Features: Application to Oculomotor, and Gait-Related Databases

Sep 13, 2016

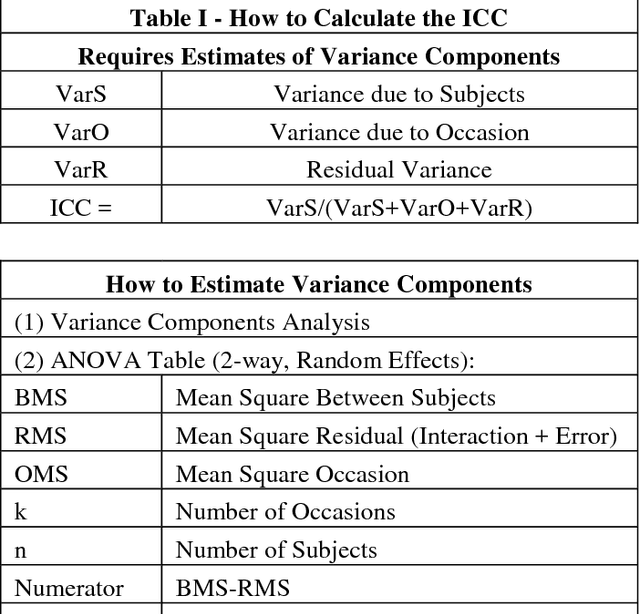

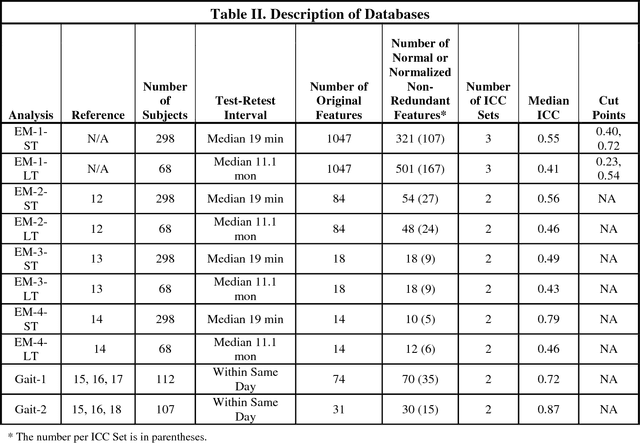

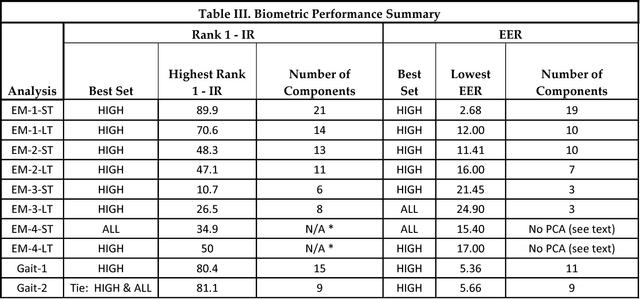

Abstract: Although temporal persistence, or permanence, is a well-understood requirement for optimal biometric features, there is no general agreement on how to assess it. We suggest that the best way to assess temporal persistence is to perform a test-retest study and assess test-retest reliability. For ratio-scale features that are normally distributed, this is best done using the Intraclass Correlation Coefficient (ICC). For 10 distinct data sets (8 eye-movement related and 2 gait related), we calculated the test-retest reliability ("temporal persistence") of each feature, and compared the biometric performance of high-ICC features to that of lower-ICC features and of the set of all features. We demonstrate that using a subset of only high-ICC features produced superior Rank-1 Identification Rate (Rank-1-IR) performance in 9 of 10 databases (p = 0.01, one-tailed). For Equal Error Rate (EER), using a subset of only high-ICC features produced superior performance in 8 of 10 databases (p = 0.055, one-tailed). In general, then, prescreening potential biometric features and choosing only highly reliable features will yield better performance than using lower-ICC features or the set of all features combined. We hypothesize that this would likely be the case for any biometric modality where the features can be expressed as quantitative values on an interval or ratio scale, assuming an adequate number of relatively independent features.
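
The ICC comes in several forms, and the abstract does not say which one the authors used. A minimal sketch, assuming the two-way mixed, consistency, single-measure form usually written ICC(3,1) (Shrout and Fleiss), computed from two-way ANOVA mean squares on a subjects x sessions table. The names `icc_3_1` and `select_reliable` and the default threshold of 0.7 are hypothetical choices for illustration.

```python
from typing import Dict, List, Sequence


def icc_3_1(scores: Sequence[Sequence[float]]) -> float:
    """ICC(3,1) = (MS_R - MS_E) / (MS_R + (k - 1) * MS_E).

    scores[i][j] is the value of one feature for subject i in session j.
    MS_R is the between-subjects mean square and MS_E the residual mean
    square of a two-way ANOVA (subjects x sessions, no replication).
    """
    n = len(scores)
    k = len(scores[0]) if n else 0
    if n < 2 or k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need a rectangular table of >= 2 subjects x >= 2 sessions")
    grand = sum(map(sum, scores)) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ms_r = ss_rows / (n - 1)
    ms_e = (ss_total - ss_rows - ss_cols) / ((n - 1) * (k - 1))
    denom = ms_r + (k - 1) * ms_e
    if denom == 0:
        raise ValueError("ICC is undefined: no between-subject or residual variance")
    return (ms_r - ms_e) / denom


def select_reliable(features: Dict[str, Sequence[Sequence[float]]], threshold: float = 0.7) -> List[str]:
    """Sorted names of features whose ICC meets the (hypothetical) threshold."""
    return sorted(name for name, table in features.items() if icc_3_1(table) >= threshold)


if __name__ == "__main__":
    features = {
        "stable": [[1.0, 1.1], [2.0, 2.1], [3.0, 2.9]],
        "unstable": [[1.0, 3.0], [2.0, 2.0], [3.0, 1.0]],
    }
    print(select_reliable(features))  # ['stable']
```

Because the consistency form ignores a constant shift between sessions, a feature whose values all rise by the same amount on retest still scores an ICC of 1; an absolute-agreement form such as ICC(2,1) would penalize that shift.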
