Oleg Kupervasser

Visual navigation for airborne control of ground robots from tethered platform: creation of the first prototype

Jan 05, 2019

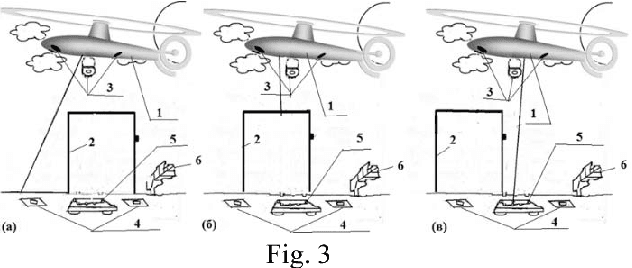

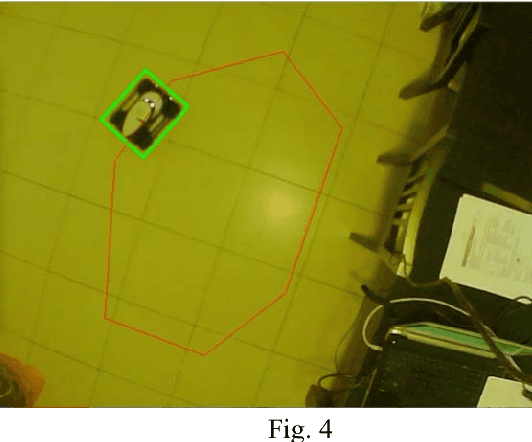

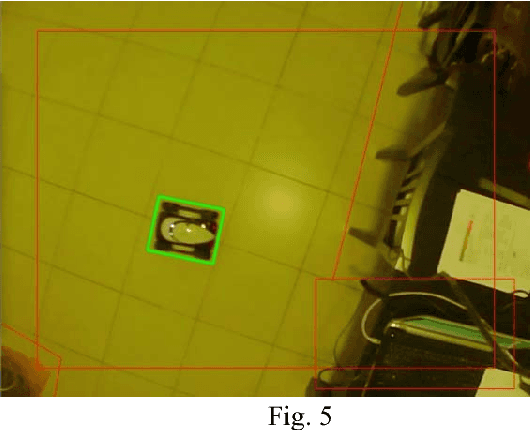

Abstract: We propose control systems for the coordination of ground robots. We develop efficient robot coordination using devices located on towers or on a tethered aerial apparatus, which track the robots in a controlled area and monitor their environment, including natural and artificial markings. A simple prototype of such a system was created in the Laboratory of Applied Mathematics of Ariel University (under the supervision of Prof. Domoshnitsky Alexander) in collaboration with the company TRANSIST VIDEO LLC (Skolkovo, Moscow). We plan to create a much more complex prototype using a Kamin grant (Israel).

* 11 pages, 7 figures

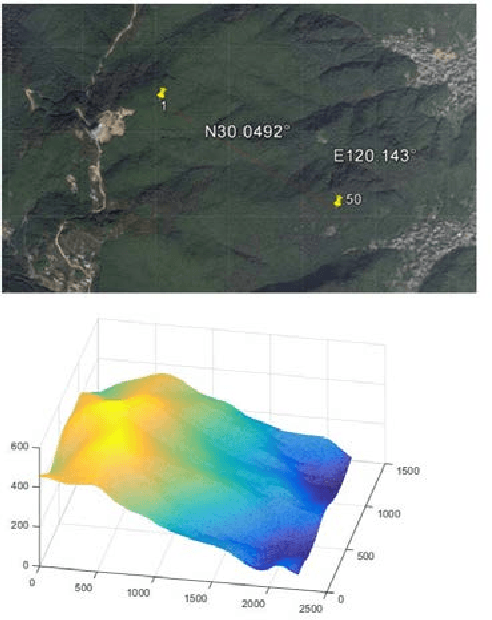

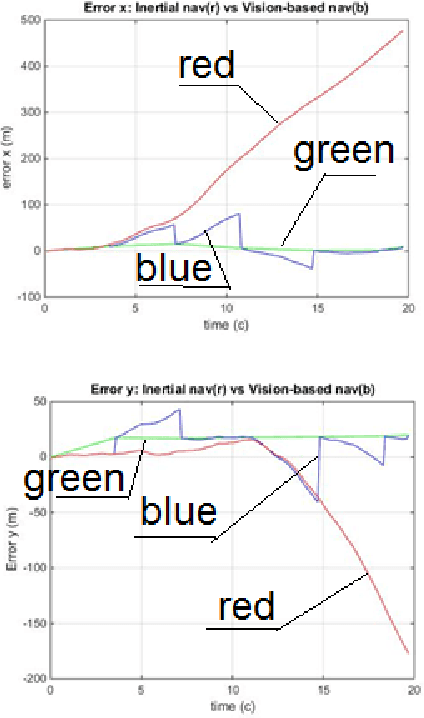

Robust positioning of drones for land use monitoring in strong terrain relief using vision-based navigation

Feb 20, 2018

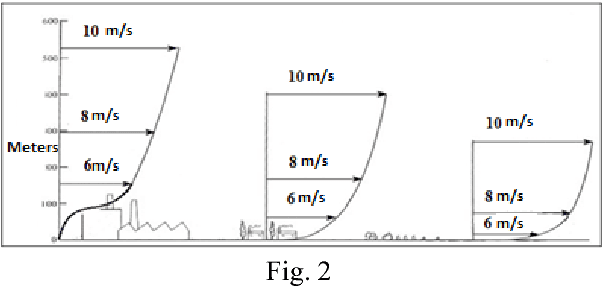

Abstract: For land use monitoring, the main problem is robust positioning in urban canyons and strong terrain relief when only GPS is used. Indeed, satellite signal reflection and shielding in urban canyons and strong terrain relief cause problems with correct positioning. Using GNSS-RTK does not solve the problem completely, because in some complex situations the whole satellite system works incorrectly. We turn this weakness (urban canyons and strong terrain relief) into an advantage by using vision-based navigation with a map of the terrain relief. We investigate and demonstrate the effectiveness of this technology in the Xiaoshan region of China. The accuracy of the vision-based navigation system corresponds to that expected for these conditions. It was concluded that the maximum position error of vision-based navigation is 20 m and the maximum Euler angle error is 0.83 degrees. In the case of camera movement, the maximum position error of vision-based navigation is 30 m and the maximum Euler angle error is 2.2 degrees.

* 7 pages, 7 figures

Precision improvement of MEMS gyros for indoor mobile robots with horizontal motion inspired by methods of TRIZ

Mar 18, 2014

Abstract: In this paper, the problem of improving the precision of MEMS gyro sensors on indoor robots with horizontal motion is solved by the methods of TRIZ ("the theory of inventive problem solving").

* 6 pages; the paper is accepted to the 9th IEEE International Conference on Nano/Micro Engineered and Molecular Systems, Hawaii, USA (IEEE-NEMS 2014) as an oral presentation

The Mysterious Optimality of Naive Bayes: Estimation of the Probability in the System of "Classifiers"

Aug 24, 2012

Abstract: Bayes Classifiers are currently widely used for recognition, identification and knowledge discovery. The fields of application include, for example, image processing, medicine and chemistry (QSAR). Yet, mysteriously, the Naive Bayes Classifier usually gives very good recognition results, which cannot be improved considerably by more complex Bayes Classifier models. We present here a simple proof of the optimality of the Naive Bayes Classifier that can explain this interesting fact. The derivation in the current paper is based on arXiv:cs/0202020v1.

* 9 pages, 1 figure; all changes in the second version were made by Kupervasser only

Recovering Epipolar Geometry from Images of Smooth Surfaces

Jul 19, 2012

Abstract: We present four methods for recovering the epipolar geometry from images of smooth surfaces. Existing methods for recovering epipolar geometry use corresponding feature points, which cannot be found in such images. The first method is based on finding corresponding characteristic points created by illumination (ICPM - illumination characteristic points' method (PM)). The second method is based on corresponding tangency points created by tangents from the epipoles to the outlines of smooth bodies (OTPM - outline tangent PM). These two methods are exact and give correct results for real images, because the positions of the corresponding illumination characteristic points and the corresponding outlines are known with small errors. However, the second method is limited either to special types of scenes or to restricted camera motion. We also consider two more methods, termed CCPM (curve characteristic PM) and CTPM (curve tangent PM), for finding the epipolar geometry for images of smooth bodies based on a set of level curves with constant illumination intensity. The CCPM method is based on finding corresponding points on isophoto curves by correlating the curvatures of these curves. The CTPM method is based on the property that an epipolar line tangent to an isophoto curve maps into an epipolar line tangent to the corresponding isophoto curve. The standard method (SM) is based on knowledge of pairs of almost exactly corresponding points. The methods have been implemented and tested against SM on pairs of real images. Unfortunately, the last two methods give only a finite subset of solutions that includes the "good" solution. The exception is the case of "epipoles at infinity". The main reason is the inaccuracy of the constant-brightness assumption for smooth bodies. Outlines and illumination characteristic points, however, are not affected by this inaccuracy, so the first pair of methods gives exact results.

* accepted to "Pattern Recognition and Image Analysis" for publishing in 2013, 33 pages, 19 figures

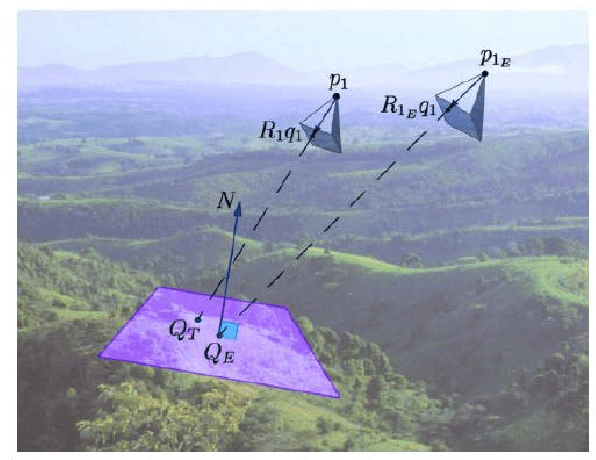

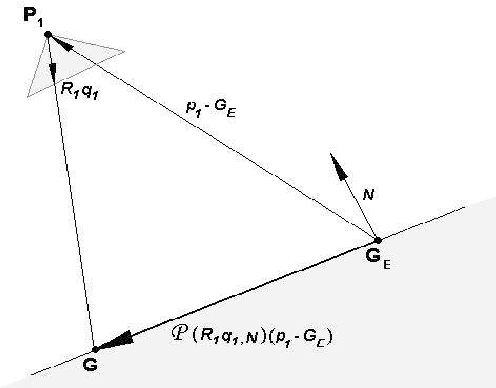

Vision-Based Navigation I: A navigation filter for fusing DTM/correspondence updates

Jul 10, 2012

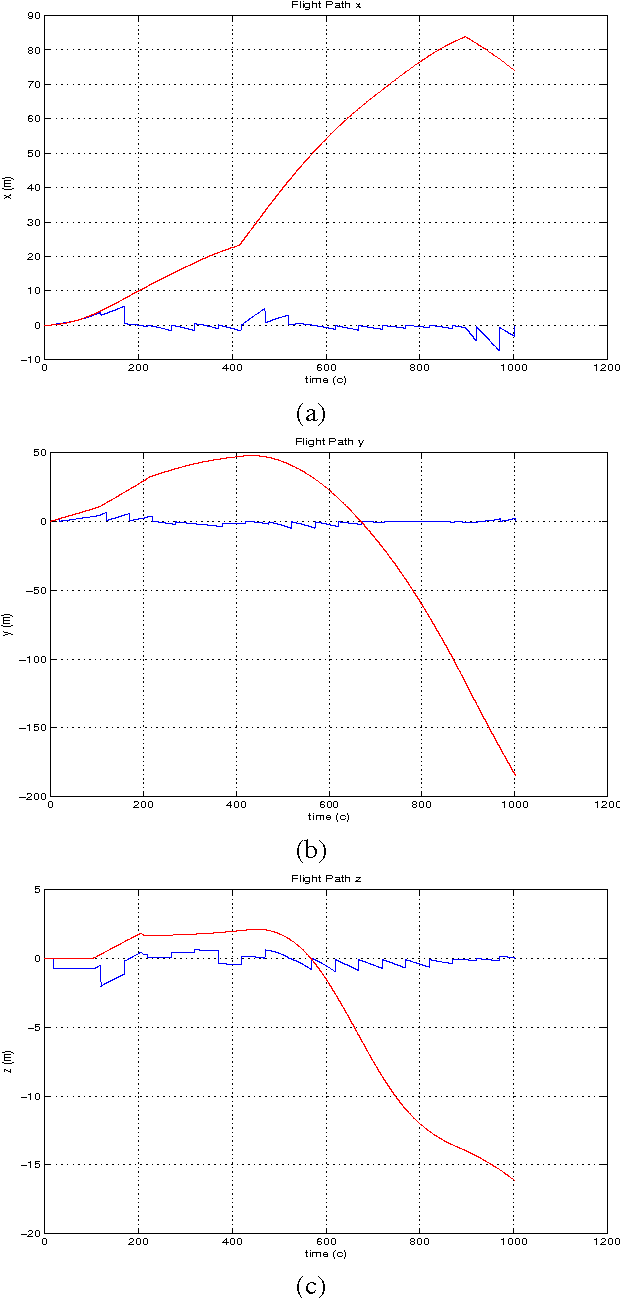

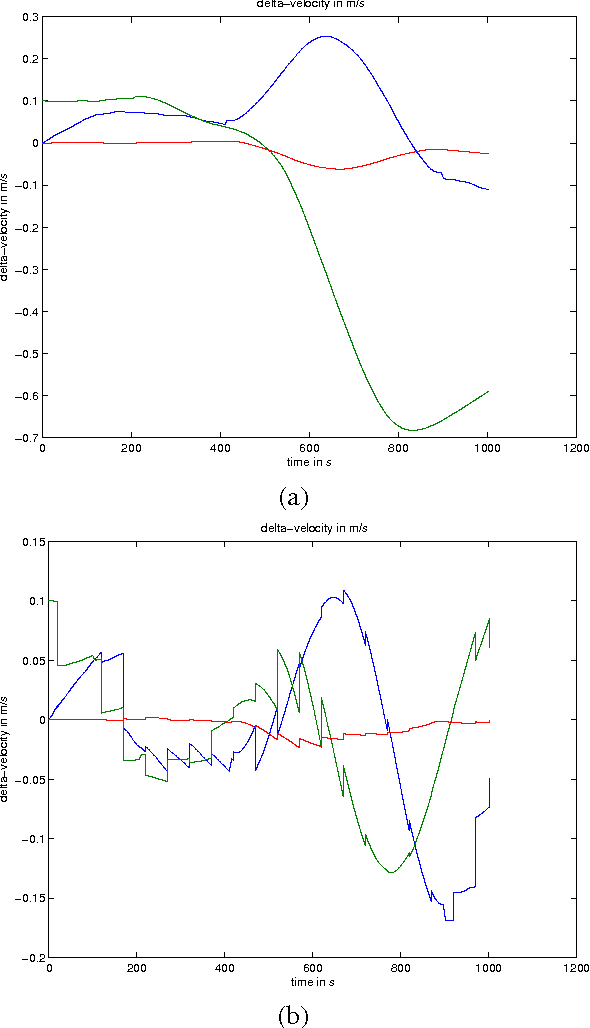

Abstract: An algorithm for pose and motion estimation using corresponding features in images and a digital terrain map is proposed. Using a Digital Terrain (or Digital Elevation) Map (DTM/DEM) as a global reference enables recovering the absolute position and orientation of the camera. To do this, the DTM is used to formulate a constraint between corresponding features in two consecutive frames. The utilization of these data is shown to improve the robustness and accuracy of the inertial navigation algorithm. An extended Kalman filter was used to combine the results of the inertial navigation algorithm and the proposed vision-based navigation algorithm. The feasibility of this algorithm is established through numerical simulations.

* 26 pages, 3 figures, in English and in Russian. arXiv admin note: substantial text overlap with arXiv:1106.6341, arXiv:1107.1470

Vision-Based Navigation III: Pose and Motion from Omnidirectional Optical Flow and a Digital Terrain Map

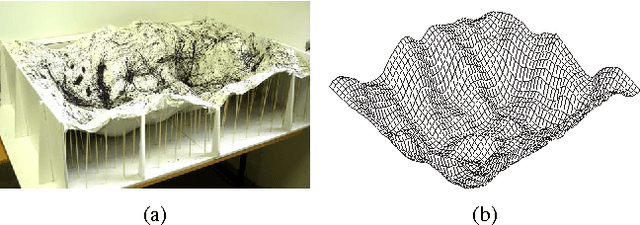

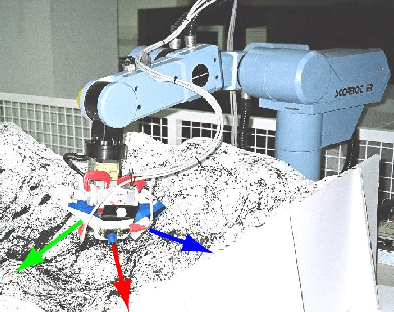

Aug 16, 2011

Abstract: An algorithm for pose and motion estimation using corresponding features in omnidirectional images and a digital terrain map is proposed. In a previous paper, such an algorithm was considered for a regular camera. Using a Digital Terrain (or Digital Elevation) Map (DTM/DEM) as a global reference enables recovering the absolute position and orientation of the camera. To do this, the DTM is used to formulate a constraint between corresponding features in two consecutive frames. In this paper, these constraints are extended to handle non-central projection, as is the case with many omnidirectional systems. The utilization of omnidirectional data is shown to improve the robustness and accuracy of the navigation algorithm. The feasibility of this algorithm is established through lab experimentation with two kinds of omnidirectional acquisition systems: the first is a polydioptric camera, while the second is a catadioptric camera.

* 6 pages, 9 figures

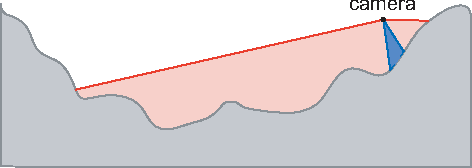

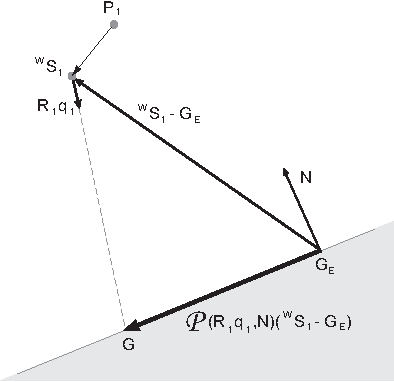

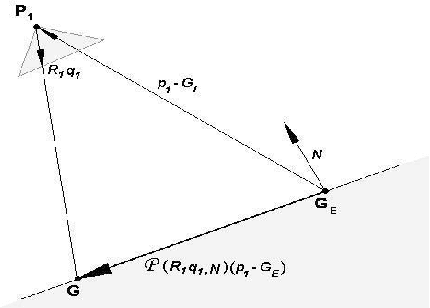

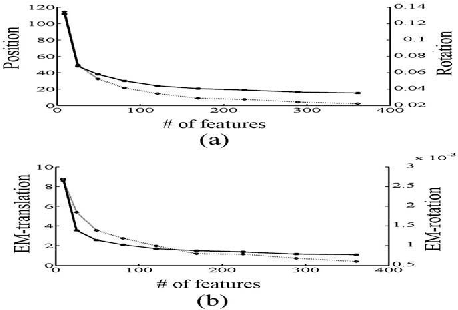

Vision-Based Navigation II: Error Analysis for a Navigation Algorithm based on Optical-Flow and a Digital Terrain Map

Aug 11, 2011

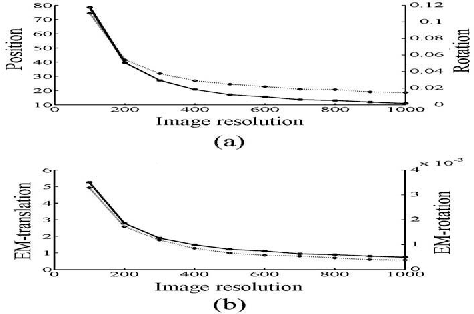

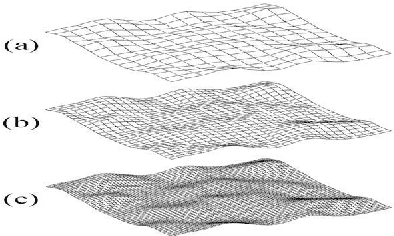

Abstract: The paper deals with the error analysis of a navigation algorithm that uses as input a sequence of images acquired by a moving camera and a Digital Terrain Map (DTM) of the region being imaged by the camera during the motion. The main sources of error are more or less straightforward to identify: camera resolution, structure of the observed terrain and DTM accuracy, field of view, and camera trajectory. After characterizing and modeling these error sources in the framework of the CDTM algorithm, a closed-form expression for their effect on the pose and motion errors of the camera can be found. The analytic expression provides a priori measures of the accuracy in terms of the parameters mentioned above.

* 10 pages, 12 figures, 2 tables
