Nuno Gracias

IFREMER

BenthiCat: An opti-acoustic dataset for advancing benthic classification and habitat mapping

Oct 06, 2025

Abstract: Benthic habitat mapping is fundamental for understanding marine ecosystems, guiding conservation efforts, and supporting sustainable resource management. Yet, the scarcity of large, annotated datasets limits the development and benchmarking of machine learning models in this domain. This paper introduces a thorough multi-modal dataset, comprising about a million side-scan sonar (SSS) tiles collected along the coast of Catalonia (Spain), complemented by bathymetric maps and a set of co-registered optical images from targeted surveys using an autonomous underwater vehicle (AUV). Approximately 36,000 of the SSS tiles have been manually annotated with segmentation masks to enable supervised fine-tuning of classification models. All the raw sensor data, together with mosaics, are also released to support further exploration and algorithm development. To address challenges in multi-sensor data fusion for AUVs, we spatially associate optical images with corresponding SSS tiles, facilitating self-supervised, cross-modal representation learning. Accompanying open-source preprocessing and annotation tools are provided to enhance accessibility and encourage research. This resource aims to establish a standardized benchmark for underwater habitat mapping, promoting advancements in autonomous seafloor classification and multi-sensor integration.
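
The spatial association between optical images and SSS tiles mentioned in the abstract can be pictured with a minimal sketch. This is not the dataset's released tooling: the `Tile` record, the `nearest_tile` function, the use of tile centres in a projected metric coordinate system, and the 10 m distance threshold are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Tile:
    """Hypothetical record for one SSS tile (not the dataset's actual schema)."""
    tile_id: str
    easting: float   # tile centre in metres, projected CRS assumed
    northing: float  # tile centre in metres, projected CRS assumed


def nearest_tile(img_easting: float, img_northing: float,
                 tiles: Sequence[Tile],
                 max_dist_m: float = 10.0) -> Optional[Tile]:
    """Return the tile whose centre is closest to the image position.

    Returns None when no tile centre lies within max_dist_m, so that
    images far from any sonar coverage are left unpaired.
    """
    best: Optional[Tile] = None
    best_dist = max_dist_m
    for tile in tiles:
        dist = math.hypot(tile.easting - img_easting,
                          tile.northing - img_northing)
        if dist <= best_dist:
            best, best_dist = tile, dist
    return best
```

A linear scan is enough to show the idea; with about a million tiles, a spatial index such as a k-d tree would likely be preferable.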

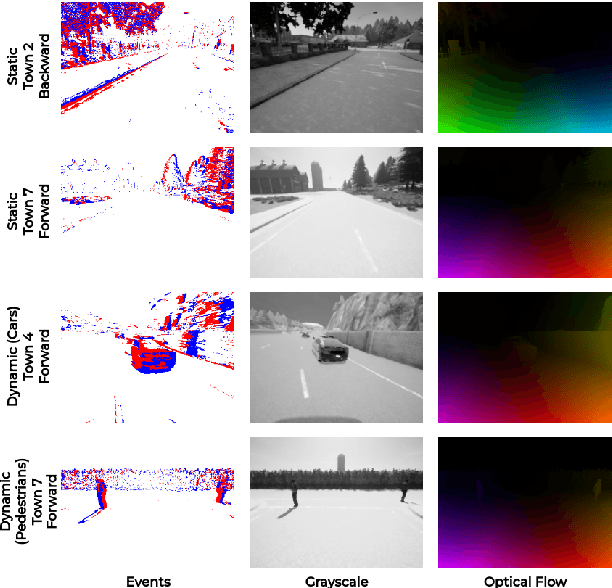

eStonefish-scenes: A synthetically generated dataset for underwater event-based optical flow prediction tasks

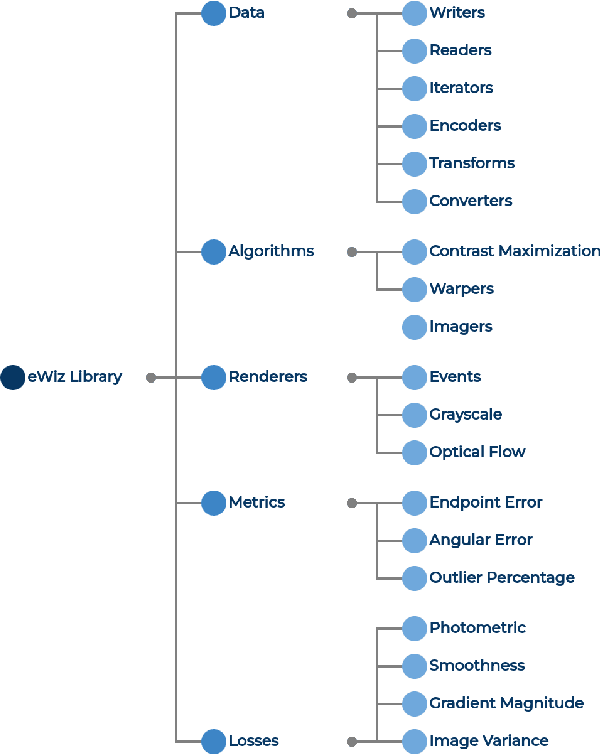

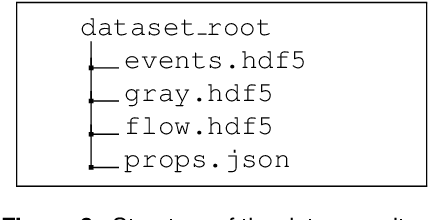

May 19, 2025

Abstract: The combined use of event-based vision and Spiking Neural Networks (SNNs) is expected to significantly impact robotics, particularly in tasks like visual odometry and obstacle avoidance. While existing real-world event-based datasets for optical flow prediction, typically captured with Unmanned Aerial Vehicles (UAVs), offer valuable insights, they are limited in diversity and scalability, and are challenging to collect. Moreover, there is a notable lack of labelled datasets for underwater applications, which hinders the integration of event-based vision with Autonomous Underwater Vehicles (AUVs). To address this, synthetic datasets could provide a scalable solution while bridging the gap between simulation and reality. In this work, we introduce eStonefish-scenes, a synthetic event-based optical flow dataset based on the Stonefish simulator. Along with the dataset, we present a data generation pipeline that enables the creation of customizable underwater environments. This pipeline allows for simulating dynamic scenarios, such as biologically inspired schools of fish exhibiting realistic motion patterns, including obstacle avoidance and reactive navigation around corals. Additionally, we introduce a scene generator that can build realistic reef seabeds by randomly distributing coral across the terrain. To streamline data accessibility, we present eWiz, a comprehensive library designed for processing event-based data, offering tools for data loading, augmentation, visualization, encoding, and training data generation, along with loss functions and performance metrics.

Real-time Seafloor Segmentation and Mapping

Apr 14, 2025

Abstract: Posidonia oceanica is a species of seagrass whose meadows are highly dependent on rocks for their survival and conservation. In recent years, there has been a concerning global decline in this species, emphasizing the critical need for efficient monitoring and assessment tools. While deep learning-based semantic segmentation and visual automated monitoring systems have shown promise in a variety of applications, their performance in underwater environments remains challenging due to complex water conditions and limited datasets. This paper introduces a framework that combines machine learning and computer vision techniques to enable an autonomous underwater vehicle (AUV) to inspect the boundaries of Posidonia oceanica meadows autonomously. The framework incorporates an image segmentation module using an existing Mask R-CNN model and a strategy for Posidonia oceanica meadow boundary tracking. Furthermore, a new class dedicated to rocks is introduced to enhance the existing model, aiming to contribute to a comprehensive monitoring approach and provide a deeper understanding of the intricate interactions between the meadow and its surrounding environment. The image segmentation model is validated using real underwater images, while the overall inspection framework is evaluated in a realistic simulation environment, replicating actual monitoring scenarios with real underwater images. The results demonstrate that the proposed framework enables the AUV to autonomously accomplish the main tasks of underwater inspection and segmentation of rocks. Consequently, this work holds significant potential for the conservation and protection of marine environments, providing valuable insights into the status of Posidonia oceanica meadows and supporting targeted preservation efforts.

Stonefish: Supporting Machine Learning Research in Marine Robotics

Feb 17, 2025

Abstract: Simulations are highly valuable in marine robotics, offering a cost-effective and controlled environment for testing in the challenging conditions of underwater and surface operations. Given the high costs and logistical difficulties of real-world trials, simulators capable of capturing the operational conditions of subsea environments have become key in developing and refining algorithms for remotely-operated and autonomous underwater vehicles. This paper highlights recent enhancements to the Stonefish simulator, an advanced open-source platform supporting development and testing of marine robotics solutions. Key updates include a suite of additional sensors, such as an event-based camera, a thermal camera, and an optical flow camera, as well as visual light communication, support for tethered operations, improved thruster modelling, more flexible hydrodynamics, and enhanced sonar accuracy. These developments and an automated annotation tool significantly bolster Stonefish's role in marine robotics research, especially in the field of machine learning, where training data with a known ground truth is hard or impossible to collect.

eCARLA-scenes: A synthetically generated dataset for event-based optical flow prediction

Dec 12, 2024

Abstract: The joint use of event-based vision and Spiking Neural Networks (SNNs) is expected to have a large impact in robotics in the near future, in tasks such as visual odometry and obstacle avoidance. While researchers have used real-world event datasets for optical flow prediction, mostly captured with Unmanned Aerial Vehicles (UAVs), these datasets are limited in diversity and scalability, and are challenging to collect. Thus, synthetic datasets offer a scalable alternative by bridging the gap between reality and simulation. In this work, we address the lack of datasets by introducing eWiz, a comprehensive library for processing event-based data. It includes tools for data loading, augmentation, visualization, encoding, and generation of training data, along with loss functions and performance metrics. We further present synthetic event-based datasets and data generation pipelines for optical flow prediction tasks. Built on top of eWiz, eCARLA-scenes makes use of the CARLA simulator to simulate self-driving car scenarios. The ultimate goal of this dataset is the depiction of diverse environments while laying a foundation for advancing event-based camera applications in autonomous field vehicle navigation, paving the way for using SNNs on neuromorphic hardware such as the Intel Loihi.
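
The abstract does not name the performance metrics that eWiz ships. As an illustration only, the sketch below computes the average endpoint error (AEE), a metric commonly reported for optical flow prediction. It is plain Python and does not use or reproduce the eWiz API; the function name, the flat list-of-pairs flow representation, and the optional validity mask are assumptions.

```python
import math
from typing import Optional, Sequence, Tuple

Flow = Tuple[float, float]  # (u, v) displacement of one pixel, in pixels


def average_endpoint_error(pred: Sequence[Flow],
                           truth: Sequence[Flow],
                           valid: Optional[Sequence[bool]] = None) -> float:
    """Mean Euclidean distance between predicted and ground-truth flow vectors.

    `valid` optionally restricts the average to selected pixels, for
    example those where at least one event was recorded.
    """
    if len(pred) != len(truth):
        raise ValueError("pred and truth must have the same length")
    if valid is None:
        valid = [True] * len(pred)
    if len(valid) != len(pred):
        raise ValueError("valid must have the same length as pred")

    errors = [math.hypot(pu - tu, pv - tv)
              for (pu, pv), (tu, tv), keep in zip(pred, truth, valid)
              if keep]
    if not errors:
        raise ValueError("no valid pixels to evaluate")
    return sum(errors) / len(errors)
```

Masking matters for event cameras because pixels that see no brightness change produce no events, so a prediction there cannot be meaningfully scored.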

A Convolutional Vision Transformer for Semantic Segmentation of Side-Scan Sonar Data

Feb 24, 2023

Abstract: Distinguishing among different marine benthic habitat characteristics is of key importance in a wide set of seabed operations ranging from installations of oil rigs to laying networks of cables and monitoring the impact of humans on marine ecosystems. The Side-Scan Sonar (SSS) is a widely used imaging sensor in this regard. It produces high-resolution seafloor maps by logging the intensities of sound waves reflected back from the seafloor. In this work, we leverage these acoustic intensity maps to produce pixel-wise categorization of different seafloor types. We propose a novel architecture adapted from the Vision Transformer (ViT) in an encoder-decoder framework. Further, in doing so, the applicability of ViTs is evaluated on smaller datasets. To overcome the lack of CNN-like inductive biases, thereby making ViTs more conducive to applications in low data regimes, we propose a novel feature extraction module to replace the Multi-layer Perceptron (MLP) block within transformer layers and a novel module to extract multiscale patch embeddings. A lightweight decoder is also proposed to complement this design in order to further boost multiscale feature extraction. With the modified architecture, we achieve state-of-the-art results and also meet real-time computational requirements. We make our code available at https://github.com/hayatrajani/s3seg-vit

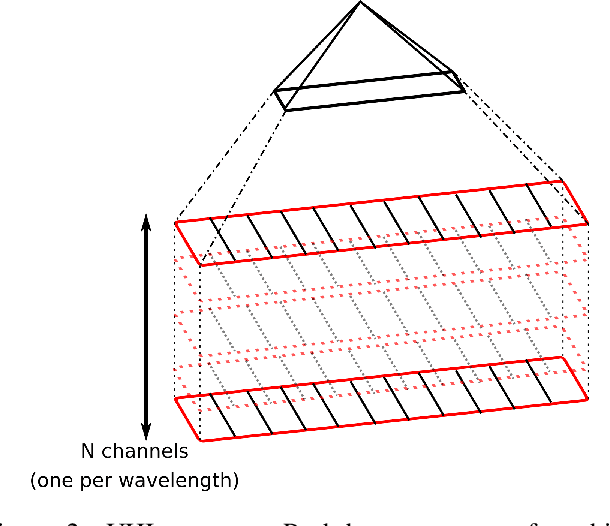

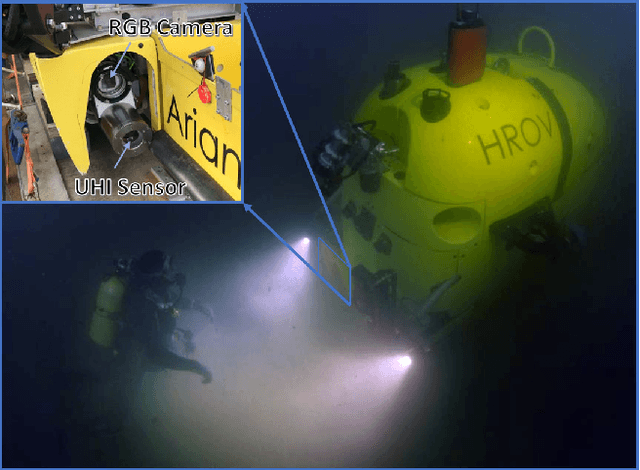

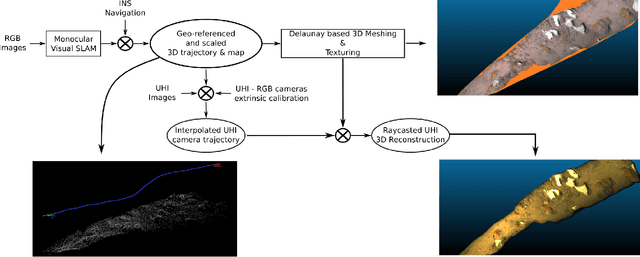

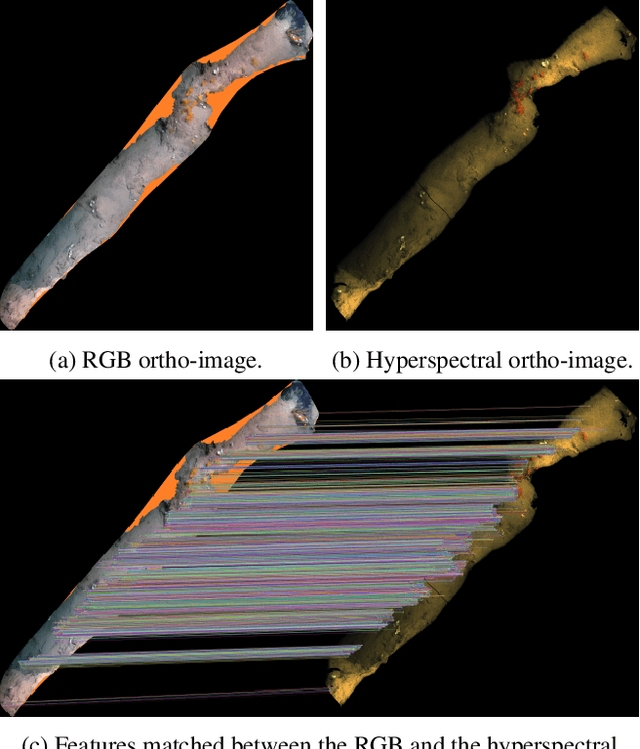

Hyperspectral 3D Mapping of Underwater Environments

Oct 13, 2021

Abstract: Hyperspectral imaging has been increasingly used for underwater survey applications over the past years. As many hyperspectral cameras work as push-broom scanners, their use is usually limited to the creation of photo-mosaics based on a flat surface approximation and by interpolating the camera pose from dead-reckoning navigation. Yet, because of drift in the navigation and the mostly wrong flat surface assumption, the quality of the obtained photo-mosaics is often too low to support adequate analysis. In this paper we present an initial method for creating hyperspectral 3D reconstructions of underwater environments. By fusing the data gathered by a classical RGB camera, an inertial navigation system and a hyperspectral push-broom camera, we show that the proposed method creates highly accurate 3D reconstructions with hyperspectral textures. We propose to combine techniques from simultaneous localization and mapping, structure-from-motion and 3D reconstruction and advantageously use them to create 3D models with hyperspectral texture, allowing us to overcome the flat surface assumption and the classical limitation of dead-reckoning navigation.

* ICCV'21 - Computer Vision in the Ocean Workshop

Automatic Scale Estimation of Structure from Motion based 3D Models using Laser Scalers

Jun 19, 2019

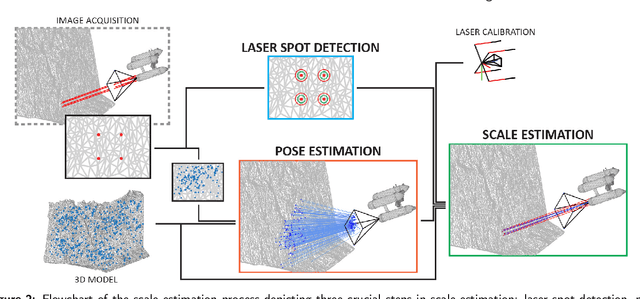

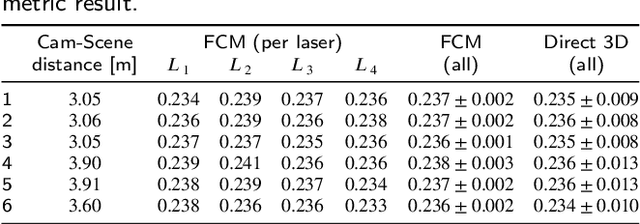

Abstract: Recent advances in structure-from-motion techniques are enabling many scientific fields to benefit from the routine creation of detailed 3D models. However, for a large number of applications, only a single camera is available, due to cost or space constraints in the survey platforms. Monocular structure-from-motion raises the issue of properly estimating the scale of the 3D models, in order to later use those models for metrology. The scale can be determined from the presence of visible objects of known dimensions, or from information on the magnitude of the camera motion provided by other sensors, such as GPS. This paper addresses the problem of accurately scaling 3D models created from monocular cameras in GPS-denied environments, such as in underwater applications. Motivated by the common availability of underwater laser scalers, we present two novel approaches. A fully-calibrated method enables the use of arbitrary laser setups, while a partially-calibrated method reduces the need for calibration by only assuming parallelism of the laser beams, with no constraints on the camera. The proposed methods have several advantages with respect to the existing methods. The need for laser alignment with the optical axis of the camera is removed, together with the extremely error-prone manual identification of image points on the 3D model. The performance of the methods and their applicability were evaluated on both data generated from a realistic 3D model and data collected during an oceanographic cruise in 2017. Three separate laser configurations have been tested, encompassing nearly all possible laser setups, to evaluate the effects of terrain roughness, noise, camera perspective angle and camera-scene distance. In the real scenario, the computation of 6 independent model scale estimates using our fully-calibrated approach produced values with a standard deviation of 0.3%.
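
The abstract reports the agreement between independent scale estimates as a percentage. One plausible reading, assumed here and not confirmed by the abstract, is the sample standard deviation expressed relative to the mean estimate. The sketch below shows that computation; the function name is hypothetical, and the code does not implement either of the paper's laser-based methods.

```python
import statistics
from typing import Sequence


def relative_std_percent(scale_estimates: Sequence[float]) -> float:
    """Sample standard deviation of the estimates as a percentage of their mean.

    Assumes at least two estimates and a non-zero mean scale.
    """
    if len(scale_estimates) < 2:
        raise ValueError("need at least two scale estimates")
    mean_scale = statistics.mean(scale_estimates)
    if mean_scale == 0:
        raise ValueError("mean scale must be non-zero")
    return 100.0 * statistics.stdev(scale_estimates) / mean_scale
```

Expressing the spread relative to the mean makes the figure independent of the arbitrary units of the unscaled monocular model.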
