Klemen Istenic

IFREMER

Hyperspectral 3D Mapping of Underwater Environments

Oct 13, 2021

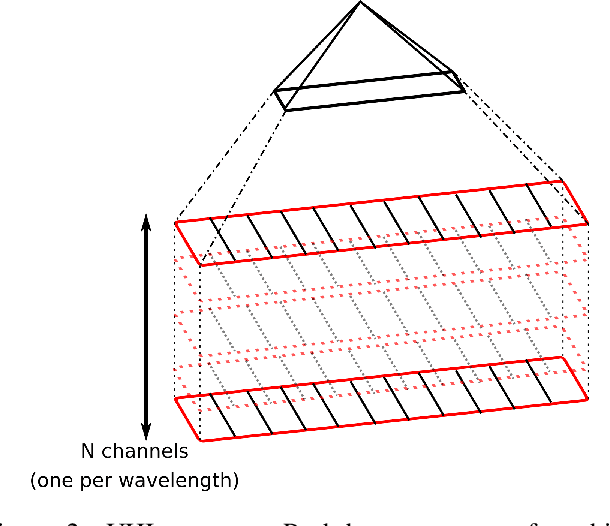

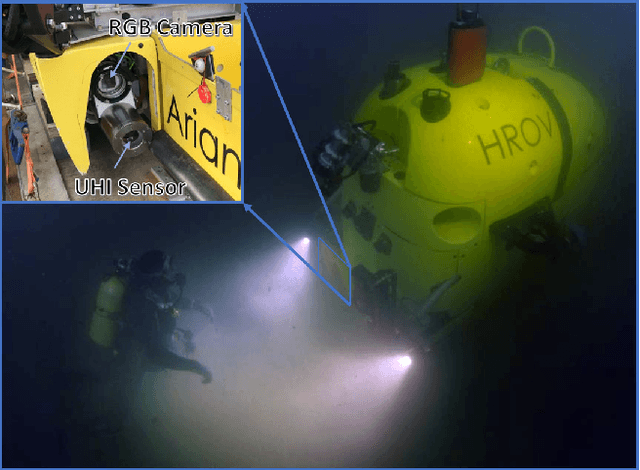

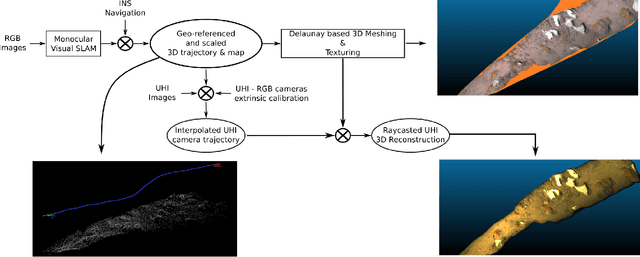

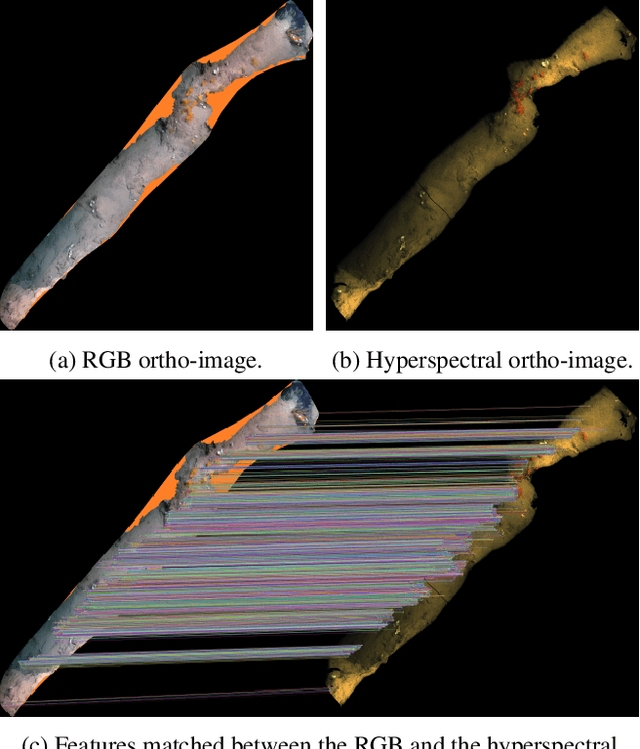

Abstract: Hyperspectral imaging has been increasingly used for underwater survey applications in recent years. As many hyperspectral cameras work as push-broom scanners, their use is usually limited to the creation of photo-mosaics based on a flat surface approximation and on camera poses interpolated from dead-reckoning navigation. Yet, because of drift in the navigation and because the flat surface assumption rarely holds, the quality of the obtained photo-mosaics is often too low to support adequate analysis. In this paper we present an initial method for creating hyperspectral 3D reconstructions of underwater environments. By fusing the data gathered by a classical RGB camera, an inertial navigation system and a hyperspectral push-broom camera, we show that the proposed method creates highly accurate 3D reconstructions with hyperspectral textures. We propose to combine techniques from simultaneous localization and mapping, structure-from-motion and 3D reconstruction to create 3D models with hyperspectral texture, allowing us to overcome the flat surface assumption and the classical limitation of dead-reckoning navigation.

* ICCV'21 - Computer Vision in the Ocean Workshop
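
The abstract above mentions that each push-broom scan line needs a camera pose interpolated from a navigation track. A minimal sketch of that step is given here for illustration only: it linearly interpolates position alone (orientation would need a rotation interpolation such as slerp), and the function name, trajectory samples and timestamps are all hypothetical, not taken from the paper.

```python
from bisect import bisect_right

def interpolate_position(times, positions, t):
    """Linearly interpolate a 3D position at time t.

    Assumes `times` is strictly increasing and holds at least two samples.
    """
    if not times[0] <= t <= times[-1]:
        raise ValueError("t lies outside the trajectory time span")
    # Index of the first sample strictly after t, clamped so that
    # (i - 1, i) is always a valid segment, including at t == times[-1].
    i = min(bisect_right(times, t), len(times) - 1)
    t0, t1 = times[i - 1], times[i]
    w = (t - t0) / (t1 - t0)
    p0, p1 = positions[i - 1], positions[i]
    return tuple(a + w * (b - a) for a, b in zip(p0, p1))

# Hypothetical trajectory samples (seconds, metres).
pose_times = [0.0, 1.0, 2.0]
pose_positions = [(0.0, 0.0, 0.0), (1.0, 0.0, -0.2), (2.0, 0.5, -0.2)]

# Hypothetical acquisition times of two hyperspectral scan lines.
scan_line_times = [0.25, 1.5]
scan_line_positions = [
    interpolate_position(pose_times, pose_positions, t) for t in scan_line_times
]
```

Any drift in the input track carries straight through this interpolation, which is why the source of the poses matters more than the interpolation scheme itself.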

Automatic Scale Estimation of Structure from Motion based 3D Models using Laser Scalers

Jun 19, 2019

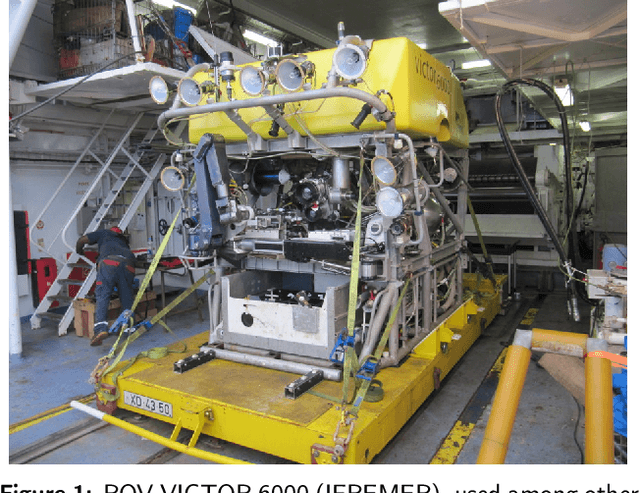

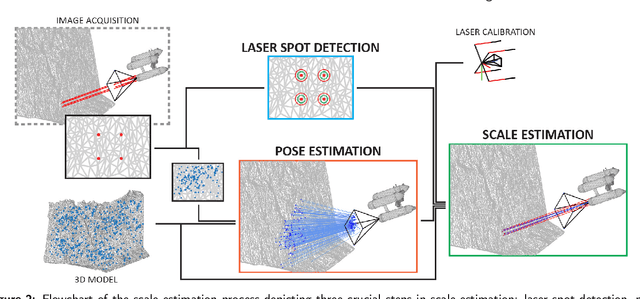

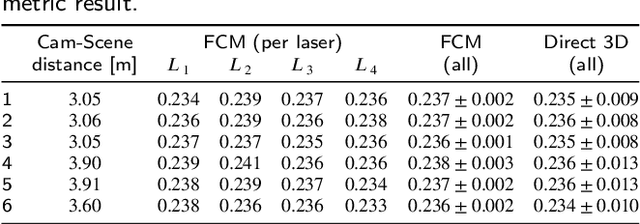

Abstract: Recent advances in structure-from-motion techniques are enabling many scientific fields to benefit from the routine creation of detailed 3D models. However, for a large number of applications, only a single camera is available, due to cost or space constraints in the survey platforms. Monocular structure-from-motion raises the issue of properly estimating the scale of the 3D models, in order to later use those models for metrology. The scale can be determined from the presence of visible objects of known dimensions, or from information on the magnitude of the camera motion provided by other sensors, such as GPS. This paper addresses the problem of accurately scaling 3D models created from monocular cameras in GPS-denied environments, such as in underwater applications. Motivated by the common availability of underwater laser scalers, we present two novel approaches. A fully-calibrated method enables the use of arbitrary laser setups, while a partially-calibrated method reduces the need for calibration by only assuming that the laser beams are parallel, with no constraints on the camera. The proposed methods have several advantages over existing methods. The need for laser alignment with the optical axis of the camera is removed, together with the extremely error-prone manual identification of image points on the 3D model. The performance and applicability of the methods were evaluated on both data generated from a realistic 3D model and data collected during an oceanographic cruise in 2017. Three separate laser configurations were tested, encompassing nearly all possible laser setups, to evaluate the effects of terrain roughness, noise, camera perspective angle and camera-scene distance. In the real scenario, the computation of 6 independent model scale estimates using our fully-calibrated approach produced values with a standard deviation of 0.3%.
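
As a rough illustration of the basic idea that scale can be recovered from a known dimension, the sketch below divides a known real-world distance by the matching distance in the unscaled model, then reports the spread of several estimates as a percentage of their mean. It is not the fully-calibrated or partially-calibrated method from the paper. It naively assumes that the distance between two laser spots equals the beam separation, which only holds when parallel beams hit a surface perpendicular to them. All names and numbers are hypothetical.

```python
import math
import statistics

def scale_from_known_distance(p_a, p_b, true_distance_m):
    """Scale factor mapping model units to metres, from one known distance."""
    model_distance = math.dist(p_a, p_b)
    if model_distance == 0.0:
        raise ValueError("the two model points coincide")
    return true_distance_m / model_distance

def relative_std_percent(values):
    """Sample standard deviation expressed as a percentage of the mean."""
    return 100.0 * statistics.stdev(values) / statistics.mean(values)

# Hypothetical laser spot pairs in unscaled model coordinates,
# with an assumed beam separation of 0.10 m.
spot_pairs = [
    ((0.0, 0.0, 1.0), (0.5, 0.0, 1.0)),
    ((1.0, 2.0, 1.5), (1.0, 2.5, 1.5)),
    ((3.0, 0.0, 2.0), (3.0, 0.0, 2.5)),
]
scales = [scale_from_known_distance(a, b, 0.10) for a, b in spot_pairs]
model_scale = statistics.mean(scales)      # averaged scale estimate
spread = relative_std_percent(scales)      # consistency of the estimates, in %
```

Comparing independent estimates in this way mirrors how the abstract reports consistency, as a standard deviation across several scale estimates.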
