Nirmalya Ghosh

A Two-Stage Multiple Instance Learning Framework for the Detection of Breast Cancer in Mammograms

Apr 24, 2020

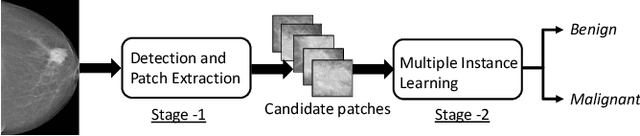

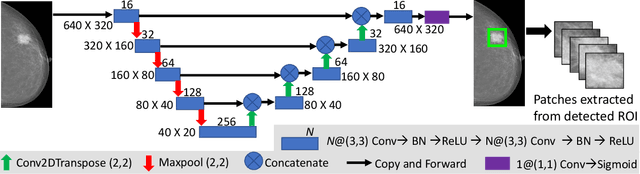

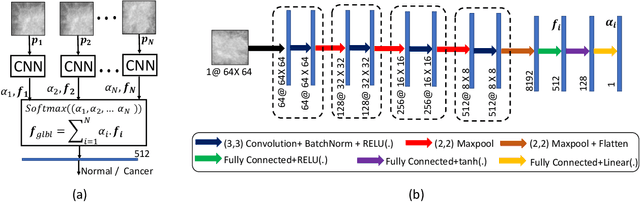

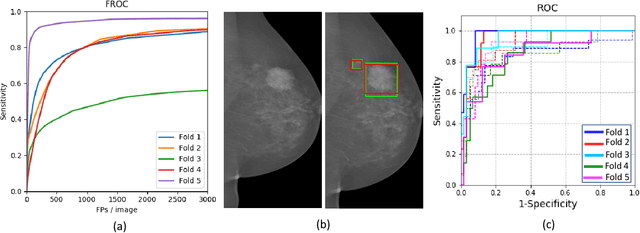

Abstract: Mammograms are commonly employed in the large-scale screening of breast cancer, which is primarily characterized by the presence of malignant masses. However, automated image-level detection of malignancy is a challenging task given the small size of the mass regions and the difficulty in discriminating between malignant masses, benign masses and healthy dense fibro-glandular tissue. To address these issues, we explore a two-stage Multiple Instance Learning (MIL) framework. A Convolutional Neural Network (CNN) is trained in the first stage to extract local candidate patches in the mammograms that may contain either a benign or malignant mass. The second stage employs a MIL strategy for an image-level benign vs. malignant classification. A global image-level feature is computed as a weighted average of patch-level features learned using a CNN. Our method performed well on the task of localization of masses with an average Precision/Recall of 0.76/0.80 and achieved an average AUC of 0.91 on the image-level classification task using five-fold cross-validation on the INbreast dataset. Restricting the MIL only to the candidate patches extracted in Stage 1 led to a significant improvement in classification performance in comparison to a dense extraction of patches from the entire mammogram.
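The weighted-average pooling mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not say how the patch weights are obtained, so the softmax over per-patch scores used here is an assumption, and the names `mil_weighted_pool`, `patch_features` and `patch_scores` are hypothetical.

```python
import numpy as np

def mil_weighted_pool(patch_features, patch_scores):
    """Pool patch-level features into one image-level feature.

    ASSUMPTION: weights come from a softmax over per-patch scores;
    the abstract only states that a weighted average is used.
    """
    feats = np.asarray(patch_features, dtype=float)   # shape (num_patches, dim)
    scores = np.asarray(patch_scores, dtype=float)    # shape (num_patches,)
    # Numerically stable softmax so the weights are positive and sum to 1.
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()
    # Weighted average of the patch features.
    return weights @ feats, weights

# Three toy patches with equal scores: the pooled feature is the plain mean.
feats = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
pooled, weights = mil_weighted_pool(feats, np.zeros(3))
```

With unequal scores, patches with higher scores contribute more to the image-level feature, which is the behaviour a MIL classifier relies on when only a few patches contain a mass.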

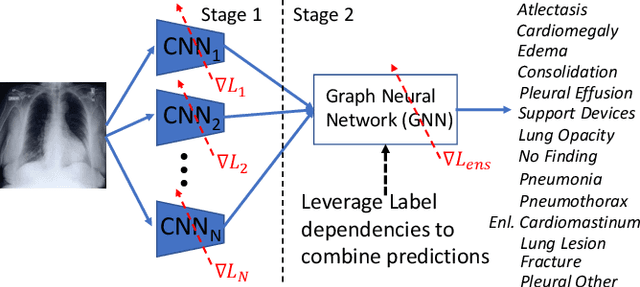

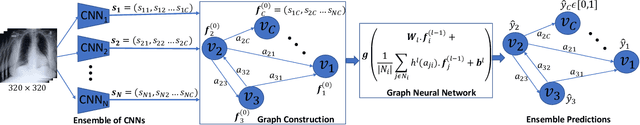

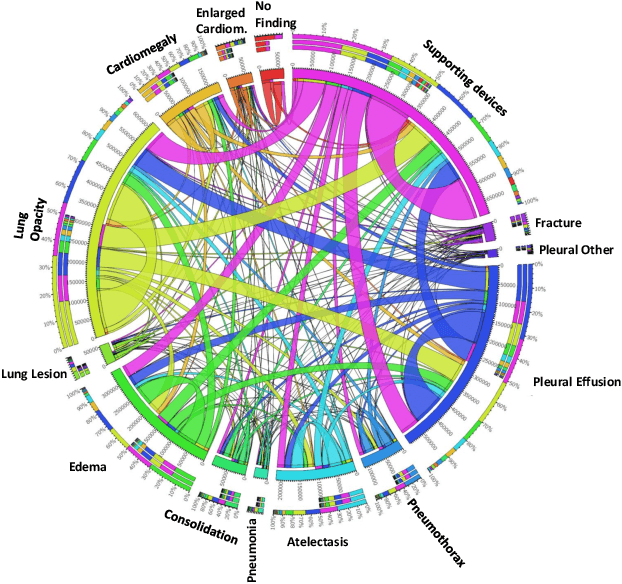

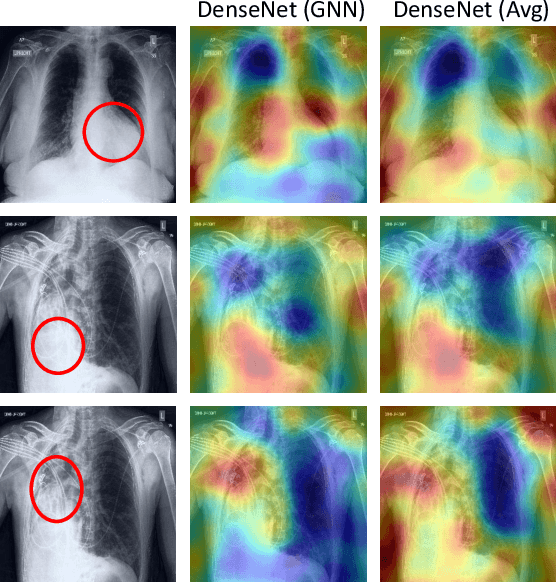

Learning Decision Ensemble using a Graph Neural Network for Comorbidity Aware Chest Radiograph Screening

Apr 24, 2020

Abstract: Chest radiographs are primarily employed for the screening of cardio, thoracic and pulmonary conditions. Machine learning based automated solutions are being developed to reduce the burden of routine screening on Radiologists, allowing them to focus on critical cases. While recent efforts demonstrate the use of ensembles of deep convolutional neural networks (CNN), they do not take disease comorbidity into consideration, thus lowering their screening performance. To address this issue, we propose a Graph Neural Network (GNN) based solution to obtain ensemble predictions which models the dependencies between different diseases. A comprehensive evaluation of the proposed method demonstrated its potential by improving the performance over the standard ensembling technique across a wide range of ensemble constructions. The best performance was achieved using the GNN ensemble of DenseNet121 with an average AUC of 0.821 across thirteen disease comorbidities.

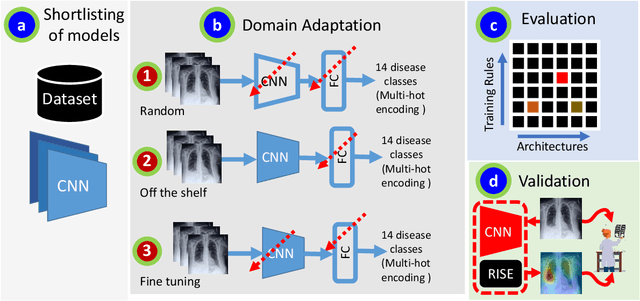

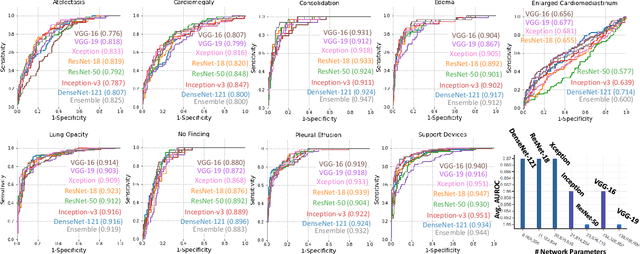

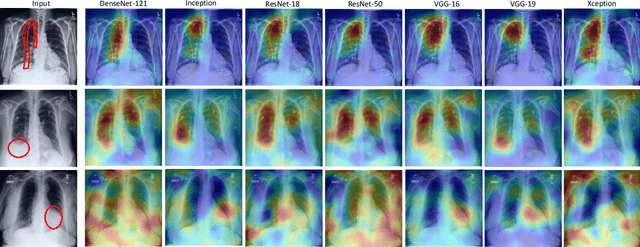

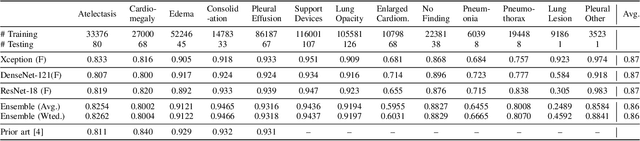

A Systematic Search over Deep Convolutional Neural Network Architectures for Screening Chest Radiographs

Apr 24, 2020

Abstract: Chest radiographs are primarily employed for the screening of pulmonary and cardio-/thoracic conditions. Being undertaken at primary healthcare centers, they require the presence of an on-premise reporting Radiologist, which is a challenge in low and middle income countries. This has inspired the development of machine learning based automation of the screening process. While recent efforts demonstrate a performance benchmark using an ensemble of deep convolutional neural networks (CNN), our systematic search over multiple standard CNN architectures identified single candidate CNN models whose classification performances were found to be at par with ensembles. Over 63 experiments spanning 400 hours, executed on an 11.3 FP32 TensorTFLOPS compute system, we found the Xception and ResNet-18 architectures to be consistent performers in identifying co-existing disease conditions with an average AUC of 0.87 across nine pathologies. We conclude on the reliability of the models by assessing their saliency maps generated using the randomized input sampling for explanation (RISE) method and qualitatively validating them against manual annotations locally sourced from an experienced Radiologist. We also draw a critical note on the limitations of the publicly available CheXpert dataset, primarily on account of the disparity in class distribution between the training and testing sets and the unavailability of sufficient samples for a few classes, which hampers quantitative reporting.

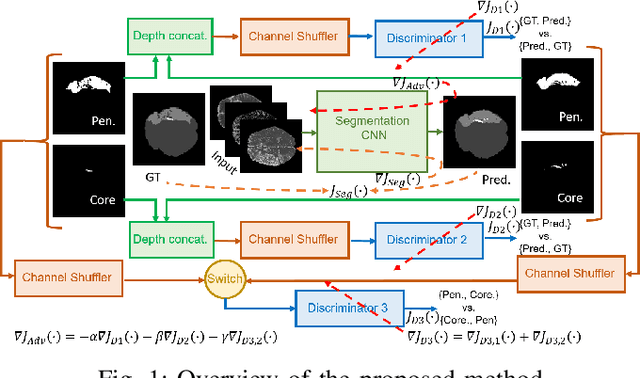

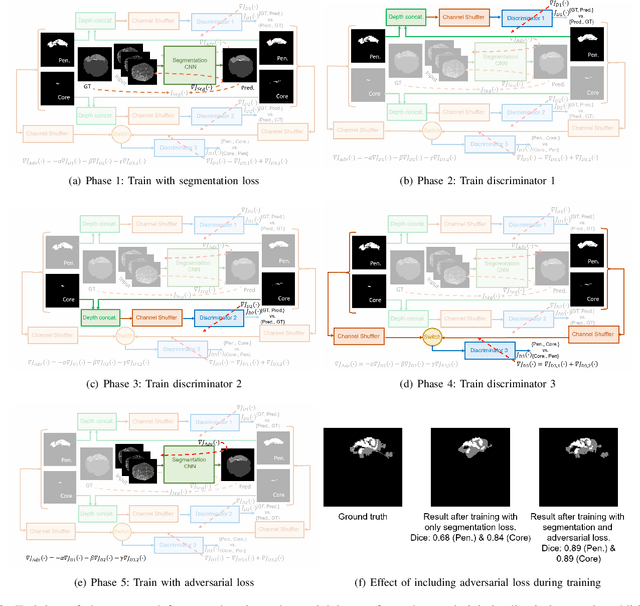

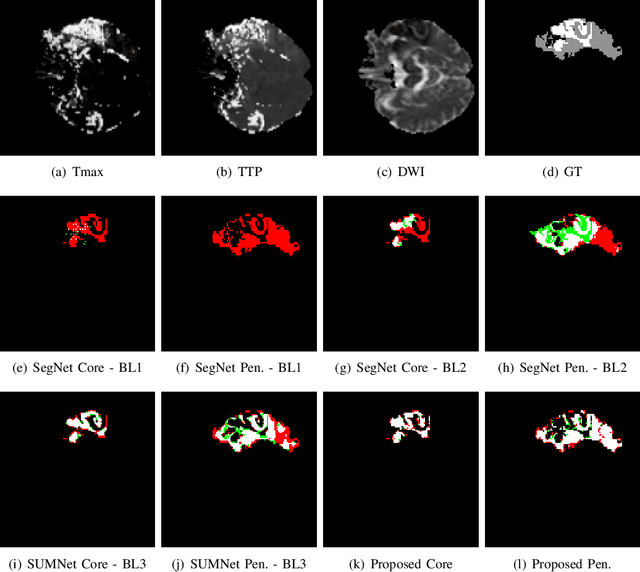

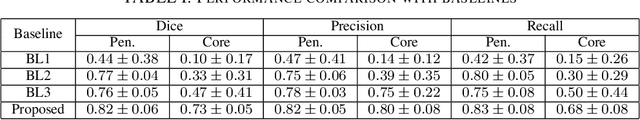

Adversarially Trained Convolutional Neural Networks for Semantic Segmentation of Ischaemic Stroke Lesion using Multisequence Magnetic Resonance Imaging

Aug 03, 2019

Abstract: Ischaemic stroke is a medical condition caused by occlusion of blood supply to the brain tissue, thus forming a lesion. A lesion is zoned into a core associated with irreversible necrosis, typically located at the center of the lesion, while reversible hypoxic changes in the outer regions of the lesion are termed the penumbra. Early estimation of core and penumbra in ischaemic stroke is crucial for timely intervention with thrombolytic therapy to reverse the damage and restore normalcy. Multisequence magnetic resonance imaging (MRI) is commonly employed for clinical diagnosis. However, no single sequence has been found sufficient to differentiate between core and penumbra; a combination of sequences is required to determine the extent of the damage. The challenge, however, is that an increasing number of sequences cognitively taxes the clinician trying to discover symptomatic biomarkers in these images. In this paper, we present a data-driven fully automated method for estimation of core and penumbra in ischaemic lesions using diffusion-weighted imaging (DWI) and perfusion-weighted imaging (PWI) sequence maps of MRI. The method employs recent developments in convolutional neural networks (CNN) for semantic segmentation in medical images. In the absence of a large amount of labeled data, the CNN is trained using an adversarial approach employing cross-entropy as a segmentation loss along with losses aggregated from three discriminators, of which two employ a relativistic visual Turing test. This method is experimentally validated on the ISLES-2015 dataset through three-fold cross-validation, obtaining an average Dice score of 0.82 and 0.73 for segmentation of penumbra and core respectively.
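For readers unfamiliar with the Dice score reported above, the following is a minimal sketch of the standard definition, 2|A∩B| / (|A| + |B|), on binary masks. The toy masks and the small `eps` smoothing term are illustrative assumptions, not data or settings from the paper.

```python
import numpy as np

def dice_score(pred_mask, true_mask, eps=1e-8):
    """Dice overlap between two binary masks: 2|A∩B| / (|A| + |B|)."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    intersection = np.logical_and(pred, true).sum()
    # eps (an assumption) avoids division by zero when both masks are empty.
    return (2.0 * intersection + eps) / (pred.sum() + true.sum() + eps)

# Toy 2x3 masks: 2 overlapping pixels, 3 predicted, 3 true.
pred = np.array([[1, 1, 0], [0, 1, 0]])
true = np.array([[1, 0, 0], [0, 1, 1]])
example_dice = dice_score(pred, true)  # 2*2 / (3+3), about 0.667
```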

Fully Convolutional Model for Variable Bit Length and Lossy High Density Compression of Mammograms

May 17, 2018

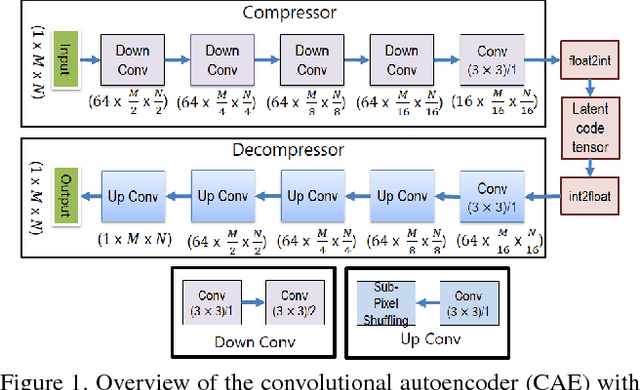

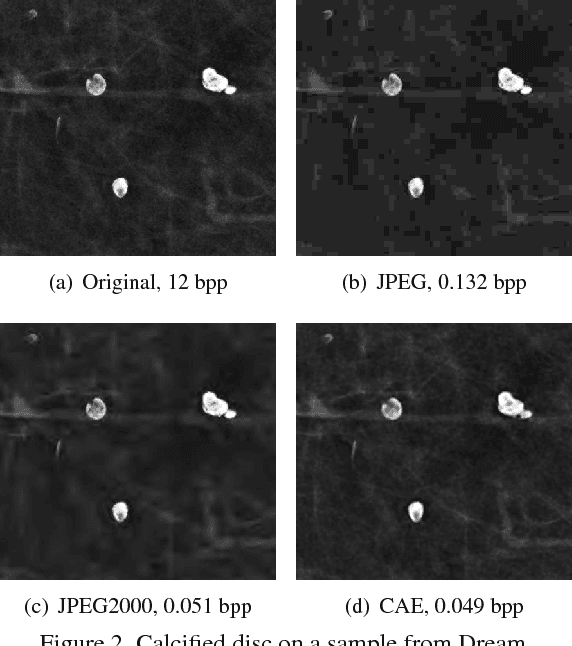

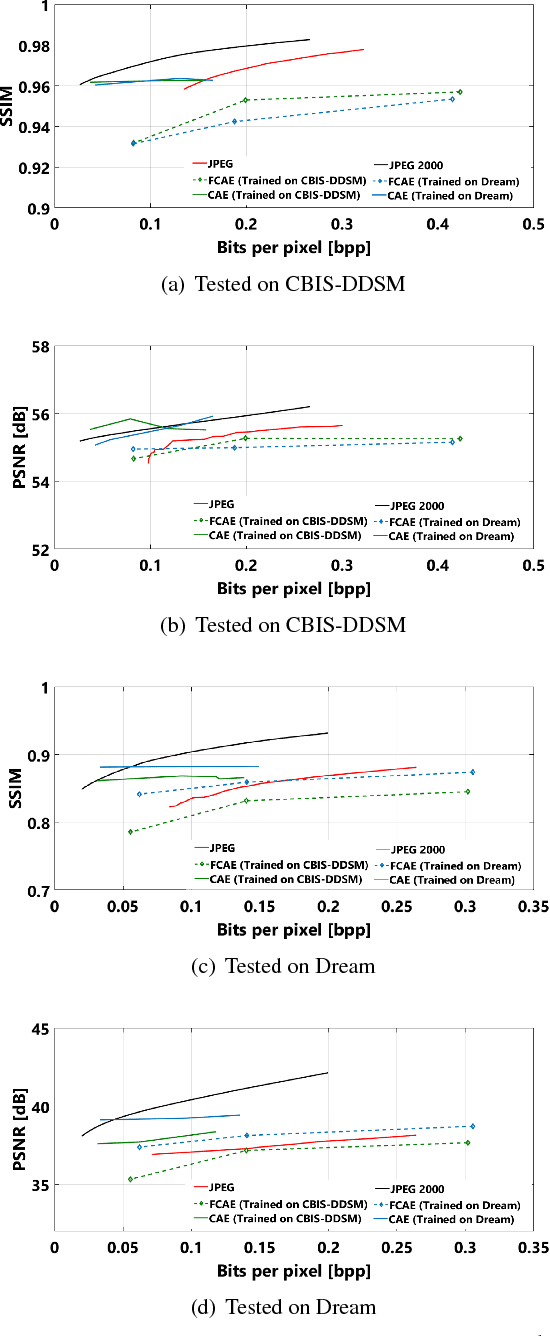

Abstract: Early works on medical image compression date to the 1980s, with an impetus on the deployment of teleradiology systems for high-resolution digital X-ray detectors. Commercially deployed systems during the period could compress 4,096 x 4,096 sized images at 12 bpp to 2 bpp using lossless arithmetic coding, and over the years JPEG and JPEG2000 were adopted, reaching as low as 0.1 bpp. Inspired by the reprise of deep learning based compression for natural images over the last two years, we propose a fully convolutional autoencoder for diagnostically relevant feature preserving lossy compression. This is followed by leveraging arithmetic coding to exploit the high redundancy of features for further high-density code packing, leading to variable bit length. We demonstrate performance on two different publicly available digital mammography datasets using peak signal-to-noise ratio (pSNR), structural similarity (SSIM) index and domain adaptability tests between datasets. At high density compression factors of >300x (~0.04 bpp), our approach rivals JPEG and JPEG2000 as evaluated through a Radiologist's visual Turing test.
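The bits-per-pixel (bpp) figures in the abstract can be related to file sizes and compression factors with simple arithmetic. The sketch below assumes the 12 bpp, 4,096 x 4,096 image from the abstract as the uncompressed reference; the function names are hypothetical.

```python
def image_size_bytes(width, height, bits_per_pixel):
    """Storage needed for an image at a given bit rate, in bytes."""
    return width * height * bits_per_pixel / 8

def compression_factor(original_bpp, compressed_bpp):
    """Ratio of the original bit rate to the compressed bit rate."""
    return original_bpp / compressed_bpp

# Uncompressed 4,096 x 4,096 image at 12 bpp, expressed in MiB.
raw_mib = image_size_bytes(4096, 4096, 12) / 2**20

# Going from 12 bpp to 0.04 bpp corresponds to roughly a 300x reduction,
# assuming the 12 bpp image is the reference.
factor = compression_factor(12, 0.04)
```

Under the same assumption, the 2 bpp lossless and 0.1 bpp JPEG-era figures correspond to factors of 6x and 120x respectively.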

Application of S-Transform on Hyper kurtosis based Modified Duo Histogram Equalized DIC images for Pre-cancer Detection

Apr 30, 2015

Abstract: Our proposed hyper kurtosis based histogram equalization of DIC images enhances the contrast while preserving the brightness. The evolution and development of precancerous activity among tissues are studied through the S-transform (ST). Significant variations of the amplitude spectra, due to increased medium roughness relative to normal tissue, were observed in the time-frequency domain. The randomness and inhomogeneity of the tissue structures among human normal and different grades of DIC tissues are recognized by ST based time-frequency analysis. This study offers a simpler and better way to recognize the substantial changes among different stages of DIC tissues, which are reflected by spatial information contained within the inhomogeneous structures of different types of tissue.

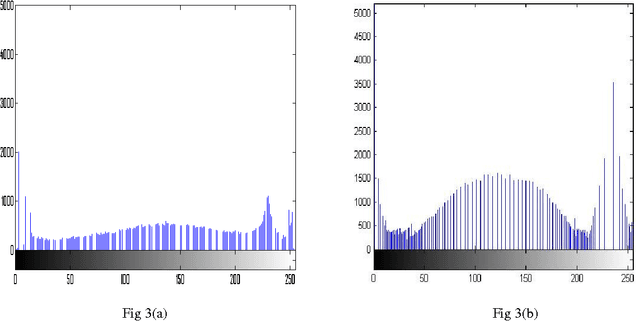

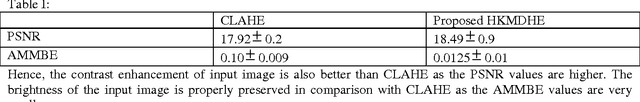

A comparative study between proposed Hyper Kurtosis based Modified Duo-Histogram Equalization (HKMDHE) and Contrast Limited Adaptive Histogram Equalization (CLAHE) for Contrast Enhancement Purpose of Low Contrast Human Brain CT scan images

Apr 07, 2015

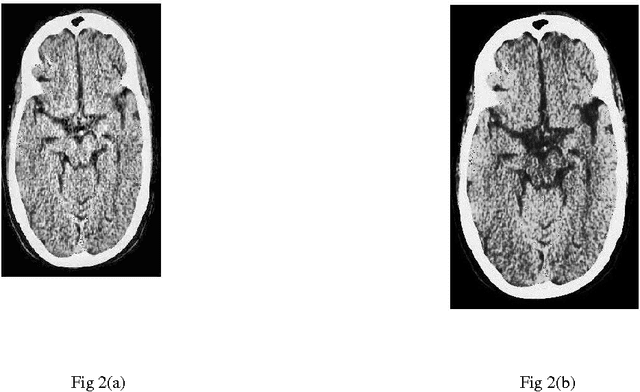

Abstract: In this paper, a comparative study between the proposed hyper kurtosis based modified duo-histogram equalization (HKMDHE) algorithm and contrast limited adaptive histogram equalization (CLAHE) is presented for contrast enhancement and brightness preservation of low contrast human brain CT scan images. In the HKMDHE algorithm, contrast enhancement is based on hyper-kurtosis. The results of the proposed HKMDHE technique are very promising, with improved PSNR values and lower AMMBE values than the CLAHE technique.
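The two metrics named in the abstract can be sketched as follows. PSNR follows its standard definition. The abstract does not define AMMBE, so the second function is an assumption: it computes the absolute difference in mean brightness between input and enhanced images, a common brightness-preservation measure. The 8-bit `max_value` and the toy images are also assumptions.

```python
import numpy as np

def psnr(reference, processed, max_value=255.0):
    """Peak signal-to-noise ratio in dB; max_value=255 assumes 8-bit images."""
    ref = np.asarray(reference, dtype=float)
    out = np.asarray(processed, dtype=float)
    mse = np.mean((ref - out) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)

def mean_brightness_error(reference, processed):
    """ASSUMED reading of the brightness metric: |mean(in) - mean(out)|."""
    return abs(float(np.mean(reference)) - float(np.mean(processed)))

# Toy example: a flat image brightened uniformly by 5 grey levels.
original = np.full((4, 4), 100.0)
enhanced = original + 5.0
toy_psnr = psnr(original, enhanced)               # MSE is 25
toy_mbe = mean_brightness_error(original, enhanced)
```

A higher PSNR and a lower brightness error both indicate that the enhanced image stays closer to the input, which is the sense in which the abstract compares HKMDHE with CLAHE.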

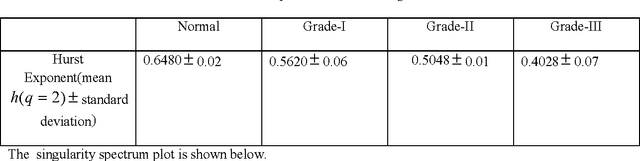

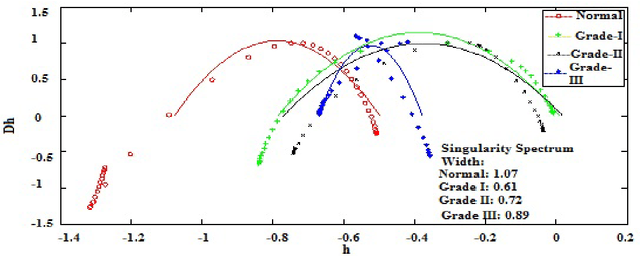

Wavelet based approach for tissue fractal parameter measurement: Pre cancer detection

Mar 21, 2015

Abstract: In this paper, we have carried out detailed studies of pre-cancer by wavelet coherency and multifractal detrended fluctuation analysis (MFDFA) on differential interference contrast (DIC) images of the stromal region among different grades of pre-cancer tissues. A discrete wavelet transform (DWT) with the Daubechies basis has been performed to identify fluctuations over polynomial trends for clear characterization and differentiation of tissues. Wavelet coherence plots are generated to identify the level of correlation in the time-scale plane between normal and various grades of DIC samples. Applying MFDFA to refractive index variations of cervical tissues, we have observed that the values of the Hurst exponent (correlation) decrease from healthy (normal) to pre-cancer tissues. The width of the singularity spectrum drops suddenly at grade-I in comparison to healthy (normal) tissue, but later increases as the cancer progresses from grade-II to grade-III.
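To make the Hurst exponent concrete, here is a minimal sketch of ordinary detrended fluctuation analysis, which is the q = 2 special case of MFDFA rather than the full multifractal procedure used in the paper. The scales, the linear detrending order and the synthetic white-noise input are all assumptions chosen for illustration.

```python
import numpy as np

def dfa_hurst(series, scales, order=1):
    """Estimate the Hurst exponent with DFA (the q=2 case of MFDFA)."""
    x = np.asarray(series, dtype=float)
    # Step 1: integrate the mean-subtracted series to get the profile.
    profile = np.cumsum(x - x.mean())
    fluctuations = []
    for s in scales:
        n_seg = len(profile) // s
        segments = profile[: n_seg * s].reshape(n_seg, s)
        t = np.arange(s)
        variances = []
        for seg in segments:
            # Step 2: remove a polynomial trend from each segment.
            coeffs = np.polyfit(t, seg, order)
            residual = seg - np.polyval(coeffs, t)
            variances.append(np.mean(residual ** 2))
        # Step 3: root-mean-square fluctuation at this scale.
        fluctuations.append(np.sqrt(np.mean(variances)))
    # Step 4: the slope of log F(s) against log s is the Hurst exponent.
    slope, _ = np.polyfit(np.log(scales), np.log(fluctuations), 1)
    return slope

# Uncorrelated noise should give an exponent close to 0.5.
rng = np.random.default_rng(0)
h_noise = dfa_hurst(rng.standard_normal(4096), scales=[16, 32, 64, 128, 256])
```

Values above 0.5 indicate persistent long-range correlation and values below 0.5 indicate anti-persistence, which is how a decreasing Hurst exponent can be read as a loss of correlation.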

Diagnosing Heterogeneous Dynamics for CT Scan Images of Human Brain in Wavelet and MFDFA domain

Mar 12, 2015

Abstract: CT scan images of the human brain of a particular patient are taken in different cross-sections, and wavelet transform and multi-fractal analysis are applied to them. Vertical and horizontal unfolding of the images is done before the analysis. A systematic investigation of de-noised CT scan images of the human brain in different cross-sections is carried out through wavelet normalized energy and wavelet semi-log plots, which clearly point out the mismatch between the results of vertical and horizontal unfolding. This mismatch confirms the heterogeneity in the spatial domain. Using multi-fractal de-trended fluctuation analysis (MFDFA), the mismatch between the values of the Hurst exponent and the width of the singularity spectrum obtained by vertical and horizontal unfolding confirms the same.
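The abstract does not define "unfolding". One plausible reading, assumed here, is that a 2D image is flattened into a 1D series either row by row (horizontal) or column by column (vertical), so that 1D tools such as wavelets and MFDFA can be applied. The function name `unfold` is hypothetical.

```python
import numpy as np

def unfold(image, direction):
    """Flatten a 2D image into a 1D series.

    ASSUMPTION: 'horizontal' concatenates rows, 'vertical' concatenates
    columns; the abstract does not spell out the exact procedure.
    """
    img = np.asarray(image)
    if direction == "horizontal":
        return img.ravel(order="C")  # row-major: row after row
    if direction == "vertical":
        return img.ravel(order="F")  # column-major: column after column
    raise ValueError("direction must be 'horizontal' or 'vertical'")

# A 2x3 toy image shows how the two orderings differ.
toy = np.arange(6).reshape(2, 3)
horizontal_series = unfold(toy, "horizontal")  # 0, 1, 2, 3, 4, 5
vertical_series = unfold(toy, "vertical")      # 0, 3, 1, 4, 2, 5
```

For a spatially homogeneous image the two series would have similar statistics; differing results between them are what the abstract interprets as heterogeneity.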
