Tandra Sarkar

A Two-Stage Multiple Instance Learning Framework for the Detection of Breast Cancer in Mammograms

Apr 24, 2020

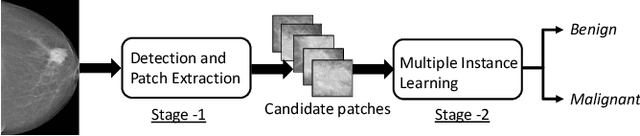

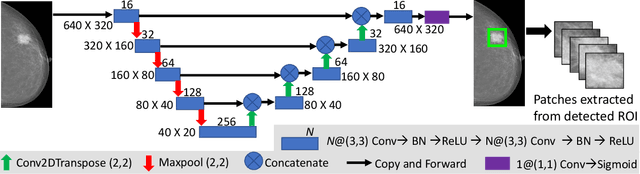

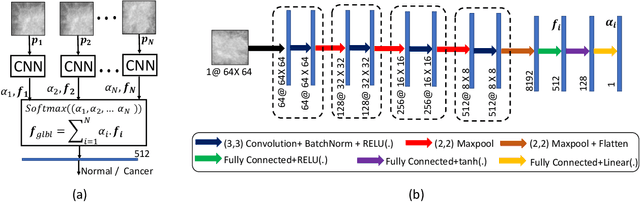

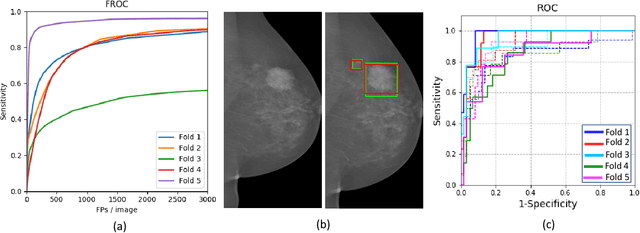

Abstract: Mammograms are commonly employed in the large-scale screening of breast cancer, which is primarily characterized by the presence of malignant masses. However, automated image-level detection of malignancy is a challenging task given the small size of the mass regions and the difficulty of discriminating between malignant masses, benign masses, and healthy dense fibro-glandular tissue. To address these issues, we explore a two-stage Multiple Instance Learning (MIL) framework. A Convolutional Neural Network (CNN) is trained in the first stage to extract local candidate patches in the mammograms that may contain either a benign or malignant mass. The second stage employs a MIL strategy for an image-level benign vs. malignant classification. A global image-level feature is computed as a weighted average of patch-level features learned using a CNN. Our method performed well on the task of localization of masses, with an average Precision/Recall of 0.76/0.80, and achieved an average AUC of 0.91 on the image-level classification task using five-fold cross-validation on the INbreast dataset. Restricting the MIL only to the candidate patches extracted in Stage 1 led to a significant improvement in classification performance in comparison to a dense extraction of patches from the entire mammogram.
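
The weighted-average pooling step described in the abstract can be illustrated with a minimal sketch. The abstract does not say how the per-patch weights are obtained, so the softmax over per-patch relevance scores used here is an assumption, and the names `mil_weighted_average`, `patch_features`, and `patch_scores` are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mil_weighted_average(patch_features, patch_scores):
    """Pool patch-level feature vectors into one image-level vector.

    patch_features: equal-length feature vectors, one per candidate patch.
    patch_scores:   one unnormalized relevance score per patch. This is an
                    assumption; the abstract does not specify the weighting.
    """
    if not patch_features or len(patch_features) != len(patch_scores):
        raise ValueError("need at least one patch and one score per patch")
    weights = softmax(patch_scores)  # weights are positive and sum to 1
    dim = len(patch_features[0])
    pooled = [0.0] * dim
    for w, feat in zip(weights, patch_features):
        for i in range(dim):
            pooled[i] += w * feat[i]
    return pooled, weights


# Toy example: three candidate patches with 2-D features and equal scores,
# so the pooled vector reduces to the plain mean of the patch features.
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
scores = [0.0, 0.0, 0.0]
pooled, weights = mil_weighted_average(features, scores)
```

In a trained model the scores would come from a learned layer, so patches that look more like a mass would dominate the image-level feature.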

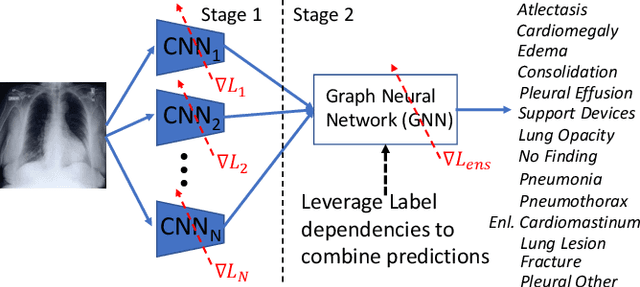

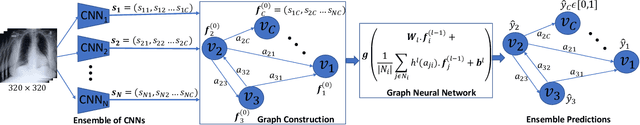

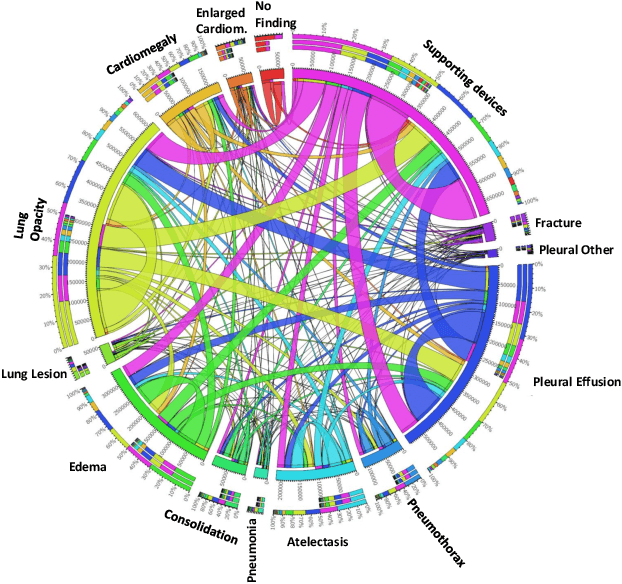

Learning Decision Ensemble using a Graph Neural Network for Comorbidity Aware Chest Radiograph Screening

Apr 24, 2020

Abstract: Chest radiographs are primarily employed for the screening of cardiac, thoracic and pulmonary conditions. Machine learning based automated solutions are being developed to reduce the burden of routine screening on Radiologists, allowing them to focus on critical cases. While recent efforts demonstrate the use of ensembles of deep convolutional neural networks (CNNs), they do not take disease comorbidity into consideration, thus lowering their screening performance. To address this issue, we propose a Graph Neural Network (GNN) based solution that models the dependencies between different diseases to obtain ensemble predictions. A comprehensive evaluation of the proposed method demonstrated its potential by improving the performance over the standard ensembling technique across a wide range of ensemble constructions. The best performance was achieved using the GNN ensemble of DenseNet121 with an average AUC of 0.821 across thirteen disease comorbidities.
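
To make the idea of comorbidity-aware ensembling concrete, the sketch below contrasts a plain averaging ensemble with a single, untrained message-passing step over a disease graph. It is illustrative only and is not the authors' architecture: the abstract does not describe the GNN layers, the graph construction, or which baseline "standard ensembling" refers to. The adjacency matrix, the `self_weight` parameter, and both function names are hypothetical.

```python
def average_ensemble(model_probs):
    """Assumed baseline: mean probability per disease across models."""
    n_models = len(model_probs)
    n_diseases = len(model_probs[0])
    return [sum(p[d] for p in model_probs) / n_models for d in range(n_diseases)]


def propagate(node_values, adjacency, self_weight=0.5):
    """One message-passing step over the disease graph.

    Each disease keeps `self_weight` of its own score and takes the rest
    from the adjacency-weighted mean of its neighbours' scores. A real GNN
    would learn these mixing weights; here they are fixed by hand.
    """
    out = []
    for i, row in enumerate(adjacency):
        total = sum(row)
        if total == 0:  # isolated disease: nothing to aggregate
            out.append(node_values[i])
            continue
        neighbour_mean = sum(w * v for w, v in zip(row, node_values)) / total
        out.append(self_weight * node_values[i] + (1 - self_weight) * neighbour_mean)
    return out


# Two hypothetical models scoring three diseases for one radiograph.
model_probs = [[0.8, 0.2, 0.1],
               [0.6, 0.4, 0.1]]
# Hypothetical co-occurrence graph: diseases 0 and 1 are linked, 2 is isolated.
adjacency = [[0, 1, 0],
             [1, 0, 0],
             [0, 0, 0]]

baseline = average_ensemble(model_probs)
refined = propagate(baseline, adjacency)
```

In this toy graph the linked diseases pull each other's scores together while the isolated one is left unchanged, which is the kind of dependency a learned GNN could exploit.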

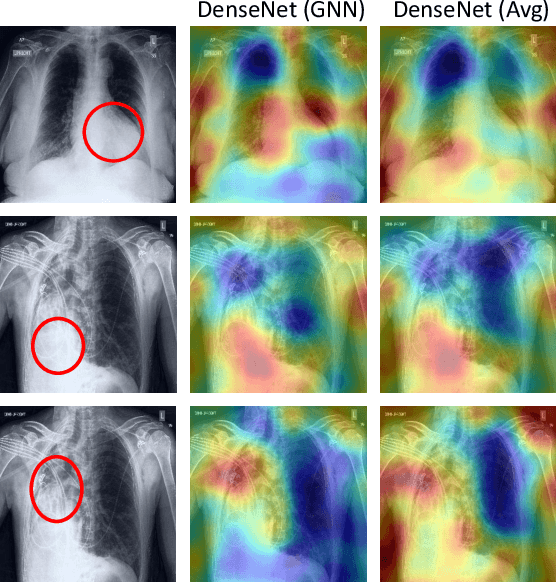

A Systematic Search over Deep Convolutional Neural Network Architectures for Screening Chest Radiographs

Apr 24, 2020

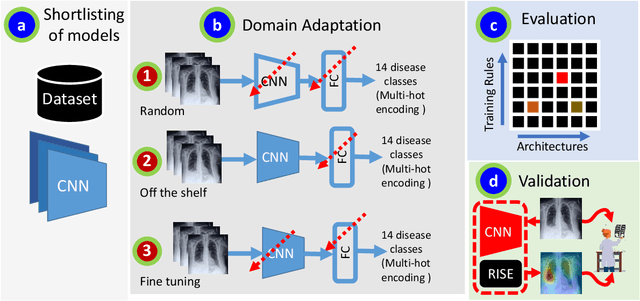

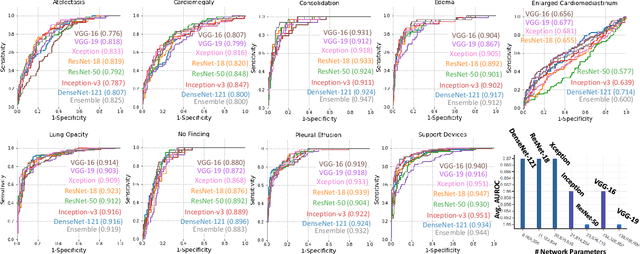

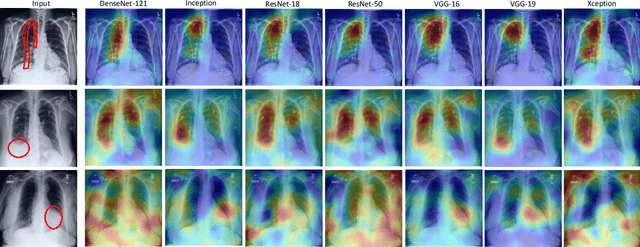

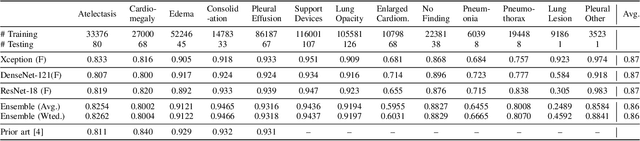

Abstract: Chest radiographs are primarily employed for the screening of pulmonary and cardio-/thoracic conditions. Being undertaken at primary healthcare centers, they require the presence of an on-premise reporting Radiologist, which is a challenge in low- and middle-income countries. This has inspired the development of machine learning based automation of the screening process. While recent efforts demonstrate a performance benchmark using an ensemble of deep convolutional neural networks (CNNs), our systematic search over multiple standard CNN architectures identified single candidate CNN models whose classification performance was on par with ensembles. Over 63 experiments spanning 400 hours, executed on an 11.3 FP32 TensorTFLOPS compute system, we found the Xception and ResNet-18 architectures to be consistent performers in identifying co-existing disease conditions, with an average AUC of 0.87 across nine pathologies. We assess the reliability of the models by examining their saliency maps, generated using the randomized input sampling for explanation (RISE) method, and qualitatively validating them against manual annotations locally sourced from an experienced Radiologist. We also draw a critical note on the limitations of the publicly available CheXpert dataset, primarily on account of the disparity in class distribution between the training and testing sets and the unavailability of sufficient samples for a few classes, which hampers quantitative reporting.
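
The core idea behind RISE saliency maps can be sketched in a few lines: mask the input at random, score each masked input with the model, and average the masks weighted by those scores. The version below is a simplified assumption-laden sketch, not the authors' pipeline. It samples independent per-pixel masks, whereas the published RISE method upsamples and shifts low-resolution masks, and `toy_model` is a hypothetical stand-in for a trained CNN.

```python
import random


def rise_saliency(image, model, n_masks=2000, keep_prob=0.5, seed=0):
    """Estimate per-pixel importance by random masking (simplified RISE).

    image: 2-D list of pixel intensities.
    model: callable mapping a 2-D list to a scalar class score.
    """
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    h, w = len(image), len(image[0])
    saliency = [[0.0] * w for _ in range(h)]
    for _ in range(n_masks):
        mask = [[1 if rng.random() < keep_prob else 0 for _ in range(w)]
                for _ in range(h)]
        masked = [[image[r][c] * mask[r][c] for c in range(w)] for r in range(h)]
        score = model(masked)
        # Pixels that were visible when the score was high accumulate credit.
        for r in range(h):
            for c in range(w):
                saliency[r][c] += score * mask[r][c]
    norm = n_masks * keep_prob  # expected number of times a pixel is kept
    return [[v / norm for v in row] for row in saliency]


def toy_model(img):
    """Hypothetical classifier whose score depends only on the top-left pixel."""
    return img[0][0]


image = [[1.0, 1.0], [1.0, 1.0]]
sal = rise_saliency(image, toy_model)
```

Because the toy model looks only at the top-left pixel, that pixel receives the highest saliency, which is the behaviour one would check against a radiologist's annotations in the real setting.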
