Nikolai Kalischek

CubeDiff: Repurposing Diffusion-Based Image Models for Panorama Generation

Jan 28, 2025

Abstract: We introduce a novel method for generating 360° panoramas from text prompts or images. Our approach leverages recent advances in 3D generation by employing multi-view diffusion models to jointly synthesize the six faces of a cubemap. Unlike previous methods that rely on processing equirectangular projections or autoregressive generation, our method treats each face as a standard perspective image, simplifying the generation process and enabling the use of existing multi-view diffusion models. We demonstrate that these models can be adapted to produce high-quality cubemaps without requiring correspondence-aware attention layers. Our model allows for fine-grained text control, generates high-resolution panorama images, and generalizes well beyond its training set, whilst achieving state-of-the-art results, both qualitatively and quantitatively. Project page: https://cubediff.github.io/
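To make the contrast between cubemap faces and an equirectangular projection concrete, here is a minimal sketch of how a point on a cube face maps to longitude and latitude on the sphere. The face names, the axis convention (+z as "front", +y as "up"), and the function `face_to_lonlat` are assumptions chosen for illustration; they are not taken from the CubeDiff paper or its code.

```python
import numpy as np

# Hypothetical face convention: +z is "front", +y is "up", +x is "right".
# u and v are face coordinates in [-1, 1] (u to the right, v upwards).
FACE_DIRECTIONS = {
    "front": lambda u, v: (u, v, 1.0),
    "back": lambda u, v: (-u, v, -1.0),
    "right": lambda u, v: (1.0, v, -u),
    "left": lambda u, v: (-1.0, v, u),
    "up": lambda u, v: (u, 1.0, -v),
    "down": lambda u, v: (u, -1.0, v),
}


def face_to_lonlat(face, u, v):
    """Map a point (u, v) on a cube face to (longitude, latitude) in radians."""
    x, y, z = FACE_DIRECTIONS[face](u, v)
    norm = np.sqrt(x * x + y * y + z * z)
    lon = np.arctan2(x, z)      # angle around the vertical axis
    lat = np.arcsin(y / norm)   # elevation above the horizon
    return lon, lat


lon, lat = face_to_lonlat("right", 0.0, 0.0)
print(lon, lat)
```

Each face covers a 90° field of view and behaves like an ordinary perspective image, whereas the equirectangular image stretches the regions near the poles.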

BiasBed -- Rigorous Texture Bias Evaluation

Dec 01, 2022

Abstract: The well-documented presence of texture bias in modern convolutional neural networks has led to a plethora of algorithms that promote an emphasis on shape cues, often to support generalization to new domains. Yet, common datasets, benchmarks and general model selection strategies are missing, and there is no agreed, rigorous evaluation protocol. In this paper, we investigate difficulties and limitations when training networks with reduced texture bias. In particular, we also show that proper evaluation and meaningful comparisons between methods are not trivial. We introduce BiasBed, a testbed for texture- and style-biased training, including multiple datasets and a range of existing algorithms. It comes with an extensive evaluation protocol that includes rigorous hypothesis testing to gauge the significance of the results, despite the considerable training instability of some style bias methods. Our extensive experiments shed new light on the need for careful, statistically founded evaluation protocols for style bias (and beyond). For example, we find that some algorithms proposed in the literature do not significantly mitigate the impact of style bias at all. With the release of BiasBed, we hope to foster a common understanding of consistent and meaningful comparisons, and consequently faster progress towards learning methods free of texture bias. Code is available at https://github.com/D1noFuzi/BiasBed

Tetrahedral Diffusion Models for 3D Shape Generation

Nov 23, 2022

Abstract: Recently, probabilistic denoising diffusion models (DDMs) have greatly advanced the generative power of neural networks. DDMs, inspired by non-equilibrium thermodynamics, have not only been used for 2D image generation, but can also readily be applied to 3D point clouds. However, representing 3D shapes as point clouds has a number of drawbacks, perhaps most obviously that they have no notion of topology or connectivity. Here, we explore an alternative route and introduce tetrahedral diffusion models, an extension of DDMs to tetrahedral partitions of 3D space. The much more structured 3D representation with space-filling tetrahedra makes it possible to guide and regularize the diffusion process and to apply it to colorized assets. To manipulate the proposed representation, we develop tetrahedral convolutions, down- and up-sampling kernels. With those operators, 3D shape generation amounts to learning displacement vectors and signed distance values on the tetrahedral grid. Our experiments confirm that Tetrahedral Diffusion yields plausible, visually pleasing and diverse 3D shapes, is able to handle surface attributes like color, and can be guided at test time to manipulate the resulting shapes.

Satellite-based high-resolution maps of cocoa planted area for Côte d'Ivoire and Ghana

Jun 13, 2022

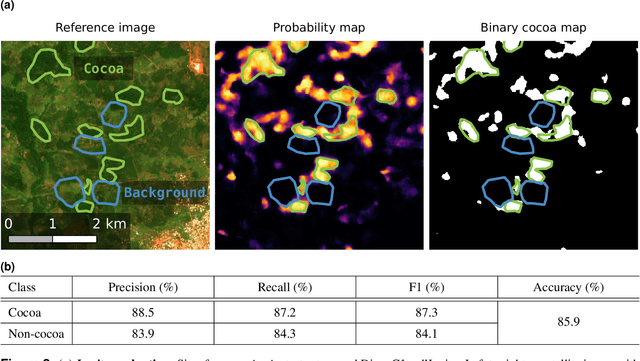

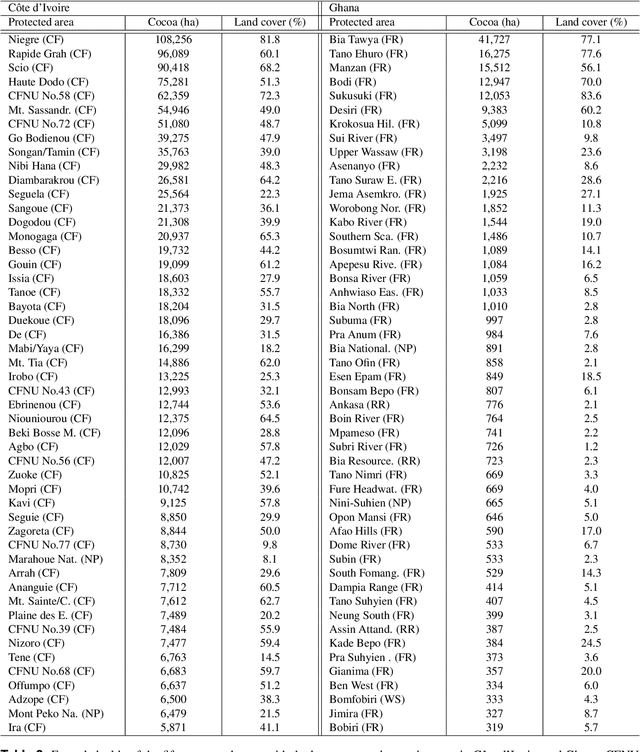

Abstract: Côte d'Ivoire and Ghana, the world's largest producers of cocoa, account for two thirds of the global cocoa production. In both countries, cocoa is the primary perennial crop, providing income to almost two million farmers. Yet precise maps of cocoa planted area are missing, hindering accurate quantification of expansion in protected areas, production and yields, and limiting information available for improved sustainability governance. Here, we combine cocoa plantation data with publicly available satellite imagery in a deep learning framework and create high-resolution maps of cocoa plantations for both countries, validated in situ. Our results suggest that cocoa cultivation is an underlying driver of over 37% and 13% of forest loss in protected areas in Côte d'Ivoire and Ghana, respectively, and that official reports substantially underestimate the planted area, up to 40% in Ghana. These maps serve as a crucial building block to advance understanding of conservation and economic development in cocoa-producing regions.

In the light of feature distributions: moment matching for Neural Style Transfer

Mar 12, 2021

Abstract: Style transfer aims to render the content of a given image in the graphical/artistic style of another image. The fundamental concept underlying Neural Style Transfer (NST) is to interpret style as a distribution in the feature space of a Convolutional Neural Network, such that a desired style can be achieved by matching its feature distribution. We show that most current implementations of that concept have important theoretical and practical limitations, as they only partially align the feature distributions. We propose a novel approach that matches the distributions more precisely, thus reproducing the desired style more faithfully, while still being computationally efficient. Specifically, we adapt the dual form of Central Moment Discrepancy (CMD), as recently proposed for domain adaptation, to minimize the difference between the target style and the feature distribution of the output image. The dual interpretation of this metric explicitly matches all higher-order centralized moments and is therefore a natural extension of existing NST methods that only take into account the first and second moments. Our experiments confirm that the strong theoretical properties also translate to visually better style transfer, and better disentangle style from semantic image content.
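As a rough illustration of moment matching, the sketch below computes an empirical Central Moment Discrepancy between two sets of feature vectors, truncated at a finite moment order. It assumes features bounded in an interval [a, b] and uses the plain (primal) empirical form, not the dual form the abstract refers to. The function name `cmd` and the parameters `k_max`, `a`, and `b` are illustrative choices, not the authors' implementation.

```python
import numpy as np


def cmd(x, y, k_max=5, a=0.0, b=1.0):
    """Empirical CMD between samples x and y, each of shape (n_samples, n_features).

    Assumes every feature lies in [a, b]; moments above order k_max are ignored.
    """
    span = abs(b - a)
    mean_x, mean_y = x.mean(axis=0), y.mean(axis=0)
    # First-order term: distance between the feature means.
    total = np.linalg.norm(mean_x - mean_y) / span
    centered_x, centered_y = x - mean_x, y - mean_y
    for k in range(2, k_max + 1):
        # k-th central moment of each feature, estimated per sample set.
        moment_x = (centered_x ** k).mean(axis=0)
        moment_y = (centered_y ** k).mean(axis=0)
        total += np.linalg.norm(moment_x - moment_y) / span ** k
    return total


rng = np.random.default_rng(0)
style_features = rng.random((500, 8))
output_features = style_features ** 2  # same range, different distribution
print(cmd(style_features, output_features))
```

Setting `k_max=2` restricts the comparison to means and variances, which corresponds to matching only the first and second moments.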

Global canopy height estimation with GEDI LIDAR waveforms and Bayesian deep learning

Mar 05, 2021

Abstract: NASA's Global Ecosystem Dynamics Investigation (GEDI) is a key climate mission whose goal is to advance our understanding of the role of forests in the global carbon cycle. While GEDI is the first space-based LIDAR explicitly optimized to measure vertical forest structure predictive of aboveground biomass, the accurate interpretation of this vast amount of waveform data across the broad range of observational and environmental conditions is challenging. Here, we present a novel supervised machine learning approach to interpret GEDI waveforms and regress canopy top height globally. We propose a Bayesian convolutional neural network (CNN) to avoid the explicit modelling of unknown effects, such as atmospheric noise. The model learns to extract robust features that generalize to unseen geographical regions and, in addition, yields reliable estimates of predictive uncertainty. Ultimately, the global canopy top height estimates produced by our model have an expected RMSE of 2.7 m with low bias.
