Nikita Gushchin

Variational Entropic Optimal Transport

Feb 02, 2026

Abstract:Entropic optimal transport (EOT) in continuous spaces with quadratic cost is a classical tool for solving the domain translation problem. In practice, recent approaches optimize a weak dual EOT objective depending on a single potential, but doing so is computationally inefficient due to the intractable log-partition term. Existing methods typically resolve this obstacle in one of two ways: by significantly restricting the transport family to obtain closed-form normalization (via Gaussian-mixture parameterizations), or by using general neural parameterizations that require simulation-based training procedures. We propose Variational Entropic Optimal Transport (VarEOT), based on an exact variational reformulation of the log-partition $\log \mathbb{E}[\exp(\cdot)]$ as a tractable minimization over an auxiliary positive normalizer. This yields a differentiable learning objective that is optimized with stochastic gradients and avoids the need for MCMC simulations during training. We provide theoretical guarantees, including finite-sample generalization bounds and approximation results under universal function approximation. Experiments on synthetic data and unpaired image-to-image translation demonstrate competitive or improved translation quality, while comparisons among solvers that use the same weak dual EOT objective support the benefit of the proposed optimization principle.
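
The abstract does not spell out the reformulation, so the following is only a sketch of one standard identity of this kind, not necessarily the one used in the paper: for any $z > 0$, $\log z = \min_{c > 0} (z/c + \log c - 1)$, with the minimum attained at $c = z$. Applied to $z = \mathbb{E}[\exp(f)]$, the right-hand side is linear in the expectation, which is what makes minibatch gradient estimates straightforward. The function name `variational_log_partition` and the Gaussian test data are assumptions made for illustration.

```python
import numpy as np


def variational_log_partition(values, c):
    """Bound on log(mean(exp(values))) for a positive normalizer c.

    Uses log z = min_{c > 0} (z / c + log c - 1); the bound holds for
    every c > 0 and is tight at c = mean(exp(values)).
    """
    return np.mean(np.exp(values)) / c + np.log(c) - 1.0


# Hypothetical samples standing in for the values inside exp(.).
rng = np.random.default_rng(0)
values = rng.normal(size=1000)

exact = np.log(np.mean(np.exp(values)))   # direct log-partition estimate
c_star = np.mean(np.exp(values))          # optimal normalizer
tight = variational_log_partition(values, c_star)
loose = variational_log_partition(values, 2.0 * c_star)
```

With the optimal normalizer the bound matches the direct estimate, while any other positive `c` gives a strictly larger value, so minimizing over `c` jointly with the model parameters recovers the log-partition term.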

Time-Correlated Video Bridge Matching

Oct 14, 2025

Abstract:Diffusion models excel in noise-to-data generation tasks, providing a mapping from a Gaussian distribution to a more complex data distribution. However, they struggle to model translations between complex distributions, limiting their effectiveness in data-to-data tasks. While Bridge Matching (BM) models address this by finding the translation between data distributions, their application to time-correlated data sequences remains unexplored. This is a critical limitation for video generation and manipulation tasks, where maintaining temporal coherence is particularly important. To address this gap, we propose Time-Correlated Video Bridge Matching (TCVBM), a framework that extends BM to time-correlated data sequences in the video domain. TCVBM explicitly models inter-sequence dependencies within the diffusion bridge, directly incorporating temporal correlations into the sampling process. We compare our approach to classical methods based on bridge matching and diffusion models for three video-related tasks: frame interpolation, image-to-video generation, and video super-resolution. TCVBM achieves superior performance across multiple quantitative metrics, demonstrating enhanced generation quality and reconstruction fidelity.

Universal Inverse Distillation for Matching Models with Real-Data Supervision (No GANs)

Sep 26, 2025

Abstract:While achieving exceptional generative quality, modern diffusion, flow, and other matching models suffer from slow inference, as they require many steps of iterative generation. Recent distillation methods address this by training efficient one-step generators under the guidance of a pre-trained teacher model. However, these methods are often constrained to only one specific framework, e.g., only to diffusion or only to flow models. Furthermore, these methods are naturally data-free, and benefiting from real data requires additional complex adversarial training with an extra discriminator model. In this paper, we present RealUID, a universal distillation framework for all matching models that seamlessly incorporates real data into the distillation procedure without GANs. Our RealUID approach offers a simple theoretical foundation that covers previous distillation methods for Flow Matching and Diffusion models, and also extends to their modifications, such as Bridge Matching and Stochastic Interpolants.

One-Step Residual Shifting Diffusion for Image Super-Resolution via Distillation

Mar 17, 2025

Abstract:Diffusion models for super-resolution (SR) produce high-quality visual results but incur high computational costs. Despite the development of several methods to accelerate diffusion-based SR models, some (e.g., SinSR) fail to produce realistic perceptual details, while others (e.g., OSEDiff) may hallucinate non-existent structures. To overcome these issues, we present RSD, a new distillation method for ResShift, one of the top diffusion-based SR models. Our method is based on training the student network to produce images such that a new fake ResShift model trained on them coincides with the teacher model. RSD achieves single-step restoration and outperforms the teacher by a large margin. We show that our distillation method can surpass the other distillation-based method for ResShift - SinSR - making it on par with state-of-the-art diffusion-based SR distillation methods. Compared to SR methods based on pre-trained text-to-image models, RSD produces competitive perceptual quality, provides images with better alignment to degraded input images, and requires fewer parameters and less GPU memory. We provide experimental results on various real-world and synthetic datasets, including RealSR, RealSet65, DRealSR, ImageNet, and DIV2K.

InfoBridge: Mutual Information estimation via Bridge Matching

Feb 03, 2025

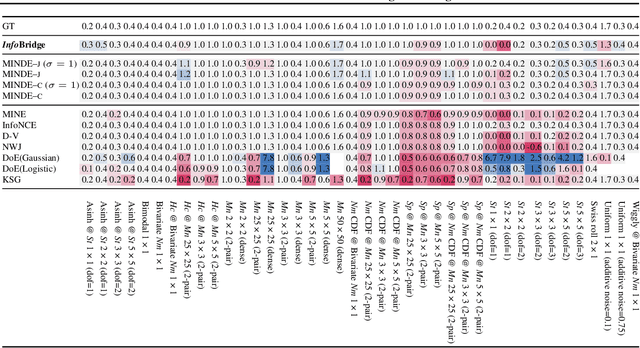

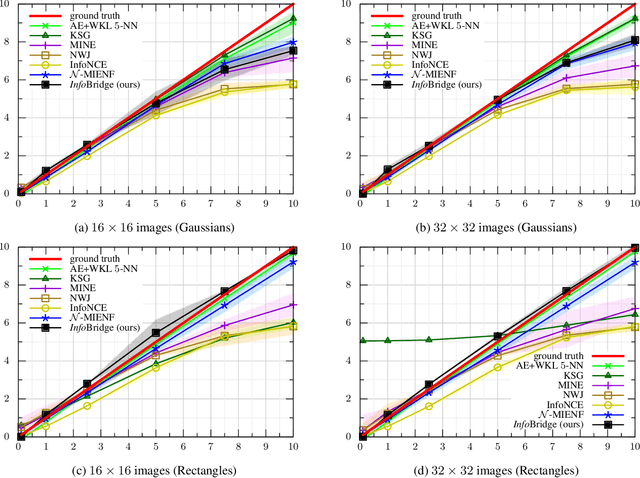

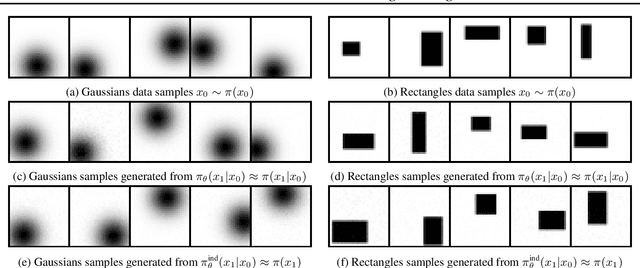

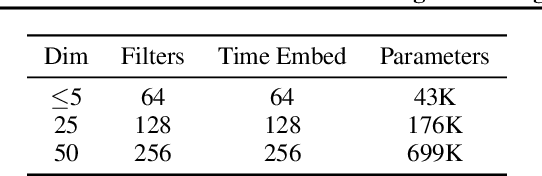

Abstract:Diffusion bridge models have recently become a powerful tool in the field of generative modeling. In this work, we leverage their power to address another important problem in machine learning and information theory - the estimation of the mutual information (MI) between two random variables. We show that by using the theory of diffusion bridges, one can construct an unbiased estimator for data posing difficulties for conventional MI estimators. We showcase the performance of our estimator on a series of standard MI estimation benchmarks.

Inverse Bridge Matching Distillation

Feb 03, 2025

Abstract:Learning diffusion bridge models is easy; making them fast and practical is an art. Diffusion bridge models (DBMs) are a promising extension of diffusion models for applications in image-to-image translation. However, like many modern diffusion and flow models, DBMs suffer from the problem of slow inference. To address it, we propose a novel distillation technique based on the inverse bridge matching formulation and derive a tractable objective to solve it in practice. Unlike previously developed DBM distillation techniques, the proposed method can distill both conditional and unconditional types of DBMs, distill models into a one-step generator, and use only the corrupted images for training. We evaluate our approach for both conditional and unconditional types of bridge matching on a wide set of setups, including super-resolution, JPEG restoration, sketch-to-image, and other tasks, and show that our distillation technique allows us to accelerate the inference of DBMs by 4x to 100x and, depending on the particular setup, even provide better generation quality than the teacher model used.

Diffusion & Adversarial Schrödinger Bridges via Iterative Proportional Markovian Fitting

Oct 03, 2024

Abstract:The Iterative Markovian Fitting (IMF) procedure based on iterative reciprocal and Markovian projections has recently been proposed as a powerful method for solving the Schrödinger Bridge problem. However, it has been observed that for the practical implementation of this procedure, it is crucial to alternate between fitting a forward and backward time diffusion at each iteration. Such an implementation is thought to be a practical heuristic, which is required to stabilize training and obtain good results in applications such as unpaired domain translation. In our work, we show that this heuristic closely connects with the pioneering approaches for the Schrödinger Bridge based on the Iterative Proportional Fitting (IPF) procedure. Namely, we find that the practical implementation of IMF is, in fact, a combination of IMF and IPF procedures, and we call this combination the Iterative Proportional Markovian Fitting (IPMF) procedure. We show both theoretically and practically that this combined IPMF procedure can converge under more general settings, thus showing that the IPMF procedure opens a door towards developing a unified framework for solving Schrödinger Bridge problems.

Adversarial Schrödinger Bridge Matching

May 23, 2024

Abstract:The Schrödinger Bridge (SB) problem offers a powerful framework for combining optimal transport and diffusion models. A promising recent approach to solve the SB problem is the Iterative Markovian Fitting (IMF) procedure, which alternates between Markovian and reciprocal projections of continuous-time stochastic processes. However, the model built by the IMF procedure has a long inference time due to using many steps of numerical solvers for stochastic differential equations. To address this limitation, we propose a novel Discrete-time IMF (D-IMF) procedure in which learning of stochastic processes is replaced by learning just a few transition probabilities in discrete time. Its great advantage is that in practice it can be naturally implemented using the Denoising Diffusion GAN (DD-GAN), an already well-established adversarial generative modeling technique. We show that our D-IMF procedure can provide the same quality of unpaired domain translation as the IMF, using only several generation steps instead of hundreds.

Light and Optimal Schrödinger Bridge Matching

Feb 05, 2024

Abstract:Schrödinger Bridges (SB) have recently gained the attention of the ML community as a promising extension of classic diffusion models which is also interconnected with Entropic Optimal Transport (EOT). Recent solvers for SB exploit the pervasive bridge matching procedures. Such procedures aim to recover a stochastic process transporting the mass between distributions given only a transport plan between them. In particular, given the EOT plan, these procedures can be adapted to solve SB. This fact is heavily exploited by recent works, giving rise to matching-based SB solvers. The cornerstone here is recovering the EOT plan: recent works either use heuristic approximations (e.g., the minibatch OT) or establish iterative matching procedures which by design accumulate error during training. We address these limitations and propose a novel procedure to learn SB which we call the optimal Schrödinger bridge matching. It exploits the optimal parameterization of the diffusion process and provably recovers the SB process (a) with a single bridge matching step and (b) with an arbitrary transport plan as the input. Furthermore, we show that the optimal bridge matching objective coincides with the recently discovered energy-based modeling (EBM) objectives to learn EOT/SB. Inspired by this observation, we develop a light solver (which we call LightSB-M) to implement optimal matching in practice using the Gaussian mixture parameterization of the Schrödinger potential. We experimentally showcase the performance of our solver in a range of practical tasks. The code for the LightSB-M solver can be found at https://github.com/SKholkin/LightSB-Matching.

Light Schrödinger Bridge

Oct 02, 2023

Abstract:Despite the recent advances in the field of computational Schrödinger Bridges (SB), most existing SB solvers are still heavyweight and require complex optimization of several neural networks. It turns out that there is no principal solver which plays the role of a simple-yet-effective baseline for SB just like, e.g., the $k$-means method in clustering, logistic regression in classification, or the Sinkhorn algorithm in discrete optimal transport. We address this issue and propose a novel fast and simple SB solver. Our development is a smart combination of two ideas which recently appeared in the field: (a) parameterization of the Schrödinger potentials with sum-exp quadratic functions and (b) viewing the log-Schrödinger potentials as the energy functions. We show that, combined together, these ideas yield a lightweight, simulation-free and theoretically justified SB solver with a simple, straightforward optimization objective. As a result, it allows solving SB in moderate dimensions in a matter of minutes on CPU without painful hyperparameter selection. Our light solver resembles the Gaussian mixture model which is widely used for density estimation. Inspired by this similarity, we also prove an important theoretical result showing that our light solver is a universal approximator of SBs. The code for the LightSB solver can be found at https://github.com/ngushchin/LightSB
