Nicolas Longepe

European Space Agency Phi-Lab, Frascati, Italy

Seeing Soil from Space: Towards Robust and Scalable Remote Soil Nutrient Analysis

Dec 10, 2025

Abstract:Environmental variables are increasingly affecting agricultural decision-making, yet accessible and scalable tools for soil assessment remain limited. This study presents a robust and scalable modeling system for estimating soil properties in croplands, including soil organic carbon (SOC), total nitrogen (N), available phosphorus (P), exchangeable potassium (K), and pH, using remote sensing data and environmental covariates. The system employs a hybrid modeling approach that combines indirect modeling of soil through proxies and drivers with direct spectral modeling. We extend current approaches by using interpretable physics-informed covariates derived from radiative transfer models (RTMs) and complex, nonlinear embeddings from a foundation model. We validate the system on a harmonized dataset that covers Europe's cropland soils across diverse pedoclimatic zones. Evaluation is conducted under a robust validation framework that enforces strict spatial blocking, stratified splits, and statistically distinct train-test sets, which deliberately make the evaluation harder and produce more realistic error estimates for unseen regions. The models achieved their highest accuracy for SOC and N. This performance held across unseen locations, under both spatial cross-validation and an independent test set. SOC obtained an MAE of 5.12 g/kg and a CCC of 0.77, and N obtained an MAE of 0.44 g/kg and a CCC of 0.77. We also assess uncertainty through conformal calibration, achieving 90 percent coverage at the target confidence level. This study contributes to the digital advancement of agriculture through the application of scalable, data-driven soil analysis frameworks that can be extended to related domains requiring quantitative soil evaluation, such as carbon markets.
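The abstract reports MAE and CCC without defining them. As a minimal sketch, assuming CCC refers to Lin's concordance correlation coefficient computed with population (1/n) moments, the two metrics could be calculated as follows. The function names `mae` and `ccc` and the sample values are illustrative and do not come from the paper.

```python
from statistics import fmean


def mae(y_true, y_pred):
    """Mean absolute error between observed and predicted values."""
    return fmean(abs(t - p) for t, p in zip(y_true, y_pred))


def ccc(y_true, y_pred):
    """Lin's concordance correlation coefficient (assumed definition).

    Uses population (1/n) variance and covariance. Unlike Pearson's r,
    it penalizes a systematic offset between predictions and observations.
    """
    n = len(y_true)
    mean_t, mean_p = fmean(y_true), fmean(y_pred)
    var_t = sum((t - mean_t) ** 2 for t in y_true) / n
    var_p = sum((p - mean_p) ** 2 for p in y_pred) / n
    cov = sum((t - mean_t) * (p - mean_p) for t, p in zip(y_true, y_pred)) / n
    return 2 * cov / (var_t + var_p + (mean_t - mean_p) ** 2)


# Hypothetical SOC values in g/kg, for illustration only.
observed = [1.0, 2.0, 3.0]
shifted = [2.0, 3.0, 4.0]  # perfectly correlated but biased by +1

print(mae(observed, shifted))
print(ccc(observed, shifted))
```

In this toy case the predictions are perfectly correlated with the observations, yet the constant bias pulls CCC well below 1, which is why the metric is often preferred over plain correlation for agreement.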

GEO-Bench-2: From Performance to Capability, Rethinking Evaluation in Geospatial AI

Nov 19, 2025

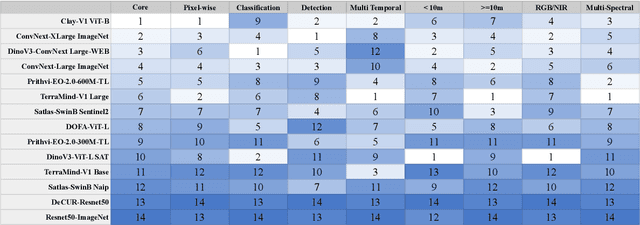

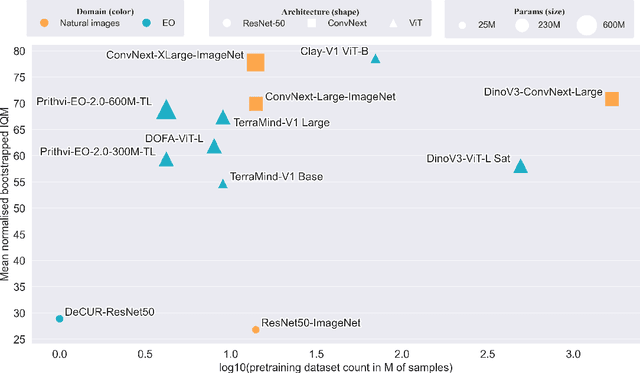

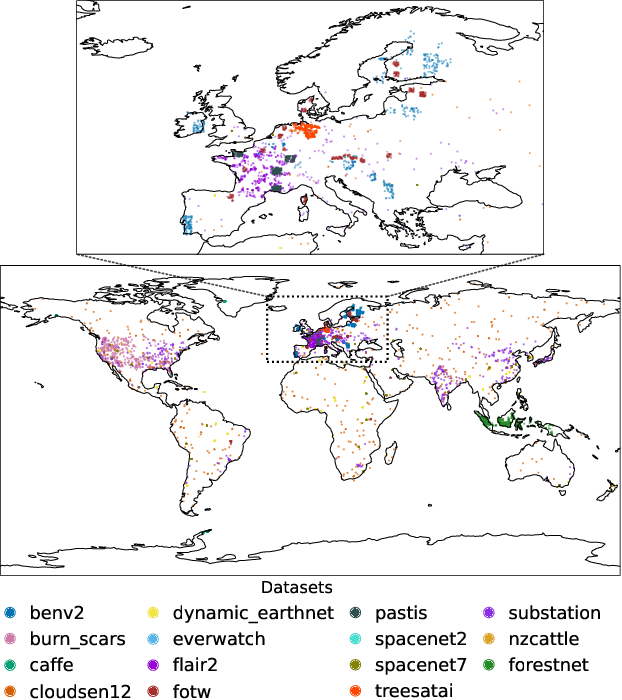

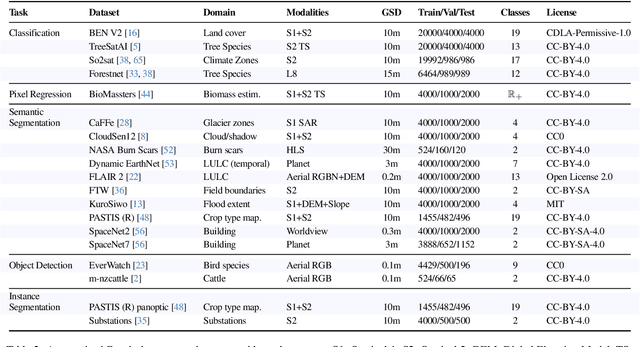

Abstract:Geospatial Foundation Models (GeoFMs) are transforming Earth Observation (EO), but evaluation lacks standardized protocols. GEO-Bench-2 addresses this with a comprehensive framework spanning classification, segmentation, regression, object detection, and instance segmentation across 19 permissively-licensed datasets. We introduce "capability" groups to rank models on datasets that share common characteristics (e.g., resolution, bands, temporality). This enables users to identify which models excel in each capability and determine which areas need improvement in future work. To support both fair comparison and methodological innovation, we define a prescriptive yet flexible evaluation protocol. This not only ensures consistency in benchmarking but also facilitates research into model adaptation strategies, a key and open challenge in advancing GeoFMs for downstream tasks. Our experiments show that no single model dominates across all tasks, confirming the specificity of the choices made during architecture design and pretraining. While models pretrained on natural images (ConvNext ImageNet, DINO V3) excel on high-resolution tasks, EO-specific models (TerraMind, Prithvi, and Clay) outperform them on multispectral applications such as agriculture and disaster response. These findings demonstrate that optimal model choice depends on task requirements, data modalities, and constraints. This shows that the goal of a single GeoFM that performs well across all tasks remains open for future research. GEO-Bench-2 enables informed, reproducible GeoFM evaluation tailored to specific use cases. Code, data, and leaderboard for GEO-Bench-2 are publicly released under a permissive license.

CAS: Confidence Assessments of classification algorithms for Semantic segmentation of EO data

Jun 26, 2024

Abstract:Confidence assessments of semantic segmentation algorithms in remote sensing are important. It is desirable for models to know a priori whether they produce an incorrect output. Evaluations of the confidence assigned to the estimates of models for the task of classification in Earth Observation (EO) are crucial, as they can be used to achieve improved semantic segmentation performance and prevent high error rates during inference and deployment. The model we develop, the Confidence Assessments of classification algorithms for Semantic segmentation (CAS) model, performs confidence evaluations at both the segment and pixel levels, and outputs both labels and confidence. The outcome of this work has important applications. The main application is the evaluation of EO Foundation Models on semantic segmentation downstream tasks, in particular land cover classification using Copernicus Sentinel-2 satellite data. The evaluation shows that the proposed model is effective and outperforms alternative baseline models.

An Interpretable Deep Semantic Segmentation Method for Earth Observation

Oct 23, 2022

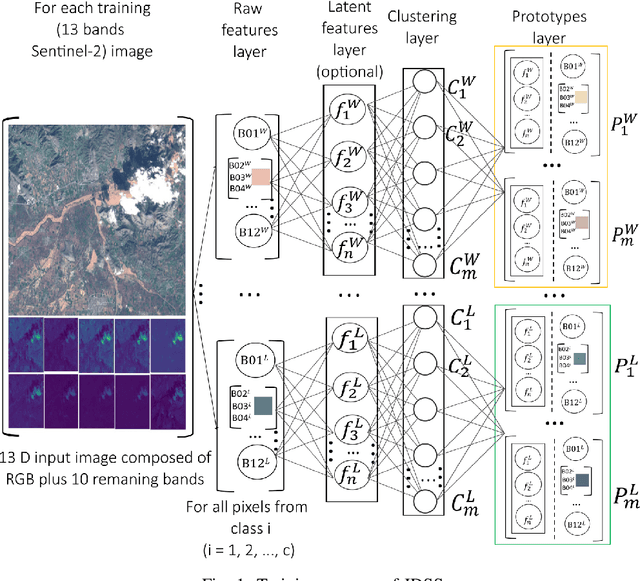

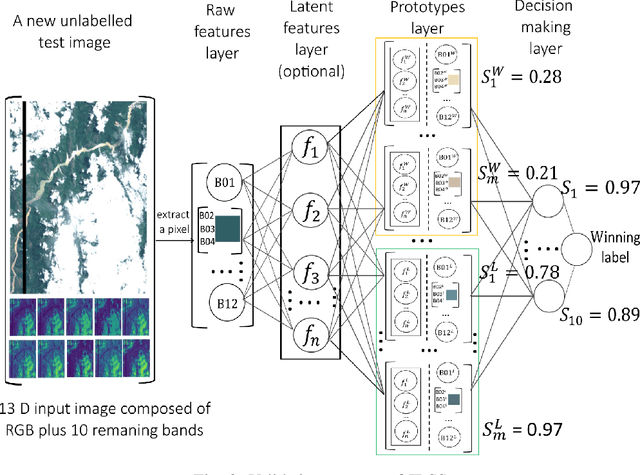

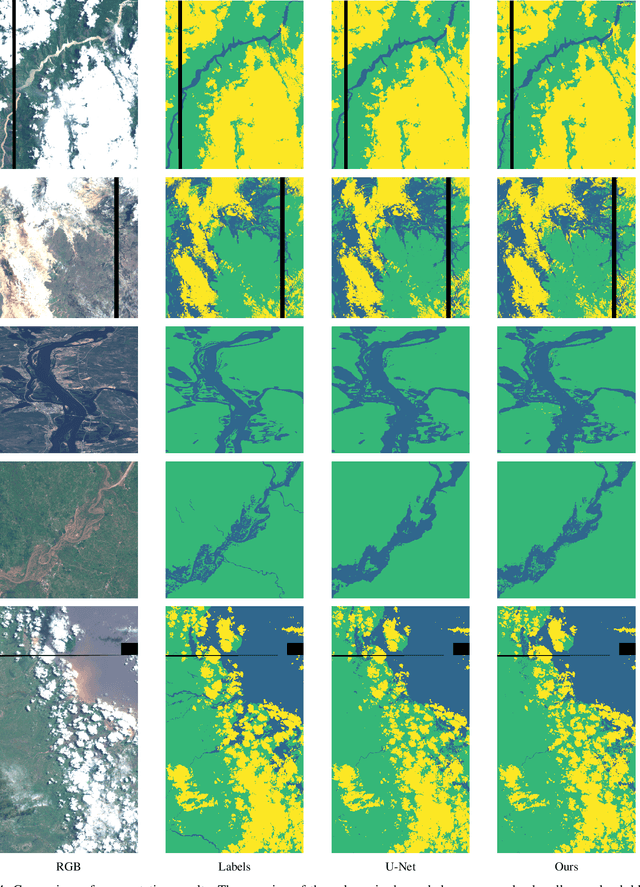

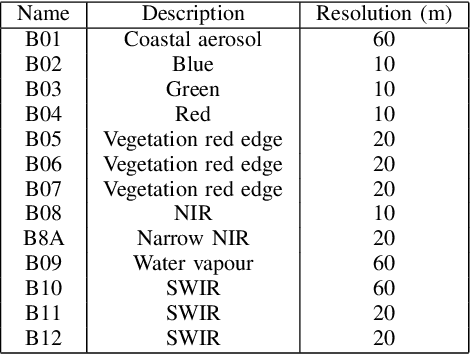

Abstract:Earth observation is fundamental for a range of human activities, including flood response, as it offers vital information to decision makers. Semantic segmentation plays a key role in mapping the raw hyper-spectral data coming from the satellites into a human-understandable form by assigning class labels to each pixel. In this paper, we introduce a prototype-based interpretable deep semantic segmentation (IDSS) method, which is highly accurate as well as interpretable. It has orders of magnitude fewer parameters than deep networks such as U-Net, and these parameters are clearly interpretable by humans. The proposed IDSS offers a transparent structure that allows users to inspect and audit the algorithm's decision. Results have demonstrated that IDSS could surpass other algorithms, including U-Net, in terms of IoU (Intersection over Union) total water and Recall total water. We used the WorldFloods dataset for our experiments and plan to use the semantic segmentation results combined with masks for permanent water to detect flood events.
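The abstract compares methods by IoU and Recall for the water class. As a rough sketch of how these two scores are conventionally computed for a binary mask, assuming flattened per-pixel labels where 1 means water, one could write the following. The function `water_iou_and_recall` and the toy masks are hypothetical and are not taken from the paper or from the WorldFloods tooling.

```python
def water_iou_and_recall(truth, pred):
    """Return (IoU, recall) for the positive class of two binary masks.

    `truth` and `pred` are equal-length sequences of 0/1 pixel labels,
    with 1 standing for water (an assumption made for this sketch).
    """
    tp = sum(1 for t, p in zip(truth, pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(truth, pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(truth, pred) if t == 1 and p == 0)
    union = tp + fp + fn
    # Guard against empty masks so the sketch never divides by zero.
    iou = tp / union if union else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return iou, recall


# Toy flattened masks, for illustration only.
truth_mask = [1, 1, 0, 0]
pred_mask = [1, 0, 1, 0]
iou, recall = water_iou_and_recall(truth_mask, pred_mask)
print(iou, recall)
```

IoU penalizes both missed water pixels and false alarms, while recall only measures how much of the true water was found, so reporting both gives a fuller picture for flood mapping.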

Rain Rate Estimation with SAR using NEXRAD measurements with Convolutional Neural Networks

Jul 15, 2022

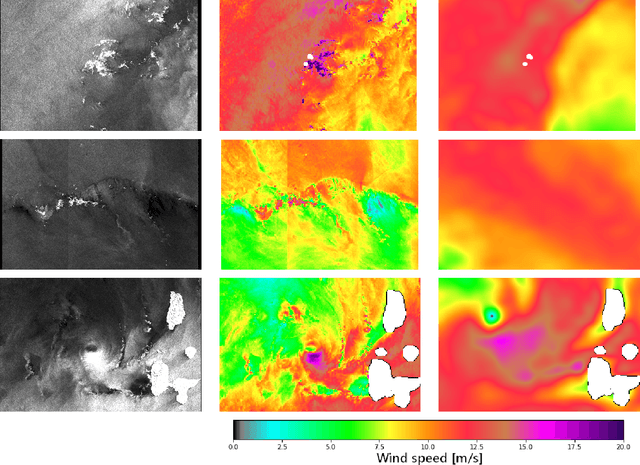

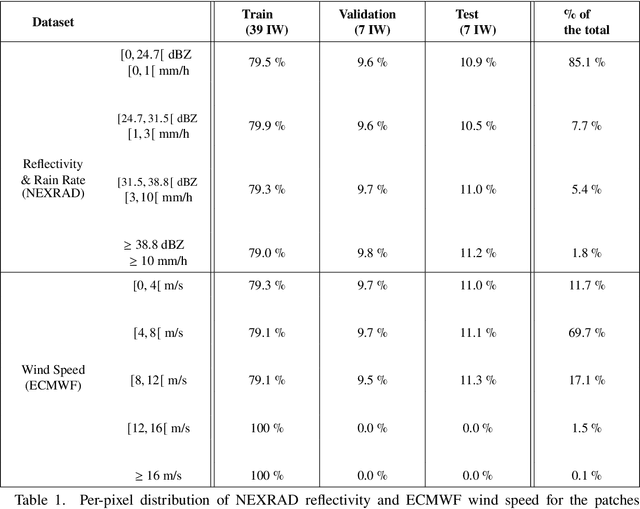

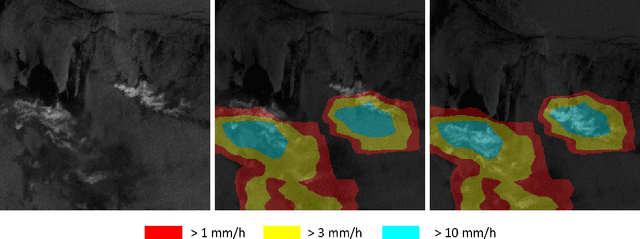

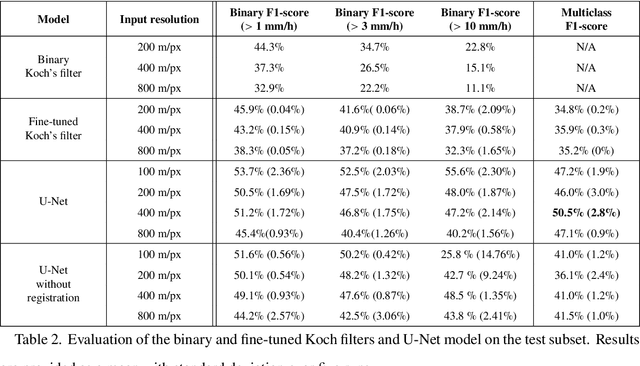

Abstract:Remote sensing of rainfall events is critical for both operational and scientific needs, including weather forecasting, extreme flood mitigation, and water cycle monitoring. Ground-based weather radars, such as NOAA's Next-Generation Radar (NEXRAD), provide reflectivity and precipitation measurements of rainfall events. However, the observation range of such radars is limited to a few hundred kilometers, prompting the exploration of other remote sensing methods, particularly over the open ocean, which represents large areas not covered by land-based radars. For a number of decades, C-band SAR imagery such as Sentinel-1 imagery has been known to exhibit rainfall signatures over the sea surface. However, the development of SAR-derived rainfall products remains a challenge. Here we propose a deep learning approach to extract rainfall information from SAR imagery. We demonstrate that a convolutional neural network, such as U-Net, trained on a colocated and preprocessed Sentinel-1/NEXRAD dataset clearly outperforms state-of-the-art filtering schemes. Our results indicate high performance in segmenting precipitation regimes, delineated by thresholds at 1, 3, and 10 mm/h. Compared to current methods that rely on Koch filters to draw binary rainfall maps, these multi-threshold learning-based models can provide rainfall estimation for higher wind speeds and thus may be of great interest for data assimilation in weather forecasting or for improving the qualification of SAR-derived wind field data.
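To make the multi-threshold labeling concrete, the sketch below shows one plausible way to turn rain rates into regime classes using the thresholds at 1, 3, and 10 mm/h named in the abstract. Whether each threshold is inclusive, and the helper names `rain_regime` and `threshold_masks`, are assumptions of this illustration and are not details given in the paper.

```python
from bisect import bisect_right

# Thresholds in mm/h, as quoted in the abstract.
THRESHOLDS_MM_H = (1.0, 3.0, 10.0)


def rain_regime(rate_mm_h, thresholds=THRESHOLDS_MM_H):
    """Return the number of thresholds met or exceeded (0 to 3).

    Assumes a rate equal to a threshold belongs to the higher regime.
    """
    return bisect_right(thresholds, rate_mm_h)


def threshold_masks(rates, thresholds=THRESHOLDS_MM_H):
    """Build one binary mask per threshold (True where rate >= threshold)."""
    return {t: [r >= t for r in rates] for t in thresholds}


# Hypothetical rain rates in mm/h, for illustration only.
rates = [0.2, 1.0, 2.9, 3.0, 15.0]
print([rain_regime(r) for r in rates])
print(threshold_masks(rates)[3.0])
```

The per-threshold masks correspond to a set of nested binary segmentation targets, which is one common way to frame multi-threshold segmentation; the paper may structure its targets differently.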
