Nadav Dym

Technion - Israel Institute of Technology

Toward bilipshiz geometric models

Nov 13, 2025

Abstract: Many neural networks for point clouds are, by design, invariant to the symmetries of this datatype: permutations and rigid motions. The purpose of this paper is to examine whether such networks preserve natural symmetry-aware distances on the point cloud spaces, through the notion of bi-Lipschitz equivalence. This inquiry is motivated by recent work in the equivariant learning literature which highlights the advantages of bi-Lipschitz models in other scenarios. We consider two symmetry-aware metrics on point clouds: (a) the Procrustes Matching (PM) metric and (b) Hard Gromov-Wasserstein distances. We show that these two distances themselves are not bi-Lipschitz equivalent, and as a corollary deduce that popular invariant networks for point clouds are not bi-Lipschitz with respect to the PM metric. We then show how these networks can be modified so that they do obtain bi-Lipschitz guarantees. Finally, we provide initial experiments showing the advantage of the proposed bi-Lipschitz model over standard invariant models, for the task of finding correspondences between 3D point clouds.

Spectral Graph Neural Networks are Incomplete on Graphs with a Simple Spectrum

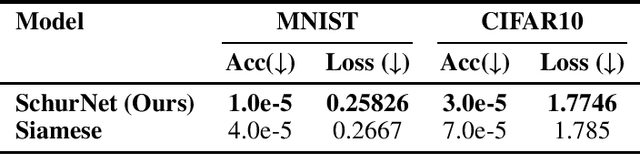

Jun 05, 2025

Abstract: Spectral features are widely incorporated within Graph Neural Networks (GNNs) to improve their expressive power, or their ability to distinguish among non-isomorphic graphs. One popular example is the usage of graph Laplacian eigenvectors for positional encoding in MPNNs and Graph Transformers. The expressive power of such Spectrally-enhanced GNNs (SGNNs) is usually evaluated via the k-WL graph isomorphism test hierarchy and homomorphism counting. Yet, these frameworks align poorly with the graph spectra, yielding limited insight into SGNNs' expressive power. We leverage a well-studied paradigm of classifying graphs by their largest eigenvalue multiplicity to introduce an expressivity hierarchy for SGNNs. We then prove that many SGNNs are incomplete even on graphs with distinct eigenvalues. To mitigate this deficiency, we adapt rotation equivariant neural networks to the graph spectra setting to propose a method to provably improve SGNNs' expressivity on simple spectrum graphs. We empirically verify our theoretical claims via an image classification experiment on the MNIST Superpixel dataset and eigenvector canonicalization on graphs from ZINC.

On the (Non) Injectivity of Piecewise Linear Janossy Pooling

May 26, 2025

Abstract: Multiset functions, which are functions that map multisets to vectors, are a fundamental tool in the construction of neural networks for multisets and graphs. To guarantee that the vector representation of the multiset is faithful, it is often desirable to have multiset mappings that are both injective and bi-Lipschitz. Currently, there are several constructions of multiset functions achieving both these guarantees, leading to improved performance in some tasks but often also to higher compute time than standard constructions. Accordingly, it is natural to inquire whether simpler multiset functions achieving the same guarantees are available. In this paper, we make a large step towards giving a negative answer to this question. We consider the family of k-ary Janossy pooling, which includes many of the most popular multiset models, and prove that no piecewise linear Janossy pooling function can be injective. On the positive side, we show that when restricted to multisets without multiplicities, even simple deep-sets models suffice for injectivity and bi-Lipschitzness.

The stability of generalized phase retrieval problem over compact groups

May 07, 2025

Abstract: The generalized phase retrieval problem over compact groups aims to recover a set of matrices, representing an unknown signal, from their associated Gram matrices, leveraging prior structural knowledge about the signal. This framework generalizes the classical phase retrieval problem, which reconstructs a signal from the magnitudes of its Fourier transform, to a richer setting involving non-abelian compact groups. In this broader context, the unknown phases in Fourier space are replaced by unknown orthogonal matrices that arise from the action of a compact group on a finite-dimensional vector space. This problem is primarily motivated by advances in electron microscopy for determining the 3D structure of biological macromolecules from highly noisy observations. To capture realistic assumptions from machine learning and signal processing, we model the signal as belonging to one of several broad structural families: a generic linear subspace, a sparse representation in a generic basis, the output of a generic ReLU neural network, or a generic low-dimensional manifold. Our main result shows that, under mild conditions, the generalized phase retrieval problem not only admits a unique solution (up to inherent group symmetries), but also satisfies a bi-Lipschitz property. This implies robustness to both noise and model mismatch, an essential requirement for practical use, especially when measurements are severely corrupted by noise. These findings provide theoretical support for a wide class of scientific problems under modern structural assumptions, and they offer strong foundations for developing robust algorithms in high-noise regimes.

Fourier Sliced-Wasserstein Embedding for Multisets and Measures

Apr 03, 2025

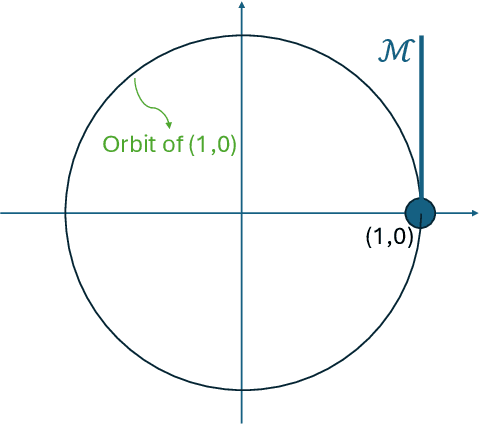

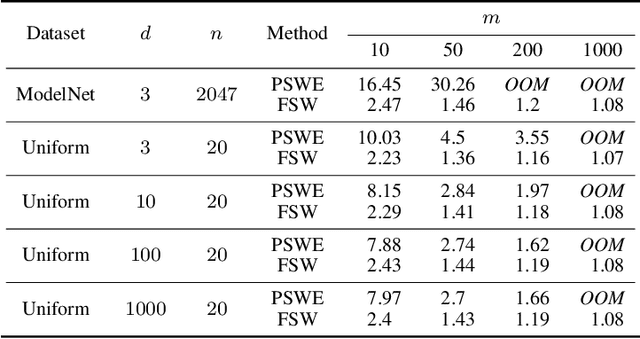

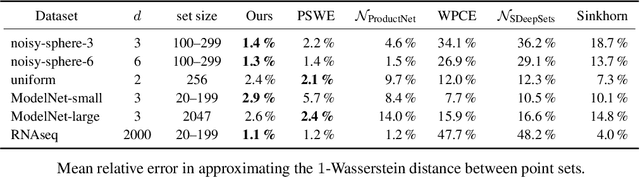

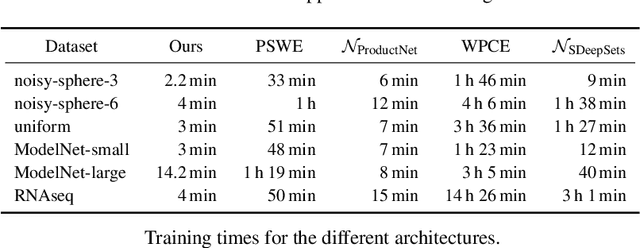

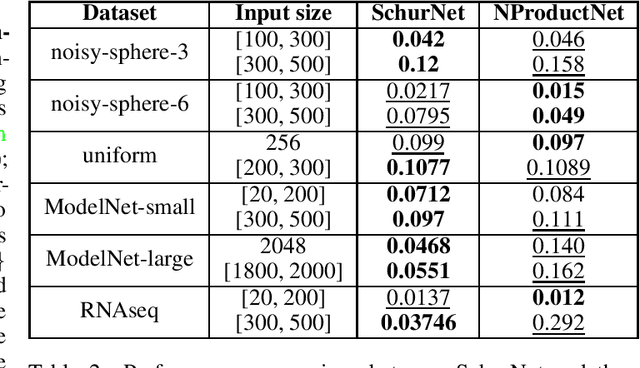

Abstract: We present the Fourier Sliced-Wasserstein (FSW) embedding - a novel method to embed multisets and measures over $\mathbb{R}^d$ into Euclidean space. Our proposed embedding approximately preserves the sliced Wasserstein distance on distributions, thereby yielding geometrically meaningful representations that better capture the structure of the input. Moreover, it is injective on measures and bi-Lipschitz on multisets - a significant advantage over prevalent methods based on sum- or max-pooling, which are provably not bi-Lipschitz, and, in many cases, not even injective. The required output dimension for these guarantees is near-optimal: roughly $2 N d$, where $N$ is the maximal input multiset size. Furthermore, we prove that it is impossible to embed distributions over $\mathbb{R}^d$ into Euclidean space in a bi-Lipschitz manner. Thus, the metric properties of our embedding are, in a sense, the best possible. Through numerical experiments, we demonstrate that our method yields superior multiset representations that improve performance in practical learning tasks. Specifically, we show that (a) a simple combination of the FSW embedding with an MLP achieves state-of-the-art performance in learning the (non-sliced) Wasserstein distance; and (b) replacing max-pooling with the FSW embedding makes PointNet significantly more robust to parameter reduction, with only minor performance degradation even after a 40-fold reduction.

Bi-Lipschitz Ansatz for Anti-Symmetric Functions

Mar 06, 2025

Abstract: Motivated by applications for simulating quantum many-body functions, we propose a new universal ansatz for approximating antisymmetric functions. The main advantage of this ansatz over previous alternatives is that it is bi-Lipschitz with respect to a naturally defined metric. As a result, we are able to obtain quantitative approximation results for Lipschitz continuous antisymmetric functions. Moreover, we provide preliminary experimental evidence of the improved performance of this ansatz for learning antisymmetric functions.

FSW-GNN: A Bi-Lipschitz WL-Equivalent Graph Neural Network

Oct 10, 2024

Abstract: Many of the most popular graph neural networks fall into the category of message-passing neural networks (MPNNs). Famously, MPNNs' ability to distinguish between graphs is limited to graphs separable by the Weisfeiler-Leman (WL) graph isomorphism test, and the strongest MPNNs, in terms of separation power, are WL-equivalent. Recently, it was shown that the quality of separation provided by standard WL-equivalent MPNNs can be very low, resulting in WL-separable graphs being mapped to very similar, hardly distinguishable features. This paper addresses this issue by seeking bi-Lipschitz continuity guarantees for MPNNs. We demonstrate that, in contrast with standard summation-based MPNNs, which lack bi-Lipschitz properties, our proposed model provides a bi-Lipschitz graph embedding with respect to two standard graph metrics. Empirically, we show that our MPNN is competitive with standard MPNNs for several graph learning tasks and is far more accurate on long-range tasks affected by over-squashing.

Revisiting Multi-Permutation Equivariance through the Lens of Irreducible Representations

Oct 09, 2024

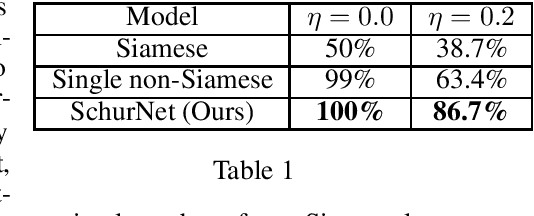

Abstract: This paper explores the characterization of equivariant linear layers for representations of permutations and related groups. Unlike traditional approaches, which address these problems using parameter-sharing, we consider an alternative methodology based on irreducible representations and Schur's lemma. Using this methodology, we obtain an alternative derivation for existing models like DeepSets, 2-IGN graph equivariant networks, and Deep Weight Space (DWS) networks. The derivation for DWS networks is significantly simpler than that of previous results. Next, we extend our approach to unaligned symmetric sets, where equivariance to the wreath product of groups is required. Previous works have addressed this problem in a rather restrictive setting, in which almost all wreath equivariant layers are Siamese. In contrast, we give a full characterization of layers in this case and show that there is a vast number of additional non-Siamese layers in some settings. We also show empirically that these additional non-Siamese layers can improve performance in tasks like graph anomaly detection, weight space alignment, and learning Wasserstein distances. Our code is available on GitHub at https://github.com/yonatansverdlov/Irreducible-Representations-of-Deep-Weight-Spaces.

On the Expressive Power of Sparse Geometric MPNNs

Jul 02, 2024

Abstract: Motivated by applications in chemistry and other sciences, we study the expressive power of message-passing neural networks for geometric graphs, whose node features correspond to 3-dimensional positions. Recent work has shown that such models can separate generic pairs of non-equivalent geometric graphs, though they may fail to separate some rare and complicated instances. However, these results assume a fully connected graph, where each node possesses complete knowledge of all other nodes. In contrast, in applications each node often possesses knowledge of only a small number of nearest neighbors. This paper shows that generic pairs of non-equivalent geometric graphs can be separated by message-passing networks with rotation equivariant features as long as the underlying graph is connected. When only invariant intermediate features are allowed, generic separation is guaranteed for generically globally rigid graphs. We introduce a simple architecture, EGENNET, which achieves our theoretical guarantees and compares favorably with alternative architectures on synthetic and chemical benchmarks.

On the Hölder Stability of Multiset and Graph Neural Networks

Jun 11, 2024

Abstract: Famously, multiset neural networks based on sum-pooling can separate all distinct multisets, and as a result can be used by message passing neural networks (MPNNs) to separate all pairs of graphs that can be separated by the 1-WL graph isomorphism test. However, the quality of this separation may be very weak, to the extent that the embeddings of "separable" multisets and graphs might even be considered identical when using fixed finite precision. In this work, we propose to fully analyze the separation quality of multiset models and MPNNs via a novel adaptation of Lipschitz and Hölder continuity to parametric functions. We prove that common sum-based models are lower-Hölder continuous, with a Hölder exponent that decays rapidly with the network's depth. Our analysis leads to adversarial examples of graphs which can be separated by three 1-WL iterations, but cannot be separated in practice by standard maximally powerful MPNNs. To remedy this, we propose two novel MPNNs with improved separation quality, one of which is lower Lipschitz continuous. We show these MPNNs can easily classify our adversarial examples, and compare favorably with standard MPNNs on standard graph learning tasks.
