MyungJae Shin

Cooperative Multi-Agent Deep Reinforcement Learning for Reliable Surveillance via Autonomous Multi-UAV Control

Jan 15, 2022

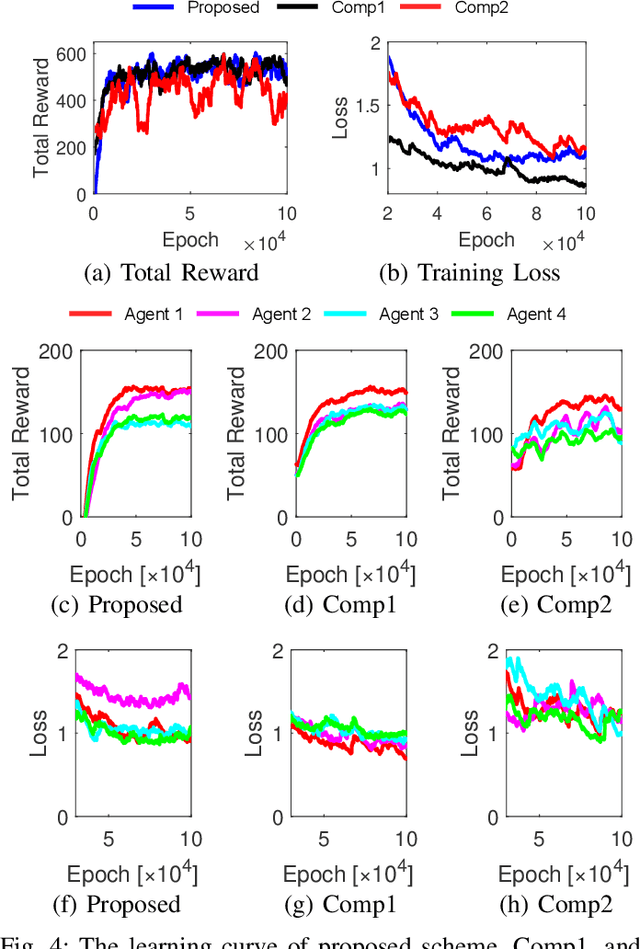

Abstract:CCTV-based surveillance using unmanned aerial vehicles (UAVs) is considered a key technology for security in smart city environments. This paper creates a case where the UAVs with CCTV-cameras fly over the city area for flexible and reliable surveillance services. UAVs should be deployed to cover a large area while minimize overlapping and shadow areas for a reliable surveillance system. However, the operation of UAVs is subject to high uncertainty, necessitating autonomous recovery systems. This work develops a multi-agent deep reinforcement learning-based management scheme for reliable industry surveillance in smart city applications. The core idea this paper employs is autonomously replenishing the UAV's deficient network requirements with communications. Via intensive simulations, our proposed algorithm outperforms the state-of-the-art algorithms in terms of surveillance coverage, user support capability, and computational costs.

Parallelized and Randomized Adversarial Imitation Learning for Safety-Critical Self-Driving Vehicles

Dec 26, 2021

Abstract:Self-driving cars and autonomous driving research has been receiving considerable attention as major promising prospects in modern artificial intelligence applications. According to the evolution of advanced driver assistance system (ADAS), the design of self-driving vehicle and autonomous driving systems becomes complicated and safety-critical. In general, the intelligent system simultaneously and efficiently activates ADAS functions. Therefore, it is essential to consider reliable ADAS function coordination to control the driving system, safely. In order to deal with this issue, this paper proposes a randomized adversarial imitation learning (RAIL) algorithm. The RAIL is a novel derivative-free imitation learning method for autonomous driving with various ADAS functions coordination; and thus it imitates the operation of decision maker that controls autonomous driving with various ADAS functions. The proposed method is able to train the decision maker that deals with the LIDAR data and controls the autonomous driving in multi-lane complex highway environments. The simulation-based evaluation verifies that the proposed method achieves desired performance.

Adversarial Imitation Learning via Random Search

Aug 21, 2020

Abstract:Developing agents that can perform challenging complex tasks is the goal of reinforcement learning. The model-free reinforcement learning has been considered as a feasible solution. However, the state of the art research has been to develop increasingly complicated techniques. This increasing complexity makes the reconstruction difficult. Furthermore, the problem of reward dependency is still exists. As a result, research on imitation learning, which learns policy from a demonstration of experts, has begun to attract attention. Imitation learning directly learns policy based on data on the behavior of the experts without the explicit reward signal provided by the environment. However, imitation learning tries to optimize policies based on deep reinforcement learning such as trust region policy optimization. As a result, deep reinforcement learning based imitation learning also poses a crisis of reproducibility. The issue of complex model-free model has received considerable critical attention. A derivative-free optimization based reinforcement learning and the simplification on policies obtain competitive performance on the dynamic complex tasks. The simplified policies and derivative free methods make algorithm be simple. The reconfiguration of research demo becomes easy. In this paper, we propose an imitation learning method that takes advantage of the derivative-free optimization with simple linear policies. The proposed method performs simple random search in the parameter space of policies and shows computational efficiency. Experiments in this paper show that the proposed model, without a direct reward signal from the environment, obtains competitive performance on the MuJoCo locomotion tasks.

XOR Mixup: Privacy-Preserving Data Augmentation for One-Shot Federated Learning

Jun 09, 2020

Abstract:User-generated data distributions are often imbalanced across devices and labels, hampering the performance of federated learning (FL). To remedy to this non-independent and identically distributed (non-IID) data problem, in this work we develop a privacy-preserving XOR based mixup data augmentation technique, coined XorMixup, and thereby propose a novel one-shot FL framework, termed XorMixFL. The core idea is to collect other devices' encoded data samples that are decoded only using each device's own data samples. The decoding provides synthetic-but-realistic samples until inducing an IID dataset, used for model training. Both encoding and decoding procedures follow the bit-wise XOR operations that intentionally distort raw samples, thereby preserving data privacy. Simulation results corroborate that XorMixFL achieves up to 17.6% higher accuracy than Vanilla FL under a non-IID MNIST dataset.

Randomized Adversarial Imitation Learning for Autonomous Driving

May 13, 2019

Abstract:With the evolution of various advanced driver assistance system (ADAS) platforms, the design of autonomous driving system is becoming more complex and safety-critical. The autonomous driving system simultaneously activates multiple ADAS functions; and thus it is essential to coordinate various ADAS functions. This paper proposes a randomized adversarial imitation learning (RAIL) method that imitates the coordination of autonomous vehicle equipped with advanced sensors. The RAIL policies are trained through derivative-free optimization for the decision maker that coordinates the proper ADAS functions, e.g., smart cruise control and lane keeping system. Especially, the proposed method is also able to deal with the LIDAR data and makes decisions in complex multi-lane highways and multi-agent environments.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge