Mor Vered

Probabilistic Active Goal Recognition

Jul 29, 2025

Abstract:In multi-agent environments, effective interaction hinges on understanding the beliefs and intentions of other agents. While prior work on goal recognition has largely treated the observer as a passive reasoner, Active Goal Recognition (AGR) focuses on strategically gathering information to reduce uncertainty. We adopt a probabilistic framework for Active Goal Recognition and propose an integrated solution that combines a joint belief update mechanism with a Monte Carlo Tree Search (MCTS) algorithm, allowing the observer to plan efficiently and infer the actor's hidden goal without requiring domain-specific knowledge. Through comprehensive empirical evaluation in a grid-based domain, we show that our joint belief update significantly outperforms passive goal recognition, and that our domain-independent MCTS performs comparably to our strong domain-specific greedy baseline. These results establish our solution as a practical and robust framework for goal inference, advancing the field toward more interactive and adaptive multi-agent systems.

Towards Explainable Goal Recognition Using Weight of Evidence (WoE): A Human-Centered Approach

Sep 18, 2024

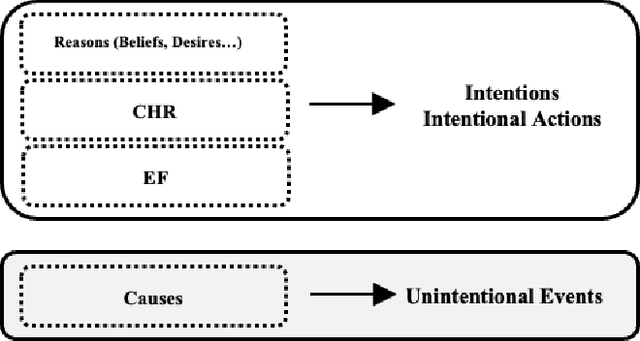

Abstract:Goal recognition (GR) involves inferring an agent's unobserved goal from a sequence of observations. This is a critical problem in AI with diverse applications. Traditionally, GR has been addressed using 'inference to the best explanation' or abduction, where hypotheses about the agent's goals are generated as the most plausible explanations for observed behavior. Alternatively, some approaches enhance interpretability by ensuring that an agent's behavior aligns with an observer's expectations or by making the reasoning behind decisions more transparent. In this work, we tackle a different challenge: explaining the GR process in a way that is comprehensible to humans. We introduce and evaluate an explainable model for goal recognition (GR) agents, grounded in the theoretical framework and cognitive processes underlying human behavior explanation. Drawing on insights from two human-agent studies, we propose a conceptual framework for human-centered explanations of GR. Using this framework, we develop the eXplainable Goal Recognition (XGR) model, which generates explanations for both why and why not questions. We evaluate the model computationally across eight GR benchmarks and through three user studies. The first study assesses the efficiency of generating human-like explanations within the Sokoban game domain, the second examines perceived explainability in the same domain, and the third evaluates the model's effectiveness in aiding decision-making in illegal fishing detection. Results demonstrate that the XGR model significantly enhances user understanding, trust, and decision-making compared to baseline models, underscoring its potential to improve human-agent collaboration.

NEUSIS: A Compositional Neuro-Symbolic Framework for Autonomous Perception, Reasoning, and Planning in Complex UAV Search Missions

Sep 16, 2024

Abstract:This paper addresses the problem of autonomous UAV search missions, where a UAV must locate specific Entities of Interest (EOIs) within a time limit, based on brief descriptions in large, hazard-prone environments with keep-out zones. The UAV must perceive, reason, and make decisions with limited and uncertain information. We propose NEUSIS, a compositional neuro-symbolic system designed for interpretable UAV search and navigation in realistic scenarios. NEUSIS integrates neuro-symbolic visual perception, reasoning, and grounding (GRiD) to process raw sensory inputs, maintains a probabilistic world model for environment representation, and uses a hierarchical planning component (SNaC) for efficient path planning. Experimental results from simulated urban search missions using AirSim and Unreal Engine show that NEUSIS outperforms a state-of-the-art (SOTA) vision-language model and a SOTA search planning model in success rate, search efficiency, and 3D localization. These results demonstrate the effectiveness of our compositional neuro-symbolic approach in handling complex, real-world scenarios, making it a promising solution for autonomous UAV systems in search missions.

Explainable Goal Recognition: A Framework Based on Weight of Evidence

Mar 09, 2023

Abstract:We introduce and evaluate an eXplainable Goal Recognition (XGR) model that uses the Weight of Evidence (WoE) framework to explain goal recognition problems. Our model provides human-centered explanations that answer why? and why not? questions. We computationally evaluate the performance of our system over eight different domains. Using a human behavioral study to obtain the ground truth from human annotators, we further show that the XGR model can successfully generate human-like explanations. We then report on a study with 60 participants who observe agents playing the Sokoban game and then receive explanations of the goal recognition output. We investigate the understanding participants gain from the explanations through task prediction, explanation satisfaction, and trust.
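
As a rough illustration of the Weight of Evidence idea mentioned in this abstract, the sketch below uses the standard definition WoE(h : e) = log(P(e | h) / P(e | not h)). It is not the XGR model itself: the goal names, priors, and likelihoods are made-up values, and the helper `weight_of_evidence` is a hypothetical name introduced only for this example.

```python
import math

def weight_of_evidence(goal, likelihoods, priors):
    """Standard WoE of one observation for `goal` against all other goals.

    likelihoods[g] is P(observation | g); priors[g] is P(g).
    P(observation | not goal) is the prior-weighted average over the other goals.
    """
    others = [g for g in likelihoods if g != goal]
    prior_mass = sum(priors[g] for g in others)
    p_e_given_not_goal = sum(likelihoods[g] * priors[g] for g in others) / prior_mass
    return math.log(likelihoods[goal] / p_e_given_not_goal)

# Illustrative numbers only (assumed, not taken from the paper).
priors = {"A": 1 / 3, "B": 1 / 3, "C": 1 / 3}
likelihoods = {"A": 0.6, "B": 0.2, "C": 0.2}

woe = {g: weight_of_evidence(g, likelihoods, priors) for g in priors}
```

A positive value means the observation speaks for the goal (a candidate "why" explanation), while a negative value means it speaks against it (a candidate "why not" explanation).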

Let's Make It Personal, A Challenge in Personalizing Medical Inter-Human Communication

Jul 29, 2019

Abstract:Current AI approaches have frequently been used to help personalize many aspects of medical experiences and tailor them to a specific individual's needs. However, while such systems consider medically-relevant information, they ignore socially-relevant information about how this diagnosis should be communicated and discussed with the patient. The lack of this capability may lead to miscommunication, resulting in serious implications, such as patients opting out of the best treatment. Consider a case in which the same treatment is proposed to two different individuals. The manner in which this treatment is mediated to each should be different, depending on the individual patient's history, knowledge, and mental state. While it is clear that this communication should be conveyed via a human medical expert and not a software-based system, humans are not always capable of considering all of the relevant aspects and traversing all available information. We pose the challenge of creating Intelligent Agents (IAs) to assist medical service providers (MSPs) and consumers in establishing a more personalized human-to-human dialogue. Personalizing conversations will enable patients and MSPs to reach a solution that is best for their particular situation, such that a relation of trust can be built and commitment to the outcome of the interaction is assured. We propose a four-part conceptual framework for personalized social interactions, expand on which techniques are available within current AI research, and discuss what has yet to be achieved.

Heuristic Online Goal Recognition in Continuous Domains

Sep 28, 2017

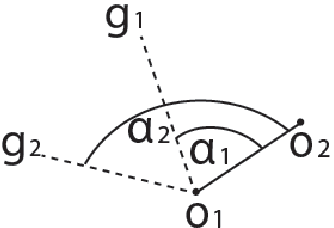

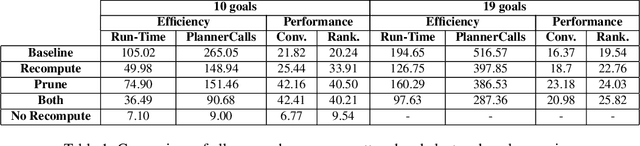

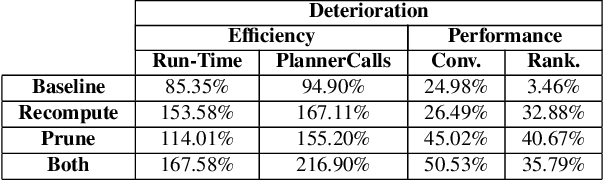

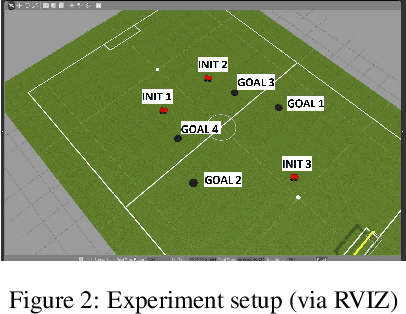

Abstract:Goal recognition is the problem of inferring the goal of an agent, based on its observed actions. An inspiring approach - plan recognition by planning (PRP) - uses off-the-shelf planners to dynamically generate plans for given goals, eliminating the need for the traditional plan library. However, the existing PRP formulation is inherently inefficient in online recognition, and cannot be used with motion planners for continuous spaces. In this paper, we utilize a different PRP formulation which allows for online goal recognition, and for application in continuous spaces. We present an online recognition algorithm, where two heuristic decision points may be used to improve run-time significantly over existing work. We specify heuristics for continuous domains, prove guarantees on their use, and empirically evaluate the algorithm over hundreds of experiments in both a 3D navigational environment and a cooperative robotic team task.
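
To make the plan-recognition-by-planning idea concrete, here is a minimal sketch under stated assumptions. The abstract does not give the ranking formula, so the cost-ratio score below (optimal cost to a goal divided by the cost of a path that passes through the observations) is an assumed, commonly used choice rather than the paper's confirmed method. A straight-line Euclidean "planner" in 2D stands in for a real motion planner, and `path_cost` and `rank_goals` are hypothetical names.

```python
import math

def path_cost(points):
    """Length of the polyline through `points` (stand-in for a planner's cost)."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

def rank_goals(start, observations, goals):
    """Score each goal by optimal cost / cost via the observations, then normalize."""
    observed = path_cost([start, *observations])
    last = observations[-1] if observations else start
    scores = {}
    for name, goal in goals.items():
        optimal = math.dist(start, goal)            # ideal plan, ignoring observations
        via_obs = observed + math.dist(last, goal)  # observed prefix plus best suffix
        scores[name] = optimal / via_obs if via_obs > 0 else 1.0
    total = sum(scores.values()) or 1.0             # guard against all-zero scores
    return {name: s / total for name, s in scores.items()}

# Illustrative scenario (assumed values): the agent moves along the x-axis.
goals = {"A": (10.0, 0.0), "B": (0.0, 10.0)}
ranking = rank_goals((0.0, 0.0), [(2.0, 0.0), (4.0, 0.0)], goals)
```

Because only the suffix from the latest observation has to be replanned at each step, a score of this kind can be updated incrementally as observations arrive, which is the property an online recognizer needs.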
