Amal Abdulrahman

Towards Explainable Goal Recognition Using Weight of Evidence (WoE): A Human-Centered Approach

Sep 18, 2024

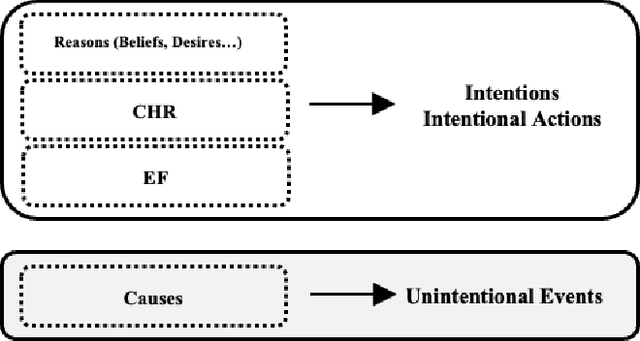

Abstract: Goal recognition (GR) involves inferring an agent's unobserved goal from a sequence of observations. This is a critical problem in AI with diverse applications. Traditionally, GR has been addressed using 'inference to the best explanation', or abduction, where hypotheses about the agent's goals are generated as the most plausible explanations for observed behavior. Alternatively, some approaches enhance interpretability by ensuring that an agent's behavior aligns with an observer's expectations or by making the reasoning behind decisions more transparent. In this work, we tackle a different challenge: explaining the GR process in a way that is comprehensible to humans. We introduce and evaluate an explainable model for GR agents, grounded in the theoretical framework and cognitive processes underlying human behavior explanation. Drawing on insights from two human-agent studies, we propose a conceptual framework for human-centered explanations of GR. Using this framework, we develop the eXplainable Goal Recognition (XGR) model, which generates explanations for both 'why' and 'why not' questions. We evaluate the model computationally across eight GR benchmarks and through three user studies. The first study assesses the efficiency of generating human-like explanations within the Sokoban game domain, the second examines perceived explainability in the same domain, and the third evaluates the model's effectiveness in aiding decision-making in illegal fishing detection. Results demonstrate that the XGR model significantly enhances user understanding, trust, and decision-making compared to baseline models, underscoring its potential to improve human-agent collaboration.

Evaluating Web Search Engines Results for Personalization and User Tracking

Nov 15, 2022

Abstract: Personalization has recently drawn attention. It occurs whenever search results are tailored so that users who issue the same search query see different results. The fact that a great many search results are manipulated in this way has troubled many people. Personalization can also contribute to the Filter Bubble effect, in which certain users are unable to access content that the search engine's algorithm deems irrelevant to them. There is a wealth of research in this area. These studies have relied on creating Google accounts that differ in one feature and issuing identical search queries from each account. The search results are often compared to determine whether they vary per account. We conducted six experiments that aim to closely inspect and identify patterns of personalization in search results and to examine how those results vary. In every experiment, three metrics are measured: the total number of hits, the first hit, and the correlation between hits. The experiments cover the following tasks. First, setting up four VPNs at different geographic locations and comparing the search results with those obtained in the UAE. Second, performing the search while logged in to and logged out of a Google account. Third, searching while connected to different networks: home, phone, and university networks. Fourth, using different search engines to issue the search queries. Fifth, using different web browsers to carry out the search. Finally, creating and training six Google accounts.
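As a rough illustration of the three metrics named in this abstract, the sketch below compares two result pages for the same query. It is not the authors' implementation: the `ResultPage` class, its field names, and the `compare` function are hypothetical, and Jaccard overlap of the result URLs is used only as an assumed stand-in for "correlation between hits", which the abstract does not define.

```python
from dataclasses import dataclass


@dataclass
class ResultPage:
    """One search result page for a query (hypothetical structure)."""
    total_hits: int   # result count reported by the search engine
    urls: list        # ranked result URLs, best first


def compare(a: ResultPage, b: ResultPage) -> dict:
    """Compare two result pages obtained for the same query."""
    set_a, set_b = set(a.urls), set(b.urls)
    union = set_a | set_b
    # Assumption: Jaccard overlap stands in for "correlation between hits".
    overlap = len(set_a & set_b) / len(union) if union else 1.0
    same_first = bool(a.urls) and bool(b.urls) and a.urls[0] == b.urls[0]
    return {
        "total_hits_diff": a.total_hits - b.total_hits,
        "same_first_hit": same_first,
        "overlap": overlap,
    }


# Example: the same query issued from two vantage points (made-up data).
baseline = ResultPage(total_hits=1000, urls=["a", "b", "c"])
via_vpn = ResultPage(total_hits=900, urls=["a", "c", "d"])
report = compare(baseline, via_vpn)
```

A rank-aware measure such as Spearman's rank correlation over the shared URLs would be an equally plausible reading of the third metric.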
