Mingjie Zeng

HD3C: Efficient Medical Data Classification for Embedded Devices

Sep 18, 2025

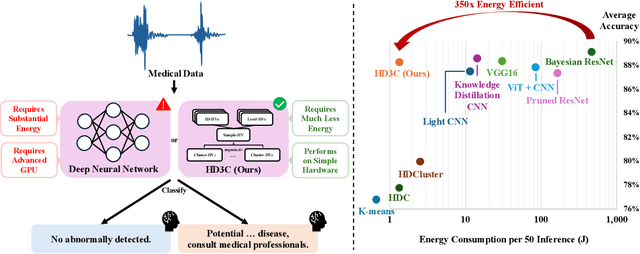

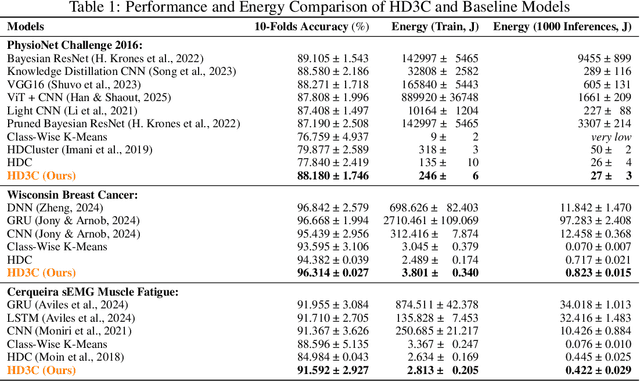

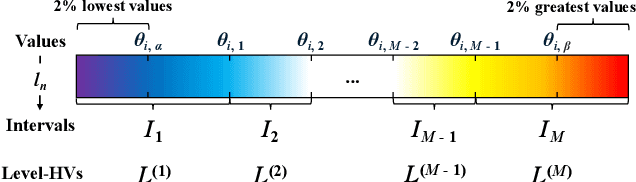

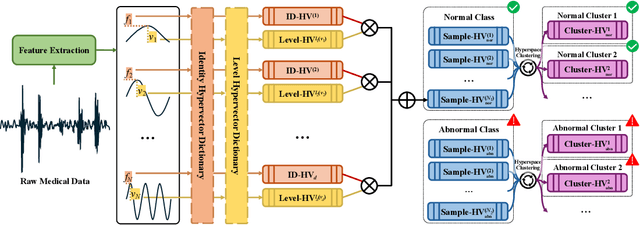

Abstract: Energy-efficient medical data classification is essential for modern disease screening, particularly in home and field healthcare where embedded devices are prevalent. While deep learning models achieve state-of-the-art accuracy, their substantial energy consumption and reliance on GPUs limit deployment on such platforms. We present Hyperdimensional Computing with Class-Wise Clustering (HD3C), a lightweight classification framework designed for low-power environments. HD3C encodes data into high-dimensional hypervectors, aggregates them into multiple cluster-specific prototypes, and performs classification through similarity search in hyperspace. We evaluate HD3C across three medical classification tasks; on heart sound classification, HD3C is $350\times$ more energy-efficient than Bayesian ResNet with less than 1% accuracy difference. Moreover, HD3C demonstrates exceptional robustness to noise, limited training data, and hardware error, supported by both theoretical analysis and empirical results, highlighting its potential for reliable deployment in real-world settings. Code is available at https://github.com/jianglanwei/HD3C.
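The abstract describes three steps: encode samples as hypervectors, aggregate each class into several cluster-specific prototypes, and classify by similarity search. The Python sketch below illustrates that pipeline under stated assumptions. It is not the HD3C code from the linked repository. Several pieces are hypothetical choices made for illustration:

- the encoder (a Gaussian random projection followed by sign binarization);
- the per-class cosine k-means with farthest-point initialization;
- all function names (`make_projection`, `encode`, `cluster`, `train`, `predict`) and parameters (`dim`, `k`, `iters`).

```python
import math
import random

def make_projection(n_features, dim, seed=0):
    """Gaussian random projection matrix (dim x n_features).
    Assumed encoder for illustration, not necessarily HD3C's."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(n_features)] for _ in range(dim)]

def encode(x, proj):
    """Map a feature vector to a bipolar {-1, +1} hypervector via sign(proj @ x)."""
    return [1 if sum(w * xi for w, xi in zip(row, x)) >= 0 else -1 for row in proj]

def cosine(a, b):
    dot = sum(ai * bi for ai, bi in zip(a, b))
    na = math.sqrt(sum(ai * ai for ai in a))
    nb = math.sqrt(sum(bi * bi for bi in b))
    return dot / (na * nb) if na and nb else 0.0

def cluster(hvs, k, iters=10):
    """Cosine k-means over one class's hypervectors.
    Each centroid is the bundle (elementwise sum) of its members."""
    # Farthest-point initialization: start from the first vector, then repeatedly
    # add the vector least similar to every centroid chosen so far.
    centroids = [list(hvs[0])]
    while len(centroids) < k:
        far = min(hvs, key=lambda v: max(cosine(v, c) for c in centroids))
        centroids.append(list(far))
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for v in hvs:
            j = max(range(k), key=lambda i: cosine(v, centroids[i]))
            groups[j].append(v)
        for j, members in enumerate(groups):
            if members:  # keep the previous centroid if a cluster empties out
                centroids[j] = [sum(col) for col in zip(*members)]
    return centroids

def train(X, y, proj, k=2):
    """Return (label, prototype) pairs, with up to k prototypes per class."""
    by_class = {}
    for x, label in zip(X, y):
        by_class.setdefault(label, []).append(encode(x, proj))
    prototypes = []
    for label, hvs in by_class.items():
        for proto in cluster(hvs, min(k, len(hvs))):
            prototypes.append((label, proto))
    return prototypes

def predict(x, proj, prototypes):
    """Label of the prototype most cosine-similar to x's hypervector."""
    hv = encode(x, proj)
    return max(prototypes, key=lambda p: cosine(hv, p[1]))[0]

# Toy demo (hypothetical data): an XOR-like layout where each class
# occupies two opposite regions of feature space.
rng = random.Random(1)
centers = {"A": [(3, 3), (-3, -3)], "B": [(3, -3), (-3, 3)]}
X, y = [], []
for label, cs in centers.items():
    for cx, cy in cs:
        for _ in range(20):
            X.append([cx + rng.gauss(0, 0.5), cy + rng.gauss(0, 0.5)])
            y.append(label)

proj = make_projection(n_features=2, dim=1024)
protos = train(X, y, proj, k=2)
acc = sum(predict(x, proj, protos) == t for x, t in zip(X, y)) / len(X)
print(f"training accuracy: {acc:.2f}")
```

Sign encoding maps `x` and `-x` to exactly negated hypervectors. On this toy set, a single prototype per class (`k=1`) would therefore largely cancel out when the two opposite regions are summed. Keeping several prototypes per class avoids that, which is one intuition for clustering within each class.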

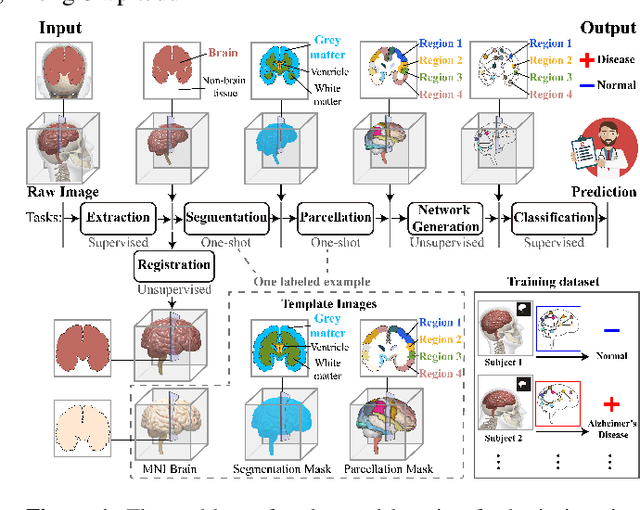

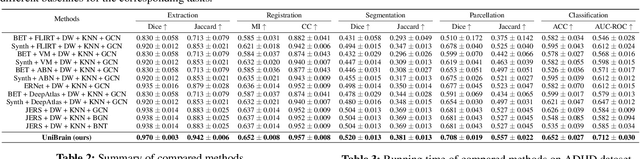

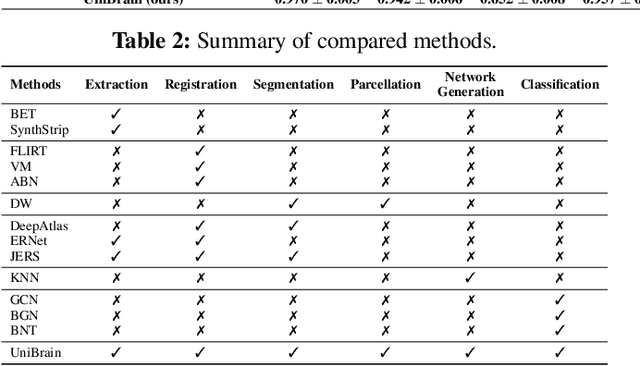

End-to-End Deep Learning for Structural Brain Imaging: A Unified Framework

Feb 23, 2025

Abstract: Brain imaging analysis is fundamental in neuroscience, providing valuable insights into brain structure and function. Traditional workflows follow a sequential pipeline (brain extraction, registration, segmentation, parcellation, network generation, and classification), treating each step as an independent task. These methods rely heavily on task-specific training data and expert intervention to correct intermediate errors. This makes them particularly burdensome for high-dimensional neuroimaging data, where annotations and quality control are costly and time-consuming. We introduce UniBrain, a unified end-to-end framework that integrates all processing steps into a single optimization process, allowing tasks to interact and refine each other. Unlike traditional approaches that require extensive task-specific annotations, UniBrain operates with minimal supervision, leveraging only low-cost labels (i.e., classification and extraction labels) and a single labeled atlas. By jointly optimizing extraction, registration, segmentation, parcellation, network generation, and classification, UniBrain enhances both accuracy and computational efficiency while significantly reducing annotation effort. Experimental results demonstrate its superiority over existing methods across multiple tasks, offering a more scalable and reliable solution for neuroimaging analysis. Our code and data can be found at https://github.com/Anonymous7852/UniBrain
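Schematically, "a single optimization process" can be read as minimizing one combined objective over the parameters of all stages. Under that reading, gradients from downstream losses (e.g., classification) also update upstream stages (e.g., extraction). The weighted-sum form, the task labels and the weights $\lambda_t$ below are an illustrative assumption; the abstract does not state UniBrain's actual loss.

```latex
\min_{\theta}\; \mathcal{L}(\theta) = \sum_{t \in \mathcal{T}} \lambda_t \, \mathcal{L}_t(\theta),
\qquad
\mathcal{T} = \{\text{extr}, \text{reg}, \text{seg}, \text{parc}, \text{net}, \text{cls}\}
```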

Guiding the Last Centimeter: Novel Anatomy-Aware Probe Servoing for Standardized Imaging Plane Navigation in Robotic Lung Ultrasound

Jun 17, 2024

Abstract: Navigating the ultrasound (US) probe to the standardized imaging plane (SIP) for image acquisition is a critical but operator-dependent task in conventional freehand diagnostic US. Robotic US systems (RUSS) offer the potential to enhance imaging consistency by leveraging real-time US image feedback to optimize the probe pose, thereby reducing reliance on operator expertise. However, determining how to extract generalizable features from US images for probe pose adjustment remains challenging. In this work, we propose a SIP navigation framework for RUSS, exemplified in the context of robotic lung ultrasound (LUS). This framework enables automatic probe adjustment when the probe is in proximity to the SIP. This is achieved by explicitly extracting multiple anatomical features present in real-time LUS images and performing non-patient-specific template matching to generate probe motion towards the SIP using image-based visual servoing (IBVS). The framework is further integrated with the active-sensing end-effector (A-SEE), a customized robot end-effector that leverages the patient's external body geometry to maintain optimal probe alignment with the contact surface, thus preserving US signal quality throughout navigation. The proposed approach ensures procedural interpretability and inter-patient adaptability. Validation is conducted through anatomy-mimicking phantom and in-vivo evaluations involving five human subjects. The results show high navigation precision: the probe was correctly located at the SIP in all cases, with positioning errors under 2 mm in translation and under 2 degrees in rotation. These results demonstrate the navigation process's capability to accommodate anatomical variations among patients.
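For reference, the classical IBVS control law from the visual-servoing literature is shown below. The abstract does not give the paper's feature vector, interaction matrix, or gain, so treat this as the generic textbook form rather than the authors' controller. The symbols are:

- $\mathbf{s}$ stacks the image features extracted from the current LUS frame.
- $\mathbf{s}^{*}$ holds the matched template features at the SIP.
- $\mathbf{L}_{\mathbf{e}}$ is the interaction matrix relating probe velocity to feature motion.
- $\widehat{\mathbf{L}}_{\mathbf{e}}^{+}$ is the Moore-Penrose pseudo-inverse of an estimate of $\mathbf{L}_{\mathbf{e}}$.
- $\lambda > 0$ is a gain.
- $\mathbf{v}_p$ is the commanded probe velocity.

```latex
\begin{aligned}
\mathbf{e}(t) &= \mathbf{s}(t) - \mathbf{s}^{*}, \\
\dot{\mathbf{e}} &= \mathbf{L}_{\mathbf{e}} \, \mathbf{v}_p, \\
\mathbf{v}_p &= -\lambda \, \widehat{\mathbf{L}}_{\mathbf{e}}^{+} \, \mathbf{e}.
\end{aligned}
```

Suppose the estimate is exact and $\mathbf{L}_{\mathbf{e}}\mathbf{L}_{\mathbf{e}}^{+} = \mathbf{I}$. Substituting the third line into the second then gives $\dot{\mathbf{e}} = -\lambda \mathbf{e}$. The feature error therefore decays exponentially, driving the probe toward the pose whose image features match the template.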
