Min Hun Lee

Towards Uncertainty Aware Task Delegation and Human-AI Collaborative Decision-Making

May 23, 2025

Abstract:Despite the growing promise of artificial intelligence (AI) in supporting decision-making across domains, fostering appropriate human reliance on AI remains a critical challenge. In this paper, we investigate the utility of exploring distance-based uncertainty scores for task delegation to AI and describe how these scores can be visualized through embedding representations for human-AI decision-making. After developing an AI-based system for physical stroke rehabilitation assessment, we conducted a study with 19 health professionals and 10 students in medicine/health to understand the effect of exploring distance-based uncertainty scores on users' reliance on AI. Our findings showed that distance-based uncertainty scores outperformed traditional probability-based uncertainty scores in identifying uncertain cases. In addition, after exploring confidence scores for task delegation and reviewing embedding-based visualizations of distance-based uncertainty scores, participants achieved an 8.20% higher rate of correct decisions, a 7.15% higher rate of changing their decisions to correct ones, and a 7.14% lower rate of incorrect changes after reviewing AI outputs than those reviewing probability-based uncertainty scores ($p<0.01$). Our findings highlight the potential of distance-based uncertainty scores to enhance decision accuracy and appropriate reliance on AI while discussing ongoing challenges for human-AI collaborative decision-making.
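
The abstract above does not spell out how the distance-based uncertainty score is computed, so the following is only a minimal sketch of one plausible reading: uncertainty as the mean distance from a sample's embedding to its nearest training embeddings, contrasted with a probability-based score (one minus the top class probability). The function names, the toy 2-D embeddings, the choice of `k`, and the delegation threshold are all assumptions made for illustration, not the authors' method.

```python
import math

def distance_uncertainty(embedding, train_embeddings, k=3):
    """Mean Euclidean distance to the k nearest training embeddings.

    Assumption: larger distance means the sample is less like the training
    data, so the AI output is treated as more uncertain.
    """
    dists = sorted(math.dist(embedding, t) for t in train_embeddings)
    nearest = dists[:k]
    return sum(nearest) / len(nearest)

def probability_uncertainty(class_probs):
    """Probability-based baseline: one minus the highest class probability."""
    return 1.0 - max(class_probs)

def delegate(embedding, train_embeddings, threshold, k=3):
    """Delegate to the AI only when the distance-based score is low enough."""
    score = distance_uncertainty(embedding, train_embeddings, k)
    return "ai" if score <= threshold else "human"

# Hypothetical 2-D embeddings of training samples (two tight clusters).
train = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1),
         (1.0, 1.0), (1.1, 1.0), (1.0, 1.1)]

near_sample = (0.05, 0.05)   # close to the first cluster
far_sample = (3.0, -2.0)     # far from all training data

print(delegate(near_sample, train, threshold=0.5))
print(delegate(far_sample, train, threshold=0.5))
```

A model can assign a high class probability to a sample that lies far from anything it saw in training; a distance-based score flags such a case even when the probability-based score does not.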

A Multimodal Fusion Model Leveraging MLP Mixer and Handcrafted Features-based Deep Learning Networks for Facial Palsy Detection

Mar 13, 2025

Abstract:Algorithmic detection of facial palsy offers the potential to improve current practices, which usually involve labor-intensive and subjective assessments by clinicians. In this paper, we present a multimodal fusion-based deep learning model that utilizes an MLP mixer-based model to process unstructured data (i.e. RGB images or images with facial line segments) and a feed-forward neural network to process structured data (i.e. facial landmark coordinates, features of facial expressions, or handcrafted features) for detecting facial palsy. We then contribute a study that analyzes the effect of different data modalities and the benefits of a multimodal fusion-based approach using videos of 20 facial palsy patients and 20 healthy subjects. Our multimodal fusion model achieved 96.00 F1, which is significantly higher than the feed-forward neural network trained on handcrafted features alone (82.80 F1) and an MLP mixer-based model trained on raw RGB images (89.00 F1).

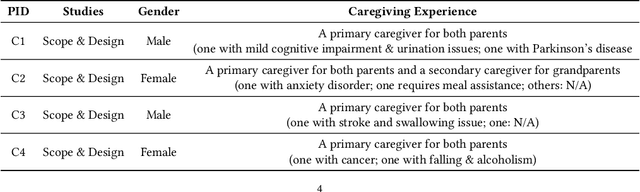

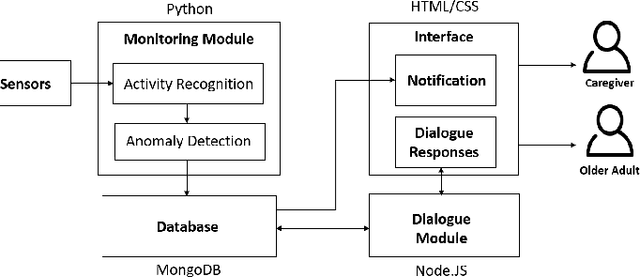

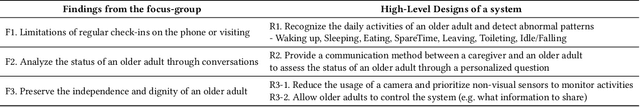

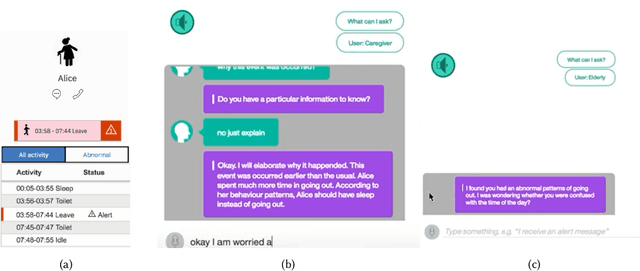

Investigating an Intelligent System to Monitor & Explain Abnormal Activity Patterns of Older Adults

Jan 30, 2025

Abstract:Despite the growing potential of older adult care technologies, the adoption of these technologies remains challenging. In this work, we conducted a focus-group session with family caregivers to scope designs of the older adult care technology. We then developed a high-fidelity prototype and conducted a qualitative study of it with professional caregivers and older adults to understand their perspectives on the system functionalities. This system monitors abnormal activity patterns of older adults using wireless motion sensors and machine learning models. It supports interactive dialogue responses that explain abnormal activity patterns of older adults to caregivers and allow older adults to proactively share their status with caregivers for an adequate intervention. Both older adults and professional caregivers appreciated that our system can provide a faster, personalized service while proactively controlling what information is to be shared through interactive dialogue responses. We further discuss other considerations to realize older adult technology in practice.

Interactive Example-based Explanations to Improve Health Professionals' Onboarding with AI for Human-AI Collaborative Decision Making

Sep 24, 2024

Abstract:A growing body of research explores the use of AI explanations in users' decision phases for human-AI collaborative decision-making. However, previous studies have found issues of overreliance on 'wrong' AI outputs. In this paper, we propose interactive example-based explanations to improve health professionals' onboarding with AI for their better reliance on AI during AI-assisted decision-making. We implemented an AI-based decision support system that utilizes a neural network to assess the quality of post-stroke survivors' exercises and interactive example-based explanations that systematically surface the nearest neighborhoods of a test/task sample from the training set of the AI model to assist users' onboarding with the AI model. To investigate the effect of interactive example-based explanations, we conducted a study with domain experts (health professionals) to evaluate their performance and reliance on AI. Our interactive example-based explanations during onboarding assisted health professionals in having a better reliance on AI and achieving a higher ratio of 'right' decisions and a lower ratio of 'wrong' decisions than providing only feature-based explanations during the decision-support phase. Our study discusses new challenges of assisting users' onboarding with AI for human-AI collaborative decision-making.
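
The idea of surfacing the nearest neighborhood of a test sample from the training set can be sketched as a simple nearest-neighbor lookup. This is an illustrative sketch only: the feature vectors, labels, record layout, and the use of Euclidean distance are assumptions, and the abstract does not say which representation or distance the system actually uses.

```python
import math

def nearest_examples(query, training_set, k=3):
    """Return the k training records closest to `query` in feature space."""
    ranked = sorted(
        training_set,
        key=lambda record: math.dist(query, record["features"]),
    )
    return ranked[:k]

# Hypothetical training records: an id, a feature vector, and the label
# the model was trained on.
training_set = [
    {"id": "t1", "features": (0.90, 0.80), "label": "normal"},
    {"id": "t2", "features": (0.20, 0.30), "label": "compensated"},
    {"id": "t3", "features": (0.85, 0.75), "label": "normal"},
    {"id": "t4", "features": (0.10, 0.20), "label": "compensated"},
]

query = (0.80, 0.80)  # hypothetical features of the sample under review

for record in nearest_examples(query, training_set, k=2):
    print(record["id"], record["label"])
```

Showing the retrieved records next to the model's output lets a reviewer judge whether the training examples the model is drawing on actually resemble the case in front of them.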

Improving Health Professionals' Onboarding with AI and XAI for Trustworthy Human-AI Collaborative Decision Making

May 26, 2024

Abstract:With advanced AI/ML, there has been growing research on explainable AI (XAI) and studies on how humans interact with AI and XAI for effective human-AI collaborative decision-making. However, we still lack an understanding of how AI systems and XAI should be first presented to users without technical backgrounds. In this paper, we present the findings of semi-structured interviews with health professionals (n=12) and students (n=4) majoring in medicine and health to study how to improve onboarding with AI and XAI. For the interviews, we built upon human-AI interaction guidelines to create onboarding materials of an AI system for stroke rehabilitation assessment and AI explanations and introduced them to the participants. Our findings reveal that beyond presenting traditional performance metrics on AI, participants desired benchmark information, the practical benefits of AI, and interaction trials to better contextualize AI performance, and refine the objectives and performance of AI. Based on these findings, we highlight directions for improving onboarding with AI and XAI and human-AI collaborative decision-making.

Exploring a Multimodal Fusion-based Deep Learning Network for Detecting Facial Palsy

May 26, 2024

Abstract:Algorithmic detection of facial palsy offers the potential to improve current practices, which usually involve labor-intensive and subjective assessment by clinicians. In this paper, we present a multimodal fusion-based deep learning model that utilizes unstructured data (i.e. an image frame with facial line segments) and structured data (i.e. features of facial expressions) to detect facial palsy. We then contribute a study that analyzes the effect of different data modalities and the benefits of a multimodal fusion-based approach using videos of 21 facial palsy patients. Our experimental results show that among various data modalities (i.e. unstructured data - RGB images and images of facial line segments and structured data - coordinates of facial landmarks and features of facial expressions), the feed-forward neural network using features of facial expression achieved the highest precision of 76.22 while the ResNet-based model using images of facial line segments achieved the highest recall of 83.47. When we leveraged both images of facial line segments and features of facial expressions, our multimodal fusion-based deep learning model slightly improved the precision score to 77.05 at the expense of a decrease in the recall score.

Understanding the Effect of Counterfactual Explanations on Trust and Reliance on AI for Human-AI Collaborative Clinical Decision Making

Aug 08, 2023

Abstract:Artificial intelligence (AI) is increasingly being considered to assist human decision-making in high-stake domains (e.g. health). However, researchers have discussed an issue that humans can over-rely on wrong suggestions of the AI model instead of achieving human-AI complementary performance. In this work, we utilized salient feature explanations along with what-if, counterfactual explanations to make humans review AI suggestions more analytically to reduce overreliance on AI and explored the effect of these explanations on trust and reliance on AI during clinical decision-making. We conducted an experiment with seven therapists and ten laypersons on the task of assessing post-stroke survivors' quality of motion, and analyzed their performance, agreement level on the task, and reliance on AI without and with two types of AI explanations. Our results showed that the AI model with both salient features and counterfactual explanations assisted therapists and laypersons to improve their performance and agreement level on the task when 'right' AI outputs are presented. While both therapists and laypersons over-relied on 'wrong' AI outputs, counterfactual explanations assisted both therapists and laypersons to reduce their over-reliance on 'wrong' AI outputs by 21% compared to salient feature explanations. Specifically, laypersons showed larger performance degradation (by 18.0 F1-score with salient feature explanations and 14.0 F1-score with counterfactual explanations) than therapists (8.6 and 2.8 F1-score, respectively). Our work discusses the potential of counterfactual explanations to better estimate the accuracy of an AI model and reduce over-reliance on 'wrong' AI outputs and implications for improving human-AI collaborative decision-making.

Design, Development, and Evaluation of an Interactive Personalized Social Robot to Monitor and Coach Post-Stroke Rehabilitation Exercises

May 12, 2023

Abstract:Socially assistive robots are increasingly being explored to improve the engagement of older adults and people with disabilities in health and well-being-related exercises. However, even though people have various physical conditions, most prior work on social robot exercise coaching systems has utilized generic, predefined feedback. The deployment of these systems remains a challenge. In this paper, we present our work of iteratively engaging therapists and post-stroke survivors to design, develop, and evaluate a social robot exercise coaching system for personalized rehabilitation. Through interviews with therapists, we designed how this system interacts with the user and then developed an interactive social robot exercise coaching system. This system integrates a neural network model with a rule-based model to automatically monitor and assess patients' rehabilitation exercises and can be tuned with an individual patient's data to generate real-time, personalized corrective feedback for improvement. With the dataset of rehabilitation exercises from 15 post-stroke survivors, we demonstrated that our system significantly improves its performance in assessing patients' exercises when tuned with a held-out patient's data. In addition, our real-world evaluation study showed that our system can adapt to new participants and achieved 0.81 average performance in assessing their exercises, which is comparable to the experts' agreement level. We further discuss the potential benefits and limitations of our system in practice.

Exploring a Gradient-based Explainable AI Technique for Time-Series Data: A Case Study of Assessing Stroke Rehabilitation Exercises

May 08, 2023

Abstract:Explainable artificial intelligence (AI) techniques are increasingly being explored to provide insights into why AI and machine learning (ML) models provide a certain outcome in various applications. However, there has been limited exploration of explainable AI techniques on time-series data, especially in the healthcare context. In this paper, we describe a threshold-based method that utilizes a weakly supervised model and a gradient-based explainable AI technique (i.e. saliency map) and explore its feasibility to identify salient frames of time-series data. Using the dataset from 15 post-stroke survivors performing three upper-limb exercises and labels on whether a compensatory motion is observed or not, we implemented a feed-forward neural network model and utilized gradients of each input on model outcomes to identify salient frames that involve compensatory motions. According to the evaluation using frame-level annotations, our approach achieved a recall of 0.96 and an F2-score of 0.91. Our results demonstrated the potential of a gradient-based explainable AI technique (e.g. saliency map) for time-series data, such as highlighting the frames of a video that therapists should focus on reviewing and reducing the efforts on frame-level labeling for model training.
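
The threshold-based use of gradients described above can be sketched roughly as follows. Everything concrete here is an assumption for illustration: the toy scoring function stands in for the trained feed-forward network, gradients are approximated with central finite differences rather than backpropagation, and the normalization and the 0.5 threshold are arbitrary choices, not values from the paper.

```python
import math

def model(frames):
    """Toy video-level scorer (hypothetical stand-in for a trained network).

    Each frame is a 2-feature tuple; the score is a sigmoid over the sum of
    ReLU frame activations, so only a video-level output is produced.
    """
    w, b = (1.0, 0.5), 0.6
    total = sum(max(0.0, w[0] * f[0] + w[1] * f[1] - b) for f in frames)
    return 1.0 / (1.0 + math.exp(-total))

def frame_saliency(frames, eps=1e-5):
    """Per-frame gradient magnitude of the model output w.r.t. that frame."""
    saliency = []
    for t, frame in enumerate(frames):
        grad_sq = 0.0
        for j in range(len(frame)):
            plus = [list(f) for f in frames]
            minus = [list(f) for f in frames]
            plus[t][j] += eps
            minus[t][j] -= eps
            g = (model(plus) - model(minus)) / (2 * eps)  # central difference
            grad_sq += g * g
        saliency.append(math.sqrt(grad_sq))
    return saliency

def salient_frames(frames, threshold=0.5):
    """Indices of frames whose normalized saliency reaches the threshold."""
    scores = frame_saliency(frames)
    peak = max(scores)
    if peak == 0.0:
        return []
    return [t for t, s in enumerate(scores) if s / peak >= threshold]

# Hypothetical sequence: frames 2 and 3 carry the large feature values.
frames = [(0.1, 0.1), (0.2, 0.0), (0.9, 0.8), (1.0, 0.9), (0.1, 0.2)]
print(salient_frames(frames))
```

The model only ever sees a label for the whole sequence, yet the gradient magnitudes single out individual frames, which is what makes the approach attractive when frame-level labels are expensive to collect.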

Imagining new futures beyond predictive systems in child welfare: A qualitative study with impacted stakeholders

May 18, 2022

Abstract:Child welfare agencies across the United States are turning to data-driven predictive technologies (commonly called predictive analytics) which use government administrative data to assist workers' decision-making. While some prior work has explored impacted stakeholders' concerns with current uses of data-driven predictive risk models (PRMs), less work has asked stakeholders whether such tools ought to be used in the first place. In this work, we conducted a set of seven design workshops with 35 stakeholders who have been impacted by the child welfare system or who work in it to understand their beliefs and concerns around PRMs, and to engage them in imagining new uses of data and technologies in the child welfare system. We found that participants worried current PRMs perpetuate or exacerbate existing problems in child welfare. Participants suggested new ways to use data and data-driven tools to better support impacted communities and suggested paths to mitigate possible harms of these tools. Participants also suggested low-tech or no-tech alternatives to PRMs to address problems in child welfare. Our study sheds light on how researchers and designers can work in solidarity with impacted communities, possibly to circumvent or oppose child welfare agencies.
