Michal Hradis

BenCzechMark : A Czech-centric Multitask and Multimetric Benchmark for Large Language Models with Duel Scoring Mechanism

Dec 23, 2024

Abstract: We present BenCzechMark (BCM), the first comprehensive Czech language benchmark designed for large language models, offering diverse tasks, multiple task formats, and multiple evaluation metrics. Its scoring system is grounded in statistical significance theory and uses aggregation across tasks inspired by social preference theory. Our benchmark encompasses 50 challenging tasks with corresponding test datasets, primarily in native Czech, 11 of them newly collected. These tasks span 8 categories and cover diverse domains, including historical Czech news, essays from pupils or language learners, and spoken word. Furthermore, we collect and clean the BUT-Large Czech Collection, the largest publicly available clean Czech language corpus, and use it for (i) contamination analysis and (ii) continuous pretraining of the first Czech-centric 7B language model with Czech-specific tokenization. We use our model as a baseline for comparison with publicly available multilingual models. Lastly, we release and maintain a leaderboard with 44 existing model submissions, where new model submissions can be made at https://huggingface.co/spaces/CZLC/BenCzechMark.

A Comparative Study of Text Retrieval Models on DaReCzech

Nov 19, 2024

Abstract: This article presents a comprehensive evaluation of 7 off-the-shelf document retrieval models (SPLADE, PLAID, PLAID-X, SimCSE, Contriever, OpenAI ADA, and Gemma2) on the Czech retrieval dataset DaReCzech. The primary objective of our experiments is to estimate the quality of modern retrieval approaches in the Czech language. Our analyses cover retrieval quality, speed, and memory footprint. We also analyze whether it is better to apply a model directly to Czech text or to machine-translate the text into English and perform retrieval in English. Our experiments identify the most effective option for Czech information retrieval. The findings reveal notable performance differences among the models, with Gemma2 achieving the highest precision and recall and Contriever performing poorly. In conclusion, the SPLADE and PLAID models offer a balance of efficiency and performance.

CNN for IMU Assisted Odometry Estimation using Velodyne LiDAR

Dec 18, 2017

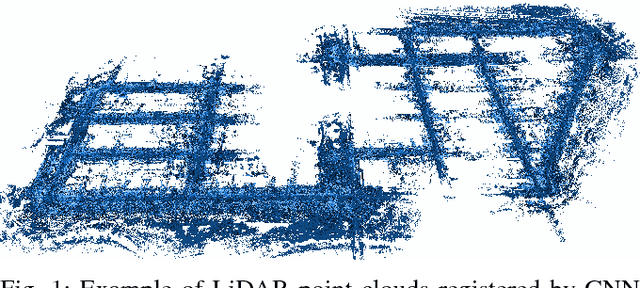

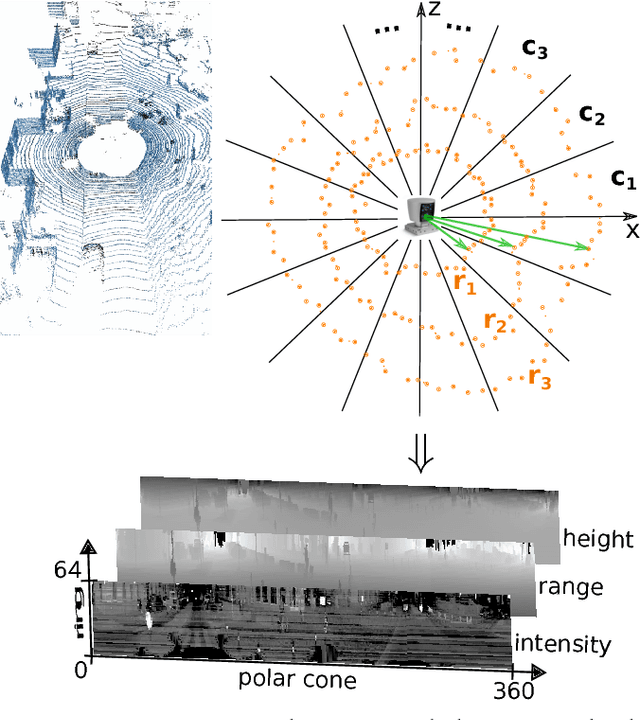

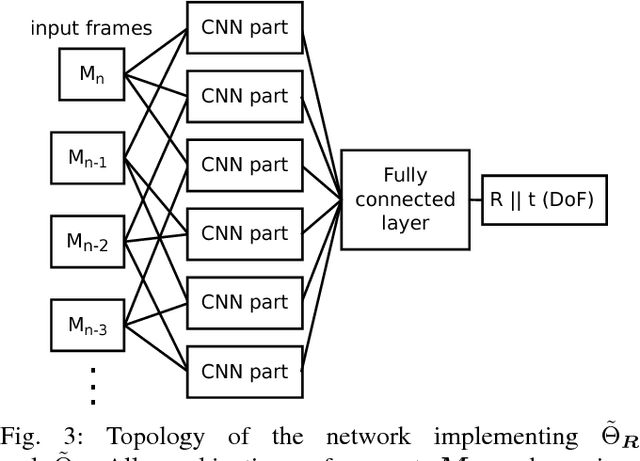

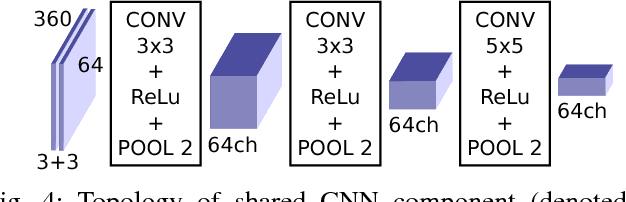

Abstract: We introduce a novel method for odometry estimation from 3D LiDAR scans using convolutional neural networks. The original sparse data are encoded into 2D matrices for training the proposed networks and for prediction. Our networks show significantly better precision in the estimation of translational motion parameters compared with the state-of-the-art method LOAM, while achieving real-time performance. Together with IMU support, high-quality odometry estimation and LiDAR data registration are realized. Moreover, we propose alternative CNNs trained for the prediction of rotational motion parameters, achieving results that are also comparable with the state of the art. The proposed method can replace wheel encoders in odometry estimation or supplement missing GPS data when the GNSS signal is absent (e.g. during indoor mapping). Our solution brings real-time performance and precision, which are useful for providing an online preview of the mapping results and for verifying map completeness in real time.

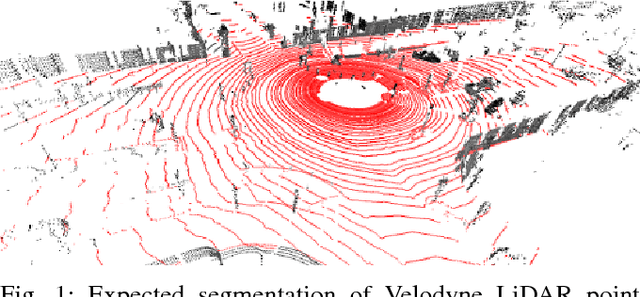

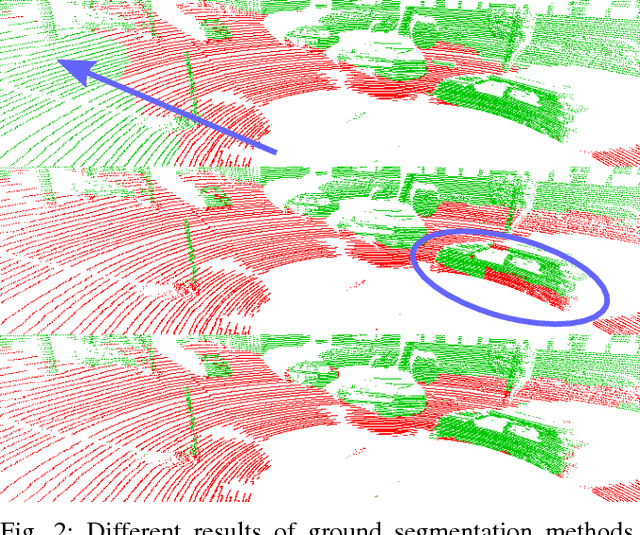

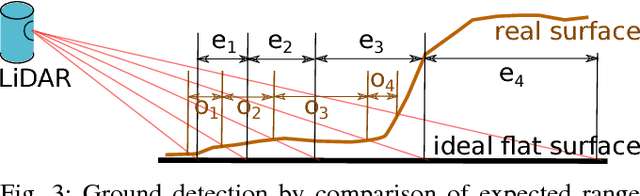

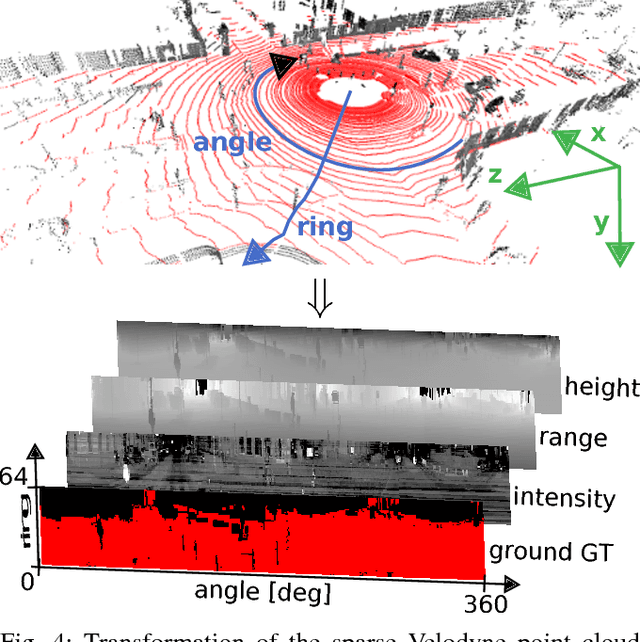

CNN for Very Fast Ground Segmentation in Velodyne LiDAR Data

Sep 07, 2017

Abstract: This paper presents a novel method for ground segmentation in Velodyne point clouds. We propose an encoding of sparse 3D data from the Velodyne sensor suitable for training a convolutional neural network (CNN). This general-purpose approach is used to segment the sparse point cloud into ground and non-ground points. The LiDAR data are represented as a multi-channel 2D signal in which the horizontal axis corresponds to the rotation angle and the vertical axis indexes the channels (i.e. laser beams). Multiple topologies of relatively shallow CNNs (i.e. 3-5 convolutional layers) are trained and evaluated using a manually annotated dataset we prepared. The results show a significant improvement over the state-of-the-art method by Zhang et al. in terms of speed, along with minor improvements in accuracy.
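The 2D representation described in the abstract can be illustrated with a minimal sketch. It assumes each point arrives as `(x, y, z, ring)`, where `ring` is the laser beam index, and it stores range, height, and an occupancy flag per cell. The function name `encode_scan`, the grid size, and the choice of channels are illustrative assumptions, not the paper's exact encoding.

```python
import math

def encode_scan(points, num_rings=64, num_cols=360):
    """Encode (x, y, z, ring) points into a [ring][column] grid.

    Each cell holds (range, height, occupied); empty cells stay all zeros.
    Rows are laser beams, columns are bins of the rotation angle.
    """
    grid = [[(0.0, 0.0, 0.0) for _ in range(num_cols)] for _ in range(num_rings)]
    for x, y, z, ring in points:
        azimuth = math.atan2(y, x) % (2.0 * math.pi)  # rotation angle in [0, 2*pi)
        col = min(int(azimuth / (2.0 * math.pi) * num_cols), num_cols - 1)
        rng = math.hypot(x, y)  # horizontal distance from the sensor
        occupied = grid[ring][col][2] == 1.0
        # When several points fall into one cell, keep the closest one.
        if not occupied or rng < grid[ring][col][0]:
            grid[ring][col] = (rng, z, 1.0)
    return grid

# Tiny hypothetical scan: two returns on beam 0 in the same direction, one on beam 3.
scan = [(1.0, 0.0, -1.7, 0), (0.5, 0.0, -1.6, 0), (1.0, 2.0, 0.5, 3)]
grid = encode_scan(scan, num_rings=4, num_cols=8)
```

A dense grid of this kind can be fed to an ordinary 2D CNN, and a per-cell ground/non-ground prediction maps back to the original points through the same `(ring, column)` indices.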

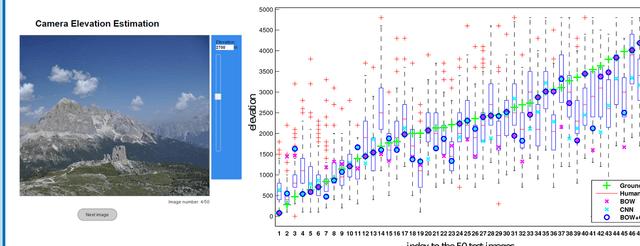

Camera Elevation Estimation from a Single Mountain Landscape Photograph

Jul 12, 2016

Abstract: This work addresses the problem of camera elevation estimation from a single photograph in an outdoor environment. We introduce a new benchmark dataset of one hundred thousand images with annotated camera elevation called Alps100K. We propose and experimentally evaluate two automatic data-driven approaches to camera elevation estimation: one based on convolutional neural networks, the other on local features. To compare the proposed methods to human performance, an experiment with 100 subjects is conducted. The experimental results show that both proposed approaches outperform humans and that the best result is achieved by their combination.

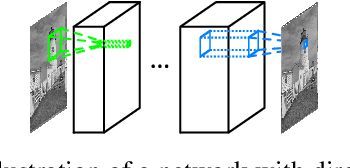

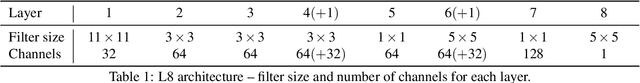

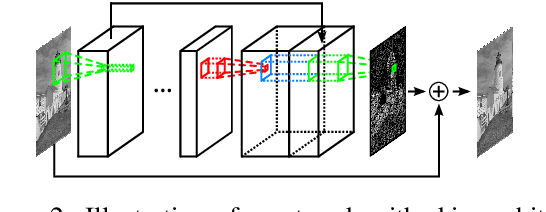

Compression Artifacts Removal Using Convolutional Neural Networks

May 02, 2016

Abstract: This paper shows that it is possible to train large and deep convolutional neural networks (CNNs) for JPEG compression artifact reduction, and that such networks can provide significantly better reconstruction quality than previously used smaller networks and other state-of-the-art methods. We were able to train networks with 8 layers in a single step and in a relatively short time by combining residual learning, skip architecture, and symmetric weight initialization. We provide further insights into convolutional networks for JPEG artifact reduction by evaluating three different objectives, generalization with respect to training dataset size, and generalization with respect to JPEG quality level.

CNN for License Plate Motion Deblurring

Feb 25, 2016

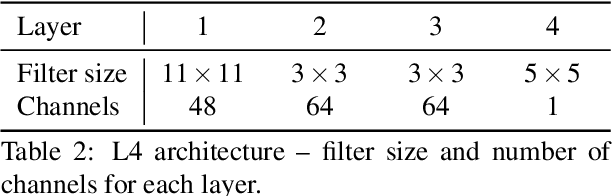

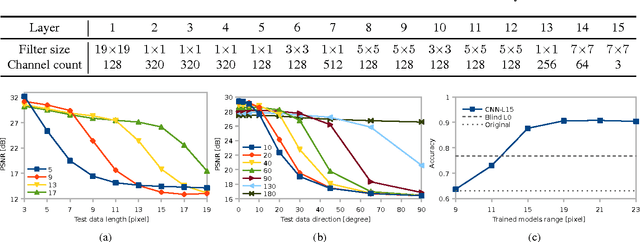

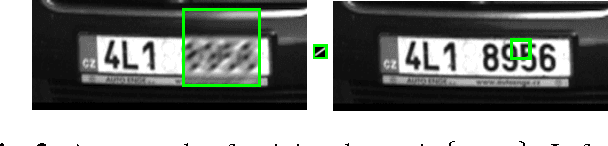

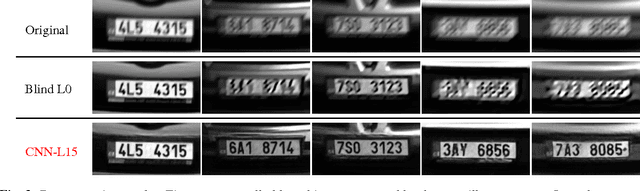

Abstract: In this work we explore the previously proposed approach of direct blind deconvolution and denoising with convolutional neural networks in a situation where the blur kernels are partially constrained. We focus on blurred images from a real-life traffic surveillance system, on which we, for the first time, demonstrate that neural networks trained on artificial data provide superior reconstruction quality on real images compared to traditional blind deconvolution methods. The training data is easy to obtain by blurring sharp photos from a target system with a very rough approximation of the expected blur kernels, thereby allowing custom CNNs to be trained for a specific application (image content and blur range). Additionally, we evaluate the behavior and limits of the CNNs with respect to blur direction range and length.
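The idea of producing training data by blurring sharp photos can be sketched as follows. This is only an illustration under stated assumptions: the blur is modeled as a straight-line motion kernel with a random length and angle, and Gaussian noise is added afterwards. The helper names (`motion_kernel`, `blur`, `make_pair`) and all parameter ranges are hypothetical; the abstract does not specify the kernel model.

```python
import math
import random

def motion_kernel(length, angle_deg, size=9):
    """Rasterize a line segment into a normalized size x size blur kernel."""
    kernel = [[0.0] * size for _ in range(size)]
    c = size // 2
    theta = math.radians(angle_deg)
    steps = max(2, int(length * 4))  # sample the segment densely
    for i in range(steps):
        t = (i / (steps - 1) - 0.5) * length
        col = int(round(c + t * math.cos(theta)))
        row = int(round(c - t * math.sin(theta)))
        if 0 <= row < size and 0 <= col < size:
            kernel[row][col] += 1.0
    total = sum(map(sum, kernel))
    return [[v / total for v in row] for row in kernel]

def blur(image, kernel):
    """Filter a 2D grayscale image (list of rows) with clamped borders.

    This is a correlation; the kernel flip of a true convolution is omitted.
    """
    h, w, k = len(image), len(image[0]), len(kernel)
    r = k // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for ky in range(k):
                for kx in range(k):
                    sy = min(max(y + ky - r, 0), h - 1)
                    sx = min(max(x + kx - r, 0), w - 1)
                    acc += kernel[ky][kx] * image[sy][sx]
            out[y][x] = acc
    return out

def make_pair(sharp, rng, max_length=7.0, noise_sigma=0.01):
    """Return (degraded, sharp): network input and reconstruction target."""
    kernel = motion_kernel(rng.uniform(1.0, max_length), rng.uniform(0.0, 180.0))
    degraded = [[v + rng.gauss(0.0, noise_sigma) for v in row]
                for row in blur(sharp, kernel)]
    return degraded, sharp

# Hypothetical 6x6 "sharp" crop with a bright vertical stripe.
sharp = [[1.0 if x in (2, 3) else 0.0 for x in range(6)] for _ in range(6)]
degraded, target = make_pair(sharp, random.Random(0))
```

Restricting the sampled `length` and `angle_deg` ranges is one way to express the partial constraint on blur kernels for a specific camera setup.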
