Michael Blumenstein

Exploring Mutual Cross-Modal Attention for Context-Aware Human Affordance Generation

Feb 19, 2025

Abstract:Human affordance learning investigates contextually relevant novel pose prediction such that the estimated pose represents a valid human action within the scene. While the task is fundamental to machine perception and automated interactive navigation agents, the exponentially large number of probable pose and action variations make the problem challenging and non-trivial. However, the existing datasets and methods for human affordance prediction in 2D scenes are significantly limited in the literature. In this paper, we propose a novel cross-attention mechanism to encode the scene context for affordance prediction by mutually attending spatial feature maps from two different modalities. The proposed method is disentangled among individual subtasks to efficiently reduce the problem complexity. First, we sample a probable location for a person within the scene using a variational autoencoder (VAE) conditioned on the global scene context encoding. Next, we predict a potential pose template from a set of existing human pose candidates using a classifier on the local context encoding around the predicted location. In the subsequent steps, we use two VAEs to sample the scale and deformation parameters for the predicted pose template by conditioning on the local context and template class. Our experiments show significant improvements over the previous baseline of human affordance injection into complex 2D scenes.

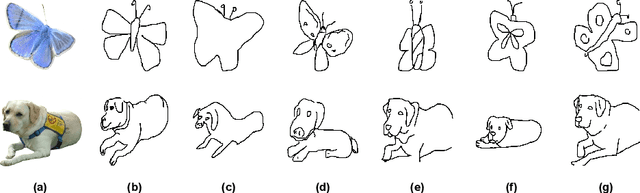

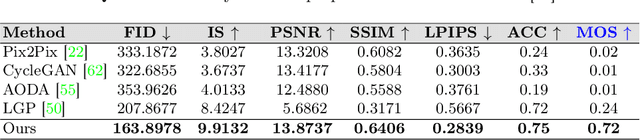

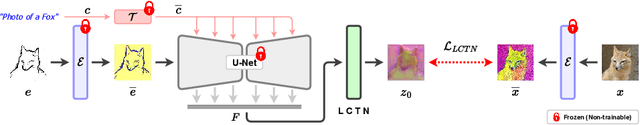

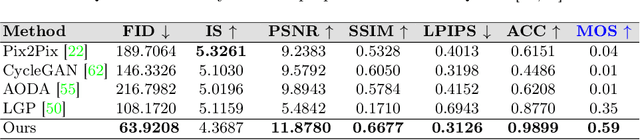

d-Sketch: Improving Visual Fidelity of Sketch-to-Image Translation with Pretrained Latent Diffusion Models without Retraining

Feb 19, 2025

Abstract:Structural guidance in an image-to-image translation allows intricate control over the shapes of synthesized images. Generating high-quality realistic images from user-specified rough hand-drawn sketches is one such task that aims to impose a structural constraint on the conditional generation process. While the premise is intriguing for numerous use cases of content creation and academic research, the problem becomes fundamentally challenging due to substantial ambiguities in freehand sketches. Furthermore, balancing the trade-off between shape consistency and realistic generation contributes to additional complexity in the process. Existing approaches based on Generative Adversarial Networks (GANs) generally utilize conditional GANs or GAN inversions, often requiring application-specific data and optimization objectives. The recent introduction of Denoising Diffusion Probabilistic Models (DDPMs) achieves a generational leap for low-level visual attributes in general image synthesis. However, directly retraining a large-scale diffusion model on a domain-specific subtask is often extremely difficult due to demanding computation costs and insufficient data. In this paper, we introduce a technique for sketch-to-image translation by exploiting the feature generalization capabilities of a large-scale diffusion model without retraining. In particular, we use a learnable lightweight mapping network to achieve latent feature translation from source to target domain. Experimental results demonstrate that the proposed method outperforms the existing techniques in qualitative and quantitative benchmarks, allowing high-resolution realistic image synthesis from rough hand-drawn sketches.

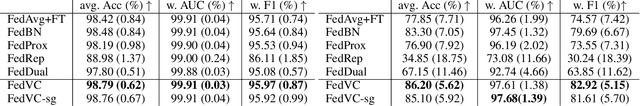

Personalized Interpretation on Federated Learning: A Virtual Concepts approach

Jun 28, 2024

Abstract:Tackling non-IID data is an open challenge in federated learning research. Existing FL methods, including robust FL and personalized FL, are designed to improve model performance without consideration of interpreting non-IID across clients. This paper aims to design a novel FL method to robust and interpret the non-IID data across clients. Specifically, we interpret each client's dataset as a mixture of conceptual vectors that each one represents an interpretable concept to end-users. These conceptual vectors could be pre-defined or refined in a human-in-the-loop process or be learnt via the optimization procedure of the federated learning system. In addition to the interpretability, the clarity of client-specific personalization could also be applied to enhance the robustness of the training process on FL system. The effectiveness of the proposed method have been validated on benchmark datasets.

Dual-Personalizing Adapter for Federated Foundation Models

Mar 28, 2024Abstract:Recently, foundation models, particularly large language models (LLMs), have demonstrated an impressive ability to adapt to various tasks by fine-tuning large amounts of instruction data. Notably, federated foundation models emerge as a privacy preservation method to fine-tune models collaboratively under federated learning (FL) settings by leveraging many distributed datasets with non-IID data. To alleviate communication and computation overhead, parameter-efficient methods are introduced for efficiency, and some research adapted personalization methods to federated foundation models for better user preferences alignment. However, a critical gap in existing research is the neglect of test-time distribution shifts in real-world applications. Therefore, to bridge this gap, we propose a new setting, termed test-time personalization, which not only concentrates on the targeted local task but also extends to other tasks that exhibit test-time distribution shifts. To address challenges in this new setting, we explore a simple yet effective solution to learn a comprehensive foundation model. Specifically, a dual-personalizing adapter architecture (FedDPA) is proposed, comprising a global adapter and a local adapter for addressing test-time distribution shifts and personalization, respectively. Additionally, we introduce an instance-wise dynamic weighting mechanism to optimize the balance between the global and local adapters, enhancing overall performance. The effectiveness of the proposed method has been evaluated on benchmark datasets across different NLP tasks.

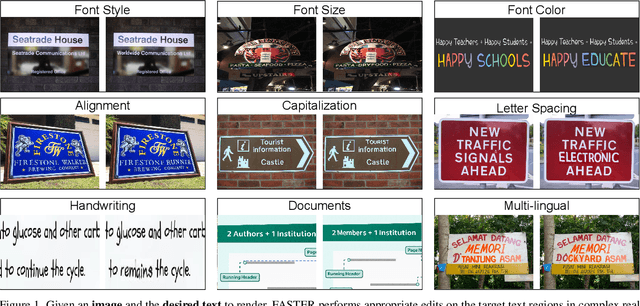

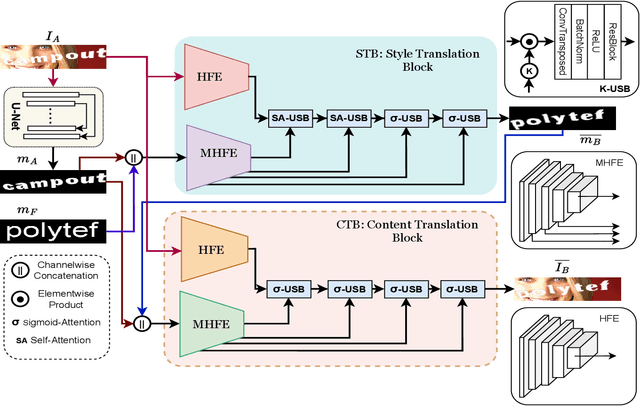

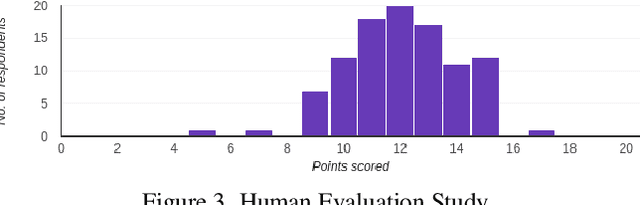

FAST: Font-Agnostic Scene Text Editing

Aug 05, 2023

Abstract:Scene Text Editing (STE) is a challenging research problem, and it aims to modify existing texts in an image while preserving the background and the font style of the original text of the image. Due to its various real-life applications, researchers have explored several approaches toward STE in recent years. However, most of the existing STE methods show inferior editing performance because of (1) complex image backgrounds, (2) various font styles, and (3) varying word lengths within the text. To address such inferior editing performance issues, in this paper, we propose a novel font-agnostic scene text editing framework, named FAST, for simultaneously generating text in arbitrary styles and locations while preserving a natural and realistic appearance through combined mask generation and style transfer. The proposed approach differs from the existing methods as they directly modify all image pixels. Instead, the proposed method has introduced a filtering mechanism to remove background distractions, allowing the network to focus solely on the text regions where editing is required. Additionally, a text-style transfer module has been designed to mitigate the challenges posed by varying word lengths. Extensive experiments and ablations have been conducted, and the results demonstrate that the proposed method outperforms the existing methods both qualitatively and quantitatively.

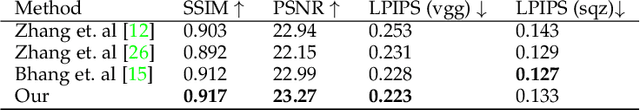

MMC: Multi-Modal Colorization of Images using Textual Descriptions

Apr 25, 2023Abstract:Handling various objects with different colors is a significant challenge for image colorization techniques. Thus, for complex real-world scenes, the existing image colorization algorithms often fail to maintain color consistency. In this work, we attempt to integrate textual descriptions as an auxiliary condition, along with the grayscale image that is to be colorized, to improve the fidelity of the colorization process. To do so, we have proposed a deep network that takes two inputs (grayscale image and the respective encoded text description) and tries to predict the relevant color components. Also, we have predicted each object in the image and have colorized them with their individual description to incorporate their specific attributes in the colorization process. After that, a fusion model fuses all the image objects (segments) to generate the final colorized image. As the respective textual descriptions contain color information of the objects present in the image, text encoding helps to improve the overall quality of predicted colors. In terms of performance, the proposed method outperforms existing colorization techniques in terms of LPIPS, PSNR and SSIM metrics.

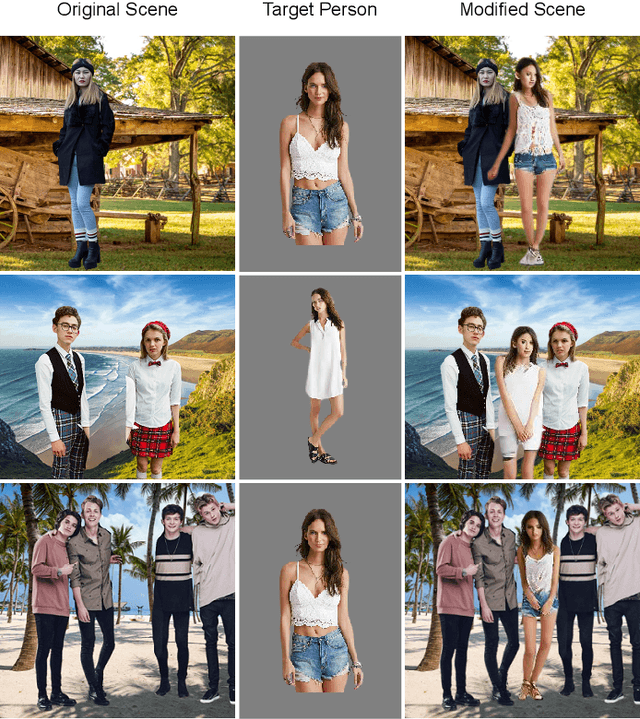

Global Context-Aware Person Image Generation

Feb 28, 2023Abstract:We propose a data-driven approach for context-aware person image generation. Specifically, we attempt to generate a person image such that the synthesized instance can blend into a complex scene. In our method, the position, scale, and appearance of the generated person are semantically conditioned on the existing persons in the scene. The proposed technique is divided into three sequential steps. At first, we employ a Pix2PixHD model to infer a coarse semantic mask that represents the new person's spatial location, scale, and potential pose. Next, we use a data-centric approach to select the closest representation from a precomputed cluster of fine semantic masks. Finally, we adopt a multi-scale, attention-guided architecture to transfer the appearance attributes from an exemplar image. The proposed strategy enables us to synthesize semantically coherent realistic persons that can blend into an existing scene without altering the global context. We conclude our findings with relevant qualitative and quantitative evaluations.

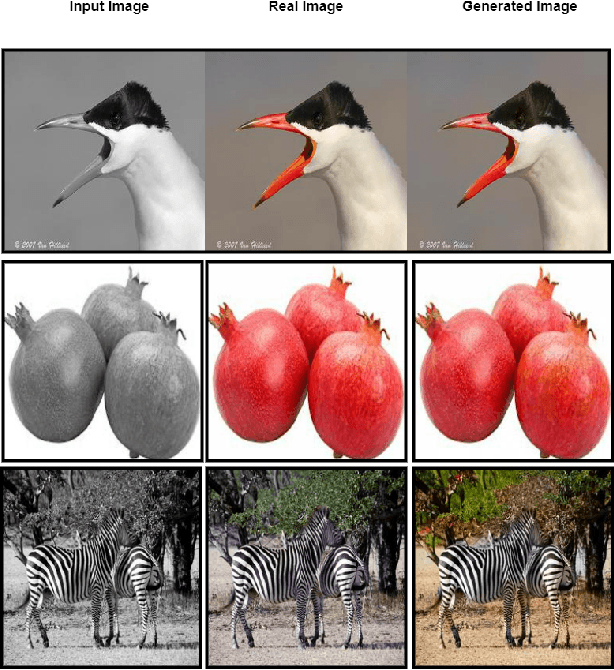

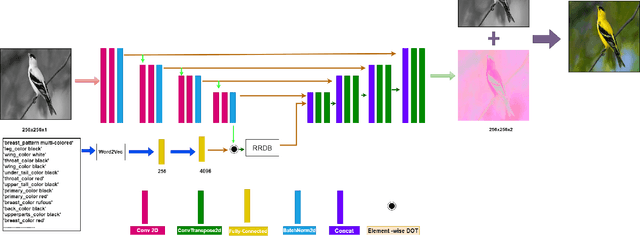

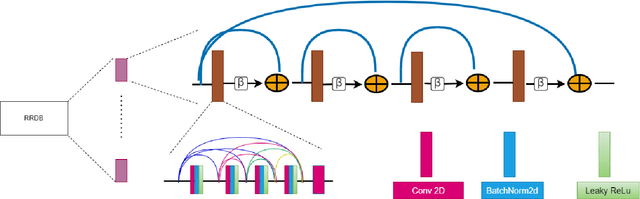

TIC: Text-Guided Image Colorization

Aug 04, 2022

Abstract:Image colorization is a well-known problem in computer vision. However, due to the ill-posed nature of the task, image colorization is inherently challenging. Though several attempts have been made by researchers to make the colorization pipeline automatic, these processes often produce unrealistic results due to a lack of conditioning. In this work, we attempt to integrate textual descriptions as an auxiliary condition, along with the grayscale image that is to be colorized, to improve the fidelity of the colorization process. To the best of our knowledge, this is one of the first attempts to incorporate textual conditioning in the colorization pipeline. To do so, we have proposed a novel deep network that takes two inputs (the grayscale image and the respective encoded text description) and tries to predict the relevant color gamut. As the respective textual descriptions contain color information of the objects present in the scene, the text encoding helps to improve the overall quality of the predicted colors. We have evaluated our proposed model using different metrics and found that it outperforms the state-of-the-art colorization algorithms both qualitatively and quantitatively.

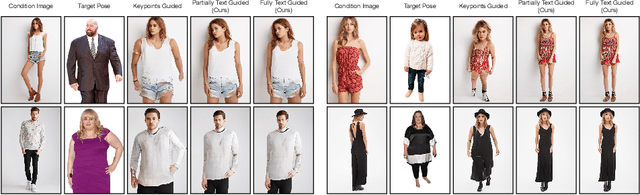

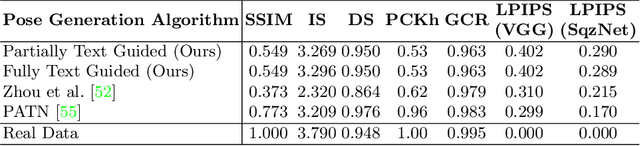

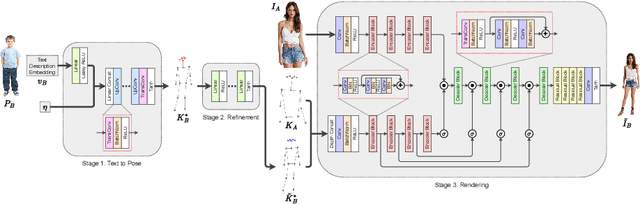

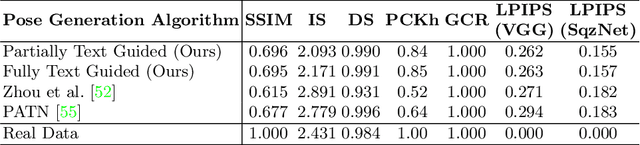

TIPS: Text-Induced Pose Synthesis

Jul 24, 2022

Abstract:In computer vision, human pose synthesis and transfer deal with probabilistic image generation of a person in a previously unseen pose from an already available observation of that person. Though researchers have recently proposed several methods to achieve this task, most of these techniques derive the target pose directly from the desired target image on a specific dataset, making the underlying process challenging to apply in real-world scenarios as the generation of the target image is the actual aim. In this paper, we first present the shortcomings of current pose transfer algorithms and then propose a novel text-based pose transfer technique to address those issues. We divide the problem into three independent stages: (a) text to pose representation, (b) pose refinement, and (c) pose rendering. To the best of our knowledge, this is one of the first attempts to develop a text-based pose transfer framework where we also introduce a new dataset DF-PASS, by adding descriptive pose annotations for the images of the DeepFashion dataset. The proposed method generates promising results with significant qualitative and quantitative scores in our experiments.

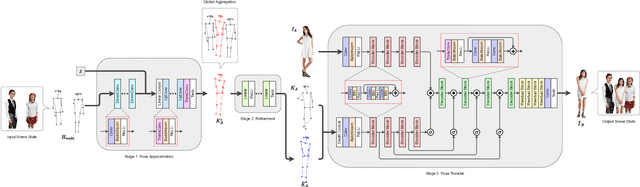

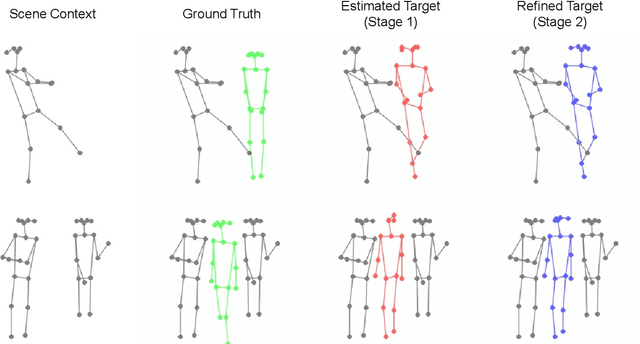

Scene Aware Person Image Generation through Global Contextual Conditioning

Jun 06, 2022

Abstract:Person image generation is an intriguing yet challenging problem. However, this task becomes even more difficult under constrained situations. In this work, we propose a novel pipeline to generate and insert contextually relevant person images into an existing scene while preserving the global semantics. More specifically, we aim to insert a person such that the location, pose, and scale of the person being inserted blends in with the existing persons in the scene. Our method uses three individual networks in a sequential pipeline. At first, we predict the potential location and the skeletal structure of the new person by conditioning a Wasserstein Generative Adversarial Network (WGAN) on the existing human skeletons present in the scene. Next, the predicted skeleton is refined through a shallow linear network to achieve higher structural accuracy in the generated image. Finally, the target image is generated from the refined skeleton using another generative network conditioned on a given image of the target person. In our experiments, we achieve high-resolution photo-realistic generation results while preserving the general context of the scene. We conclude our paper with multiple qualitative and quantitative benchmarks on the results.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge