Mengyue Geng

Adaptive Discovering and Merging for Incremental Novel Class Discovery

Mar 06, 2024

Abstract: One important desideratum of lifelong learning is to discover novel classes from unlabelled data in a continuous manner. The central challenge is twofold: discovering and learning novel classes while mitigating catastrophic forgetting of established knowledge. To this end, we introduce a new paradigm called Adaptive Discovering and Merging (ADM) to discover novel categories adaptively in the incremental stage and integrate novel knowledge into the model without affecting the original knowledge. To discover novel classes adaptively, we decouple representation learning and novel class discovery, and use Triple Comparison (TC) and Probability Regularization (PR) to constrain the probability discrepancy and diversity for adaptive category assignment. To merge the learned novel knowledge adaptively, we propose a hybrid structure with base and novel branches named Adaptive Model Merging (AMM), which reduces the interference of the novel branch on the old classes to preserve the previous knowledge, and merges the novel branch into the base model without performance loss or parameter growth. Extensive experiments on several datasets show that ADM significantly outperforms existing class-incremental Novel Class Discovery (class-iNCD) approaches. Moreover, our AMM also benefits the class-incremental learning (class-IL) task by alleviating the catastrophic forgetting problem.

Recurrent Spike-based Image Restoration under General Illumination

Aug 06, 2023

Abstract: The spike camera is a new type of bio-inspired vision sensor that records light intensity in the form of a spike array with high temporal resolution (20,000 Hz). This new sensing paradigm offers significant advantages for many vision tasks, such as high-speed image reconstruction. However, existing spike-based approaches typically assume that scenes have sufficient light intensity, which is often not the case in real-world scenarios such as rainy days or dusk scenes. To unlock more spike-based application scenarios, we propose a Recurrent Spike-based Image Restoration (RSIR) network, which is the first work towards restoring clear images from spike arrays under general illumination. Specifically, to accurately describe the noise distribution under different illuminations, we build a physics-based spike noise model according to the sampling process of the spike camera. Based on the noise model, we design our RSIR network, which consists of an adaptive spike transformation module, a recurrent temporal feature fusion module, and a frequency-based spike denoising module. Our RSIR can process the spike array in a recursive manner to ensure that the spike temporal information is well utilized. In the training process, we generate simulated spike data based on our noise model to train our network. Extensive experiments on real-world datasets with different illuminations demonstrate the effectiveness of the proposed network. The code and dataset are released at https://github.com/BIT-Vision/RSIR.
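
The sampling process that the RSIR abstract refers to can be illustrated with a minimal sketch, assuming a simple noise-free integrate-and-fire pixel: incoming light is accumulated at each time step, and a spike is emitted whenever the accumulator reaches a threshold. This is not the authors' noise model or released code; the function names, the unit threshold, and the reset-by-subtraction rule are all assumptions made for illustration.

```python
def simulate_spikes(intensity, n_steps, threshold=1.0):
    """Hypothetical integrate-and-fire sampling for a single pixel.

    `intensity` is the light accumulated per time step (assumed constant).
    Returns a list of 0/1 values, one per time step.
    """
    accumulator = 0.0
    spikes = []
    for _ in range(n_steps):
        accumulator += intensity
        if accumulator >= threshold:
            spikes.append(1)
            accumulator -= threshold  # assumed reset rule: subtract the threshold
        else:
            spikes.append(0)
    return spikes


def estimate_intensity(spikes, threshold=1.0):
    """Recover the mean per-step intensity from the firing rate."""
    return threshold * sum(spikes) / len(spikes)


bright = simulate_spikes(0.25, 8)  # fires once every four steps
dim = simulate_spikes(0.05, 8)     # never reaches the threshold in this window
```

In this toy model the dim pixel produces no spikes within the short window, so its intensity estimate collapses to zero, which hints at why low-illumination scenes are harder to restore from spike arrays.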

Deep Transfer Learning for Person Re-identification

Nov 22, 2016

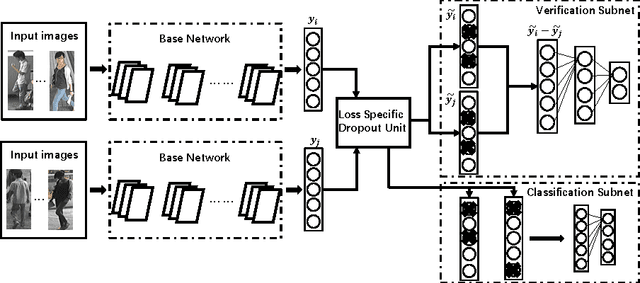

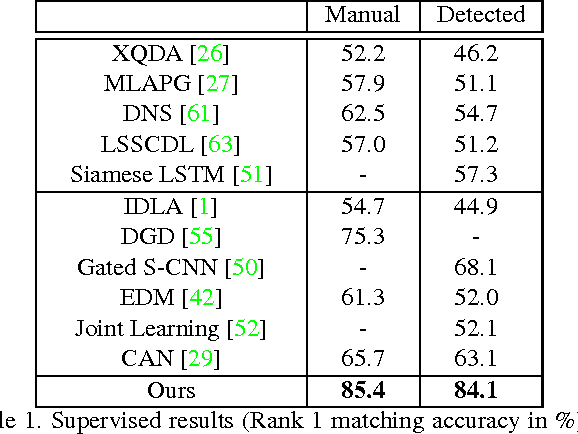

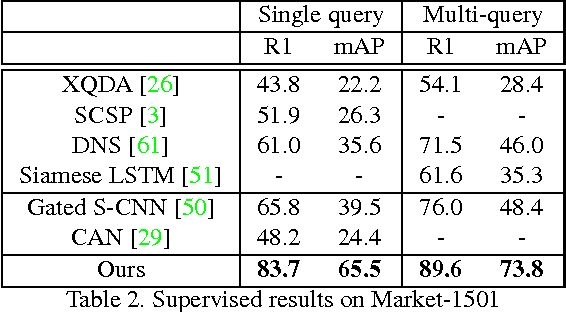

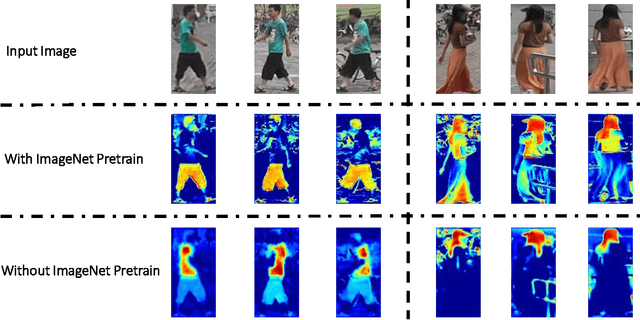

Abstract: Person re-identification (Re-ID) poses a unique challenge to deep learning: how to learn a deep model with millions of parameters on a small training set with few or no labels. In this paper, a number of deep transfer learning models are proposed to address the data sparsity problem. First, a deep network architecture is designed which differs from existing deep Re-ID models in that (a) it is more suitable for transferring representations learned from large image classification datasets, and (b) classification loss and verification loss are combined, each of which adopts a different dropout strategy. Second, a two-step fine-tuning strategy is developed to transfer knowledge from auxiliary datasets. Third, given an unlabelled Re-ID dataset, a novel unsupervised deep transfer learning model is developed based on co-training. The proposed models outperform the state-of-the-art deep Re-ID models by large margins: we achieve Rank-1 accuracy of 85.4%, 83.7% and 56.3% on CUHK03, Market1501, and VIPeR respectively, whilst on VIPeR, our unsupervised model (45.1%) beats most supervised models.
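
The combination of classification and verification losses mentioned in the Re-ID abstract can be sketched as follows, assuming a softmax cross-entropy over identity labels for each image in a pair and a binary cross-entropy on a same-person probability. This is a generic illustration, not the paper's architecture: the function names, the `weight` parameter, and the plain sum are assumptions, and the dropout strategies are not modelled.

```python
import math


def softmax_cross_entropy(logits, label):
    """Classification loss: negative log-probability of the true identity."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[label]


def binary_cross_entropy(same_prob, is_same, eps=1e-12):
    """Verification loss: does the pair show the same person?"""
    p = min(max(same_prob, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -math.log(p) if is_same else -math.log(1.0 - p)


def combined_loss(logits_a, label_a, logits_b, label_b, same_prob, weight=1.0):
    """Hypothetical combination: identity loss on both images plus a weighted pair loss."""
    cls = softmax_cross_entropy(logits_a, label_a) + softmax_cross_entropy(logits_b, label_b)
    ver = binary_cross_entropy(same_prob, label_a == label_b)
    return cls + weight * ver


# Two images of identity 0, uninformative logits, and an undecided pair score.
loss = combined_loss([0.0, 0.0], 0, [0.0, 0.0], 0, same_prob=0.5)
```

With two candidate identities and no information in any of the outputs, each of the three terms equals log 2, so the combined loss is 3 log 2.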
