Mehnaz Tabassum

Diagnostic Performance of Deep Learning for Predicting Gliomas' IDH and 1p/19q Status in MRI: A Systematic Review and Meta-Analysis

Oct 28, 2024

Abstract: Gliomas, the most common primary brain tumors, show high heterogeneity in histological and molecular characteristics. Accurate molecular profiling, such as determining isocitrate dehydrogenase (IDH) mutation and 1p/19q codeletion status, is critical for diagnosis, treatment, and prognosis. This review evaluates the efficacy of MRI-based deep learning (DL) models in predicting these biomarkers. Following PRISMA guidelines, we systematically searched major databases (PubMed, Scopus, Ovid, and Web of Science) up to February 2024, screening studies that used DL to predict IDH and 1p/19q codeletion status from MRI data of glioma patients. We assessed quality and risk of bias using the radiomics quality score and the QUADAS-2 tool. Our meta-analysis used a bivariate model to compute pooled sensitivity and specificity, and meta-regression to assess inter-study heterogeneity. Of the 565 articles, 57 were selected for qualitative synthesis, and 52 underwent meta-analysis. The pooled estimates showed high diagnostic performance, with validation sensitivity, specificity, and area under the curve (AUC) of 0.84 [prediction interval (PI): 0.67-0.93, I²=51.10%, p < 0.05], 0.87 [PI: 0.49-0.98, I²=82.30%, p < 0.05], and 0.89 for IDH prediction, and 0.76 [PI: 0.28-0.96, I²=77.60%, p < 0.05], 0.85 [PI: 0.49-0.97, I²=80.30%, p < 0.05], and 0.90 for 1p/19q prediction, respectively. Meta-regression analyses revealed significant heterogeneity influenced by glioma grade, data source, inclusion of non-radiomics data, MRI sequences, segmentation and feature extraction methods, and validation techniques. DL models demonstrate strong potential for predicting molecular biomarkers from MRI scans, with significant variability influenced by technical and clinical factors. Thorough external validation is necessary to increase clinical utility.
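The heterogeneity figures quoted in this abstract are percentages of between-study variation. As a rough illustration only, the sketch below computes such a statistic from Cochran's Q for logit-transformed sensitivities. It is a simplified univariate calculation, not the bivariate model the abstract describes, and the function names and the example counts are hypothetical.

```python
import math


def logit_sensitivity(tp, fn):
    """Return the logit of sensitivity and its approximate variance.

    A 0.5 continuity correction keeps the logit finite when a cell is zero.
    """
    tp_c, fn_c = tp + 0.5, fn + 0.5
    return math.log(tp_c / fn_c), 1.0 / tp_c + 1.0 / fn_c


def i_squared(effects, variances):
    """Return the I-squared heterogeneity statistic as a percentage.

    Uses inverse-variance weights and Cochran's Q:
    I^2 = max(0, (Q - df) / Q) * 100.
    """
    weights = [1.0 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    if df <= 0 or q <= 0:
        return 0.0
    return max(0.0, (q - df) / q) * 100.0


# Made-up (true positive, false negative) counts for three imaginary studies.
studies = [(45, 5), (30, 12), (60, 9)]
effects, variances = zip(*(logit_sensitivity(tp, fn) for tp, fn in studies))
print(f"I^2 = {i_squared(effects, variances):.1f}%")
```

Values near 0% suggest the studies agree beyond sampling error, while values above roughly 75% are conventionally read as considerable heterogeneity.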

Towards Effective Authorship Attribution: Integrating Class-Incremental Learning

Aug 12, 2024

Abstract: Authorship attribution (AA) is the process of attributing an unidentified document to its true author from a predefined group of known candidates, each possessing multiple samples. The nature of AA necessitates accommodating emerging new authors, as each individual must be considered unique. This uniqueness can be attributed to various factors, including stylistic preferences, areas of expertise, gender, cultural background, and other personal characteristics that influence writing. These diverse attributes contribute to the distinctiveness of each author, making it essential for AA systems to recognize and account for these variations. However, current AA benchmarks commonly overlook this uniqueness and frame the problem as closed-world classification, assuming a fixed number of authors throughout the system's lifespan and neglecting the inclusion of emerging new authors. This oversight renders the majority of existing approaches ineffective for real-world applications of AA, where continuous learning is essential. These inefficiencies manifest as current models either resisting the learning of new authors or experiencing catastrophic forgetting, where the introduction of new data causes the models to lose previously acquired knowledge. To address these inefficiencies, we propose redefining AA as class-incremental learning (CIL), where new authors are introduced incrementally after the initial training phase, allowing the system to adapt and learn continuously. To achieve this, we briefly examine CIL approaches introduced in other domains. Moreover, we adopt several well-known CIL methods and examine their strengths and weaknesses in the context of AA. Additionally, we outline potential future directions for advancing CIL AA systems. As a result, our paper can serve as a starting point for evolving AA systems from closed-world models to continual learning through CIL paradigms.
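To make the class-incremental setting concrete, here is a minimal sketch of a nearest-class-mean classifier that accepts new authors after initial training without touching what it stored for earlier ones. It is not one of the methods the paper adopts. The class name, the two-dimensional feature vectors, and the author labels are all hypothetical, and a real system would derive features from text.

```python
from math import dist


class NearestMeanAuthorClassifier:
    """Keeps one running mean (centroid) of feature vectors per author."""

    def __init__(self):
        self.sums = {}    # author -> element-wise sum of feature vectors
        self.counts = {}  # author -> number of vectors seen

    def add_samples(self, author, vectors):
        """Add samples for a new or existing author; other authors are untouched."""
        for vec in vectors:
            if author not in self.sums:
                self.sums[author] = [0.0] * len(vec)
                self.counts[author] = 0
            self.sums[author] = [s + x for s, x in zip(self.sums[author], vec)]
            self.counts[author] += 1

    def _centroid(self, author):
        return [s / self.counts[author] for s in self.sums[author]]

    def predict(self, vec):
        """Return the author whose centroid is closest in Euclidean distance."""
        return min(self.sums, key=lambda a: dist(vec, self._centroid(a)))


# Initial training phase with one author (made-up stylometric features).
clf = NearestMeanAuthorClassifier()
clf.add_samples("author_a", [[0.0, 0.0], [0.0, 2.0]])

# Incremental step: a new author arrives later.
clf.add_samples("author_b", [[10.0, 10.0]])

print(clf.predict([9.0, 9.0]))
print(clf.predict([0.0, 1.0]))
```

Because each author's statistics are stored separately, adding an author cannot overwrite earlier knowledge, which is the failure mode the abstract calls catastrophic forgetting. Models with shared learned parameters do not get this property for free.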

When Eye-Tracking Meets Machine Learning: A Systematic Review on Applications in Medical Image Analysis

Mar 12, 2024

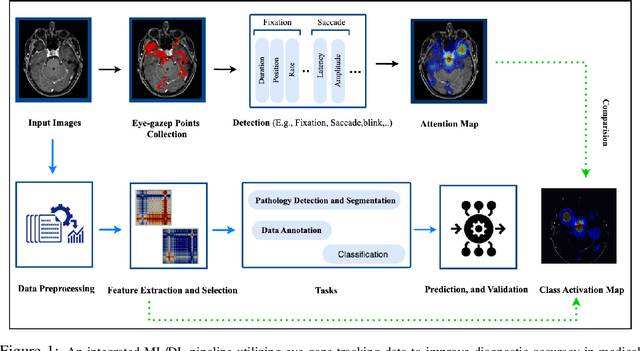

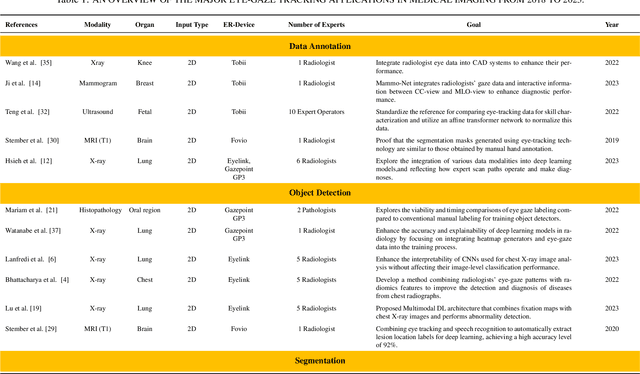

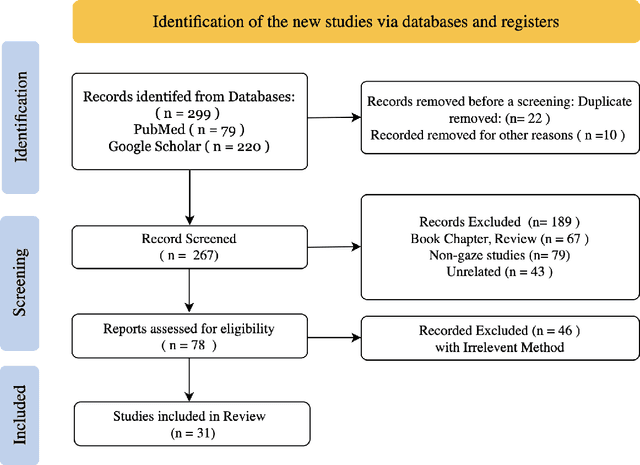

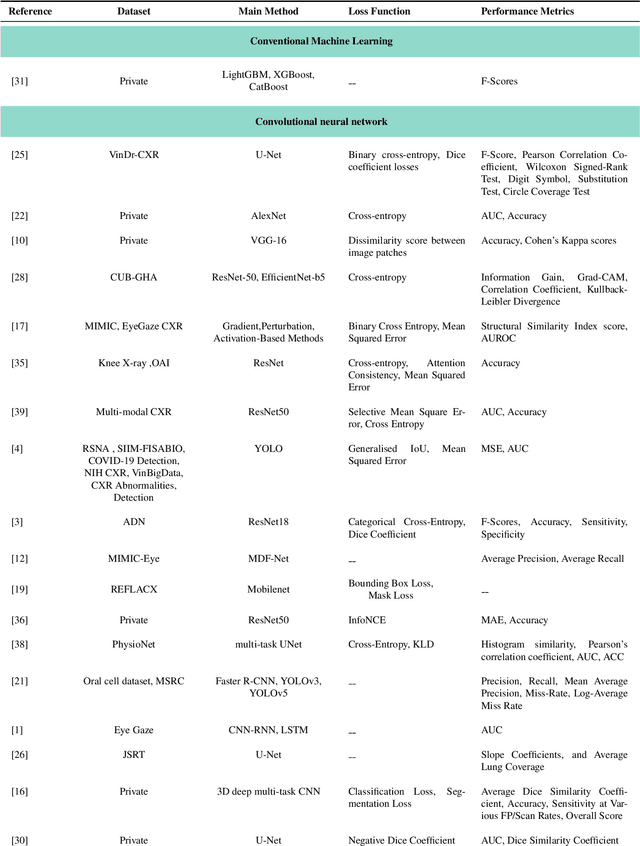

Abstract: Eye-gaze tracking research offers significant promise for enhancing healthcare-related tasks, above all medical image analysis and interpretation. Eye tracking, a technology that monitors and records the movement of the eyes, provides valuable insights into human visual attention patterns. This technology can transform how healthcare professionals and medical specialists engage with and analyze diagnostic images, offering a more insightful and efficient approach to medical diagnostics. Hence, extracting meaningful features and insights from medical images by leveraging eye-gaze data improves our understanding of how radiologists and other medical experts monitor, interpret, and understand images for diagnostic purposes. Eye-tracking data, which embeds intricate human visual attention patterns, provides a bridge between artificial intelligence (AI) development and human cognition. This integration allows novel methods to incorporate domain knowledge into machine learning (ML) and deep learning (DL) approaches, enhancing their alignment with human-like perception and decision-making. Moreover, extensive collections of eye-tracking data have enabled novel ML/DL methods to analyze human visual patterns, paving the way to a better understanding of human vision, attention, and cognition. This systematic review investigates in depth the applications and methodologies of eye-gaze tracking for enhancing ML/DL algorithms in medical image analysis.
