Mehmet A. Orgun

UniMOS: A Universal Framework For Multi-Organ Segmentation Over Label-Constrained Datasets

Nov 20, 2023

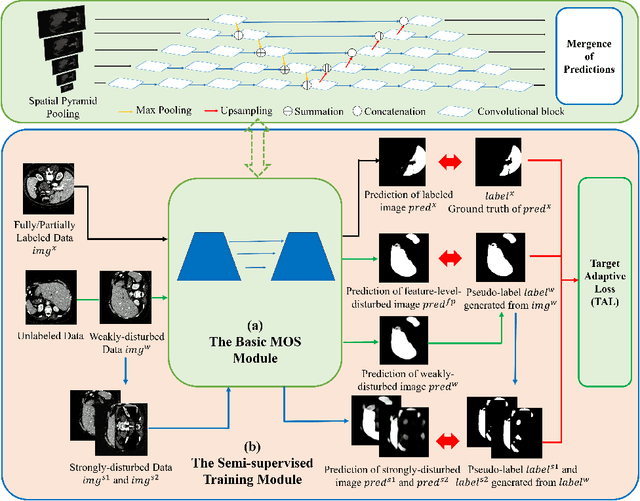

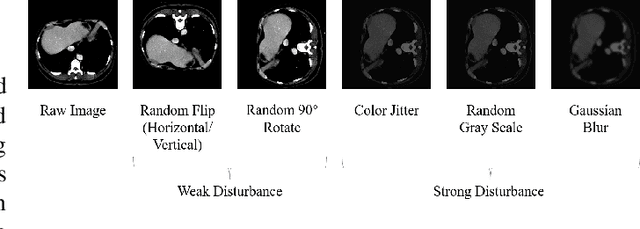

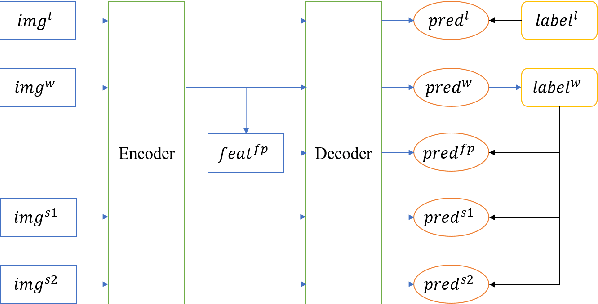

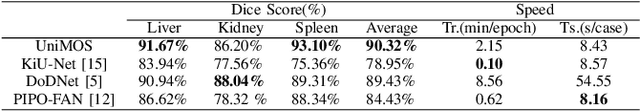

Abstract: Machine learning models for medical images can help physicians diagnose and manage diseases. However, annotating medical images requires considerable manpower and expertise, and clinical departments annotate images according to their own tasks. As a result, labeled medical images are scarce relative to unlabeled ones, and many datasets annotate only a single organ. In this paper, we present UniMOS, the first universal framework that makes use of fully labeled, partially labeled, and unlabeled images. Specifically, we construct a Multi-Organ Segmentation (MOS) module over fully/partially labeled data as the basenet and design a new target adaptive loss. Furthermore, we incorporate a semi-supervised training module that combines consistency regularization and pseudo-labeling techniques on unlabeled data, which significantly improves the segmentation of unlabeled data. Experiments show that the framework performs well on several medical image segmentation tasks compared to other advanced methods, while also significantly improving data utilization and reducing annotation cost. Code and models are available at: https://github.com/lw8807001/UniMOS.
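The abstract does not spell out the target adaptive loss, so the sketch below shows a different, well-known idea for partially labeled data, often called a marginal loss: when a dataset annotates only some organs, a pixel recorded as "background" may actually belong to an un-annotated organ, so the probabilities of background and all un-annotated classes are summed before taking the log. The function name `marginal_cross_entropy` and the class layout (index 0 as background) are assumptions made for illustration, not part of UniMOS.

```python
import math


def marginal_cross_entropy(probs, label, annotated):
    """Per-pixel loss for a partially labeled dataset (illustrative sketch).

    probs:     predicted class probabilities for one pixel; index 0 is background.
    label:     class index recorded in the dataset for this pixel.
    annotated: set of foreground class indices that this dataset actually labels.
    """
    if label != 0:
        # A foreground label is trusted as-is.
        p = probs[label]
    else:
        # "Background" may hide organs this dataset never annotates,
        # so merge background with every un-annotated foreground class.
        p = probs[0] + sum(
            q for c, q in enumerate(probs) if c != 0 and c not in annotated
        )
    return -math.log(max(p, 1e-12))  # clamp to avoid log(0)


if __name__ == "__main__":
    # Hypothetical classes: 0 = background, 1 = liver, 2 = kidney.
    pixel_probs = [0.2, 0.1, 0.7]
    # Liver-only dataset: a "background" pixel predicted as kidney is barely penalized.
    print(marginal_cross_entropy(pixel_probs, label=0, annotated={1}))
    # Fully labeled dataset: the same prediction is penalized as a real error.
    print(marginal_cross_entropy(pixel_probs, label=0, annotated={1, 2}))
```

With this kind of loss, a single network can be trained on a mix of single-organ and multi-organ datasets without punishing correct predictions for organs that a given dataset simply did not label.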

Beyond CNNs: Exploiting Further Inherent Symmetries in Medical Image Segmentation

Jul 29, 2022

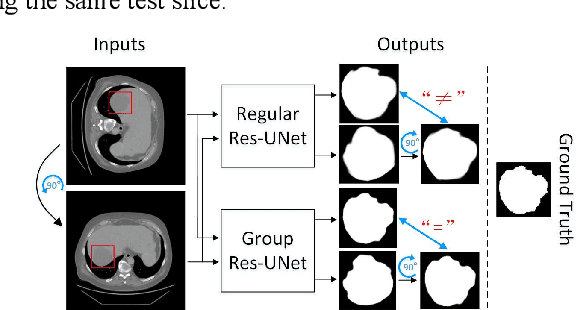

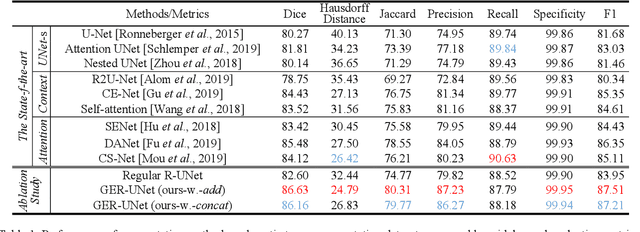

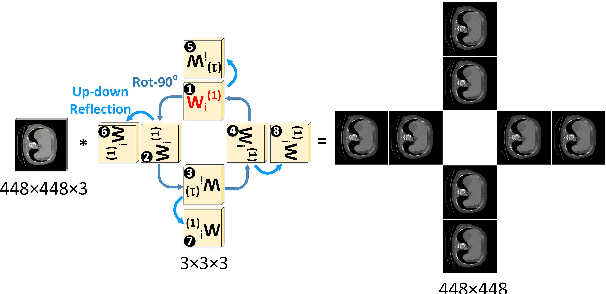

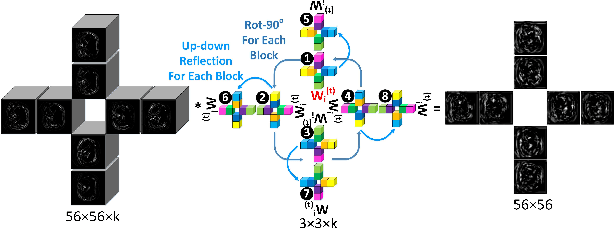

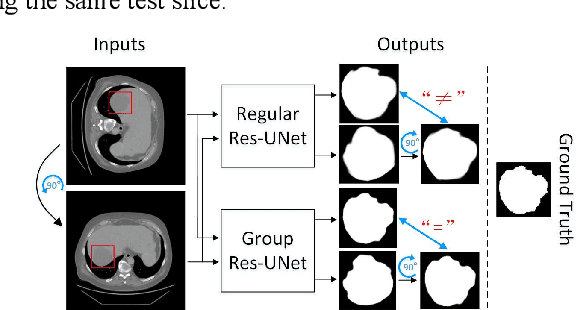

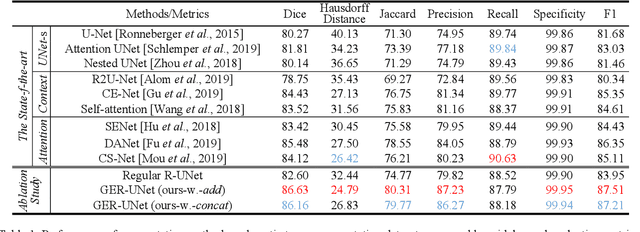

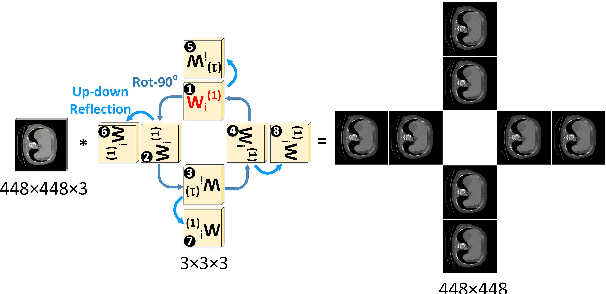

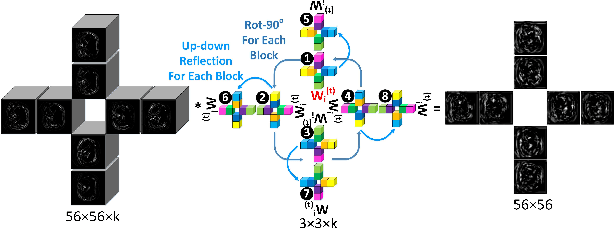

Abstract: Automatic tumor or lesion segmentation is a crucial step in medical image analysis for computer-aided diagnosis. Although existing methods based on Convolutional Neural Networks (CNNs) have achieved state-of-the-art performance, many challenges remain in medical tumor segmentation. This is because, although the human visual system can detect symmetries in 2D images effectively, regular CNNs can only exploit translation invariance, overlooking further inherent symmetries in medical images such as rotations and reflections. To solve this problem, we propose a novel group equivariant segmentation framework that encodes those inherent symmetries to learn more precise representations. First, kernel-based equivariant operations are devised on each orientation, which effectively address the gaps in learning symmetries left by existing approaches. Then, to keep segmentation networks globally equivariant, we design distinctive group layers with layer-wise symmetry constraints. Finally, based on our novel framework, extensive experiments conducted on real-world clinical data demonstrate that a Group Equivariant Res-UNet (named GER-UNet) outperforms its regular CNN-based counterpart and the state-of-the-art segmentation methods in the tasks of hepatic tumor segmentation, COVID-19 lung infection segmentation and retinal vessel detection. More importantly, the newly built GER-UNet also shows potential in reducing the sample complexity and the redundancy of filters, upgrading current segmentation CNNs and delineating organs on other medical imaging modalities.
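To make the notion of rotation equivariance concrete, here is a minimal pure-Python sketch of a lifting correlation over the four 90-degree rotations (the p4 group), in the style of standard group equivariant CNNs. It is not the GER-UNet implementation; the helper names `rot90`, `correlate`, `lift`, and `check_equivariance` are hypothetical. The property it checks is that rotating the input rotates every output map and cyclically shifts the orientation axis by one step.

```python
def rot90(a):
    """Rotate a square 2D list counterclockwise by 90 degrees."""
    return [list(row) for row in zip(*a)][::-1]


def correlate(img, ker):
    """'Valid' 2D cross-correlation of a square image with a square kernel."""
    n, k = len(img), len(ker)
    m = n - k + 1
    return [
        [
            sum(img[i + a][j + b] * ker[a][b] for a in range(k) for b in range(k))
            for j in range(m)
        ]
        for i in range(m)
    ]


def lift(img, ker):
    """Correlate the image with all four rotated copies of one kernel.

    Returns a list of four feature maps; entry r uses the kernel rotated r times.
    """
    maps = []
    for _ in range(4):
        maps.append(correlate(img, ker))
        ker = rot90(ker)
    return maps


def check_equivariance(img, ker):
    """True if lift(rot90(img)) equals lift(img) rotated and shifted by one orientation."""
    plain = lift(img, ker)
    rotated = lift(rot90(img), ker)
    return all(rotated[r] == rot90(plain[(r - 1) % 4]) for r in range(4))


if __name__ == "__main__":
    image = [[(3 * i + 7 * j) % 5 for j in range(5)] for i in range(5)]
    kernel = [[1, 0, -1], [2, 0, -2], [0, 3, 1]]
    print(check_equivariance(image, kernel))
```

A regular convolution layer learns one filter per orientation it needs to detect; here a single filter is shared across four orientations, which is one intuition behind the reduced filter redundancy the abstract mentions.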

Graph Learning based Recommender Systems: A Review

May 13, 2021

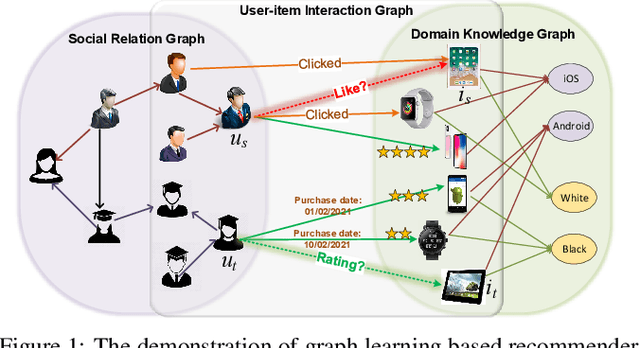

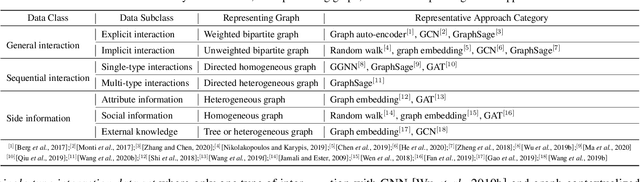

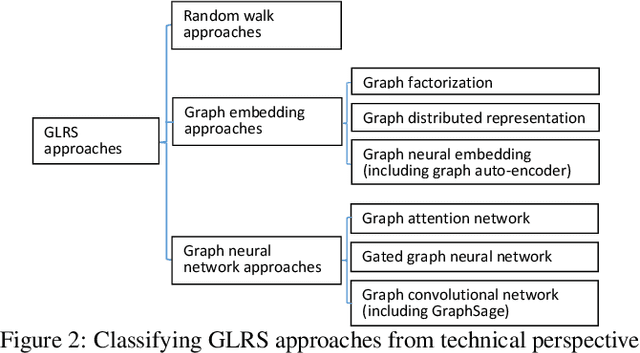

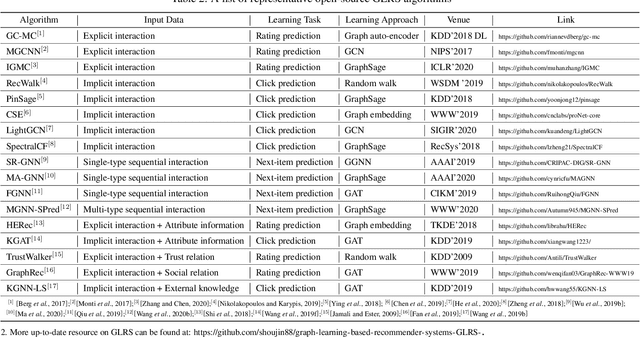

Abstract: Recent years have witnessed the fast development of the emerging topic of Graph Learning based Recommender Systems (GLRS). GLRS employ advanced graph learning approaches to model users' preferences and intentions as well as items' characteristics for recommendations. Unlike other RS approaches, including content-based filtering and collaborative filtering, GLRS are built on graphs where the important objects, e.g., users, items, and attributes, are either explicitly or implicitly connected. With the rapid development of graph learning techniques, exploring and exploiting homogeneous or heterogeneous relations in graphs is a promising direction for building more effective RS. In this paper, we provide a systematic review of GLRS, discussing how they extract important knowledge from graph-based representations to improve the accuracy, reliability and explainability of recommendations. First, we characterize and formalize GLRS, and then summarize and categorize the key challenges and main progress in this novel research area. Finally, we share some new research directions in this vibrant area.
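As a toy illustration of recommending over a graph, the sketch below builds a user-item bipartite graph from interaction pairs and scores unseen items by counting the paths user → item → other user → new item. This is a simple path-counting heuristic chosen for brevity, not a method taken from the review; the function name `recommend` and the sample data are hypothetical.

```python
from collections import defaultdict


def recommend(interactions, user, top_k=3):
    """Rank unseen items for `user` by counting 3-hop paths in a bipartite graph.

    interactions: iterable of (user, item) edges.
    """
    user_items = defaultdict(set)
    item_users = defaultdict(set)
    for u, i in interactions:
        user_items[u].add(i)
        item_users[i].add(u)

    seen = user_items[user]
    scores = defaultdict(int)
    for item in seen:
        for neighbor in item_users[item]:
            if neighbor == user:
                continue
            for candidate in user_items[neighbor]:
                if candidate not in seen:
                    # One more path user -> item -> neighbor -> candidate.
                    scores[candidate] += 1

    # Highest path count first; ties broken by item name for determinism.
    return sorted(scores, key=lambda j: (-scores[j], j))[:top_k]


if __name__ == "__main__":
    edges = [
        ("ann", "a"), ("ann", "b"),
        ("bob", "a"), ("bob", "b"), ("bob", "c"),
        ("cat", "b"), ("cat", "c"), ("cat", "d"),
    ]
    print(recommend(edges, "ann"))
```

Graph learning methods replace this hand-written path count with learned representations, but the underlying signal is the same: connectivity between users, items, and attributes.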

Beyond CNNs: Exploiting Further Inherent Symmetries in Medical Images for Segmentation

May 08, 2020

Abstract: Automatic tumor segmentation is a crucial step in medical image analysis for computer-aided diagnosis. Although existing methods based on convolutional neural networks (CNNs) have achieved state-of-the-art performance, many challenges remain in medical tumor segmentation. This is because regular CNNs can only exploit translation invariance, ignoring further inherent symmetries in medical images such as rotations and reflections. To mitigate this shortcoming, we propose a novel group equivariant segmentation framework that encodes those inherent symmetries to learn more precise representations. First, kernel-based equivariant operations are devised on every orientation, which can effectively address the gaps in learning symmetries left by existing approaches. Then, to keep segmentation networks globally equivariant, we design distinctive group layers with layerwise symmetry constraints. By exploiting further symmetries, novel segmentation CNNs can dramatically reduce the sample complexity and the redundancy of filters (by roughly 2/3) over regular CNNs. More importantly, based on our novel framework, we show that a newly built GER-UNet outperforms its regular CNN-based counterpart and the state-of-the-art segmentation methods on real-world clinical data. Specifically, the group layers of our segmentation framework can be seamlessly integrated into any popular CNN-based segmentation architecture.
