Maximilian Nielsen

Cluster-based human-in-the-loop strategy for improving machine learning-based circulating tumor cell detection in liquid biopsy

Nov 25, 2024

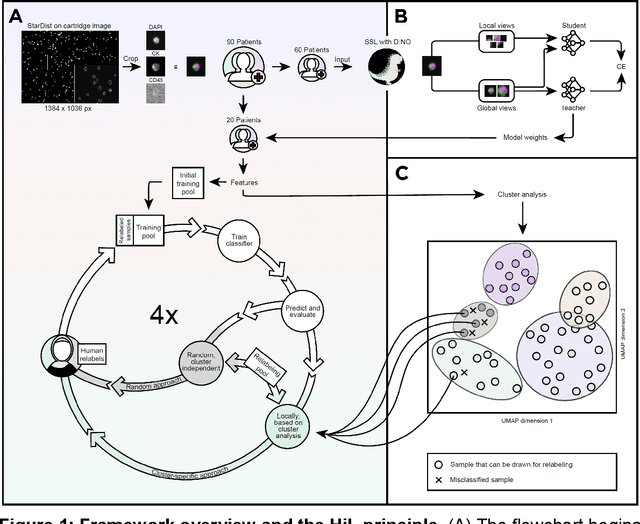

Abstract: Detection and differentiation of circulating tumor cells (CTCs) and non-CTCs in blood draws of cancer patients pose multiple challenges. While the gold standard relies on tedious manual evaluation of an automatically generated selection of images, machine learning (ML) techniques offer the potential to automate these processes. However, human assessment remains indispensable when the ML system arrives at uncertain or wrong decisions due to an insufficient set of labeled training data. This study introduces a human-in-the-loop (HiL) strategy for improving ML-based CTC detection. We combine self-supervised deep learning and a conventional ML-based classifier and propose iterative targeted sampling and labeling of new unlabeled training samples by human experts. The sampling strategy is based on the classification performance of local latent space clusters. The advantages of the proposed approach compared to naive random sampling are demonstrated for liquid biopsy data from patients with metastatic breast cancer.
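The cluster-based sampling idea can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the use of plain accuracy as the per-cluster performance measure, and the fixed number of samples drawn per cluster are all assumptions made for illustration. Cluster assignments are assumed to come from some clustering of the latent space that is computed elsewhere.

```python
import random
from collections import defaultdict


def cluster_accuracy(cluster_ids, y_true, y_pred):
    """Accuracy of the classifier within each latent-space cluster.

    All three arguments are parallel lists over the *labeled* samples.
    (Hypothetical helper; accuracy is an assumed performance measure.)
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for c, t, p in zip(cluster_ids, y_true, y_pred):
        totals[c] += 1
        hits[c] += int(t == p)
    return {c: hits[c] / totals[c] for c in totals}


def select_for_labeling(unlabeled_clusters, acc_by_cluster,
                        n_clusters, n_per_cluster, seed=0):
    """Pick unlabeled sample indices from the worst-performing clusters.

    unlabeled_clusters[i] is the cluster id of unlabeled sample i.
    Returns the chosen cluster ids and the sample indices to show an expert.
    """
    rng = random.Random(seed)
    # Sort by accuracy (ties broken by cluster id) and keep the weakest ones.
    worst = sorted(acc_by_cluster, key=lambda c: (acc_by_cluster[c], c))[:n_clusters]
    chosen = []
    for c in worst:
        pool = [i for i, uc in enumerate(unlabeled_clusters) if uc == c]
        chosen.extend(rng.sample(pool, min(n_per_cluster, len(pool))))
    return worst, chosen
```

In an iterative loop, the expert labels the returned samples, the classifier is refit, and the per-cluster performance is recomputed. Naive random sampling corresponds to drawing indices uniformly without regard to cluster.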

Surgical tool classification and localization: results and methods from the MICCAI 2022 SurgToolLoc challenge

May 11, 2023

Abstract: The ability to automatically detect and track surgical instruments in endoscopic videos can enable transformational interventions. Assessing surgical performance and efficiency, identifying skilled tool use and choreography, and planning operational and logistical aspects of OR resources are just a few of the applications that could benefit. Unfortunately, obtaining the annotations needed to train machine learning models to identify and localize surgical tools is a difficult task. Annotating bounding boxes frame-by-frame is tedious and time-consuming, yet large amounts of data with a wide variety of surgical tools and surgeries must be captured for robust training. Moreover, ongoing annotator training is needed to stay up to date with surgical instrument innovation. In robotic-assisted surgery, however, potentially informative data like timestamps of instrument installation and removal can be programmatically harvested. The ability to rely on tool installation data alone would significantly reduce the workload to train robust tool-tracking models. With this motivation in mind, we invited the surgical data science community to participate in the SurgToolLoc 2022 challenge. The goal was to leverage tool presence data as weak labels for machine learning models trained to detect tools and localize them in video frames with bounding boxes. We present the results of this challenge along with many of the teams' efforts. We conclude by discussing these results in the broader context of machine learning and surgical data science. The training data used for this challenge, consisting of 24,695 video clips with tool presence labels, is also being released publicly and can be accessed at https://console.cloud.google.com/storage/browser/isi-surgtoolloc-2022.

Self-supervision for medical image classification: state-of-the-art performance with ~100 labeled training samples per class

Apr 11, 2023

Abstract: Is self-supervised deep learning (DL) for medical image analysis already a serious alternative to the de facto standard of end-to-end trained supervised DL? We tackle this question for medical image classification, with a particular focus on one of the currently most limiting factors of the field: the (non-)availability of labeled data. Based on three common medical imaging modalities (bone marrow microscopy, gastrointestinal endoscopy, dermoscopy) and publicly available data sets, we analyze the performance of self-supervised DL within the self-distillation with no labels (DINO) framework. After learning an image representation without use of image labels, conventional machine learning classifiers are applied. The classifiers are fit using a systematically varied number of labeled data (1-1000 samples per class). Exploiting the learned image representation, we achieve state-of-the-art classification performance for all three imaging modalities and data sets with only a fraction of between 1% and 10% of the available labeled data and about 100 labeled samples per class.
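The experimental pattern described here (fix a learned representation, then fit a simple classifier on a varied number of labeled samples per class) can be sketched as follows. This is an illustration under stated assumptions, not the paper's code: the feature vectors stand in for precomputed self-supervised embeddings, the nearest-centroid classifier is a stand-in for the unspecified "conventional machine learning classifiers", and all function names are hypothetical.

```python
import random
from collections import defaultdict


def sample_per_class(labels, k, seed=0):
    """Return indices of at most k randomly chosen samples per class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    picked = []
    for y in sorted(by_class):
        idx = by_class[y]
        picked.extend(rng.sample(idx, min(k, len(idx))))
    return picked


def fit_centroids(features, labels):
    """Nearest-centroid 'training': the mean feature vector of each class."""
    sums, counts = {}, defaultdict(int)
    for x, y in zip(features, labels):
        if y not in sums:
            sums[y] = [0.0] * len(x)
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict(centroids, x):
    """Assign x to the class whose centroid is closest (squared Euclidean)."""
    def sqdist(a, b):
        return sum((u - v) ** 2 for u, v in zip(a, b))
    return min(sorted(centroids), key=lambda y: sqdist(centroids[y], x))
```

Repeating the fit for several values of `k` (for example 1, 10, 100, 1000) and scoring on a held-out set gives a performance curve over the number of labeled samples per class.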

Skin Lesion Classification Using Ensembles of Multi-Resolution EfficientNets with Meta Data

Oct 09, 2019

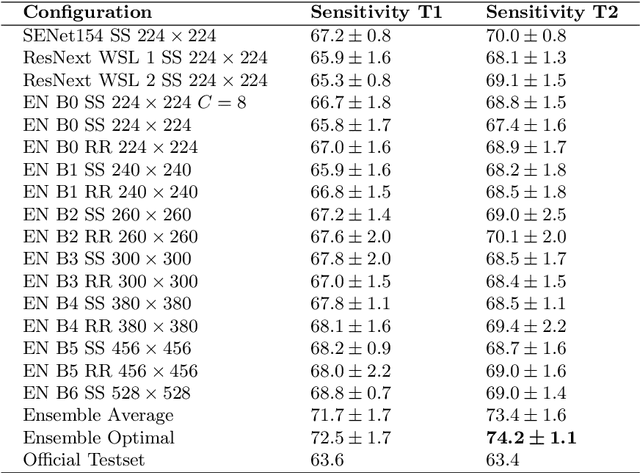

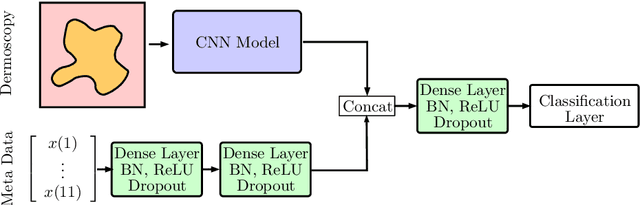

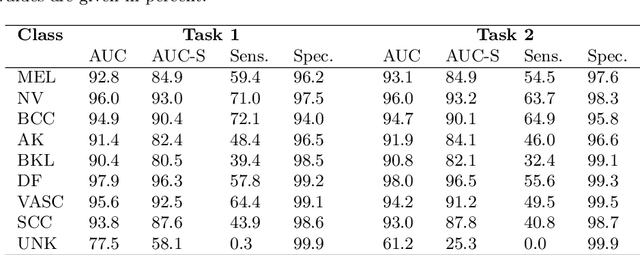

Abstract: In this paper, we describe our method for the ISIC 2019 Skin Lesion Classification Challenge. The challenge comes with two tasks. For task 1, skin lesions have to be classified based on dermoscopic images. For task 2, dermoscopic images and additional patient meta data have to be used. A diverse dataset of 25,000 images was provided for training, containing images from eight classes. The final test set contains an additional, unknown class. We address this challenging problem with a simple, data-driven approach by including external data with skin lesion types that are not present in the training set. Furthermore, multi-class skin lesion classification comes with the problem of severe class imbalance. We try to overcome this problem by using loss balancing. Also, the dataset contains images with very different resolutions. We take care of this property by considering different model input resolutions and different cropping strategies. To incorporate meta data such as age, anatomical site, and sex, we use an additional dense neural network and fuse its features with the CNN. We aggregate all our models with an ensembling strategy where we search for the optimal subset of models. Our best ensemble achieves a balanced accuracy of 74.2% using five-fold cross-validation. On the official test set our method is ranked first for both tasks with a balanced accuracy of 63.6% for task 1 and 63.4% for task 2.
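Two quantities in this abstract lend themselves to a short illustration: balanced accuracy (the mean of per-class recalls, which is less sensitive to class imbalance than plain accuracy) and a search over model subsets for ensembling. The sketch below is an assumption-laden example, not the authors' procedure: the abstract does not state how the subset search was carried out or which criterion it optimized, so the exhaustive search, the probability averaging, and all names here are hypothetical.

```python
from itertools import combinations


def balanced_accuracy(y_true, y_pred):
    """Mean per-class recall over the classes present in y_true."""
    recalls = []
    for c in sorted(set(y_true)):
        idx = [i for i, t in enumerate(y_true) if t == c]
        recalls.append(sum(y_pred[i] == c for i in idx) / len(idx))
    return sum(recalls) / len(recalls)


def ensemble_predict(prob_sets):
    """Average class probabilities across models and take the argmax.

    prob_sets[m][i][k] is model m's probability of class k for sample i.
    """
    preds = []
    for i in range(len(prob_sets[0])):
        n_classes = len(prob_sets[0][i])
        avg = [sum(p[i][k] for p in prob_sets) / len(prob_sets)
               for k in range(n_classes)]
        preds.append(max(range(n_classes), key=lambda k: avg[k]))
    return preds


def best_subset(model_probs, y_true):
    """Exhaustively score every non-empty subset of models (assumed strategy).

    model_probs maps a model name to its validation probabilities.
    Returns (best balanced accuracy, tuple of model names).
    """
    names = sorted(model_probs)
    best = (-1.0, ())
    for r in range(1, len(names) + 1):
        for subset in combinations(names, r):
            preds = ensemble_predict([model_probs[m] for m in subset])
            score = balanced_accuracy(y_true, preds)
            if score > best[0]:
                best = (score, subset)
    return best
```

Exhaustive search grows as 2^n in the number of models, so it is only practical for small model pools; a greedy forward selection is a common cheaper alternative.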

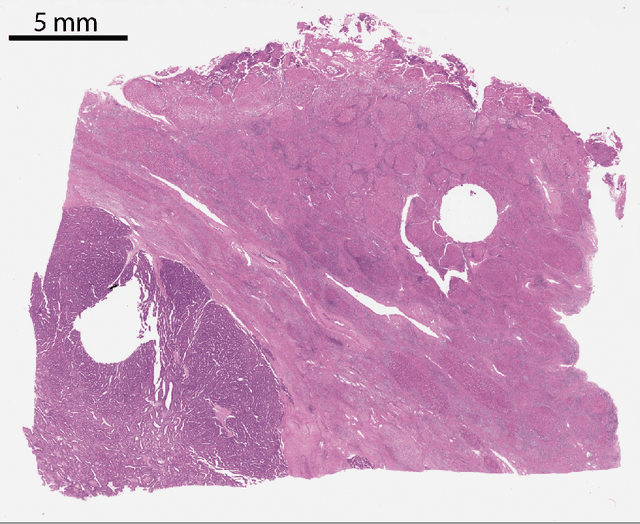

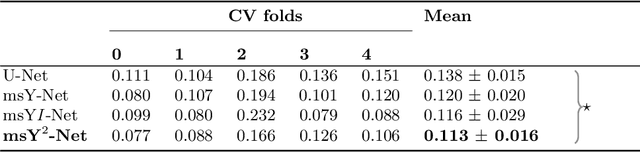

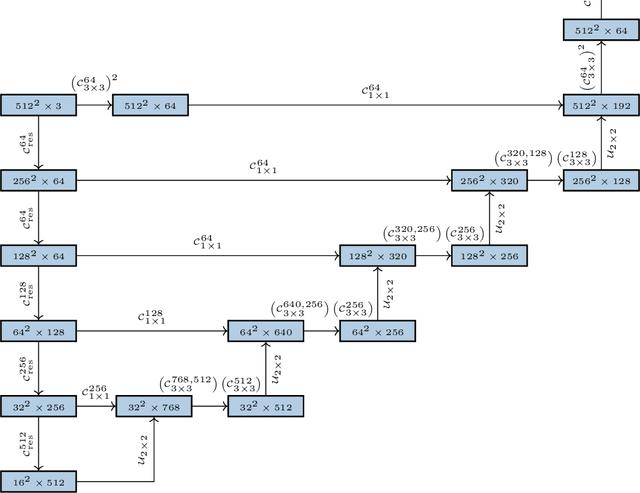

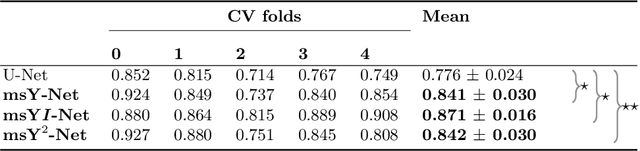

Multi-scale fully convolutional neural networks for histopathology image segmentation: from nuclear aberrations to the global tissue architecture

Sep 24, 2019

Abstract: Histopathologic diagnosis is dependent on simultaneous information from a broad range of scales, ranging from nuclear aberrations ($\approx \mathcal{O}(0.1 \mu m)$) over cellular structures ($\approx \mathcal{O}(10\mu m)$) to the global tissue architecture ($\gtrapprox \mathcal{O}(1 mm)$). Bearing in mind which information is employed by human pathologists, we introduce and examine different strategies for the integration of multiple and widely separate spatial scales into common U-Net-based architectures. Based on this, we present a family of new, end-to-end trainable, multi-scale multi-encoder fully-convolutional neural networks for human modus operandi-inspired computer vision in histopathology.
