Matteo Terzi

On the Properties of Adversarially-Trained CNNs

Mar 17, 2022

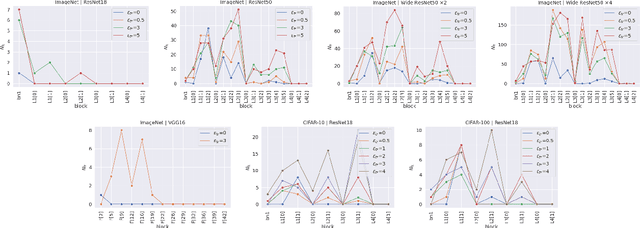

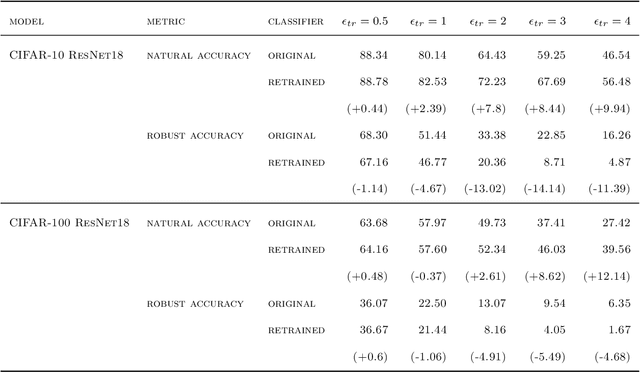

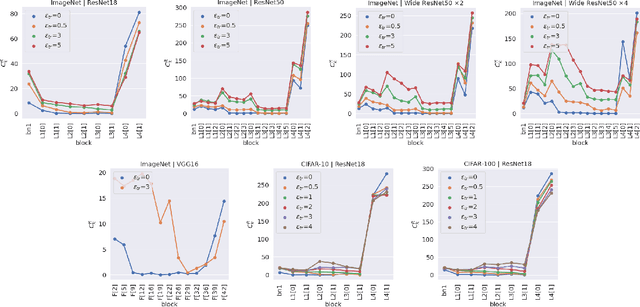

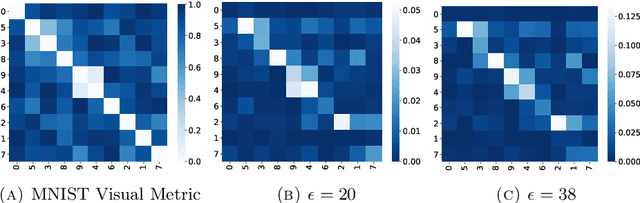

Abstract: Adversarial Training has proved to be an effective training paradigm to enforce robustness against adversarial examples in modern neural network architectures. Despite many efforts, explanations of the foundational principles underpinning the effectiveness of Adversarial Training are limited and far from being widely accepted by the Deep Learning community. In this paper, we describe surprising properties of adversarially-trained models, shedding light on mechanisms through which robustness against adversarial attacks is implemented. Moreover, we highlight limitations and failure modes affecting these models that were not discussed by prior works. We conduct extensive analyses on a wide range of architectures and datasets, performing a deep comparison between robust and natural models.

Improving Robustness with Image Filtering

Dec 21, 2021

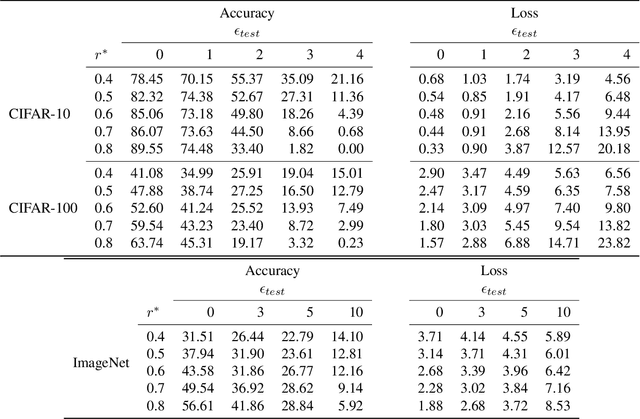

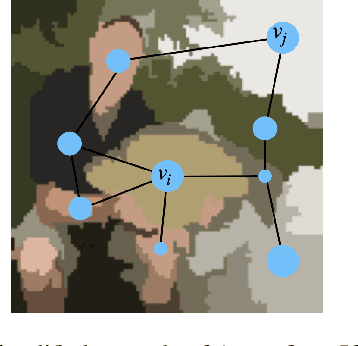

Abstract: Adversarial robustness is one of the most challenging problems in Deep Learning and Computer Vision research. All the state-of-the-art techniques require a time-consuming procedure that creates cleverly perturbed images. Due to its cost, many solutions have been proposed to avoid Adversarial Training. However, all these attempts proved ineffective as the attacker manages to exploit spurious correlations among pixels to trigger brittle features implicitly learned by the model. This paper first introduces a new image filtering scheme called Image-Graph Extractor (IGE) that extracts the fundamental nodes of an image and their connections through a graph structure. By leveraging the IGE representation, we build a new defense method, Filtering As a Defense, that does not allow the attacker to entangle pixels to create malicious patterns. Moreover, we show that data augmentation with filtered images effectively improves the model's robustness to data corruption. We validate our techniques on CIFAR-10, CIFAR-100, and ImageNet.

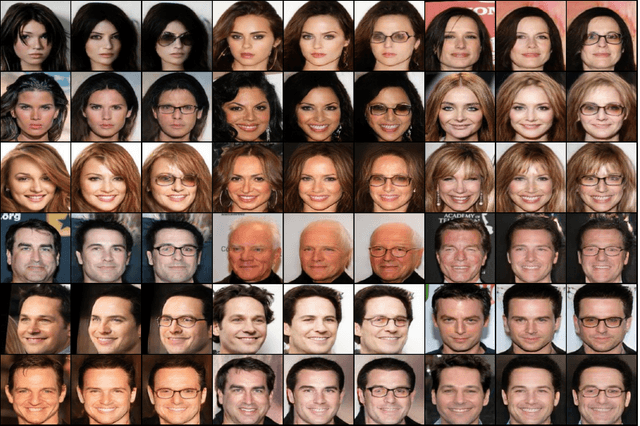

IntroVAC: Introspective Variational Classifiers for Learning Interpretable Latent Subspaces

Sep 14, 2020

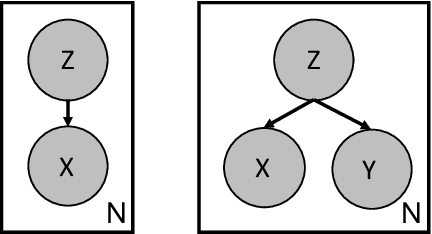

Abstract: Learning useful representations of complex data has been the subject of extensive research for many years. With the spread of Deep Neural Networks, Variational Autoencoders have gained considerable attention since they provide an explicit model of the data distribution based on an encoder/decoder architecture which is able to both generate images and encode them in a low-dimensional subspace. However, the latent space is not easily interpretable and the generation capabilities show some limitations since images typically look blurry and lack details. In this paper, we propose the Introspective Variational Classifier (IntroVAC), a model that learns interpretable latent subspaces by exploiting information from an additional label and provides improved image quality thanks to an adversarial training strategy. We show that IntroVAC is able to learn meaningful directions in the latent space enabling fine-grained manipulation of image attributes. We validate our approach on the CelebA dataset.

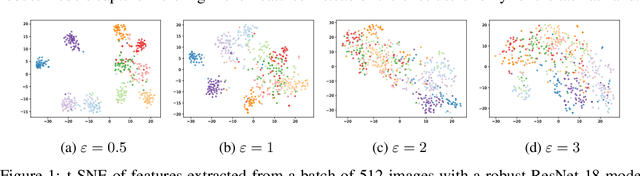

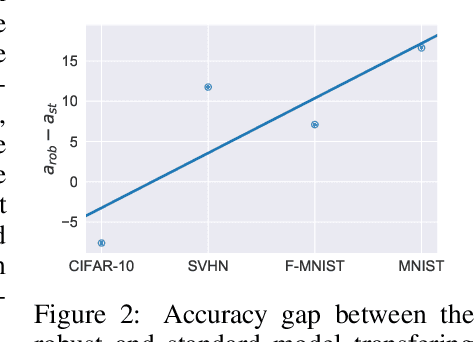

Adversarial Training Reduces Information and Improves Transferability

Aug 21, 2020

Abstract: Recent results show that features of adversarially trained networks for classification, in addition to being robust, enable desirable properties such as invertibility. The latter property may seem counter-intuitive as it is widely accepted by the community that classification models should only capture the minimal information (features) required for the task. Motivated by this discrepancy, we investigate the dual relationship between Adversarial Training and Information Theory. We show that Adversarial Training can improve linear transferability to new tasks, from which arises a new trade-off between transferability of representations and accuracy on the source task. We validate our results employing robust networks trained on CIFAR-10, CIFAR-100 and ImageNet on several datasets. Moreover, we show that Adversarial Training reduces Fisher information of representations about the input and of the weights about the task, and we provide a theoretical argument which explains the invertibility of deterministic networks without violating the principle of minimality. Finally, we leverage our theoretical insights to remarkably improve the quality of reconstructed images through inversion.

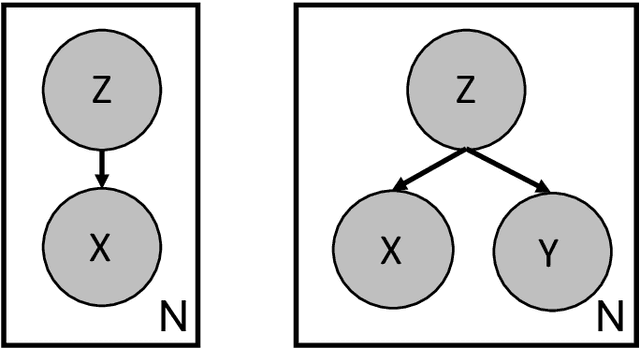

$β$-Variational Classifiers Under Attack

Aug 20, 2020

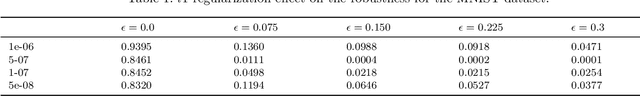

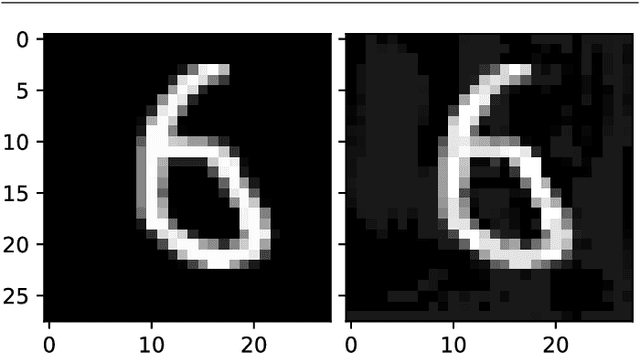

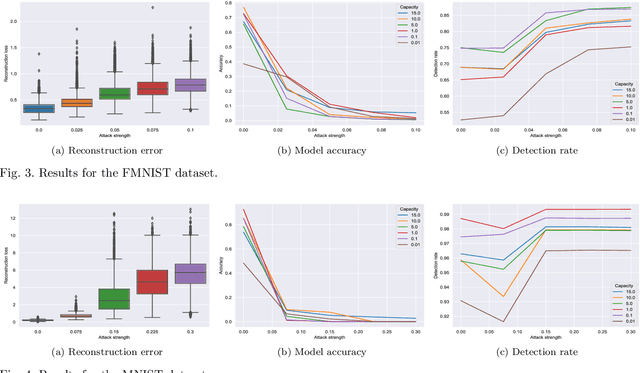

Abstract: Deep Neural Networks have gained lots of attention in recent years thanks to the breakthroughs obtained in the field of Computer Vision. However, despite their popularity, it has been shown that they provide limited robustness in their predictions. In particular, it is possible to synthesise small adversarial perturbations that imperceptibly modify a correctly classified input, making the network confidently misclassify it. This has led to a plethora of different methods to try to improve robustness or detect the presence of these perturbations. In this paper, we perform an analysis of $\beta$-Variational Classifiers, a particular class of methods that not only solve a specific classification task, but also provide a generative component that is able to generate new samples from the input distribution. In more detail, we study their robustness and detection capabilities, together with some novel insights on the generative part of the model.

Interpretable Anomaly Detection with DIFFI: Depth-based Feature Importance for the Isolation Forest

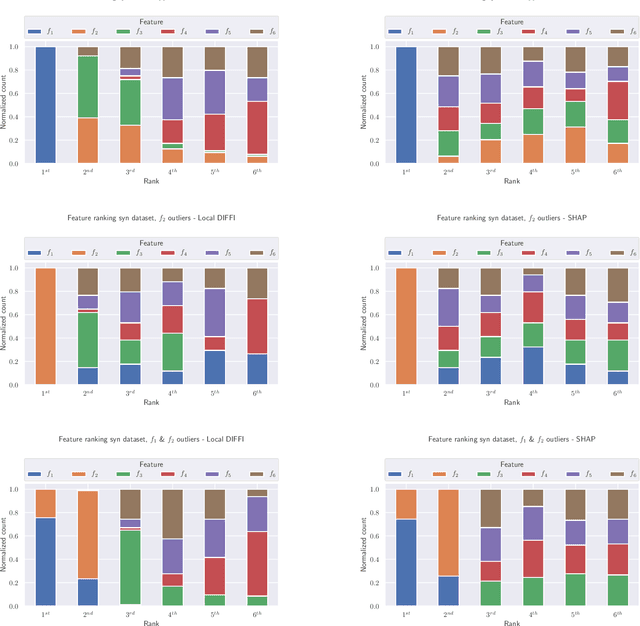

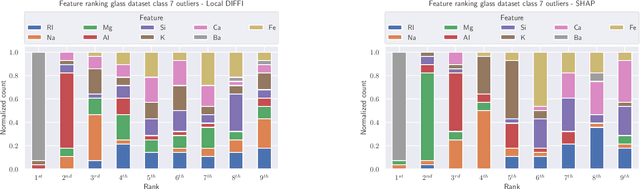

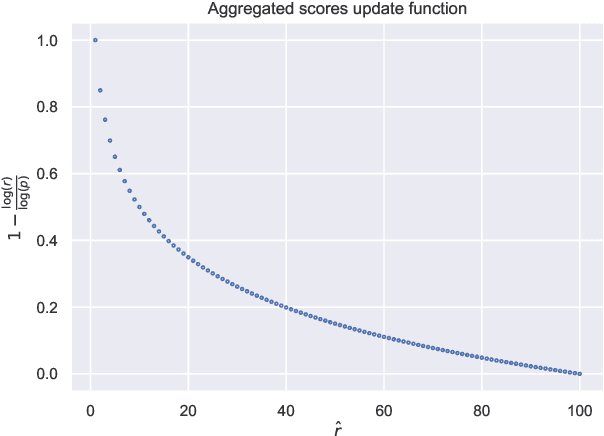

Jul 21, 2020

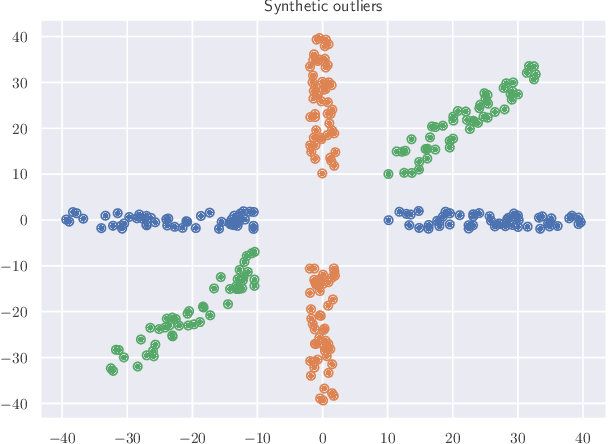

Abstract: Anomaly Detection is one of the most important tasks in unsupervised learning as it aims at detecting anomalous behaviours w.r.t. historical data; in particular, multivariate Anomaly Detection has an important role in many applications thanks to the capability of summarizing the status of a complex system or observed phenomenon with a single indicator (typically called 'Anomaly Score') and thanks to the unsupervised nature of the task that does not require human tagging. The Isolation Forest is one of the most commonly adopted algorithms in the field of Anomaly Detection, due to its proven effectiveness and low computational complexity. A major problem affecting the Isolation Forest is its lack of interpretability, as it is not possible to grasp the logic behind the model predictions. In this paper we propose effective, yet computationally inexpensive, methods to define feature importance scores at both global and local level for the Isolation Forest. Moreover, we define a procedure to perform unsupervised feature selection for Anomaly Detection problems based on our interpretability method. We provide an extensive analysis of the proposed approaches, including comparisons against state-of-the-art interpretability techniques. We assess the performance on several synthetic and real-world datasets and make the code publicly available to enhance reproducibility and foster research in the field.

Directional Adversarial Training for Cost Sensitive Deep Learning Classification Applications

Oct 08, 2019

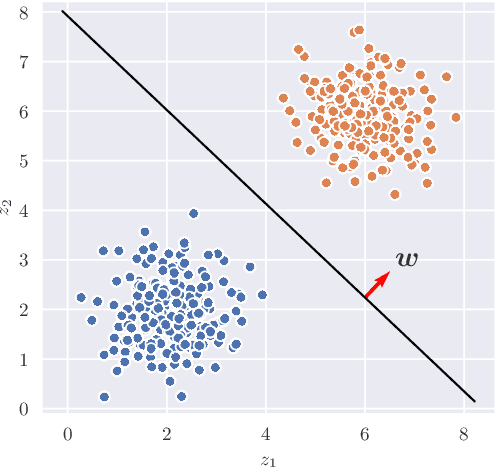

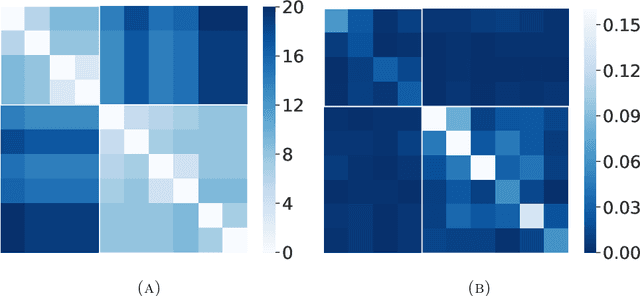

Abstract: In many real-world applications of Machine Learning it is of paramount importance not only to provide accurate predictions, but also to ensure certain levels of robustness. Adversarial Training is a training procedure aiming at providing models that are robust to worst-case perturbations around predefined points. Unfortunately, one of the main issues in adversarial training is that robustness w.r.t. gradient-based attackers is always achieved at the cost of prediction accuracy. In this paper, a new algorithm, called Wasserstein Projected Gradient Descent (WPGD), for adversarial training is proposed. WPGD provides a simple way to obtain cost-sensitive robustness, resulting in a finer control of the robustness-accuracy trade-off. Moreover, WPGD solves an optimal transport problem on the output space of the network and it can efficiently discover directions where robustness is required, making it possible to control the directional trade-off between accuracy and robustness. The proposed WPGD is validated in this work on image recognition tasks with different benchmark datasets and architectures. Moreover, real-world-like datasets are often unbalanced: this paper shows that when dealing with such datasets, the performance of adversarial training is mainly affected in terms of standard accuracy.

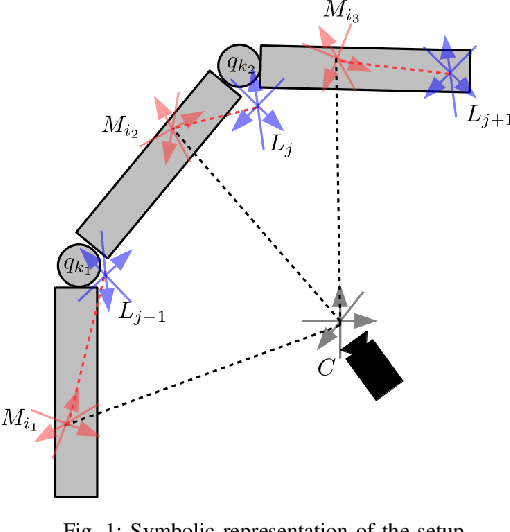

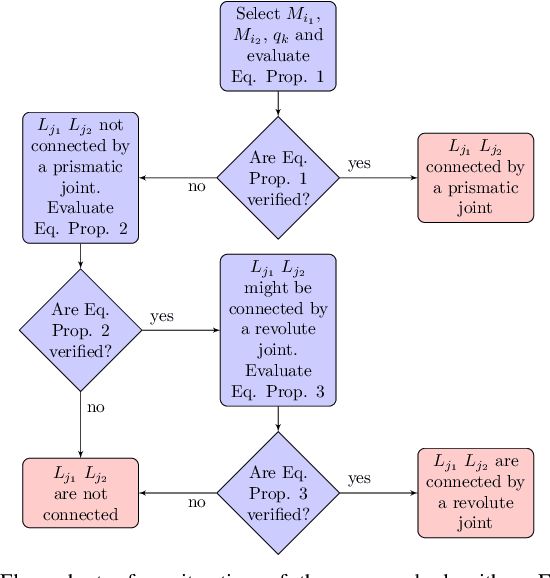

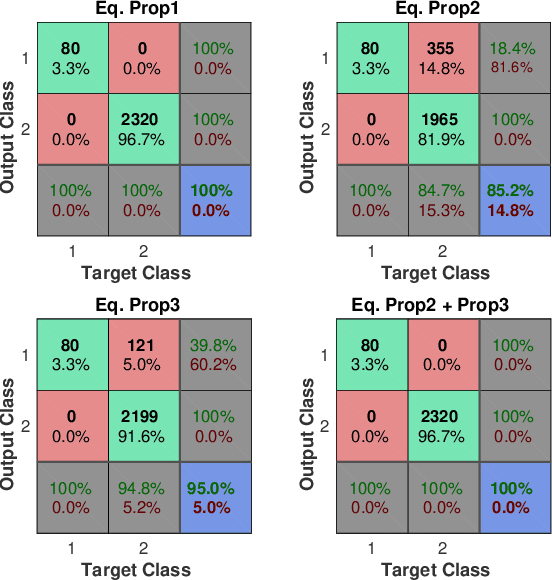

Robot kinematic structure classification from time series of visual data

Mar 11, 2019

Abstract: In this paper we present a novel algorithm to solve the robot kinematic structure identification problem. Given a time series of data, typically obtained by processing a set of visual observations, the proposed approach identifies the ordered sequence of links associated with the kinematic chain, the joint type interconnecting each pair of consecutive links, and the input signal influencing the relative motion. Compared to the state of the art, the proposed algorithm has reduced computational costs, and can also identify the sequence of joint types.
