Matt Smith

CoDi: Conversational Distillation for Grounded Question Answering

Aug 20, 2024

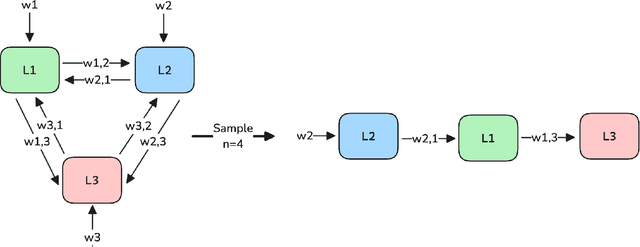

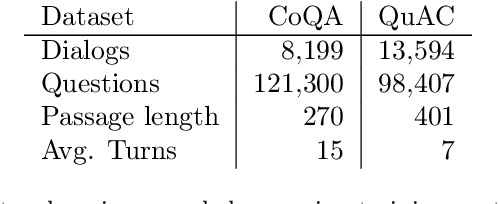

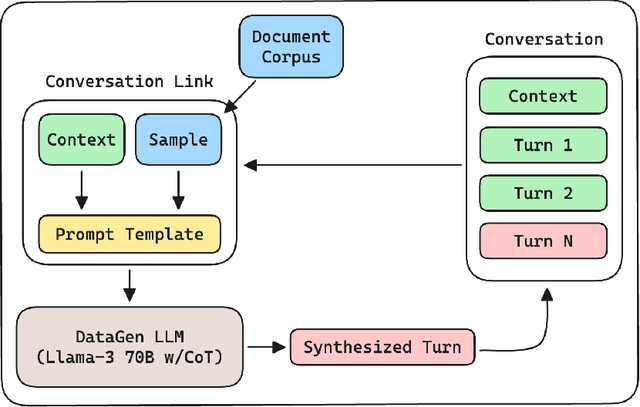

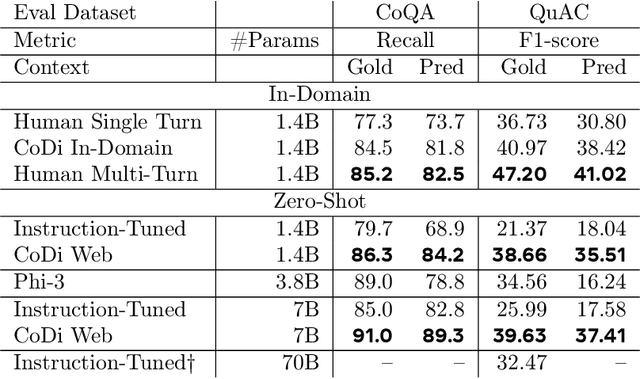

Abstract: Distilling conversational skills into Small Language Models (SLMs) with approximately 1 billion parameters presents significant challenges. Firstly, SLMs have limited capacity in their model parameters to learn extensive knowledge compared to larger models. Secondly, high-quality conversational datasets are often scarce, small, and domain-specific. Addressing these challenges, we introduce a novel data distillation framework named CoDi (short for Conversational Distillation, pronounced "Cody"), allowing us to synthesize large-scale, assistant-style datasets in a steerable and diverse manner. Specifically, while our framework is task agnostic at its core, we explore and evaluate the potential of CoDi on the task of conversational grounded reasoning for question answering. This is a typical on-device scenario for specialist SLMs, allowing for open-domain model responses, without requiring the model to "memorize" world knowledge in its limited weights. Our evaluations show that SLMs trained with CoDi-synthesized data achieve performance comparable to models trained on human-annotated data in standard metrics. Additionally, when using our framework to generate larger datasets from web data, our models surpass larger, instruction-tuned models in zero-shot conversational grounded reasoning tasks.

AnyMAL: An Efficient and Scalable Any-Modality Augmented Language Model

Sep 27, 2023

Abstract: We present Any-Modality Augmented Language Model (AnyMAL), a unified model that reasons over diverse input modality signals (i.e., text, image, video, audio, IMU motion sensor), and generates textual responses. AnyMAL inherits the powerful text-based reasoning abilities of the state-of-the-art LLMs including LLaMA-2 (70B), and converts modality-specific signals to the joint textual space through a pre-trained aligner module. To further strengthen the multimodal LLM's capabilities, we fine-tune the model with a multimodal instruction set manually collected to cover diverse topics and tasks beyond simple QAs. We conduct comprehensive empirical analysis comprising both human and automatic evaluations, and demonstrate state-of-the-art performance on various multimodal tasks.

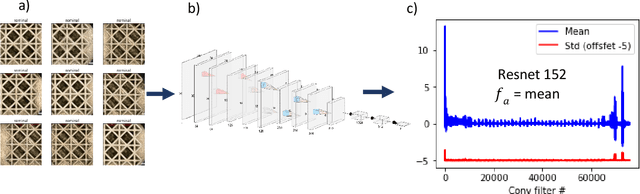

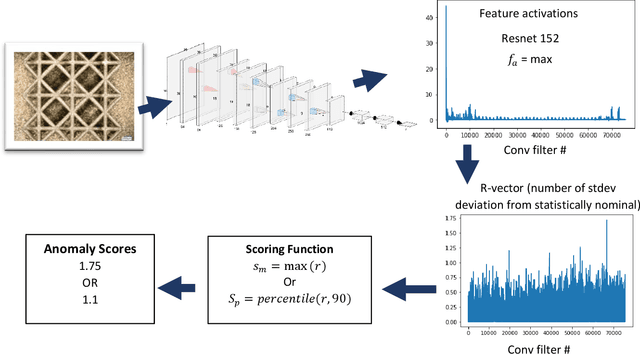

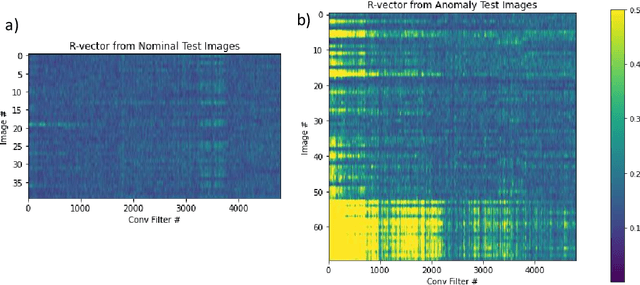

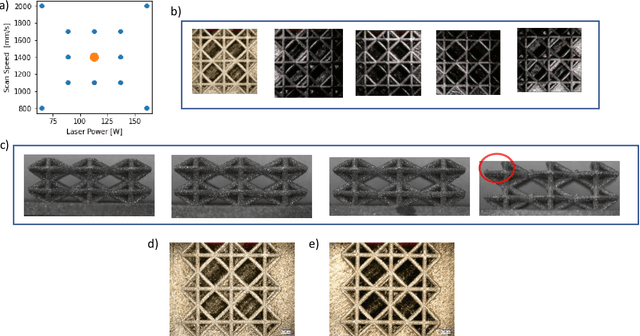

Feature anomaly detection system (FADS) for intelligent manufacturing

Apr 21, 2022

Abstract: Anomaly detection is important for industrial automation and part quality assurance, and while humans can easily detect anomalies in components given a few examples, designing a generic automated system that can perform at or above human capabilities remains a challenge. In this work, we present a simple new anomaly detection algorithm called FADS (feature-based anomaly detection system), which leverages pretrained convolutional neural networks (CNNs) to generate a statistical model of nominal inputs by observing the activation of the convolutional filters. During inference, the system compares the convolutional filter activations of the new input to the statistical model and flags activations that fall outside the expected range of values and are therefore likely anomalous. By using a pretrained network, FADS demonstrates excellent performance similar to or better than other machine learning approaches to anomaly detection, while requiring no tuning of the CNN weights. We demonstrate the ability of FADS by detecting process parameter changes on a custom dataset of additively manufactured lattices. The FADS localization algorithm shows that textural differences that are visible on the surface can be used to detect process parameter changes. In addition, we test FADS on benchmark datasets, such as the MVTec Anomaly Detection dataset, and report good results.
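The general idea in this abstract (build a statistical model of nominal filter activations, then flag activations outside the expected range) can be illustrated with a minimal sketch. This is not the authors' implementation. It assumes the CNN activations have already been extracted and reduced to one value per filter, and it assumes a per-filter mean and standard deviation with a z-score threshold as the "expected range" test. The function names `fit_nominal_stats` and `flag_anomalous_filters`, the threshold value, and the toy data are all hypothetical.

```python
import numpy as np


def fit_nominal_stats(nominal_acts):
    """Per-filter mean and std from nominal samples.

    nominal_acts: array of shape (n_samples, n_filters), one summary
    activation value per filter (assumed to be precomputed).
    """
    acts = np.asarray(nominal_acts, dtype=float)
    mean = acts.mean(axis=0)
    std = acts.std(axis=0) + 1e-8  # small constant avoids division by zero
    return mean, std


def flag_anomalous_filters(acts, mean, std, z_threshold=3.0):
    """Return a boolean mask of filters whose activation deviates from
    the nominal mean by more than z_threshold standard deviations.
    The threshold is an assumed choice, not taken from the paper."""
    z = np.abs((np.asarray(acts, dtype=float) - mean) / std)
    return z > z_threshold


# Toy nominal data: 4 samples, 2 filters (illustrative values only).
nominal = np.array([
    [0.9, 2.0],
    [1.0, 2.1],
    [1.1, 1.9],
    [1.0, 2.0],
])
mean, std = fit_nominal_stats(nominal)

# New input: the first filter is near nominal, the second is far outside.
new_input = np.array([1.05, 3.0])
flags = flag_anomalous_filters(new_input, mean, std)
print(flags)  # [False  True]
```

In a real pipeline, the per-filter values would come from a pretrained CNN's convolutional layers, and keeping the spatial dimension of the activations (rather than reducing to one value per filter) is what would allow the localization of anomalies that the abstract mentions.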