Mary-Anne Williams

Context Is Not Comprehension

Jun 08, 2025

Abstract:The dominant evaluation of Large Language Models has centered on their ability to surface explicit facts from increasingly vast contexts. While today's best models demonstrate near-perfect recall on these tasks, this apparent success masks a fundamental failure in multi-step computation when information is embedded in a narrative. We introduce Verbose ListOps (VLO), a novel benchmark designed to isolate this failure. VLO programmatically weaves deterministic, nested computations into coherent stories, forcing models to track and update internal state rather than simply locate explicit values. Our experiments show that leading LLMs, capable of solving the raw ListOps equations with near-perfect accuracy, collapse in performance on VLO at just 10k tokens. The VLO framework is extensible to any verifiable reasoning task, providing a critical tool to move beyond simply expanding context windows and begin building models with the robust, stateful comprehension required for complex knowledge work.

Verbose ListOps (VLO): Beyond Long Context -- Unmasking LLM's Reasoning Blind Spots

Jun 05, 2025

Abstract:Large Language Models (LLMs), whilst great at extracting facts from text, struggle with nested narrative reasoning. Existing long context and multi-hop QA benchmarks inadequately test this, lacking realistic distractors or failing to decouple context length from reasoning complexity, masking a fundamental LLM limitation. We introduce Verbose ListOps, a novel benchmark that programmatically transposes ListOps computations into lengthy, coherent stories. This uniquely forces internal computation and state management of nested reasoning problems by withholding intermediate results, and offers fine-grained controls for both narrative size and reasoning difficulty. Whilst benchmarks like LongReason (2025) advance approaches for synthetically expanding the context size of multi-hop QA problems, Verbose ListOps pinpoints a specific LLM vulnerability: difficulty in state management for nested sub-reasoning amongst semantically-relevant, distracting narrative. Our experiments show that leading LLMs (e.g., OpenAI o4, Gemini 2.5 Pro) collapse in performance on Verbose ListOps at modest (~10k token) narrative lengths, despite effortlessly solving raw ListOps equations. Addressing this failure is paramount for real-world text interpretation, which requires identifying key reasoning points, tracking conceptual intermediate results, and filtering irrelevant information. Verbose ListOps and its extensible generation framework thus enable targeted reasoning enhancements beyond mere context-window expansion, a critical step toward automating the world's knowledge work.

EEGS: A Transparent Model of Emotions

Nov 04, 2020

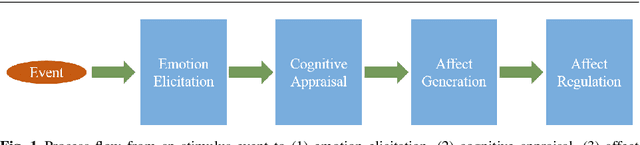

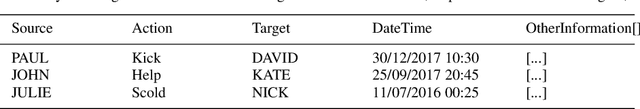

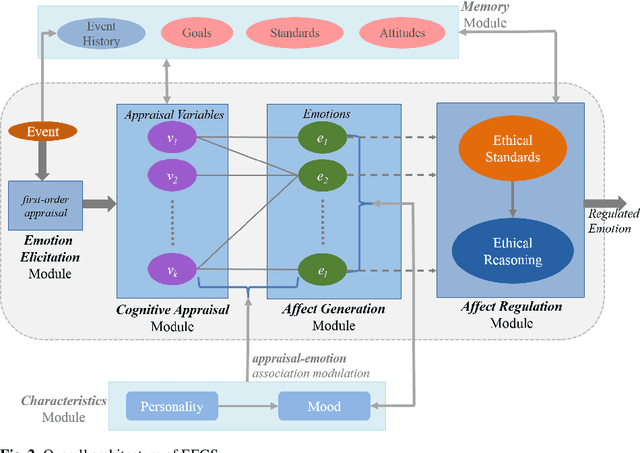

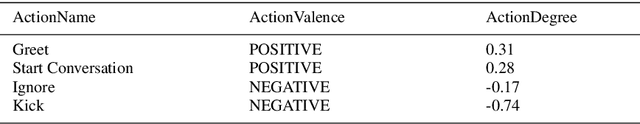

Abstract:This paper presents the computational details of our emotion model, EEGS, and also provides an overview of a three-stage validation methodology used for the evaluation of our model, which is also applicable to other computational models of emotion. A major gap in existing emotion modelling literature has been the lack of computational/technical details of the implemented models, which not only makes it difficult for early-stage researchers to understand the area but also prevents expert researchers from benchmarking the developed models. We partly addressed these issues by presenting technical details for the computation of appraisal variables in our previous work. In this paper, we present mathematical formulas for the calculation of emotion intensities based on the theoretical premises of appraisal theory. Moreover, we discuss how we enable our emotion model to reach a regulated emotional state for social acceptability of autonomous agents. We hope this paper will allow better transparency of knowledge, accurate benchmarking and further evolution of the field of emotion modelling.

Fog Robotics: A Summary, Challenges and Future Scope

Aug 14, 2019

Abstract:Human-robot interaction plays a crucial role in bringing robots closer to humans. Usually, robots are limited by their own capabilities, so they use Cloud Robotics to enhance their dexterity. Its capabilities include the sharing of information such as maps and images, as well as processing power. This whole process involves distributing data, whose volume tends to rise enormously. New issues can arise, such as limited bandwidth and network congestion at backhaul and fronthaul systems, resulting in high latency. This can affect seamless connectivity between the robots, users and the cloud, and a robot may not accomplish its goal within a stipulated time. As a consequence, Cloud Robotics cannot handle the traffic imposed by robots. In contrast, the emerging Fog Robotics can act as a solution by solving major problems of Cloud Robotics. To check its feasibility, we discuss the need for and architectures of Fog Robotics in this paper. To evaluate the architectures, we used a realistic scenario of Fog Robotics and compared them with Cloud Robotics. Latency is chosen as the primary factor for validating the effectiveness of the system. We measured real-time latency using a Pepper robot, a Fog robot server and the Cloud server. Experimental results show that Fog Robotics reduces latency significantly compared to Cloud Robotics. Moreover, the advantages, challenges and future scope of the Fog Robotics system are further discussed.

Fog Robotics for Efficient, Fluent and Robust Human-Robot Interaction

Nov 14, 2018

Abstract:Active communication between robots and humans is essential for effective human-robot interaction. To accomplish this objective, Cloud Robotics (CR) was introduced to enhance the capabilities of robots. It enables robots to perform extensive computations in the cloud by sharing their outcomes. Outcomes include maps, images, processing power, data, activities, and other robot resources. But due to the colossal growth of data and traffic, CR suffers from serious latency issues. Therefore, it is unlikely to scale to a large number of robots, particularly in human-robot interaction scenarios, where responsiveness is paramount. Furthermore, security-related issues such as privacy breaches and ransomware attacks can increase. To address these problems, in this paper we envision the next generation of social robotic architectures based on Fog Robotics (FR), which inherits the strengths of Fog Computing to augment future social robotic systems. These new architectures can increase the dexterity of robots by moving the data closer to the robot. Additionally, they can ensure that human-robot interaction is more responsive by resolving the problems of CR. Moreover, experimental results are discussed for an FR scenario, with latency as the primary factor in comparison with CR models.

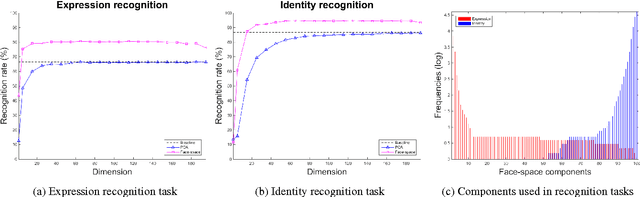

The face-space duality hypothesis: a computational model

Sep 23, 2016

Abstract:Valentine's face-space suggests that faces are represented in a psychological multidimensional space according to their perceived properties. However, the proposed framework was initially designed as an account of invariant facial features only, and explanations for dynamic features representation were neglected. In this paper we propose, develop and evaluate a computational model for a twofold structure of the face-space, able to unify both identity and expression representations in a single implemented model. To capture both invariant and dynamic facial features we introduce the face-space duality hypothesis and subsequently validate it through a mathematical presentation using a general approach to dimensionality reduction. Two experiments with real facial images show that the proposed face-space: (1) supports both identity and expression recognition, and (2) has a twofold structure anticipated by our formal argument.

PyRIDE: An Interactive Development Environment for PR2 Robot

May 30, 2016

Abstract:Python based Robot Interactive Development Environment (PyRIDE) is a software package that supports rapid interactive programming of robot skills and behaviours on the PR2/ROS (Robot Operating System) platform. One of the key features of PyRIDE is its interactive, remotely accessible Python console that allows its users to program robots online and in real time in the same way as using the standard Python interactive interpreter. It allows programs to be modified while they are running. PyRIDE is also a software integration framework that abstracts and aggregates disparate low-level ROS software modules, e.g. arm joint motor controllers, and exposes their functionalities through a unified Python programming interface. PR2 programmers are able to experiment and develop robot behaviours without dealing with specific details of accessing the underlying software and hardware. PyRIDE provides a client-server mechanism that allows remote user access to the robot functionalities, e.g. remote robot monitoring and control, access to real-time robot camera image data, etc. This enables multi-modal human-robot interactions using different devices and user interfaces. All these features are seamlessly integrated into one lightweight and portable middleware package. In this paper, we use four real-life scenarios to demonstrate PyRIDE's key features and illustrate the usefulness of the software.

Socially Impaired Robots: Human Social Disorders and Robots' Socio-Emotional Intelligence

Feb 15, 2016

Abstract:Social robots need intelligence in order to safely coexist and interact with humans. Robots without functional abilities in understanding others and unable to empathise might be a societal risk and they may lead to a society of socially impaired robots. In this work we provide a survey of three relevant human social disorders, namely autism, psychopathy and schizophrenia, as a means to gain a better understanding of social robots' future capability requirements. We provide evidence supporting the idea that social robots will require a combination of emotional intelligence and social intelligence, namely socio-emotional intelligence. We argue that a robot with a simple socio-emotional process requires a simulation-driven model of intelligence. Finally, we provide some critical guidelines for designing future socio-emotional robots.

Action recognition in still images by latent superpixel classification

Jul 30, 2015

Abstract:Action recognition from still images is an important task in computer vision applications such as image annotation, robotic navigation, video surveillance and several others. Existing approaches mainly rely on either bag-of-feature representations or articulated body-part models. However, the relationship between the action and the image segments is still substantially unexplored. For this reason, in this paper we propose to approach action recognition by leveraging an intermediate layer of "superpixels" whose latent classes can act as attributes of the action. In the proposed approach, the action class is predicted by a structural model (learnt by Latent Structural SVM) based on measurements from the image superpixels and their latent classes. Experimental results over the challenging Stanford 40 Actions dataset report a significant average accuracy of 74.06% for the positive class and 88.50% for the negative class, providing evidence for the performance of the proposed approach.

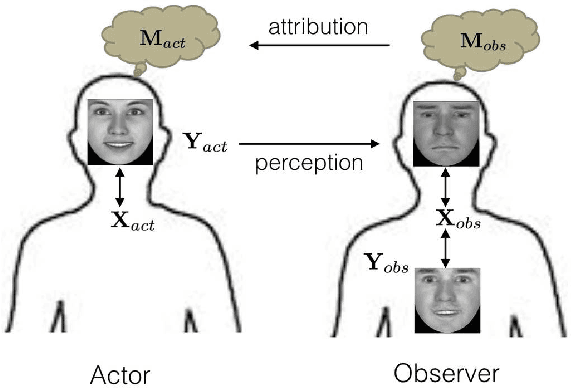

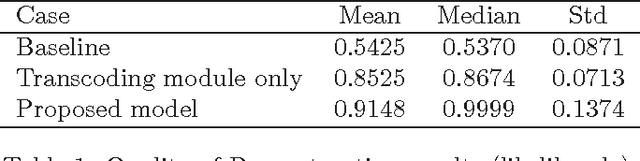

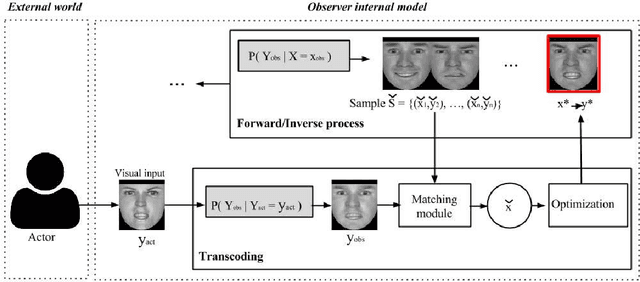

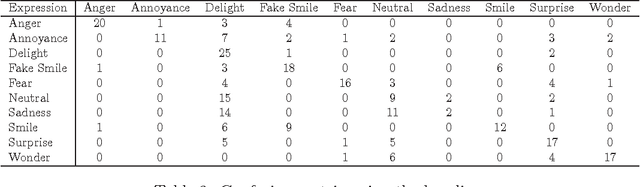

Affective Facial Expression Processing via Simulation: A Probabilistic Model

Nov 03, 2014

Abstract:Understanding the mental state of other people is an important skill for intelligent agents and robots to operate within social environments. However, the mental processes involved in 'mind-reading' are complex. One explanation of such processes is Simulation Theory, which is supported by a large body of neuropsychological research. Yet how to determine the best computational model or theory to use in simulation-style emotion detection is far from being understood. In this work, we use Simulation Theory and neuroscience findings on Mirror-Neuron Systems as the basis for a novel computational model, as a way to handle affective facial expressions. The model is based on a probabilistic mapping of observations from multiple identities onto a single fixed identity ('internal transcoding of external stimuli'), and then onto a latent space ('phenomenological response'). Together with the proposed architecture we present some promising preliminary results.
