Jonathan Vitale

EEGS: A Transparent Model of Emotions

Nov 04, 2020

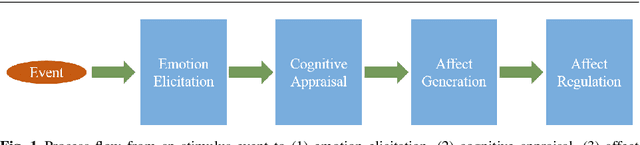

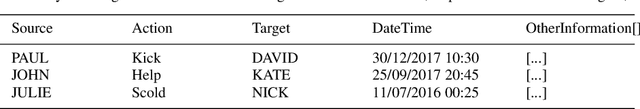

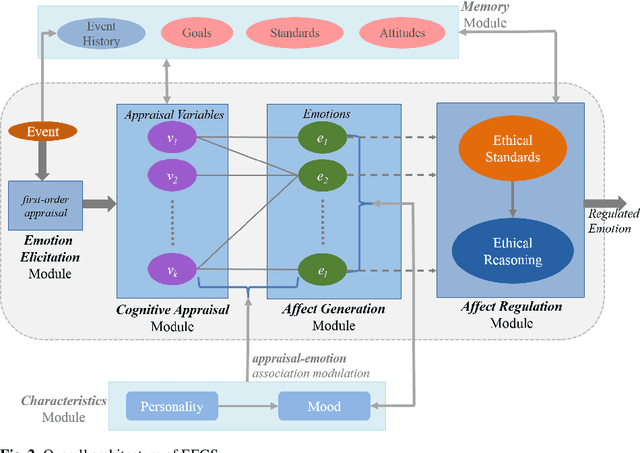

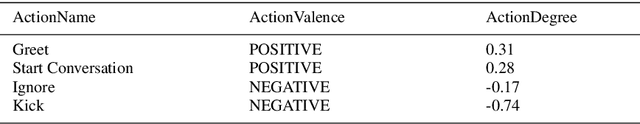

Abstract: This paper presents the computational details of our emotion model, EEGS, and provides an overview of a three-stage validation methodology used to evaluate the model, which may also be applicable to other computational models of emotion. A major gap in the existing emotion modelling literature has been the lack of computational and technical details of the implemented models, which not only makes it difficult for early-stage researchers to understand the area but also prevents expert researchers from benchmarking the developed models. We partly addressed these issues by presenting technical details for the computation of appraisal variables in our previous work. In this paper, we present mathematical formulas for the calculation of emotion intensities based on the theoretical premises of appraisal theory. Moreover, we discuss how we enable our emotion model to reach a regulated emotional state for the social acceptability of autonomous agents. We hope this paper will allow greater transparency of knowledge, accurate benchmarking and further evolution of the field of emotion modelling.

The face-space duality hypothesis: a computational model

Sep 23, 2016

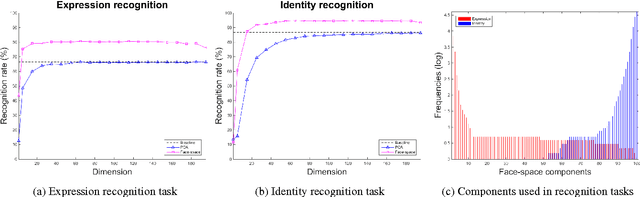

Abstract: Valentine's face-space suggests that faces are represented in a psychological multidimensional space according to their perceived properties. However, the proposed framework was initially designed as an account of invariant facial features only, and explanations for the representation of dynamic features were neglected. In this paper we propose, develop and evaluate a computational model for a twofold structure of the face-space, able to unify both identity and expression representations in a single implemented model. To capture both invariant and dynamic facial features we introduce the face-space duality hypothesis and subsequently validate it through a mathematical presentation using a general approach to dimensionality reduction. Two experiments with real facial images show that the proposed face-space: (1) supports both identity and expression recognition, and (2) has the twofold structure anticipated by our formal argument.

Socially Impaired Robots: Human Social Disorders and Robots' Socio-Emotional Intelligence

Feb 15, 2016

Abstract: Social robots need intelligence in order to safely coexist and interact with humans. Robots without functional abilities in understanding others and unable to empathise might be a societal risk and they may lead to a society of socially impaired robots. In this work we provide a survey of three relevant human social disorders, namely autism, psychopathy and schizophrenia, as a means to gain a better understanding of social robots' future capability requirements. We provide evidence supporting the idea that social robots will require a combination of emotional intelligence and social intelligence, namely socio-emotional intelligence. We argue that a robot with a simple socio-emotional process requires a simulation-driven model of intelligence. Finally, we provide some critical guidelines for designing future socio-emotional robots.

Affective Facial Expression Processing via Simulation: A Probabilistic Model

Nov 03, 2014

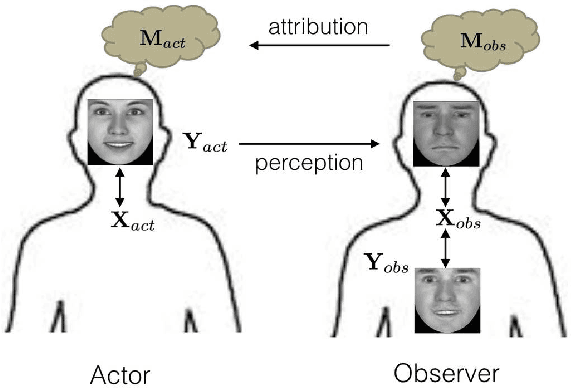

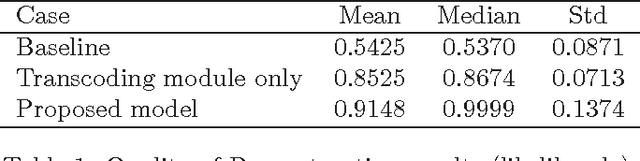

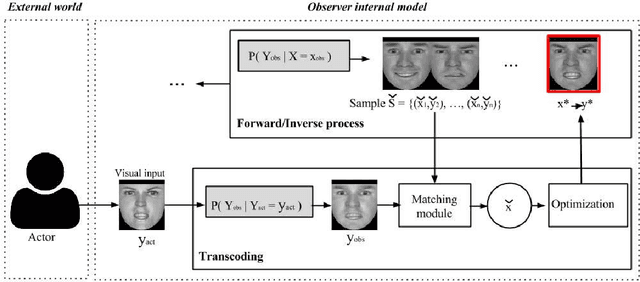

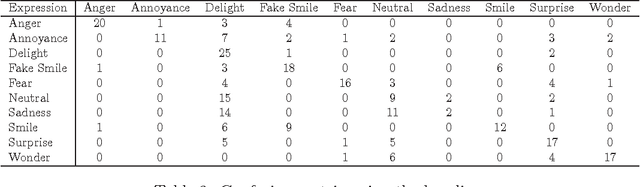

Abstract: Understanding the mental state of other people is an important skill for intelligent agents and robots to operate within social environments. However, the mental processes involved in 'mind-reading' are complex. One explanation of such processes is Simulation Theory, which is supported by a large body of neuropsychological research. Yet determining the best computational model or theory to use in simulation-style emotion detection is far from being understood. In this work, we use Simulation Theory and neuroscience findings on Mirror-Neuron Systems as the basis for a novel computational model, as a way to handle affective facial expressions. The model is based on a probabilistic mapping of observations from multiple identities onto a single fixed identity ('internal transcoding of external stimuli'), and then onto a latent space ('phenomenological response'). Together with the proposed architecture we present some promising preliminary results.
