Marko Grobelnik

Jožef Stefan Institute - Ljubljana

MRM3: Machine Readable ML Model Metadata

May 19, 2025

Abstract: As the complexity and number of machine learning (ML) models grow, well-documented ML models are essential for developers and companies to use or adapt them to their specific use cases. Model metadata, already present in unstructured format as model cards in online repositories such as Hugging Face, could be more structured and machine readable while also incorporating environmental impact metrics such as energy consumption and carbon footprint. Our work extends the existing state of the art by defining a structured schema for ML model metadata, focusing on a machine-readable format and support for integration into a knowledge graph (KG) for better organization and querying, enabling a wider set of use cases. Furthermore, we present an example wireless localization model metadata dataset consisting of 22 models trained on 4 datasets, integrated into a Neo4j-based KG with 113 nodes and 199 relations.

Agent-Based Simulations of Online Political Discussions: A Case Study on Elections in Germany

Mar 31, 2025

Abstract: User engagement on social media platforms is influenced by historical context, time constraints, and reward-driven interactions. This study presents an agent-based simulation approach that models user interactions, considering past conversation history, motivation, and resource constraints. Utilizing German Twitter data on political discourse, we fine-tune AI models to generate posts and replies, incorporating sentiment analysis, irony detection, and offensiveness classification. The simulation employs a myopic best-response model to govern agent behavior, accounting for decision-making based on expected rewards. Our results highlight the impact of historical context on AI-generated responses and demonstrate how engagement evolves under varying constraints.
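As a rough illustration of what a myopic best-response rule can look like, the sketch below has an agent pick, at each step, the affordable action with the highest expected immediate reward, ignoring future consequences. The `Action` fields, the action names, and the reward and cost values are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Action:
    name: str               # hypothetical action label, e.g. "post" or "reply"
    expected_reward: float  # assumed estimate of immediate engagement gained
    cost: float             # assumed time/effort cost of the action


def myopic_best_response(actions: List[Action], budget: float) -> Optional[Action]:
    """Return the affordable action with the highest expected immediate reward.

    "Myopic" means only the current step's reward is considered.
    Returns None when no action fits within the remaining budget.
    """
    affordable = [a for a in actions if a.cost <= budget]
    if not affordable:
        return None
    return max(affordable, key=lambda a: a.expected_reward)


# Hypothetical action set for one simulated user.
ACTIONS = [
    Action("post", expected_reward=3.0, cost=5.0),
    Action("reply", expected_reward=2.0, cost=2.0),
    Action("like", expected_reward=0.5, cost=0.5),
]
```

With a budget of 3.0 the agent in this sketch would choose `reply`, since `post` is too expensive; with a budget of 10.0 it would choose `post`.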

BAR-Analytics: A Web-based Platform for Analyzing Information Spreading Barriers in News: Comparative Analysis Across Multiple Barriers and Events

Mar 31, 2025

Abstract: This paper presents BAR-Analytics, a web-based, open-source platform designed to analyze news dissemination across geographical, economic, political, and cultural boundaries. Using the Russian-Ukrainian and Israeli-Palestinian conflicts as case studies, the platform integrates four analytical methods: propagation analysis, trend analysis, sentiment analysis, and temporal topic modeling. Over 350,000 articles were collected and analyzed, with a focus on economic disparities and geographical influences using metadata enrichment. We evaluate the case studies using coherence, sentiment polarity, topic frequency, and trend shifts as key metrics. Our results show distinct patterns in news coverage: the Israeli-Palestinian conflict tends to have more negative sentiment with a focus on human rights, while the Russia-Ukraine conflict is more positive, emphasizing election interference. These findings highlight the influence of political, economic, and regional factors in shaping media narratives across different conflicts.

The 2021 Tokyo Olympics Multilingual News Article Dataset

Feb 10, 2025

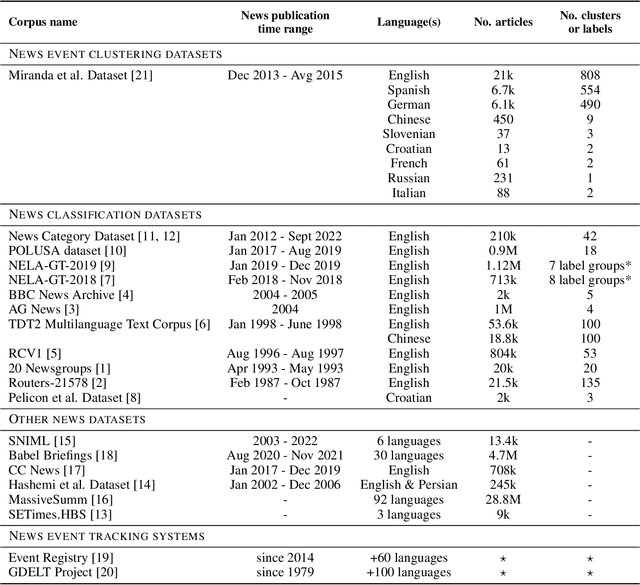

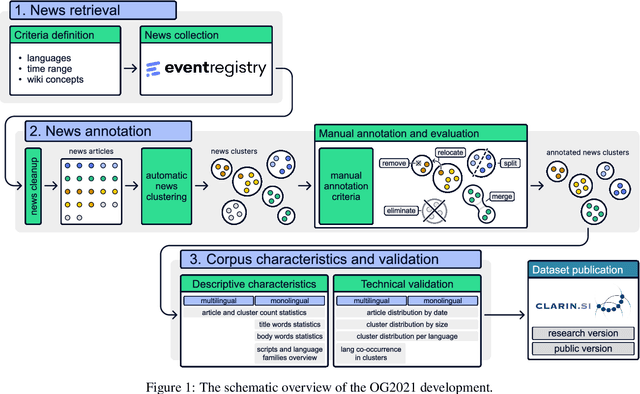

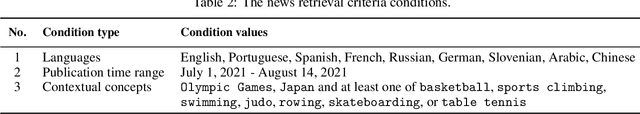

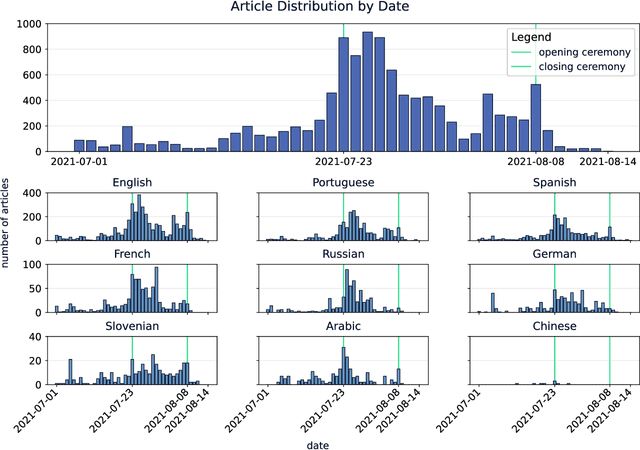

Abstract: In this paper, we introduce a dataset of multilingual news articles covering the 2021 Tokyo Olympics. A total of 10,940 news articles were gathered from 1,918 different publishers, covering 1,350 sub-events of the 2021 Olympics, and published between July 1, 2021, and August 14, 2021. These articles are written in nine languages from different language families and in different scripts. To create the dataset, the raw news articles were first retrieved via a service that collects and analyzes news articles. Then, the articles were grouped using an online clustering algorithm, with each group containing articles reporting on the same sub-event. Finally, the groups were manually annotated and evaluated. The development of this dataset aims to provide a resource for evaluating the performance of multilingual news clustering algorithms, for which limited datasets are available. It can also be used to analyze the dynamics and events of the 2021 Tokyo Olympics from different perspectives. The dataset is available in CSV format and can be accessed from the CLARIN.SI repository.

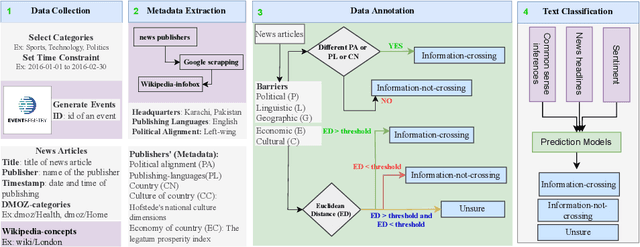

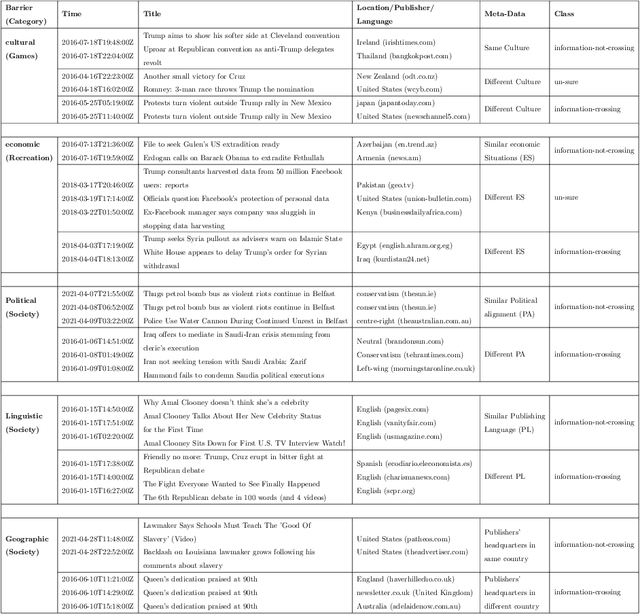

Classification of news spreading barriers

Apr 10, 2023

Abstract: News media is one of the most effective mechanisms for spreading information internationally, and many events from different areas are internationally relevant. However, news coverage of some events is limited to a specific geographical region because of information spreading barriers, which can be political, geographical, economic, cultural, or linguistic. In this paper, we propose an approach to barrier classification in which we infer the semantics of news articles through Wikipedia concepts. To that end, we collected news articles and annotated them for different kinds of barriers using the metadata of news publishers. Then, we utilize the Wikipedia concepts along with the body text of news articles as features to infer the news-spreading barriers. We compare our approach to classical text classification methods, deep learning, and transformer-based methods. The results show that the proposed approach, which uses semantic knowledge based on Wikipedia concepts, offers better performance than these conventional methods in classifying the news-spreading barriers.

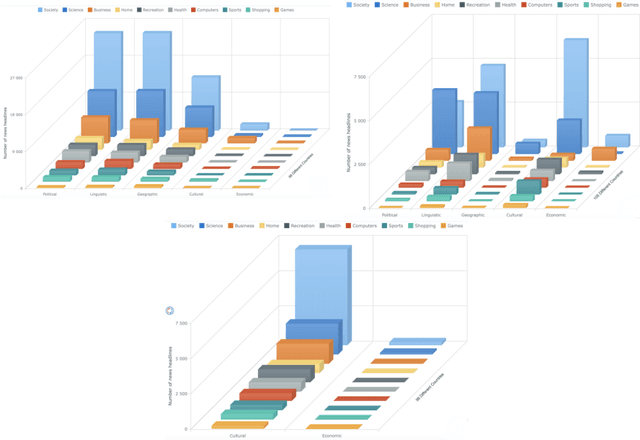

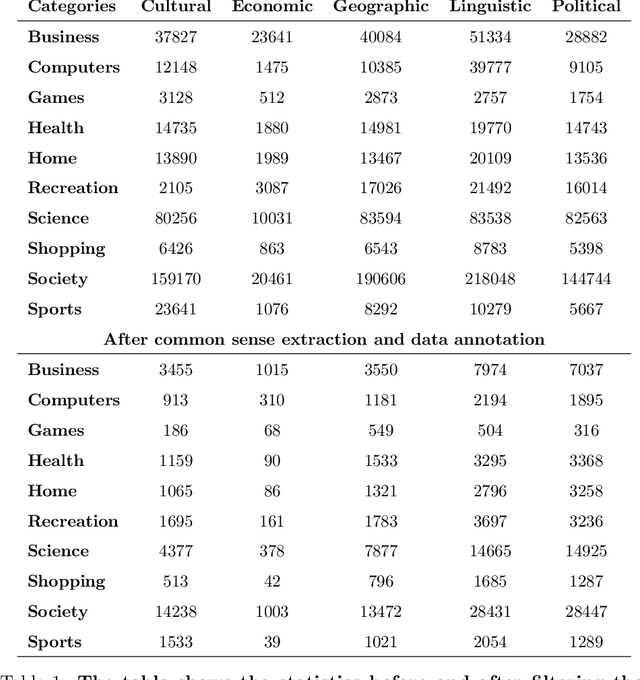

Profiling the news spreading barriers using news headlines

Apr 07, 2023

Abstract: News headlines can be a good data source for detecting news spreading barriers in news media, which may be useful in many real-world applications. In this paper, we utilize semantic knowledge through the inference-based model COMET, together with the sentiment of news headlines, for barrier classification. We consider five barriers (cultural, economic, political, linguistic, and geographical) and different types of news headlines, including health, sports, science, recreation, games, homes, society, shopping, computers, and business. To that end, we collect and label the news headlines automatically for the barriers using the metadata of news publishers. Then, we utilize the extracted commonsense inferences and sentiments as features to detect the news spreading barriers. We compare our approach to classical text classification methods, deep learning, and transformer-based methods. The results show that the proposed approach, which uses inference-based semantic knowledge and sentiment, offers better performance than these conventional methods in classifying the news-spreading barriers: the average F1-score over the ten categories improves from 0.41, 0.39, 0.59, and 0.59 to 0.47, 0.55, 0.70, and 0.76 for the cultural, economic, political, and geographical barriers, respectively.

Political and Economic Patterns in COVID-19 News: From Lockdown to Vaccination

Dec 15, 2022

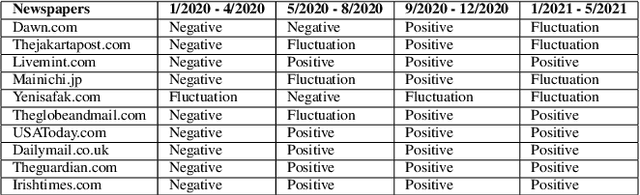

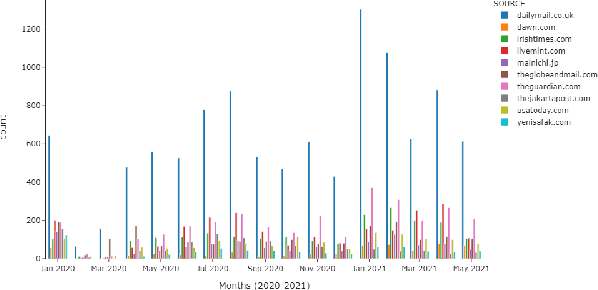

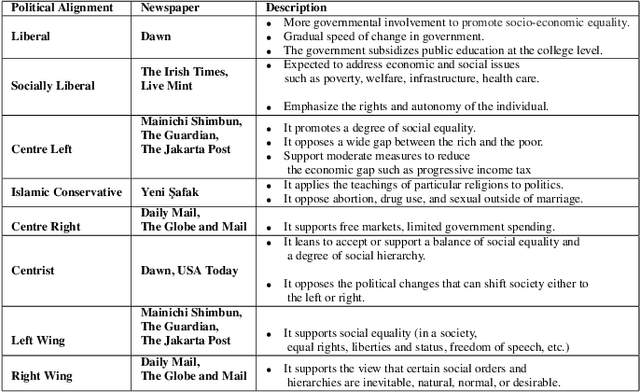

Abstract: The purpose of this study is to analyse COVID-19 related news published across different geographical places, in order to gain insights into reporting differences. The COVID-19 pandemic had a major outbreak in January 2020 and was followed by different preventive measures, lockdown, and finally by the process of vaccination. To date, comprehensive analyses of news related to the COVID-19 pandemic are missing, especially those that explain which aspects of the pandemic are reported by newspapers operating in different economies and belonging to different political alignments. LDA topics are often less coherent when news articles about an event are published across the world and one looks for answers to specific queries, because the content is semantically diverse. To address this challenge, we pooled news articles based on information retrieval using TF-IDF scores in a data processing step, and performed topic modeling using LDA with n-grams of length 1 to 6. We used the VADER sentiment analyzer to analyze the differences in sentiment in news articles reported across different geographical places. The novelty of this study is to look at how the COVID-19 pandemic was reported by the media, providing a comparison among countries in different political and economic contexts. Our findings suggest that a newspaper's political alignment is reflected in the content it reports. Also, the economic issues reported by newspapers depend on the economy of the place where the newspaper is based.
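The pooling step described above can be pictured with a minimal sketch: score each article against a query with TF-IDF and keep only the best-matching articles as the pool that would then be passed to topic modeling. The function name `tfidf_pool`, the whitespace tokenizer, the smoothed IDF formula, and the toy articles are assumptions made for illustration, not the authors' implementation.

```python
import math
from collections import Counter
from typing import List


def tfidf_pool(docs: List[str], query: str, top_k: int = 2) -> List[str]:
    """Return up to top_k documents with the highest TF-IDF score for the query.

    Assumptions: whitespace tokenization, length-normalized term frequency,
    and a smoothed IDF of log((1 + N) / (1 + df)) + 1.
    Documents that share no term with the query are never pooled.
    """
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)

    # Document frequency: in how many documents each term occurs.
    df = Counter()
    for tokens in tokenized:
        df.update(set(tokens))

    def score(tokens: List[str]) -> float:
        tf = Counter(tokens)
        total = 0.0
        for term in query.lower().split():
            if term in tf:
                idf = math.log((1 + n_docs) / (1 + df[term])) + 1
                total += (tf[term] / len(tokens)) * idf
        return total

    scored = [(score(tokens), i) for i, tokens in enumerate(tokenized)]
    # Highest score first; ties are broken by original document order.
    ranked = sorted(scored, key=lambda pair: (-pair[0], pair[1]))
    return [docs[i] for s, i in ranked[:top_k] if s > 0]


# Toy articles, invented for this example.
ARTICLES = [
    "lockdown extended in city",
    "vaccine rollout begins",
    "football match result",
    "new lockdown rules and lockdown fines",
]
```

Calling `tfidf_pool(ARTICLES, "lockdown")` in this sketch pools the two lockdown articles and leaves out the unrelated ones, which is the effect the pooling step relies on to give LDA a more semantically homogeneous input.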

Analysis of information cascading and propagation barriers across distinctive news events

Dec 15, 2022

Abstract: News reporting on events that occur in our society can have different styles and structures as well as different dynamics of news spreading over time. News publishers have the potential to spread their news and reach a large number of readers worldwide. In this paper, we aim to understand how well they do so and what kinds of obstacles the news may encounter when spreading. To spread more widely, news must cross multiple barriers: linguistic (the most evident one, as the news gets published in other natural languages), economic, geographical, political, time zone, and cultural. Observing potential differences between the spreading of news on different events published by multiple publishers can bring insights into what may influence the differences in the spreading patterns. There are multiple reasons, possibly many of them hidden, that influence the speed and geographical spread of news. This paper studies information cascading and propagation barriers, applying the proposed methodology to three distinctive kinds of events: global warming, earthquakes, and the FIFA World Cup.

A Commonsense-Infused Language-Agnostic Learning Framework for Enhancing Prediction of Political Polarity in Multilingual News Headlines

Dec 01, 2022

Abstract: Predicting the political polarity of news headlines is a challenging task that becomes even more challenging in a multilingual setting with low-resource languages. To deal with this, we propose a learning framework that utilises inferential commonsense knowledge via a Translate-Retrieve-Translate strategy. To begin with, we use translation and retrieval to acquire the inferential knowledge in the target language. We then employ an attention mechanism to emphasise important inferences. Finally, we integrate the attended inferences into a multilingual pre-trained language model for the task of bias prediction. To evaluate the effectiveness of our framework, we present a dataset of over 62.6K multilingual news headlines in five European languages annotated with their respective political polarities. We evaluate several state-of-the-art multilingual pre-trained language models, since their performance tends to vary across languages (low/high resource). Evaluation results demonstrate that our proposed framework is effective regardless of the models employed. Overall, the best performing model trained with only headlines shows 0.90 accuracy and F1, and a 0.83 Jaccard score. With attended knowledge in our framework, the same model shows an increase of 2.2% in accuracy and F1, and 3.6% in Jaccard score. Extending our experiments to individual languages reveals that the models we analyze perform significantly worse for Slovenian than for the other languages in our dataset. To investigate this, we assess the effect of translation quality on prediction performance. The results indicate that the disparity in performance is most likely due to poor translation quality. We release our dataset and scripts at: https://github.com/Swati17293/KG-Multi-Bias for future research. Our framework has the potential to benefit journalists, social scientists, news producers, and consumers.

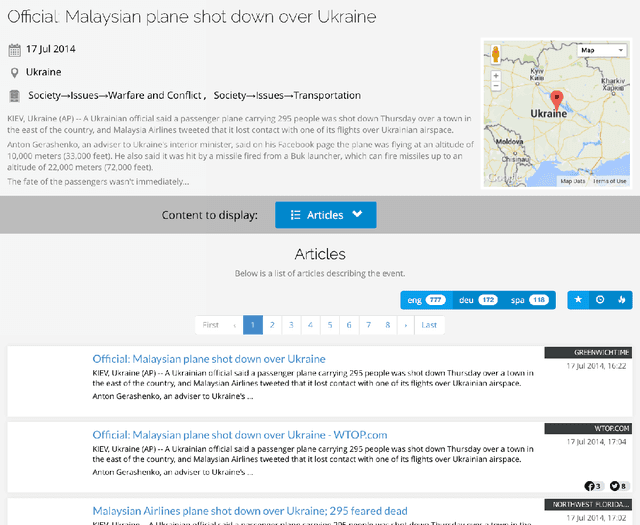

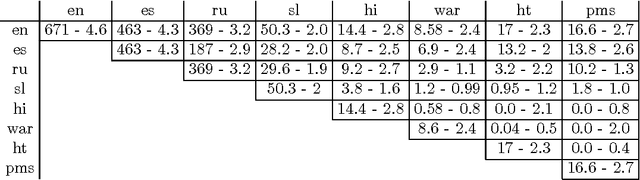

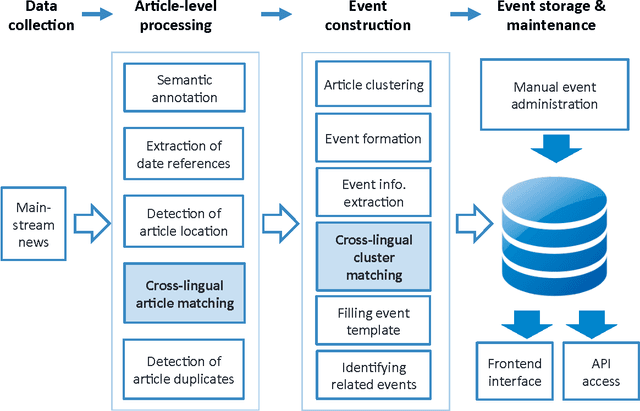

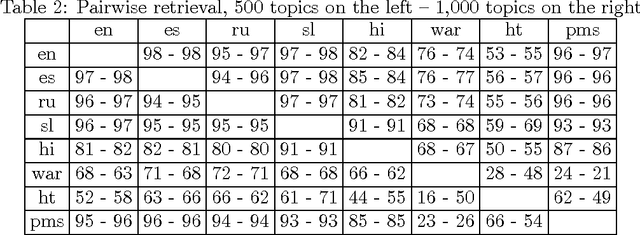

News Across Languages - Cross-Lingual Document Similarity and Event Tracking

Dec 22, 2015

Abstract: In today's world, we follow news which is distributed globally. Significant events are reported by different sources and in different languages. In this work, we address the problem of tracking events in a large multilingual stream. Within Event Registry, a recently developed system, we examine two aspects of this problem: how to compare articles in different languages and how to link collections of articles in different languages which refer to the same event. Taking a multilingual stream and clusters of articles from each language, we compare different cross-lingual document similarity measures based on Wikipedia. This allows us to compute the similarity of any two articles regardless of language. Building on previous work, we show there are methods which scale well and can compute a meaningful similarity between articles from languages with little or no direct overlap in the training data. Using this capability, we then propose an approach to link clusters of articles across languages which represent the same event. We provide an extensive evaluation of the system as a whole, as well as an evaluation of the quality and robustness of the similarity measure and the linking algorithm.
