Simon Münker

Agent-Based Simulations of Online Political Discussions: A Case Study on Elections in Germany

Mar 31, 2025

Abstract: User engagement on social media platforms is influenced by historical context, time constraints, and reward-driven interactions. This study presents an agent-based simulation approach that models user interactions, considering past conversation history, motivation, and resource constraints. Utilizing German Twitter data on political discourse, we fine-tune AI models to generate posts and replies, incorporating sentiment analysis, irony detection, and offensiveness classification. The simulation employs a myopic best-response model to govern agent behavior, accounting for decision-making based on expected rewards. Our results highlight the impact of historical context on AI-generated responses and demonstrate how engagement evolves under varying constraints.
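The myopic best-response rule named in the abstract can be illustrated with a small sketch. This is not the paper's implementation: the `Action` class, the action names, the reward and cost values, and the budget are all hypothetical and chosen only to show the idea that an agent picks the affordable action with the highest immediate expected payoff and ignores future consequences.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Action:
    """A hypothetical agent action (names and numbers are illustrative)."""
    name: str
    expected_reward: float  # assumed estimate, e.g. expected engagement
    cost: float             # assumed time or effort cost


def myopic_best_response(actions: List[Action], budget: float) -> Optional[Action]:
    """Return the affordable action with the highest immediate net payoff.

    "Myopic" means only the current step counts: no look-ahead, no
    discounting of future rewards. Returns None if nothing is affordable.
    """
    affordable = [a for a in actions if a.cost <= budget]
    if not affordable:
        return None
    return max(affordable, key=lambda a: a.expected_reward - a.cost)


# Hypothetical action set for one simulated user.
actions = [
    Action("post", expected_reward=3.0, cost=2.0),
    Action("reply", expected_reward=2.5, cost=1.0),
    Action("idle", expected_reward=0.0, cost=0.0),
]

choice = myopic_best_response(actions, budget=1.5)
print(choice.name if choice else "no affordable action")
```

In a full simulation, a rule of this kind would be re-evaluated at every step with updated reward estimates and remaining budget; how the paper estimates rewards is not stated in the abstract.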

Towards "Differential AI Psychology" and in-context Value-driven Statement Alignment with Moral Foundations Theory

Aug 21, 2024

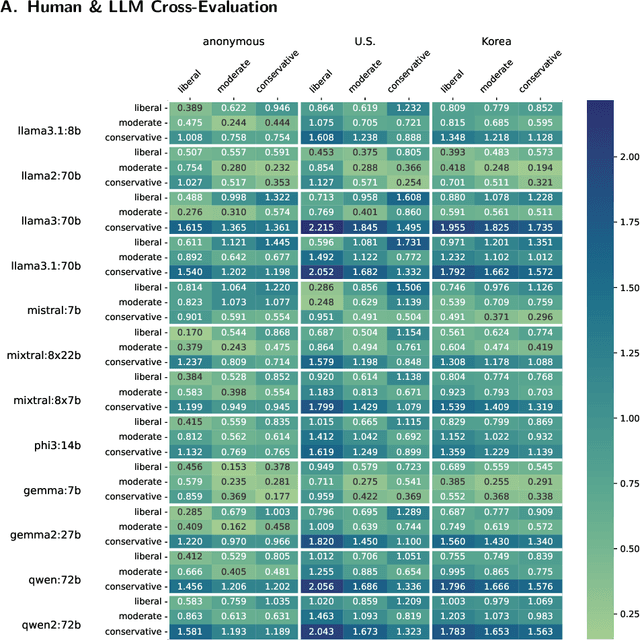

Abstract: Contemporary research in the social sciences increasingly uses state-of-the-art statistical language models to annotate or generate content. While these models achieve benchmark-leading performance on common language tasks and show exemplary task-independent emergent abilities, their transfer to novel out-of-domain tasks remains insufficiently explored. The implications of the statistical black-box approach ("stochastic parrots") are prominently criticized in the language model research community; however, their significance for novel generative tasks is not. This work investigates the alignment between personalized language models and survey participants on a Moral Foundations Theory questionnaire. We adapt text-to-text models to different political personas and administer the questionnaire repeatedly to generate a synthetic population of persona and model combinations. Analyzing the intra-group variance and cross-alignment shows significant differences across models and personas. Our findings indicate that adapted models struggle to represent the survey-captured assessment of political ideologies. Thus, using language models to mimic social interactions requires measurable improvements in in-context optimization or parameter manipulation to align with psychological and sociological stereotypes. Without quantifiable alignment, generating politically nuanced content remains infeasible. To enhance these representations, we propose a testable framework to generate agents based on moral value statements for future research.

Zero-shot prompt-based classification: topic labeling in times of foundation models in German Tweets

Jun 26, 2024

Abstract: Filtering and annotating textual data are routine tasks in many areas, like social media or news analytics. Automating these tasks allows analyses to scale in speed and breadth of content covered and decreases the manual effort required. Due to technical advancements in Natural Language Processing, specifically the success of large foundation models, a new tool for automating such annotation processes has become available: a text-to-text interface that is given written guidelines and needs no training samples. In this work, we assess these advancements in the wild by empirically testing them in an annotation task on German Twitter data about social and political European crises. We compare the prompt-based results with our human annotation and preceding classification approaches, including Naive Bayes and a BERT-based fine-tuning/domain adaptation pipeline. Our results show that the prompt-based approach, despite being limited by local computation resources during model selection, is comparable with the fine-tuned BERT but requires no annotated training data. Our findings emphasize the ongoing paradigm shift in the NLP landscape, i.e., the unification of downstream tasks and elimination of the need for pre-labeled training data.
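As a rough illustration of what classification "given written guidelines without providing training samples" can look like, the sketch below builds a zero-shot prompt and maps a model's free-text answer back to a label. The guideline text, the label set, the example tweet, and both helper functions are hypothetical and not taken from the paper; the call to an actual language model is deliberately left out.

```python
from typing import List, Optional


def build_zero_shot_prompt(guidelines: str, labels: List[str], text: str) -> str:
    """Assemble a prompt from written guidelines only (no labeled examples)."""
    label_list = ", ".join(labels)
    return (
        f"{guidelines}\n\n"
        f"Possible labels: {label_list}\n"
        f"Text: {text}\n"
        "Answer with exactly one label."
    )


def parse_label(response: str, labels: List[str]) -> Optional[str]:
    """Map a raw model answer to a known label, or None if it matches none."""
    cleaned = response.strip().strip(".\"'").lower()
    for label in labels:
        if cleaned == label.lower():
            return label
    return None


# Hypothetical guidelines and labels, for illustration only.
guidelines = "Assign the tweet to the crisis topic it mainly discusses."
labels = ["energy", "migration", "other"]

prompt = build_zero_shot_prompt(guidelines, labels, "Gaspreise steigen weiter.")
print(prompt)

# A model's answer would be passed to parse_label; here a stand-in string is used.
print(parse_label(" Energy. ", labels))
```

Returning `None` for unparseable answers keeps such cases visible, so they can be counted or re-queried rather than silently assigned to a class.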
