Marian-Andrei Rizoiu

Beyond Content: Behavioral Policies Reveal Actors in Information Operations

Feb 02, 2026

Abstract: The detection of online influence operations -- coordinated campaigns by malicious actors to spread narratives -- has traditionally depended on content analysis or network features. These approaches are increasingly brittle as generative models produce convincing text, platforms restrict access to behavioral data, and actors migrate to less-regulated spaces. We introduce a platform-agnostic framework that identifies malicious actors from their behavioral policies by modeling user activity as sequential decision processes. We apply this approach to 12,064 Reddit users, including 99 accounts linked to the Russian Internet Research Agency in Reddit's 2017 transparency report, analyzing over 38 million activity steps from 2015-2018. Activity-based representations, which model how users act rather than what they post, consistently outperform content models in detecting malicious accounts. When distinguishing trolls -- users engaged in coordinated manipulation -- from ordinary users, policy-based classifiers achieve a median macro-$F_1$ of 94.9%, compared to 91.2% for text embeddings. Policy features also enable earlier detection from short traces and degrade more gracefully under evasion strategies or data corruption. These findings show that behavioral dynamics encode stable, discriminative signals of manipulation and point to resilient, cross-platform detection strategies in the era of synthetic content and limited data access.
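For readers unfamiliar with the macro-$F_1$ metric quoted above, the following is a minimal sketch of how it is computed: the per-class F1 scores are averaged with equal weight, so a rare class such as trolls counts as much as the majority class. The function name `macro_f1` and the toy labels are illustrative assumptions, not the paper's code or data.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (illustrative helper)."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never appears.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Toy labels (hypothetical): two trolls among six accounts.
y_true = ["troll", "user", "user", "user", "troll", "user"]
y_pred = ["troll", "user", "user", "troll", "user", "user"]
print(macro_f1(y_true, y_pred))  # troll F1 = 0.5, user F1 = 0.75, mean = 0.625
```

Because the two classes are weighted equally, a classifier that labels every account as an ordinary user would score poorly on this metric even when trolls are under 1% of the data.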

DREAMS: A Social Exchange Theory-Informed Modeling of Misinformation Engagement on Social Media

Feb 02, 2026

Abstract: Social media engagement prediction is a central challenge in computational social science, particularly for understanding how users interact with misinformation. Existing approaches often treat engagement as a homogeneous time-series signal, overlooking the heterogeneous social mechanisms and platform designs that shape how misinformation spreads. In this work, we ask: "Can neural architectures discover social exchange principles from behavioral data alone?" We introduce DREAMS (Disentangled Representations and Episodic Adaptive Modeling for Social media misinformation engagements), a social exchange theory-guided framework that models misinformation engagement as a dynamic process of social exchange. Rather than treating engagement as a static outcome, DREAMS models it as a sequence-to-sequence adaptation problem, where each action reflects an evolving negotiation between user effort and social reward conditioned by platform context. It integrates adaptive mechanisms to learn how emotional and contextual signals propagate through time and across platforms. On a cross-platform dataset spanning 7 platforms and 2.37M posts collected between 2021 and 2025, DREAMS achieves state-of-the-art performance in predicting misinformation engagements, reaching a mean absolute percentage error of 19.25%. This is a 43.6% improvement over the strongest baseline. Beyond predictive gains, the model reveals consistent cross-platform patterns that align with social exchange principles, suggesting that integrating behavioral theory can enhance empirical modeling of online misinformation engagement. The source code is available at: https://github.com/ltian678/DREAMS.

Learning to Make Friends: Coaching LLM Agents toward Emergent Social Ties

Oct 22, 2025

Abstract: Can large language model (LLM) agents reproduce the complex social dynamics that characterize human online behavior -- shaped by homophily, reciprocity, and social validation -- and what memory and learning mechanisms enable such dynamics to emerge? We present a multi-agent LLM simulation framework in which agents repeatedly interact, evaluate one another, and adapt their behavior through in-context learning accelerated by a coaching signal. To model human social behavior, we design behavioral reward functions that capture core drivers of online engagement, including social interaction, information seeking, self-presentation, coordination, and emotional support. These rewards align agent objectives with empirically observed user motivations, enabling the study of how network structures and group formations emerge from individual decision-making. Our experiments show that coached LLM agents develop stable interaction patterns and form emergent social ties, yielding network structures that mirror properties of real online communities. By combining behavioral rewards with in-context adaptation, our framework establishes a principled testbed for investigating collective dynamics in LLM populations and reveals how artificial agents may approximate or diverge from human-like social behavior.

Estimating Online Influence Needs Causal Modeling! Counterfactual Analysis of Social Media Engagement

May 25, 2025

Abstract: Understanding true influence in social media requires distinguishing correlation from causation -- particularly when analyzing misinformation spread. While existing approaches focus on exposure metrics and network structures, they often fail to capture the causal mechanisms by which external temporal signals trigger engagement. We introduce a novel joint treatment-outcome framework that leverages existing sequential models to simultaneously adapt to both policy timing and engagement effects. Our approach adapts causal inference techniques from healthcare to estimate Average Treatment Effects (ATE) within the sequential nature of social media interactions, tackling challenges from external confounding signals. Through our experiments on real-world misinformation and disinformation datasets, we show that our models outperform existing benchmarks by 15-22% in predicting engagement across diverse counterfactual scenarios, including exposure adjustment, timing shifts, and varied intervention durations. Case studies on 492 social media users show our causal effect measure aligns strongly with the gold standard in influence estimation, the expert-based empirical influence.

Before It's Too Late: A State Space Model for the Early Prediction of Misinformation and Disinformation Engagement

Feb 07, 2025

Abstract: In today's digital age, conspiracies and information campaigns can emerge rapidly and erode social and democratic cohesion. While recent deep learning approaches have made progress in modeling engagement through language and propagation models, they struggle with irregularly sampled data and early trajectory assessment. We present IC-Mamba, a novel state space model that forecasts social media engagement by modeling interval-censored data with integrated temporal embeddings. Our model excels at predicting engagement patterns within the crucial first 15-30 minutes of posting (RMSE 0.118-0.143), enabling rapid assessment of content reach. By incorporating interval-censored modeling into the state space framework, IC-Mamba captures fine-grained temporal dynamics of engagement growth, achieving a 4.72% improvement over state-of-the-art across multiple engagement metrics (likes, shares, comments, and emojis). Our experiments demonstrate IC-Mamba's effectiveness in forecasting both post-level dynamics and broader narrative patterns (F1 0.508-0.751 for narrative-level predictions). The model maintains strong predictive performance across extended time horizons, successfully forecasting opinion-level engagement up to 28 days ahead using observation windows of 3-10 days. These capabilities enable earlier identification of potentially problematic content, providing crucial lead time for designing and implementing countermeasures. Code is available at: https://github.com/ltian678/ic-mamba. An interactive dashboard demonstrating our results is available at: https://ic-mamba.behavioral-ds.science.

Behavioral Homophily in Social Media via Inverse Reinforcement Learning: A Reddit Case Study

Feb 05, 2025

Abstract: Online communities play a critical role in shaping societal discourse and influencing collective behavior in the real world. The tendency for people to connect with others who share similar characteristics and views, known as homophily, plays a key role in the formation of echo chambers, which further amplify polarization and division. Existing works examining homophily in online communities traditionally infer it using content- or adjacency-based approaches, such as constructing explicit interaction networks or performing topic analysis. These methods fall short for platforms where interaction networks cannot be easily constructed and fail to capture the complex nature of user interactions across the platform. This work introduces a novel approach for quantifying user homophily. We first use an Inverse Reinforcement Learning (IRL) framework to infer users' policies, then use these policies as a measure of behavioral homophily. We apply our method to Reddit, conducting a case study across 5.9 million interactions over six years, demonstrating how this approach uncovers distinct behavioral patterns and user roles that vary across different communities. We further validate our behavioral homophily measure against traditional content-based homophily, offering a powerful method for analyzing social media dynamics and their broader societal implications. We find, among other things, that users can behave very similarly (high behavioral homophily) when discussing entirely different topics like soccer vs e-sports (low topical homophily), and that there is an entire class of users on Reddit whose purpose seems to be to disagree with others.

What Drives Online Popularity: Author, Content or Sharers? Estimating Spread Dynamics with Bayesian Mixture Hawkes

Jun 12, 2024

Abstract: The spread of content on social media is shaped by intertwining factors on three levels: the source, the content itself, and the pathways of content spread. At the lowest level, the popularity of the sharing user determines its eventual reach. However, higher-level factors such as the nature of the online item and the credibility of its source also play crucial roles in determining how widely and rapidly the online item spreads. In this work, we propose the Bayesian Mixture Hawkes (BMH) model to jointly learn the influence of source, content and spread. We formulate the BMH model as a hierarchical mixture model of separable Hawkes processes, accommodating different classes of Hawkes dynamics and the influence of feature sets on these classes. We test the BMH model on two learning tasks, cold-start popularity prediction and temporal profile generalization performance, applying to two real-world retweet cascade datasets referencing articles from controversial and traditional media publishers. The BMH model outperforms the state-of-the-art models and predictive baselines on both datasets and utilizes cascade- and item-level information better than the alternatives. Lastly, we perform a counterfactual analysis where we apply the trained publisher-level BMH models to a set of article headlines and show that the effectiveness of headline writing style (neutral, clickbait, inflammatory) varies across publishers. The BMH model unveils differences in style effectiveness between controversial and reputable publishers, where we find clickbait to be notably more effective for reputable publishers as opposed to controversial ones, which links to the latter's overuse of clickbait.
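As background for the Hawkes dynamics mentioned above, here is a minimal sketch of the conditional intensity of a plain univariate Hawkes process, in which each past event (for example a retweet) temporarily raises the rate of future events. The exponential kernel and the parameter names `mu`, `alpha`, and `beta` are assumptions chosen for illustration; this is not the BMH model, its mixture structure, or its kernel.

```python
import math


def hawkes_intensity(t, event_times, mu, alpha, beta):
    """Event rate at time t given the history of earlier events.

    lambda(t) = mu + sum over t_i < t of alpha * beta * exp(-beta * (t - t_i))

    mu    : background rate of events that are not triggered by earlier ones
    alpha : expected number of direct follow-up events per event
    beta  : decay rate of each event's influence
    """
    triggered = sum(
        alpha * beta * math.exp(-beta * (t - ti))
        for ti in event_times
        if ti < t  # only events strictly before t contribute
    )
    return mu + triggered


# Hypothetical retweet times (in hours) for a single cascade.
retweets = [0.0, 0.2, 0.5, 1.5]
for t in (0.6, 2.0, 6.0):
    print(t, hawkes_intensity(t, retweets, mu=0.1, alpha=0.8, beta=1.0))
```

With this kernel the intensity spikes after each event and decays back toward `mu`, which is the self-exciting behavior that makes Hawkes processes a common choice for modeling cascades.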

Author, Content or Sharers? Estimating Spread Dynamics with Bayesian Mixture Hawkes

Jun 05, 2024

Abstract: The spread of content on social media is shaped by intertwining factors on three levels: the source, the content itself, and the pathways of content spread. At the lowest level, the popularity of the sharing user determines its eventual reach. However, higher-level factors such as the nature of the online item and the credibility of its source also play crucial roles in determining how widely and rapidly the online item spreads. In this work, we propose the Bayesian Mixture Hawkes (BMH) model to jointly learn the influence of source, content and spread. We formulate the BMH model as a hierarchical mixture model of separable Hawkes processes, accommodating different classes of Hawkes dynamics and the influence of feature sets on these classes. We test the BMH model on two learning tasks, cold-start popularity prediction and temporal profile generalization performance, applying to two real-world retweet cascade datasets referencing articles from controversial and traditional media publishers. The BMH model outperforms the state-of-the-art models and predictive baselines on both datasets and utilizes cascade- and item-level information better than the alternatives. Lastly, we perform a counterfactual analysis where we apply the trained publisher-level BMH models to a set of article headlines and show that the effectiveness of headline writing style (neutral, clickbait, inflammatory) varies across publishers. The BMH model unveils differences in style effectiveness between controversial and reputable publishers, where we find clickbait to be notably more effective for reputable publishers as opposed to controversial ones, which links to the latter's overuse of clickbait.

Misinformation is not about Bad Facts: An Analysis of the Production and Consumption of Fringe Content

Mar 13, 2024

Abstract: What if misinformation is not an information problem at all? Our findings suggest that online fringe ideologies spread through the use of content that is consensus-based and "factually correct". We found that Australian news publishers with both moderate and far-right political leanings contain comparable levels of information completeness and quality; and furthermore, that far-right Twitter users often share from moderate sources. However, a stark difference emerges when we consider two additional factors: 1) the narrow topic selection of articles by far-right users, suggesting that they cherry-pick only news articles that engage with specific topics of their concern, and 2) the difference between moderate and far-right publishers when we examine the writing style of their articles. Furthermore, we can even identify users prone to sharing misinformation based on their communication style. These findings have important implications for countering online misinformation, as they highlight the powerful role that users' personal bias towards specific topics, and publishers' writing styles, have in amplifying fringe ideologies online.

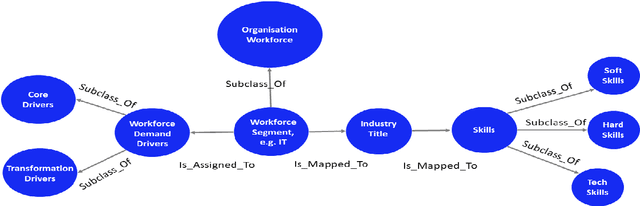

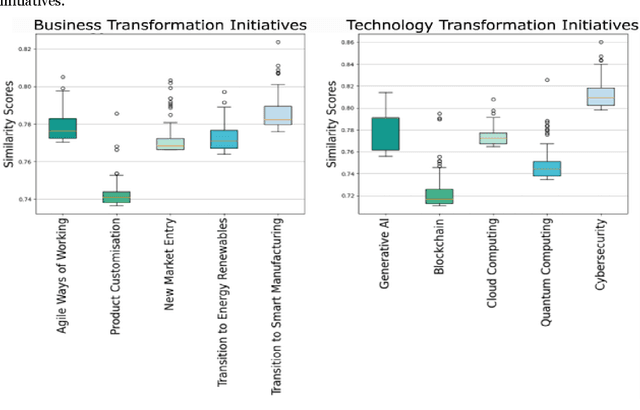

The Innovation-to-Occupations Ontology: Linking Business Transformation Initiatives to Occupations and Skills

Oct 27, 2023

Abstract: The fast adoption of new technologies forces companies to continuously adapt their operations, making it harder to predict workforce requirements. Several recent studies have attempted to predict the emergence of new roles and skills in the labour market from online job ads. This paper presents a novel ontology linking business transformation initiatives to occupations and an approach to automatically populating it by leveraging embeddings extracted from job ads and Wikipedia pages on business transformation and emerging technologies topics. To our knowledge, no previous research explicitly links business transformation initiatives, like the adoption of new technologies or the entry into new markets, to the roles needed. Our approach successfully matches occupations to transformation initiatives under ten different scenarios, five linked to technology adoption and five related to business. This framework presents an innovative approach to guide enterprises and educational institutions on the workforce requirements for specific business transformation initiatives.
