Didar Zowghi

Diversity and Inclusion in AI for Recruitment: Lessons from Industry Workshop

Nov 09, 2024

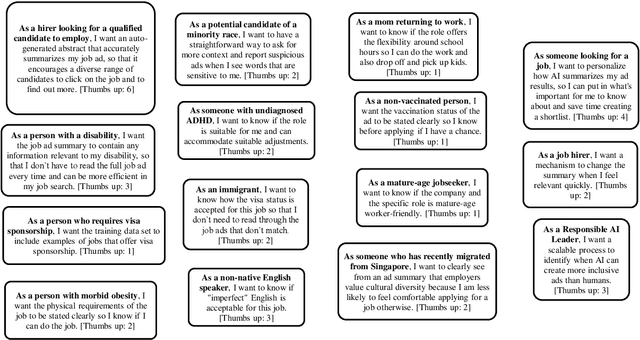

Abstract: Artificial Intelligence (AI) systems for online recruitment markets have the potential to significantly enhance the efficiency and effectiveness of job placements and even promote fairness or inclusive hiring practices. Neglecting Diversity and Inclusion (D&I) in these systems, however, can perpetuate biases, leading to unfair hiring practices and decreased workplace diversity, while exposing organisations to legal and reputational risks. Despite the acknowledged importance of D&I in AI, there is a gap in research on effectively implementing D&I guidelines in real-world recruitment systems. Challenges include a lack of awareness and framework for operationalising D&I in a cost-effective, context-sensitive manner. This study aims to investigate the practical application of D&I guidelines in AI-driven online job-seeking systems, specifically exploring how these principles can be operationalised to create more inclusive recruitment processes. We conducted a co-design workshop with a large multinational recruitment company focusing on two AI-driven recruitment use cases. User stories and personas were applied to evaluate the impacts of AI on diverse stakeholders. Follow-up interviews were conducted to assess the workshop's long-term effects on participants' awareness and application of D&I principles. The co-design workshop successfully increased participants' understanding of D&I in AI. However, translating awareness into operational practice posed challenges, particularly in balancing D&I with business goals. The results suggest developing tailored D&I guidelines and ongoing support to ensure the effective adoption of inclusive AI practices.

AI for All: Identifying AI incidents Related to Diversity and Inclusion

Jul 19, 2024

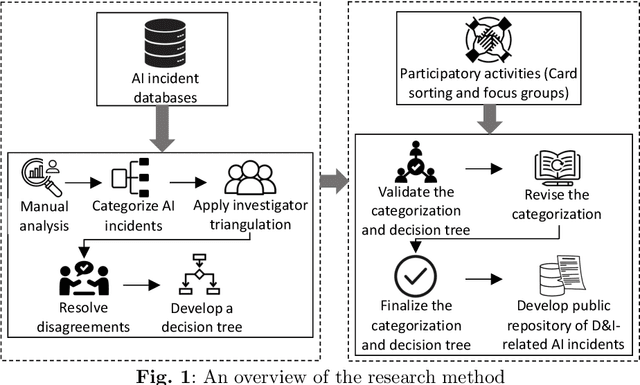

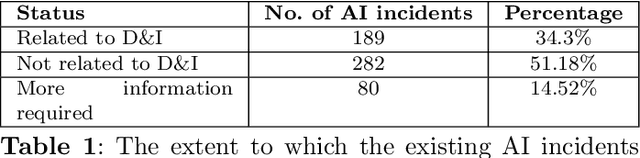

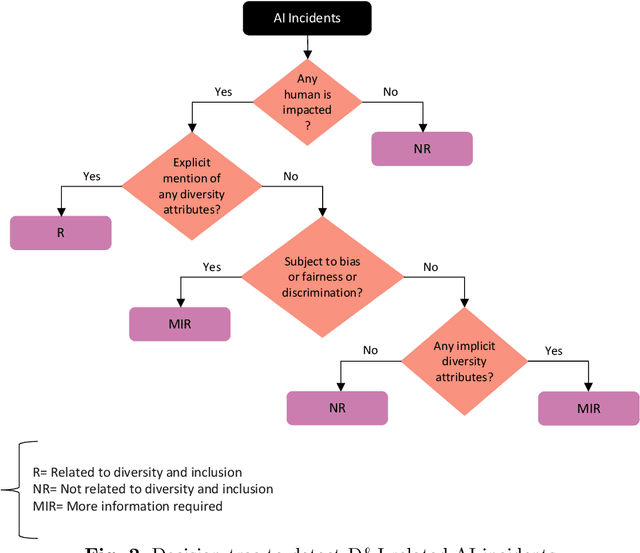

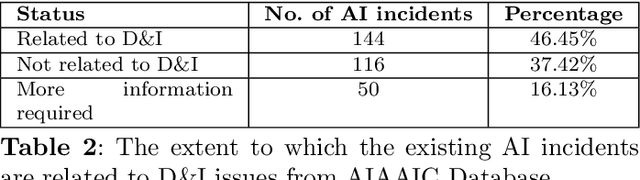

Abstract: The rapid expansion of Artificial Intelligence (AI) technologies has introduced both significant advancements and challenges, with diversity and inclusion (D&I) emerging as a critical concern. Addressing D&I in AI is essential to reduce biases and discrimination, enhance fairness, and prevent adverse societal impacts. Despite its importance, D&I considerations are often overlooked, resulting in incidents marked by built-in biases and ethical dilemmas. Analyzing AI incidents through a D&I lens is crucial for identifying causes of biases and developing strategies to mitigate them, ensuring fairer and more equitable AI technologies. However, systematic investigations of D&I-related AI incidents are scarce. This study addresses these challenges by identifying and understanding D&I issues within AI systems through a manual analysis of AI incident databases (AIID and AIAAIC). The research develops a decision tree to investigate D&I issues tied to AI incidents and populate a public repository of D&I-related AI incidents. The decision tree was validated through a card sorting exercise and focus group discussions. The research demonstrates that almost half of the analyzed AI incidents are related to D&I, with a notable predominance of racial, gender, and age discrimination. The decision tree and resulting public repository aim to foster further research and responsible AI practices, promoting the development of inclusive and equitable AI systems.

Investigating Responsible AI for Scientific Research: An Empirical Study

Dec 15, 2023

Abstract: Scientific research organizations that are developing and deploying Artificial Intelligence (AI) systems are at the intersection of technological progress and ethical considerations. The push for Responsible AI (RAI) in such institutions underscores the increasing emphasis on integrating ethical considerations within AI design and development, championing core values like fairness, accountability, and transparency. For scientific research organizations, prioritizing these practices is paramount not just for mitigating biases and ensuring inclusivity, but also for fostering trust in AI systems among both users and broader stakeholders. In this paper, we explore RAI practices at a research organization, aiming to assess the awareness and preparedness regarding the ethical risks inherent in AI design and development. We have adopted a mixed-method research approach, utilising a comprehensive survey combined with follow-up in-depth interviews with selected participants from AI-related projects. Our results have revealed certain knowledge gaps concerning ethical, responsible, and inclusive AI, with limitations in awareness of the available AI ethics frameworks. This revealed an overarching underestimation of the ethical risks that AI technologies can present, especially when implemented without proper guidelines and governance. Our findings reveal the need for a holistic and multi-tiered strategy to uplift capabilities and better support science research teams for responsible, ethical, and inclusive AI development and deployment.

A Vision for Operationalising Diversity and Inclusion in AI

Dec 11, 2023

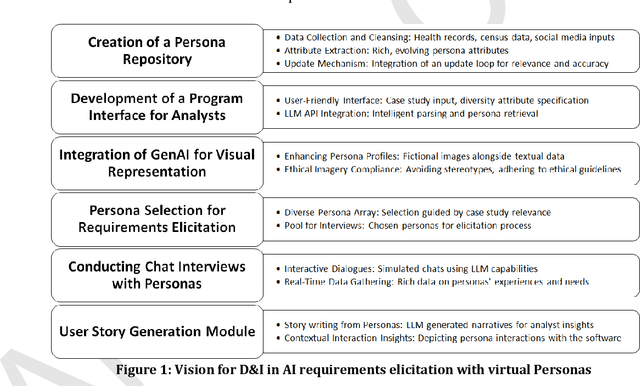

Abstract: The growing presence of Artificial Intelligence (AI) in various sectors necessitates systems that accurately reflect societal diversity. This study seeks to envision the operationalization of the ethical imperatives of diversity and inclusion (D&I) within AI ecosystems, addressing the current disconnect between ethical guidelines and their practical implementation. A significant challenge in AI development is the effective operationalization of D&I principles, which is critical to prevent the reinforcement of existing biases and ensure equity across AI applications. This paper proposes a vision of a framework for developing a tool utilizing persona-based simulation by Generative AI (GenAI). The approach aims to facilitate the representation of the needs of diverse users in the requirements analysis process for AI software. The proposed framework is expected to lead to a comprehensive persona repository with diverse attributes that inform the development process with detailed user narratives. This research contributes to the development of an inclusive AI paradigm that ensures future technological advances are designed with a commitment to the diverse fabric of humanity.

The Innovation-to-Occupations Ontology: Linking Business Transformation Initiatives to Occupations and Skills

Oct 27, 2023

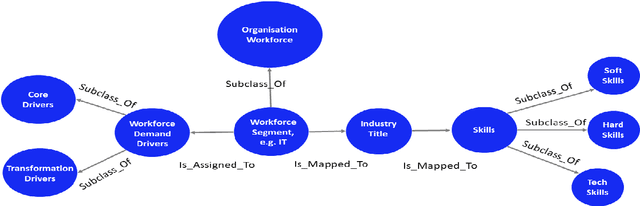

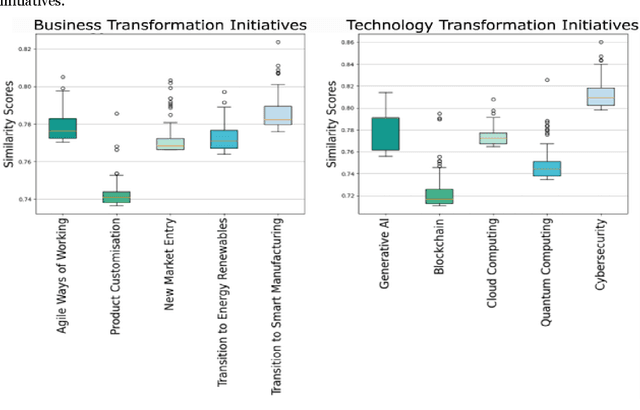

Abstract: The fast adoption of new technologies forces companies to continuously adapt their operations, making it harder to predict workforce requirements. Several recent studies have attempted to predict the emergence of new roles and skills in the labour market from online job ads. This paper presents a novel ontology linking business transformation initiatives to occupations and an approach to automatically populating it by leveraging embeddings extracted from job ads and Wikipedia pages on business transformation and emerging technologies topics. To our knowledge, no previous research explicitly links business transformation initiatives, like the adoption of new technologies or the entry into new markets, to the roles needed. Our approach successfully matches occupations to transformation initiatives under ten different scenarios, five linked to technology adoption and five related to business. This framework presents an innovative approach to guide enterprises and educational institutions on the workforce requirements for specific business transformation initiatives.

Challenges and Solutions in AI for All

Jul 20, 2023

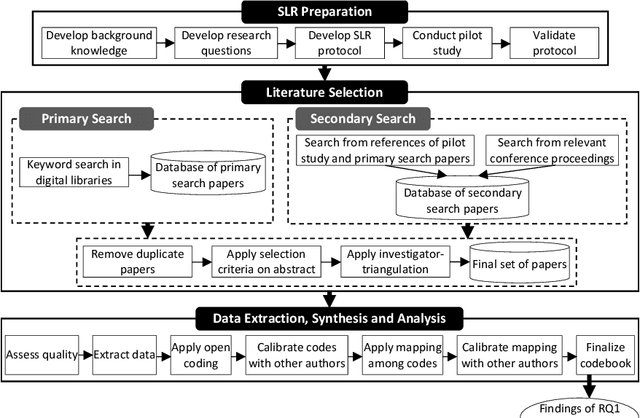

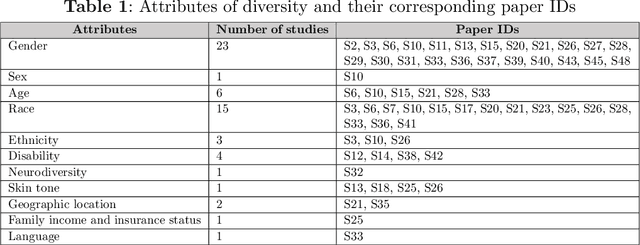

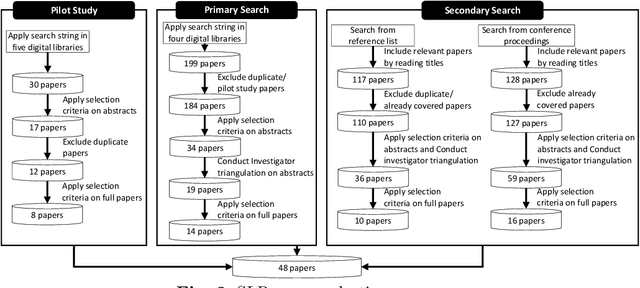

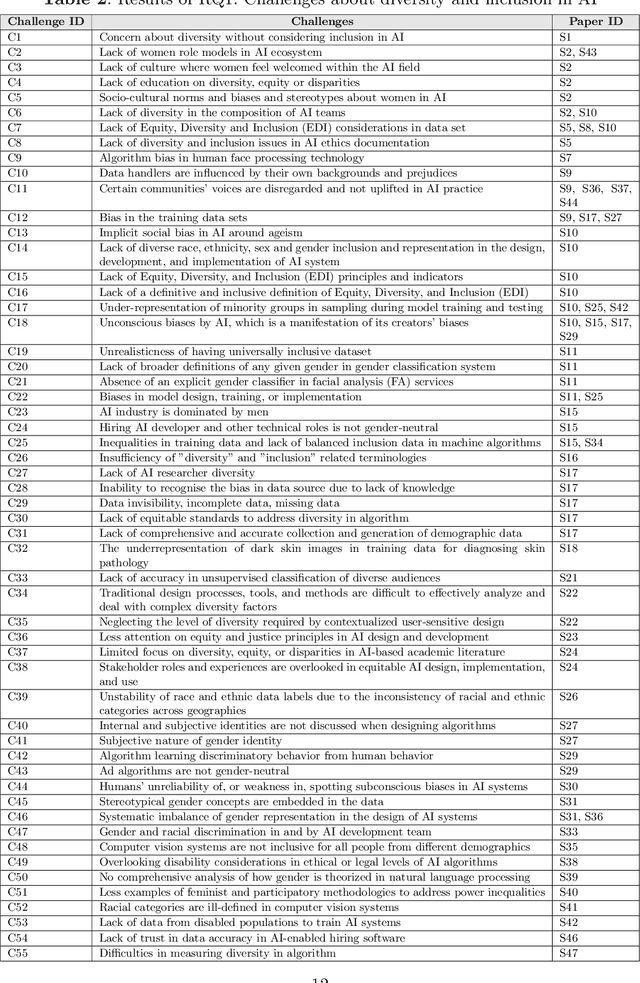

Abstract: Artificial Intelligence (AI)'s pervasive presence and variety necessitate diversity and inclusivity (D&I) principles in its design for fairness, trust, and transparency. Yet, these considerations are often overlooked, leading to issues of bias, discrimination, and perceived untrustworthiness. In response, we conducted a Systematic Review to unearth challenges and solutions relating to D&I in AI. Our rigorous search yielded 48 research articles published between 2017 and 2022. Open coding of these papers revealed 55 unique challenges and 33 solutions for D&I in AI, as well as 24 unique challenges and 23 solutions for enhancing such practices using AI. This study, by offering a deeper understanding of these issues, will enlighten researchers and practitioners seeking to integrate these principles into future AI systems.

Exploring Qualitative Research Using LLMs

Jun 23, 2023

Abstract: The advent of AI-driven large language models (LLMs) has stirred discussions about their role in qualitative research. Some view these as tools to enrich human understanding, while others perceive them as threats to the core values of the discipline. This study aimed to compare and contrast the comprehension capabilities of humans and LLMs. We conducted an experiment with a small sample of Alexa app reviews, initially classified by a human analyst. LLMs were then asked to classify these reviews and provide the reasoning behind each classification. We compared the results with human classification and reasoning. The research indicated a significant alignment between human and ChatGPT 3.5 classifications in one third of cases, and a slightly lower alignment with GPT4 in over a quarter of cases. The two AI models showed a higher alignment, observed in more than half of the instances. However, a consensus across all three methods was seen only in about one fifth of the classifications. In the comparison of human and LLM reasoning, it appears that human analysts lean heavily on their individual experiences. As expected, LLMs, on the other hand, base their reasoning on the specific word choices found in app reviews and the functional components of the app itself. Our results highlight the potential for effective human-LLM collaboration, suggesting a synergistic rather than competitive relationship. Researchers must continuously evaluate LLMs' role in their work, thereby fostering a future where AI and humans jointly enrich qualitative research.

Diversity and Inclusion in Artificial Intelligence

May 22, 2023

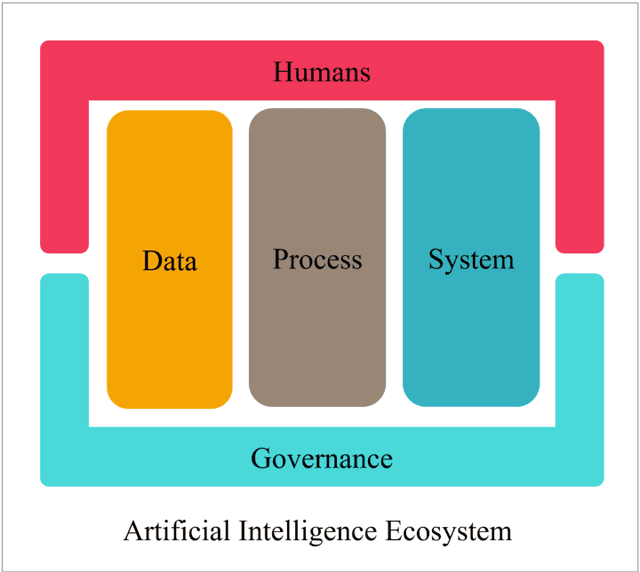

Abstract: To date, there has been little concrete practical advice about how to ensure that diversity and inclusion considerations are embedded within both specific Artificial Intelligence (AI) systems and the larger global AI ecosystem. In this chapter, we present a clear definition of diversity and inclusion in AI, one which positions this concept within an evolving and holistic ecosystem. We use this definition and conceptual framing to present a set of practical guidelines primarily aimed at AI technologists, data scientists and project leaders.

Responsible AI Pattern Catalogue: A Multivocal Literature Review

Sep 15, 2022

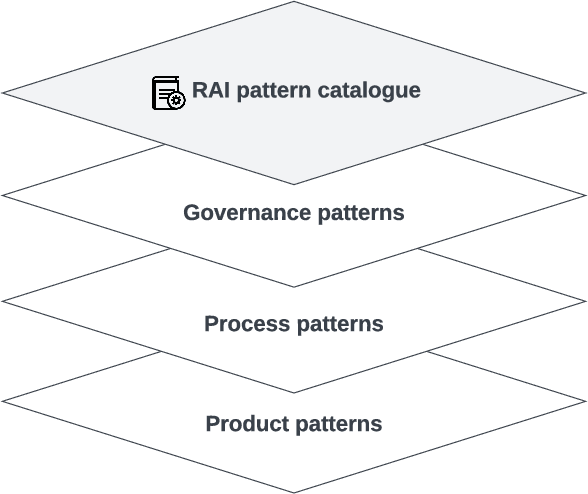

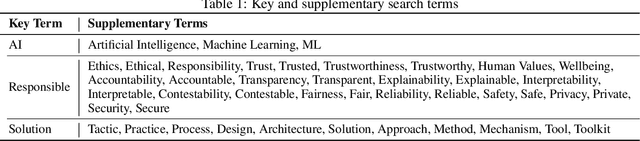

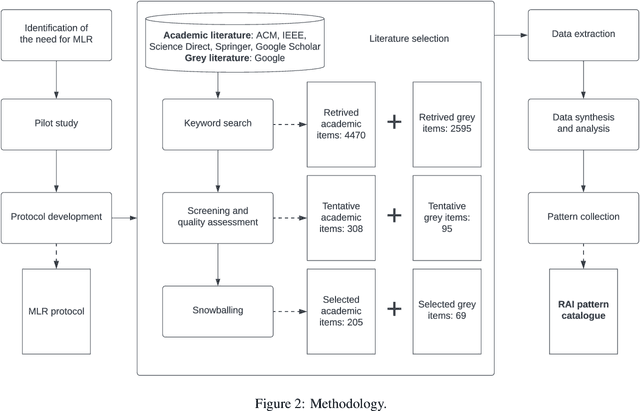

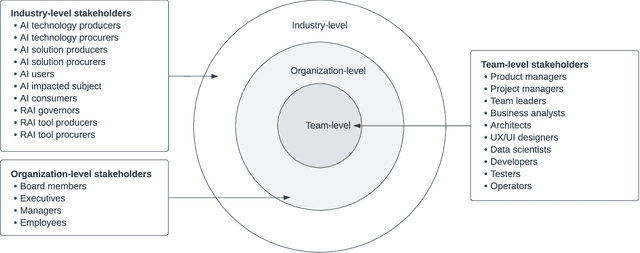

Abstract: Responsible AI has been widely considered as one of the greatest scientific challenges of our time and the key to increasing the adoption of AI. A number of AI ethics principles frameworks have been published recently. However, without further best practice guidance, practitioners are left with nothing much beyond truisms. Also, significant efforts have been placed at algorithm-level rather than system-level, mainly focusing on a subset of mathematics-amenable ethical principles (such as fairness). Nevertheless, ethical issues can occur at any step of the development lifecycle, crosscutting many AI and non-AI components of systems beyond AI algorithms and models. To operationalize responsible AI from a system perspective, in this paper, we present a Responsible AI Pattern Catalogue based on the results of a Multivocal Literature Review (MLR). Rather than staying at the principle or algorithm level, we focus on patterns that AI system stakeholders can undertake in practice to ensure that the developed AI systems are responsible throughout the entire governance and engineering lifecycle. The Responsible AI Pattern Catalogue classifies the patterns into three groups: multi-level governance patterns, trustworthy process patterns, and responsible-AI-by-design product patterns. These patterns provide systematic and actionable guidance for stakeholders to implement responsible AI.

ELICA: An Automated Tool for Dynamic Extraction of Requirements Relevant Information

Jul 21, 2018

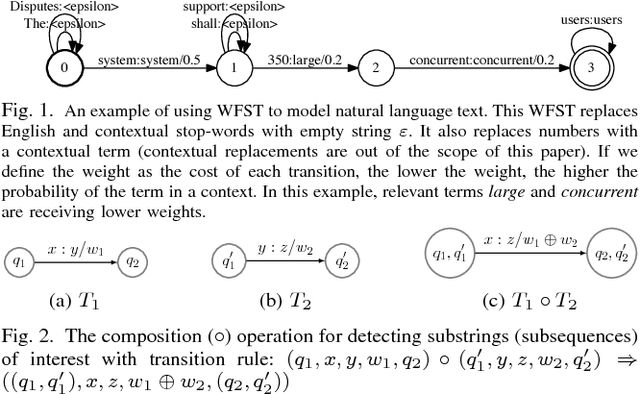

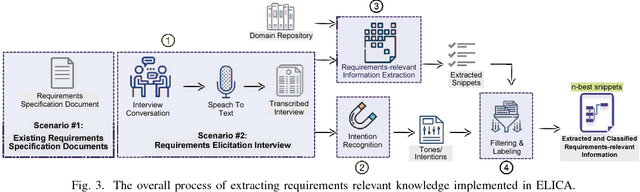

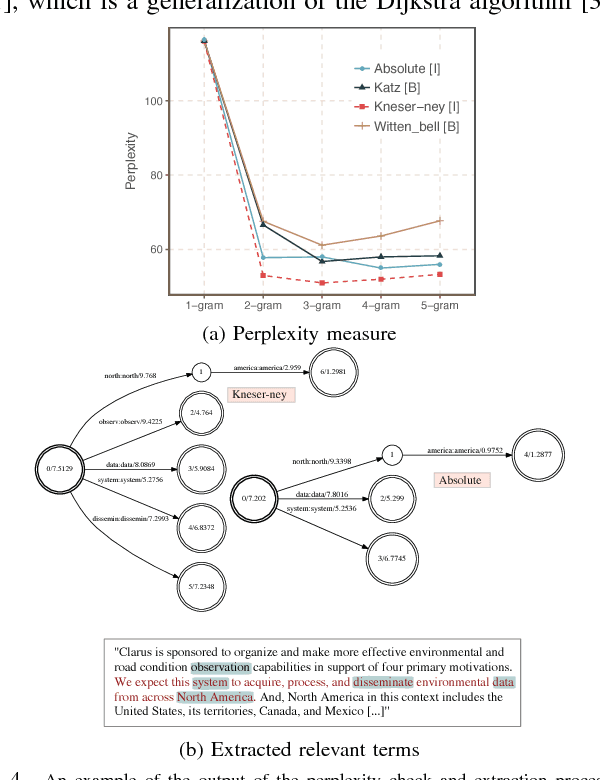

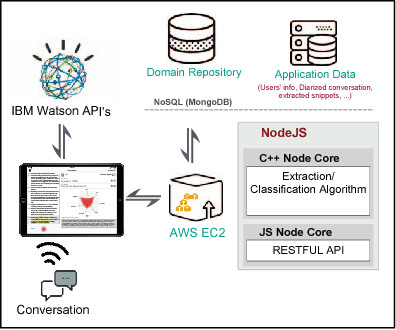

Abstract: Requirements elicitation requires extensive knowledge and deep understanding of the problem domain where the final system will be situated. However, in many software development projects, analysts are required to elicit the requirements from an unfamiliar domain, which often causes communication barriers between analysts and stakeholders. In this paper, we propose a requirements ELICitation Aid tool (ELICA) to help analysts better understand the target application domain by dynamic extraction and labeling of requirements-relevant knowledge. To extract the relevant terms, we leverage the flexibility and power of Weighted Finite State Transducers (WFSTs) in dynamic modeling of natural language processing tasks. In addition to the information conveyed through text, ELICA captures and processes non-linguistic information about the intention of speakers such as their confidence level, analytical tone, and emotions. The extracted information is made available to the analysts as a set of labeled snippets with highlighted relevant terms which can also be exported as an artifact of the Requirements Engineering (RE) process. The application and usefulness of ELICA are demonstrated through a case study. This study shows how pre-existing relevant information about the application domain and the information captured during an elicitation meeting, such as the conversation and stakeholders' intentions, can be captured and used to support analysts in achieving their tasks.
