Maria Frucci

BRACS: A Dataset for BReAst Carcinoma Subtyping in H&E Histology Images

Nov 08, 2021

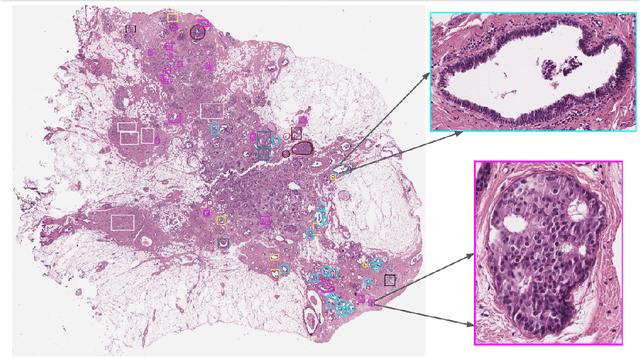

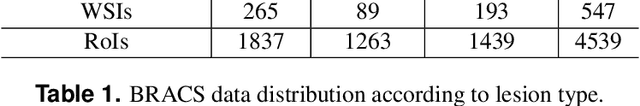

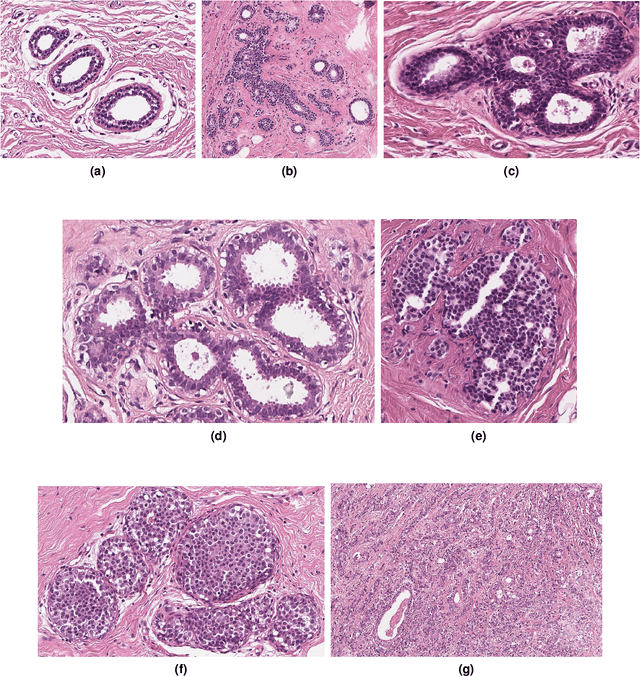

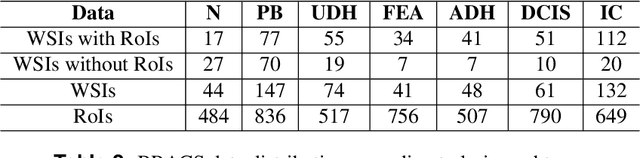

Abstract: Breast cancer is the most commonly diagnosed cancer and registers the highest number of deaths for women with cancer. Recent advancements in diagnostic activities combined with large-scale screening policies have significantly lowered the mortality rates for breast cancer patients. However, the manual inspection of tissue slides by pathologists is cumbersome, time-consuming, and subject to significant inter- and intra-observer variability. Recently, the advent of whole-slide scanning systems has enabled the rapid digitization of pathology slides and the development of digital workflows. These advances further make it possible to leverage Artificial Intelligence (AI) to assist, automate, and augment pathological diagnosis. However, AI techniques, especially Deep Learning (DL), require a large amount of high-quality annotated data to learn from. Constructing such task-specific datasets poses several challenges, such as data-acquisition constraints, time-consuming and expensive annotations, and the anonymization of private information. In this paper, we introduce the BReAst Carcinoma Subtyping (BRACS) dataset, a large cohort of annotated Hematoxylin & Eosin (H&E)-stained images to facilitate the characterization of breast lesions. BRACS contains 547 Whole-Slide Images (WSIs) and 4539 Regions of Interest (ROIs) extracted from the WSIs. Each WSI and its respective ROIs are annotated by the consensus of three board-certified pathologists into different lesion categories. Specifically, BRACS includes three lesion types, i.e., benign, malignant, and atypical, which are further subtyped into seven categories. It is, to the best of our knowledge, the largest annotated dataset for breast cancer subtyping at both the WSI and ROI level. Further, by including the understudied atypical lesions, BRACS offers a unique opportunity for leveraging AI to better understand their characteristics.

Hierarchical Graph Representations in Digital Pathology

Mar 17, 2021

Abstract: Cancer diagnosis, prognosis, and therapy response predictions from tissue specimens highly depend on the phenotype and topological distribution of the constituent histological entities. Thus, adequate tissue representations for encoding histological entities are imperative for computer-aided cancer patient care. To this end, several approaches have leveraged cell-graphs that encode cell morphology and organization to denote the tissue information. These allow machine learning to map tissue representations to tissue functionality and so help quantify their relationship. Though cellular information is crucial, it alone is insufficient to comprehensively characterize complex tissue structure. We herein treat the tissue as a hierarchical composition of multiple types of histological entities from fine to coarse level, capturing multivariate tissue information at multiple levels. We propose a novel multi-level hierarchical entity-graph representation of tissue specimens to model hierarchical compositions that encode histological entities as well as their intra- and inter-entity level interactions. Subsequently, a graph neural network is proposed to operate on the hierarchical entity-graph representation to map the tissue structure to tissue functionality. Specifically, for input histology images we utilize well-defined cells and tissue regions to build HierArchical Cell-to-Tissue (HACT) graph representations, and devise HACT-Net, a graph neural network, to classify such HACT representations. As part of this work, we introduce the BReAst Carcinoma Subtyping (BRACS) dataset, a large cohort of H&E-stained breast tumor images, to evaluate our proposed methodology against pathologists and state-of-the-art approaches. Through comparative assessment and ablation studies, our method is demonstrated to yield superior classification results compared to alternative methods as well as pathologists.

Gigapixel Histopathological Image Analysis using Attention-based Neural Networks

Jan 30, 2021

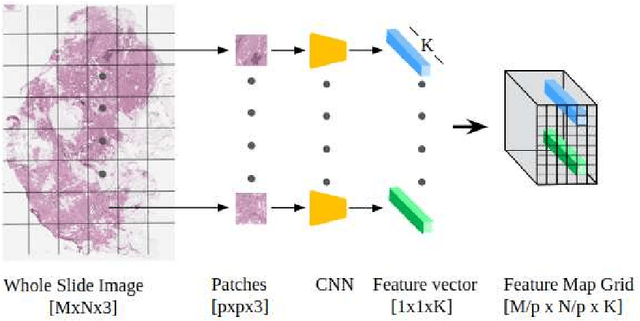

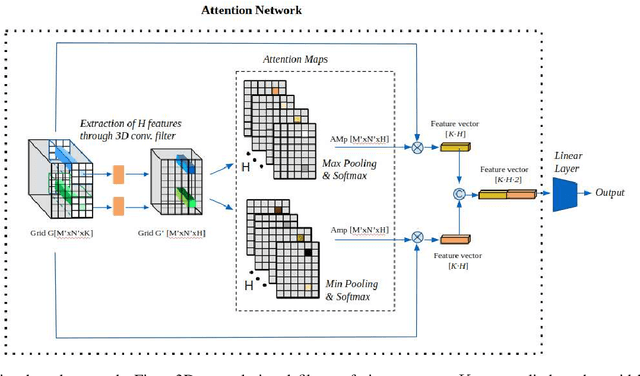

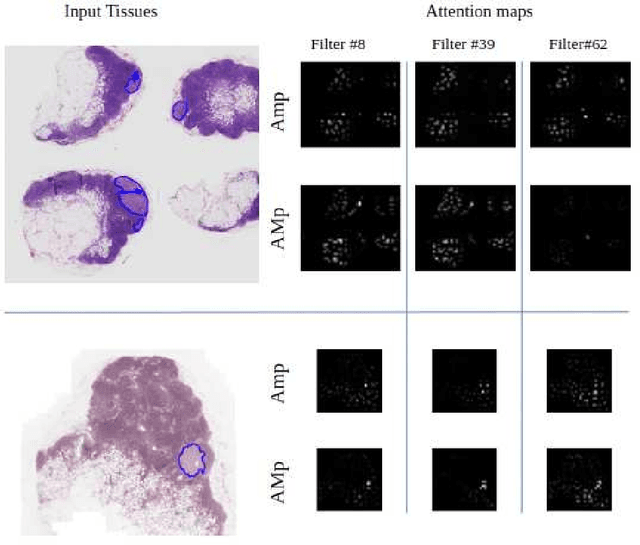

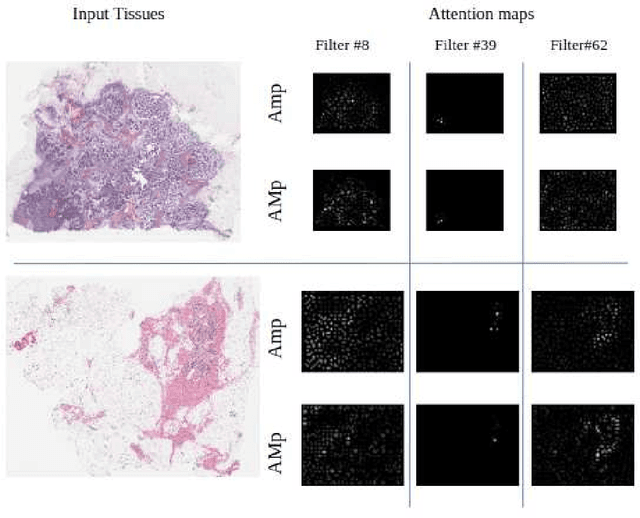

Abstract: Although CNNs are widely considered the state-of-the-art models in various applications of image analysis, one of the main open challenges is training a CNN on high-resolution images. Different strategies have been proposed, involving either rescaling the image or processing parts of the image individually. Such strategies cannot be applied to images, such as gigapixel histopathological images, for which a strong reduction in resolution inherently causes a loss of discriminative information, and for which the analysis of single parts of the image either suffers from a lack of global information or implies a high annotation workload to select the significant parts of the training images. We propose a method for the analysis of gigapixel histopathological images that uses only weak image-level labels. In particular, two analysis tasks are taken into account: a binary classification and a prediction of the tumor proliferation score. Our method is based on a CNN structure consisting of a compressing path and a learning path. In the compressing path, the gigapixel image is packed into a grid-based feature map by using a residual network devoted to the feature extraction of each patch into which the image has been divided. In the learning path, attention modules are applied to the grid-based feature map, taking into account spatial correlations of neighboring patch features to find regions of interest, which are then used for the final whole-slide analysis. Our method integrates both global and local information, is flexible with regard to the size of the input images, and requires only weak image-level labels. Comparisons with different state-of-the-art methods on two well-known datasets, Camelyon16 and TUPAC16, have been made to confirm the validity of the proposed model.
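The learning path described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the grid of patch features is assumed to be already computed (the residual network is omitted), the 4-neighbor averaging stands in for whatever spatial-correlation mechanism the paper uses, and the names `neighbor_mean`, `attention_pool`, and the scoring vector `weights` are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a flat list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def neighbor_mean(grid, r, c):
    """Average a patch feature with its valid 4-neighbors (assumed context step)."""
    h, w = len(grid), len(grid[0])
    cells = [(r, c), (r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]
    valid = [grid[i][j] for i, j in cells if 0 <= i < h and 0 <= j < w]
    dim = len(grid[r][c])
    return [sum(v[d] for v in valid) / len(valid) for d in range(dim)]


def attention_pool(grid, weights):
    """Pool an H x W grid of patch features into one slide-level vector.

    Each patch is scored from its neighborhood context with a hypothetical
    linear scoring vector; the scores are normalized into attention weights
    that are then used to average the original patch features.
    """
    h, w = len(grid), len(grid[0])
    coords = [(r, c) for r in range(h) for c in range(w)]
    scores = []
    for r, c in coords:
        context = neighbor_mean(grid, r, c)
        scores.append(sum(wt * x for wt, x in zip(weights, context)))
    attn = softmax(scores)
    dim = len(weights)
    pooled = [
        sum(a * grid[r][c][d] for a, (r, c) in zip(attn, coords))
        for d in range(dim)
    ]
    return pooled, attn
```

Because the pooled vector is a weighted average over however many patches the grid contains, the same function accepts slides of any size, which mirrors the flexibility with respect to input size claimed in the abstract.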

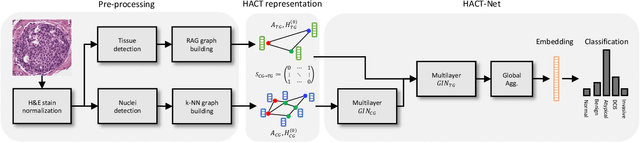

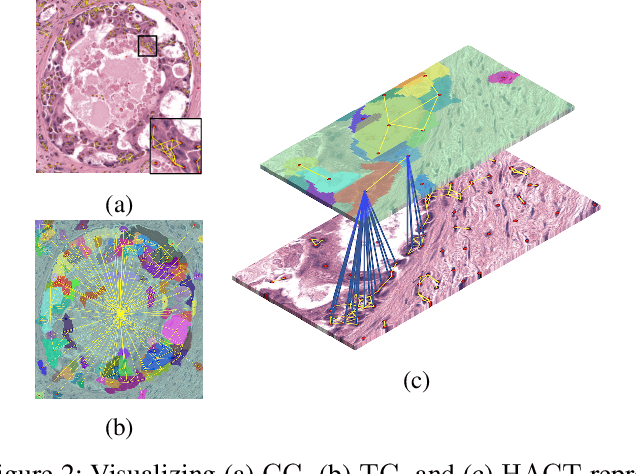

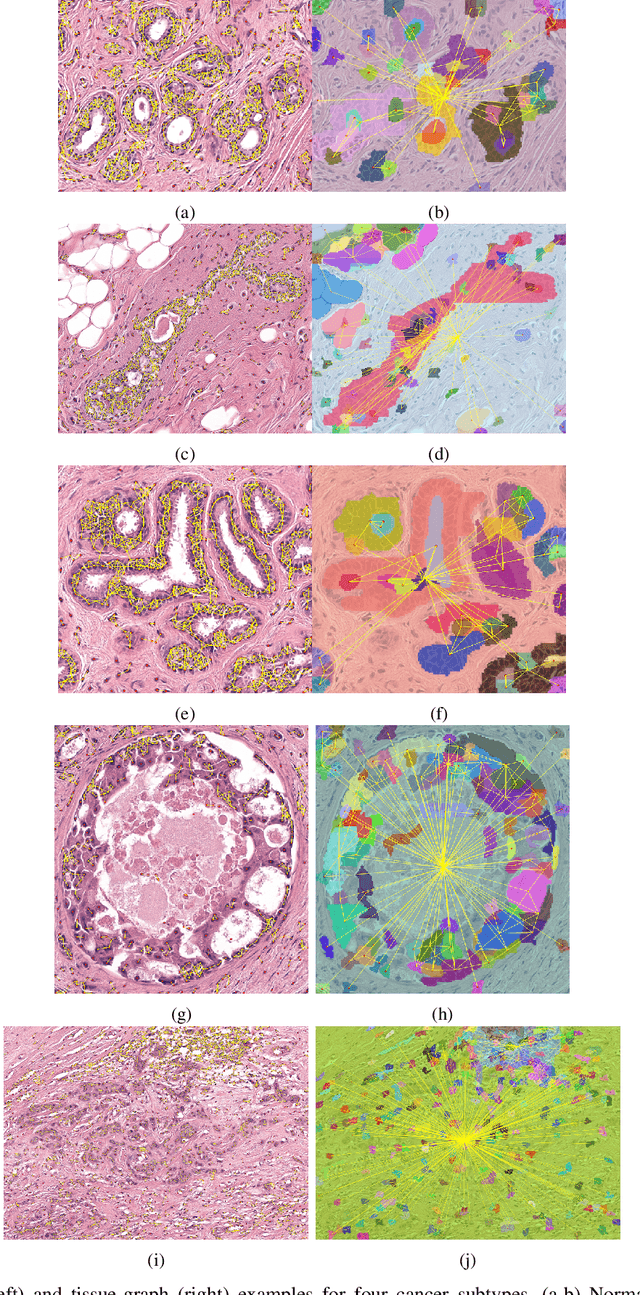

HACT-Net: A Hierarchical Cell-to-Tissue Graph Neural Network for Histopathological Image Classification

Jul 01, 2020

Abstract: Cancer diagnosis, prognosis, and therapeutic response prediction are heavily influenced by the relationship between the histopathological structures and the function of the tissue. Recent approaches acknowledging the structure-function relationship have linked the structural and spatial patterns of cell organization in tissue via cell-graphs to tumor grades. Though cell organization is imperative, it is insufficient to entirely represent the histopathological structure. We propose a novel hierarchical cell-to-tissue-graph (HACT) representation to improve the structural depiction of the tissue. It consists of a low-level cell-graph, capturing cell morphology and interactions; a high-level tissue-graph, capturing the morphology and spatial distribution of tissue parts; and cells-to-tissue hierarchies, encoding the relative spatial distribution of the cells with respect to the tissue distribution. Further, a hierarchical graph neural network (HACT-Net) is proposed to efficiently map the HACT representations to histopathological breast cancer subtypes. We assess the methodology on a large set of annotated tissue regions of interest from H&E-stained breast carcinoma whole-slides. Upon evaluation, the proposed method outperformed recent convolutional neural network and graph neural network approaches for breast cancer multi-class subtyping. The proposed entity-based topological analysis is more in line with the pathological diagnostic procedure of the tissue. It provides more control over the tissue modelling and therefore encourages the further inclusion of pathological priors into task-specific tissue representations.
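The three components of the HACT representation named in this abstract (cell-graph, tissue-graph, and cells-to-tissue hierarchy) can be sketched as a small data structure. This is only an illustration under stated assumptions: entities are reduced to 2D centroids, edges come from a k-nearest-neighbor rule, and each cell is assigned to the nearest tissue centroid. The abstract does not specify any of these construction rules, and the names `HACTGraph`, `knn_edges`, and `build_hact` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class HACTGraph:
    cell_edges: set        # undirected edges between cell indices
    tissue_edges: set      # undirected edges between tissue-region indices
    cell_to_tissue: dict   # cell index -> tissue-region index


def knn_edges(points, k):
    """Connect every point to its k nearest neighbors (assumed edge rule)."""
    edges = set()
    for i, p in enumerate(points):
        dists = sorted(
            (math.dist(p, q), j) for j, q in enumerate(points) if j != i
        )
        for _, j in dists[:k]:
            edges.add((min(i, j), max(i, j)))
    return edges


def build_hact(cell_centroids, tissue_centroids, k_cell=2, k_tissue=1):
    """Build the low-level graph, the high-level graph, and the hierarchy."""
    # Assumption: a cell belongs to the tissue region with the nearest centroid.
    cell_to_tissue = {
        i: min(
            range(len(tissue_centroids)),
            key=lambda t: math.dist(c, tissue_centroids[t]),
        )
        for i, c in enumerate(cell_centroids)
    }
    return HACTGraph(
        cell_edges=knn_edges(cell_centroids, k_cell),
        tissue_edges=knn_edges(tissue_centroids, k_tissue),
        cell_to_tissue=cell_to_tissue,
    )
```

A hierarchical graph neural network would then pass messages within `cell_edges`, aggregate cell features into tissue nodes through `cell_to_tissue`, and pass messages within `tissue_edges`; that learning step is outside the scope of this sketch.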

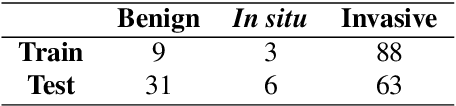

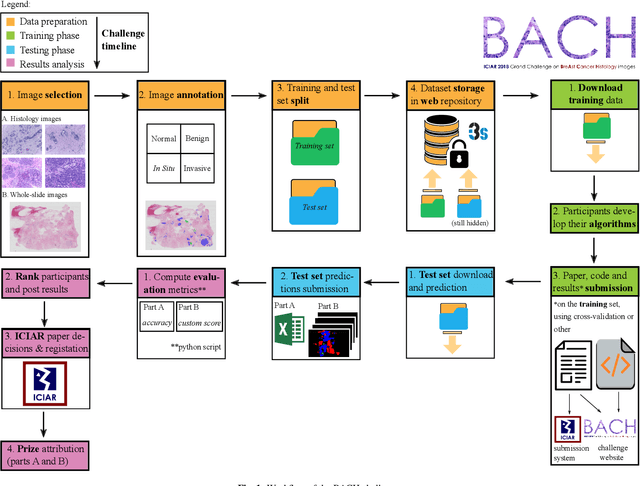

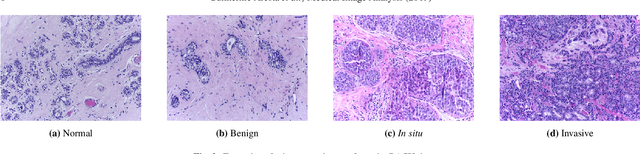

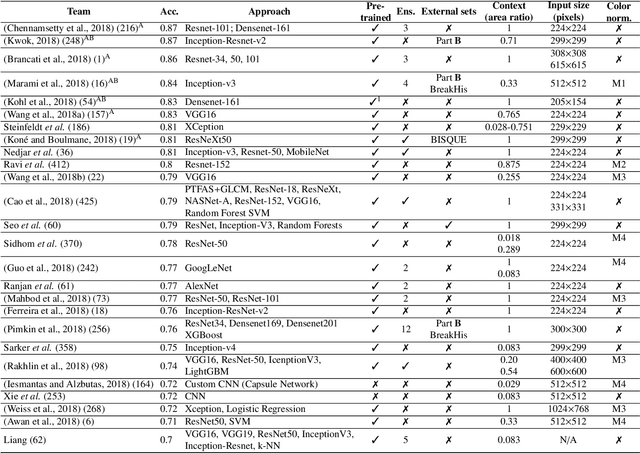

BACH: Grand Challenge on Breast Cancer Histology Images

Aug 13, 2018

Abstract: Breast cancer is the most common invasive cancer in women, affecting more than 10% of women worldwide. Microscopic analysis of a biopsy remains one of the most important methods to diagnose the type of breast cancer. This requires specialized analysis by pathologists, in a task that i) is highly time- and cost-consuming and ii) often leads to non-consensual results. The relevance and potential of automatic classification algorithms using hematoxylin-eosin stained histopathological images have already been demonstrated, but the reported results are still sub-optimal for clinical use. With the goal of advancing the state of the art in automatic classification, the Grand Challenge on BreAst Cancer Histology images (BACH) was organized in conjunction with the 15th International Conference on Image Analysis and Recognition (ICIAR 2018). A large annotated dataset, composed of both microscopy and whole-slide images, was specifically compiled and made publicly available for the BACH challenge. Following a positive response from the scientific community, a total of 64 submissions, out of 677 registrations, effectively entered the competition. The submitted algorithms pushed forward the state of the art in terms of accuracy (87%) in automatic classification of breast cancer with histopathological images. Convolutional neural networks were the most successful methodology in the BACH challenge. Detailed analysis of the collective results allowed the identification of remaining challenges in the field and recommendations for future developments. The BACH dataset remains publicly available so as to promote further improvements in the field of automatic classification in digital pathology.
