Marc Reichenbach

Evaluation Pipeline for systematically searching for Anomaly Detection Systems

Jun 18, 2025

Abstract:Digitalization in the medical world provides major benefits while making it a target for attackers and thus hard to secure. To deal with network intruders we propose an anomaly detection system on hardware to detect malicious clients in real-time. We meet real-time and power restrictions using FPGAs. Overall system performance is achieved via the presented holistic system evaluation.

The Polynomial Connection between Morphological Dilation and Discrete Convolution

May 04, 2023

Abstract:In this paper we consider the fundamental operations of mathematical morphology, dilation and erosion. Many powerful image filtering operations are based on their combinations. We establish a homomorphism between the max-plus semiring of integers and a subset of the polynomials over the field of real numbers. This makes it possible to reformulate the task of computing morphological dilation as that of computing sums and products of polynomials. Therefore, dilation and its dual operation, erosion, can be computed by convolution of discrete linear signals, which is efficiently accomplished using a Fast Fourier Transform technique. The novel method can deal with non-flat filters and places no restrictions on the shape or size of the structuring element, unlike many other fast methods in the field. In contrast to previous fast Fourier techniques, it gives exact results and is not an approximation. In practice, the new method is particularly suitable for filtering images with a small tonal range or when employing large filter sizes. We explore the benefits by investigating an implementation on FPGA hardware. Several experiments demonstrate the exactness and efficiency of the proposed method.
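The idea in this abstract can be illustrated with a small 1D sketch. It assumes non-negative integer samples and encodes each value `a` as the monomial `x^a`, so that adding values becomes multiplying monomials and taking a maximum becomes reading off the degree of a polynomial sum. The function names, the one-hot coefficient layout, and the use of NumPy's FFT are illustrative assumptions, not the authors' implementation.

```python
import numpy as np

def dilate_direct(f, g):
    """Reference dilation: out[t] = max over i + s == t of f[i] + g[s]."""
    n, m = len(f), len(g)
    out = np.full(n + m - 1, -1, dtype=int)
    for i in range(n):
        for s in range(m):
            out[i + s] = max(out[i + s], f[i] + g[s])
    return out

def dilate_polynomial(f, g):
    """Dilation via polynomial products (assumes non-negative integers).

    Row i of F holds the coefficients of the monomial x^f[i]. A 2D
    convolution of F and G multiplies the monomials and sums them per
    output position; the degree of each resulting polynomial is the max.
    """
    f = np.asarray(f)
    g = np.asarray(g)
    n, m = len(f), len(g)
    kf, kg = int(f.max()) + 1, int(g.max()) + 1
    F = np.zeros((n, kf))
    F[np.arange(n), f] = 1.0
    G = np.zeros((m, kg))
    G[np.arange(m), g] = 1.0
    shape = (n + m - 1, kf + kg - 1)
    # Linear convolution through zero-padded FFTs.
    H = np.fft.irfft2(np.fft.rfft2(F, s=shape) * np.fft.rfft2(G, s=shape), s=shape)
    # Coefficients are small integer counts, so rounding recovers them exactly.
    counts = np.rint(H).astype(int)
    # Degree = highest exponent with a non-zero coefficient in each row.
    first_nonzero_from_top = np.argmax(counts[:, ::-1] > 0, axis=1)
    return shape[1] - 1 - first_nonzero_from_top

print(dilate_polynomial([1, 3, 2, 0], [0, 2, 1]).tolist())  # [1, 3, 5, 4, 3, 1]
```

The coefficient arrays grow with the value range, which matches the abstract's remark that the approach suits images with a small tonal range.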

Estimation of Non-Functional Properties for Embedded Hardware with Application to Image Processing

Mar 03, 2022

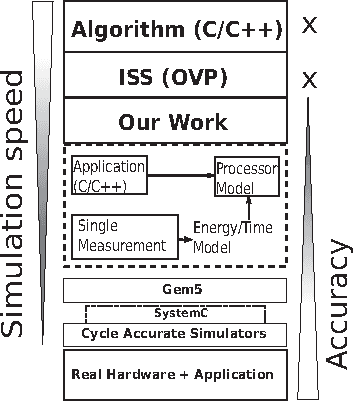

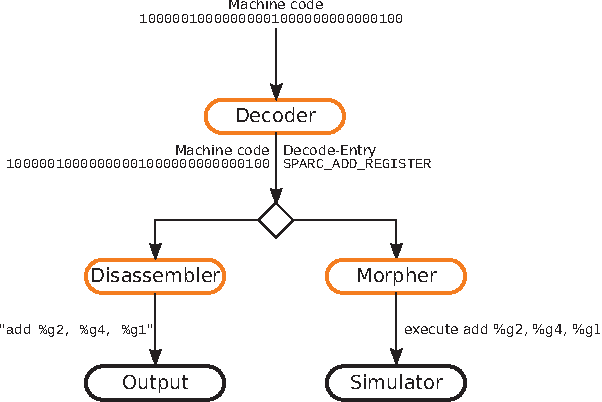

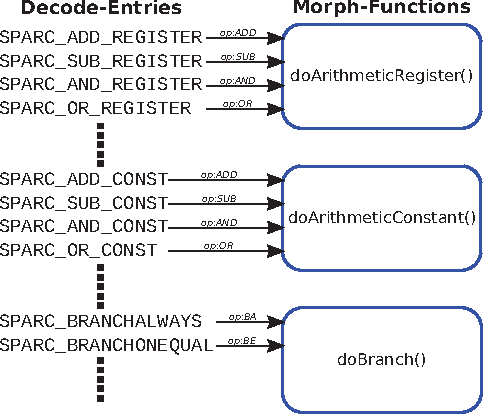

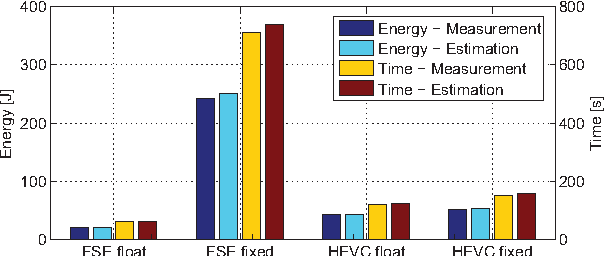

Abstract:In recent years, due to a higher demand for portable devices, which provide restricted amounts of processing capacity and battery power, the need for energy and time efficient hard- and software solutions has increased. Preliminary estimations of time and energy consumption can thus be valuable to improve implementations and design decisions. To this end, this paper presents a method to estimate the time and energy consumption of a given software solution, without having to rely on the use of a traditional Cycle Accurate Simulator (CAS). Instead, we propose to utilize a combination of high-level functional simulation with a mechanistic extension to include non-functional properties: Instruction counts from virtual execution are multiplied with corresponding specific energies and times. By evaluating two common image processing algorithms on an FPGA-based CPU, where a mean relative estimation error of 3% is achieved for cacheless systems, we show that this estimation tool can be a valuable aid in the development of embedded processor architectures. The tool allows the developer to reach well-suited design decisions regarding the optimal processor hardware configuration for a given algorithm at an early stage in the design process.
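The mechanistic step described above, multiplying instruction counts by specific energies and times, can be sketched as follows. The instruction classes and all cost values are hypothetical placeholders chosen for easy arithmetic; they are not measurements from the paper.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class InstructionCost:
    energy_nj: float  # specific energy per executed instruction (nanojoules)
    time_ns: float    # specific time per executed instruction (nanoseconds)

def estimate(counts, costs):
    """Return (energy_nj, time_ns) as the count-weighted sum of specific costs."""
    energy_nj = sum(n * costs[kind].energy_nj for kind, n in counts.items())
    time_ns = sum(n * costs[kind].time_ns for kind, n in counts.items())
    return energy_nj, time_ns

# Hypothetical per-class costs (placeholders, not measured values).
COSTS = {
    "alu": InstructionCost(energy_nj=1.0, time_ns=10.0),
    "load": InstructionCost(energy_nj=2.5, time_ns=20.0),
    "branch": InstructionCost(energy_nj=1.5, time_ns=15.0),
}

# Instruction counts as a functional simulator might report them (made up).
COUNTS = {"alu": 1000, "load": 400, "branch": 100}

print(estimate(COUNTS, COSTS))  # (2150.0, 19500.0)
```

In such a scheme the specific costs would be calibrated once per processor configuration, after which any program's counts could be evaluated without cycle-accurate simulation.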

Modeling the Energy Consumption of the HEVC Decoding Process

Mar 01, 2022

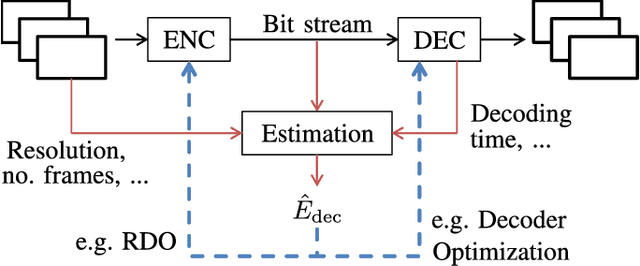

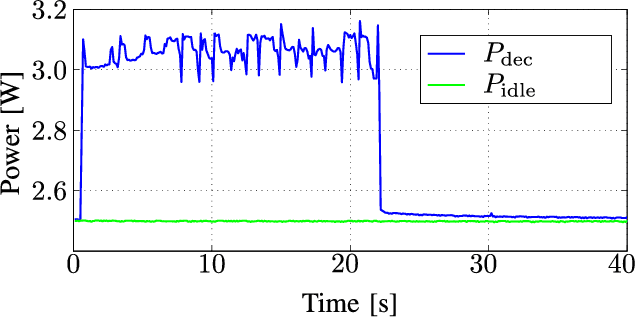

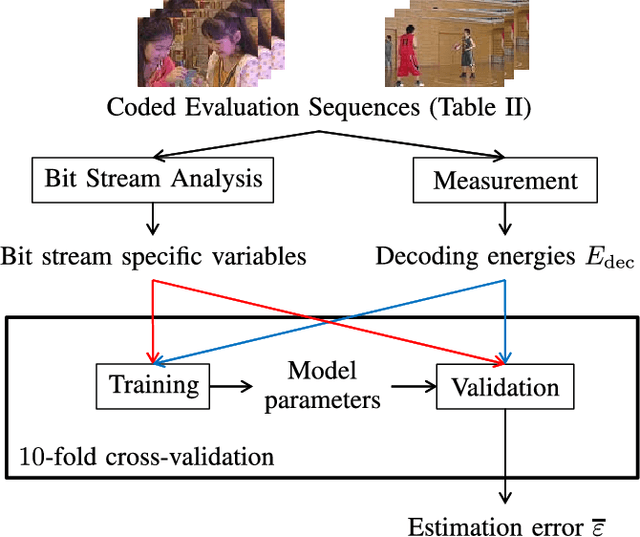

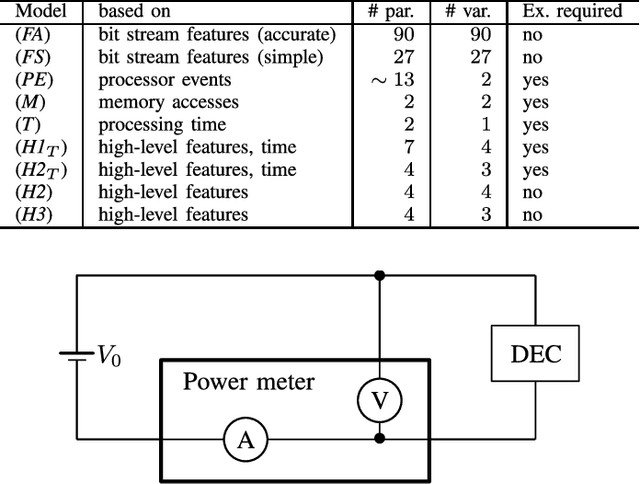

Abstract:In this paper, we present a bit stream feature based energy model that accurately estimates the energy required to decode a given HEVC-coded bit stream. To this end, we take a model from the literature and extend it by explicitly modeling the in-loop filters, which had not been done before. Furthermore, to demonstrate its superior estimation performance, we compare it to seven different energy models from the literature. By using a unified evaluation framework, we show how accurately the required decoding energy for different decoding systems can be approximated. We give thorough explanations of the model parameters and explain how the model variables are derived. To show the modeling capabilities in general, we test the estimation performance for different decoding software and hardware solutions, where we find that the proposed model outperforms the models from the literature by reaching frame-wise mean estimation errors of less than 7% for software and less than 15% for hardware based systems.

* 13 pages, 4 figures

Transparent FPGA Acceleration with TensorFlow

Feb 02, 2021

Abstract:Today, artificial neural networks are one of the major innovators pushing the progress of machine learning. This has particularly affected the development of neural network accelerating hardware. However, since most of these architectures require specialized toolchains, developers face additional effort each time they want to make use of a new deep learning accelerator. Furthermore, the flexibility of the device is bound to the architecture itself, as well as to the functionality of the runtime environment. In this paper we propose a toolflow using TensorFlow as frontend, thus offering developers the opportunity of using a familiar environment. On the backend we use an FPGA, which is addressable via an HSA runtime environment. In this way we are able to hide the complexity of controlling new hardware from the user, while at the same time maintaining a high degree of flexibility. This can be achieved by our HSA toolflow, since the hardware is not statically configured with the structure of the network. Instead, it can be dynamically reconfigured during runtime with the respective kernels executed by the network and, simultaneously, with kernels from other sources, e.g. OpenCL/OpenMP.

A Holistic Approach for Modeling and Synthesis of Image Processing Applications for Heterogeneous Computing Architectures

Feb 26, 2015

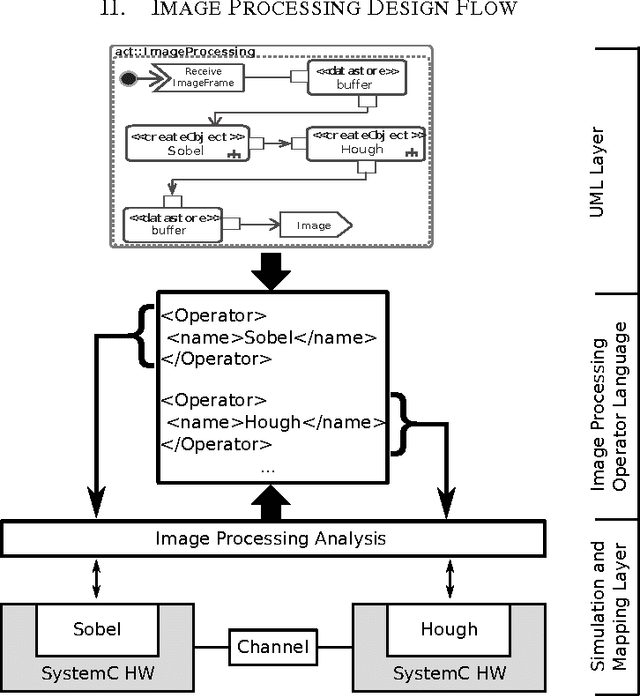

Abstract:Image processing applications are common in every field of our daily life. However, most of them are very complex and contain several tasks with different complexities, which result in varying requirements for computing architectures. Nevertheless, every image processing application follows a similar general processing scheme, called the image processing pipeline: (1) capturing an image, (2) pre-processing using local operators, (3) processing with global operators and (4) post-processing using complex operations. Therefore, application-specialized hardware solutions based on heterogeneous architectures are used for image processing. Unfortunately, the development of applications for heterogeneous hardware architectures is challenging due to the distribution of computational tasks among processors and programmable logic units. Nowadays, image processing systems are built from scratch, which is time-consuming, error-prone and inflexible. A new methodology for modeling and implementation is needed in order to reduce the development time of heterogeneous image processing systems. This paper introduces a new holistic top-down approach for image processing systems. Two challenges have to be investigated. First, designers ought to be able to model their complete image processing pipeline on an abstract layer using UML. Second, we want to close the gap between the abstract system and the system architecture.
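The four pipeline stages named in the abstract can be sketched in software as a chain of functions. The concrete operators chosen here (a synthetic image, a 3x3 mean filter, a global mean threshold, a bounding box) and all function names are illustrative assumptions, not the operators or the UML-based modeling used in the paper.

```python
import numpy as np

def capture(height=8, width=8, seed=0):
    """Stage 1: stand-in for image capture, returns a synthetic grey image."""
    rng = np.random.default_rng(seed)
    return rng.integers(0, 256, size=(height, width)).astype(np.float64)

def preprocess_local(img):
    """Stage 2: local operator, a 3x3 mean filter with replicated edges."""
    h, w = img.shape
    padded = np.pad(img, 1, mode="edge")
    out = np.zeros_like(img)
    for dy in range(3):
        for dx in range(3):
            out += padded[dy:dy + h, dx:dx + w]
    return out / 9.0

def process_global(img):
    """Stage 3: global operator, threshold against the mean of the whole image."""
    return img > img.mean()

def postprocess(mask):
    """Stage 4: stand-in for complex operations, bounding box of the foreground."""
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        return None
    return int(ys.min()), int(xs.min()), int(ys.max()), int(xs.max())

def run_pipeline():
    """Chain the four stages in order."""
    return postprocess(process_global(preprocess_local(capture())))

print(run_pipeline())
```

The local stage touches only a small neighbourhood per pixel, while the global stage needs the whole image before it can produce any output, which is one reason the stages map differently onto processors and programmable logic.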

Automatic Optimization of Hardware Accelerators for Image Processing

Feb 26, 2015

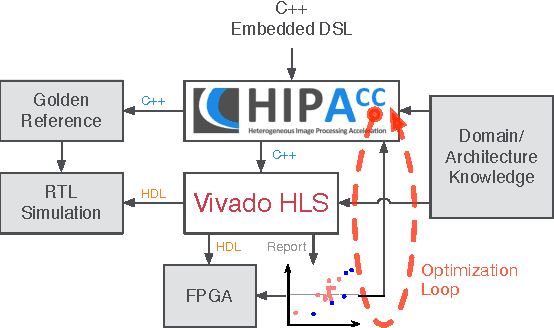

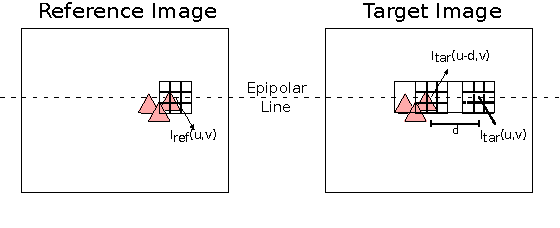

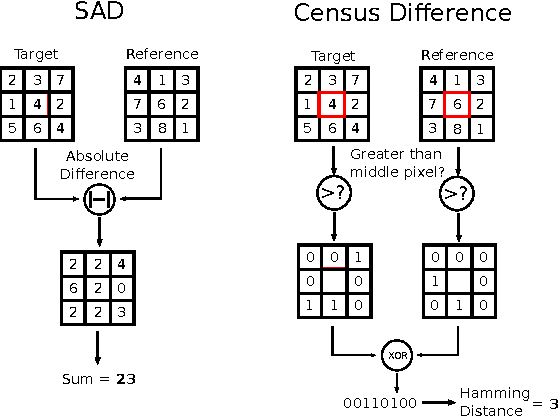

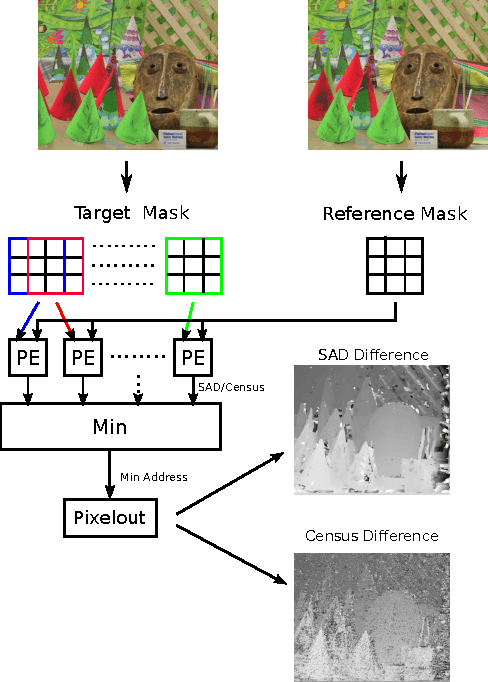

Abstract:In the domain of image processing, real-time constraints often apply. In particular, in safety-critical applications, such as X-ray computed tomography in medical imaging or advanced driver assistance systems in the automotive domain, timing is of utmost importance. A common approach to maintain real-time capabilities of compute-intensive applications is to offload those computations to dedicated accelerator hardware, such as Field Programmable Gate Arrays (FPGAs). Programming such architectures is a challenging task with respect to the typical FPGA-specific design criteria: achievable overall algorithm latency and resource usage of FPGA primitives (BRAM, FF, LUT, and DSP). High-Level Synthesis (HLS) dramatically simplifies this task by enabling algorithms to be described in well-known higher-level languages (C/C++) and synthesized automatically by HLS tools. However, algorithm developers still need expert knowledge about the target architecture in order to achieve satisfying results. Therefore, in previous work, we have shown that elevating the description of image algorithms to an even higher abstraction level, by using a Domain-Specific Language (DSL), can significantly cut down the complexity of designing such algorithms for FPGAs. To give the developer even more control over the common trade-off between latency and resource usage, we present an automatic optimization process where these criteria are analyzed and fed back to the DSL compiler, in order to generate code that is closer to the desired design specifications. Finally, we generate code for stereo block matching algorithms and compare it with handwritten implementations to quantify the quality of our results.
