Marc D. Killpack

Scaling Fabric-Based Piezoresistive Sensor Arrays for Whole-Body Tactile Sensing

Aug 28, 2025

Abstract:Scaling tactile sensing for robust whole-body manipulation is a significant challenge, often limited by wiring complexity, data throughput, and system reliability. This paper presents a complete architecture designed to overcome these barriers. Our approach pairs open-source, fabric-based sensors with custom readout electronics that reduce signal crosstalk to less than 3.3% through hardware-based mitigation. Critically, we introduce a novel, daisy-chained SPI bus topology that avoids the practical limitations of common wireless protocols and the prohibitive wiring complexity of USB hub-based systems. This architecture streams synchronized data from over 8,000 taxels across 1 square meter of sensing area at update rates exceeding 50 FPS, confirming its suitability for real-time control. We validate the system's efficacy in a whole-body grasping task where, without feedback, the robot's open-loop trajectory results in an uncontrolled application of force that slowly crushes a deformable cardboard box. With real-time tactile feedback, the robot transforms this motion into a gentle, stable grasp, successfully manipulating the object without causing structural damage. This work provides a robust and well-characterized platform to enable future research in advanced whole-body control and physical human-robot interaction.
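
To give a feel for the data-throughput figures quoted in this abstract, the following back-of-envelope sketch multiplies the stated lower bounds (8,000 taxels, 50 FPS). The 16-bit sample width is an assumption made only for illustration; the abstract does not state the sample resolution or any protocol overhead.

```python
# Back-of-envelope payload estimate from the figures quoted in the abstract.
TAXELS = 8000          # "over 8,000 taxels" (lower bound from the abstract)
FRAME_RATE_HZ = 50     # "exceeding 50 FPS" (lower bound from the abstract)
BYTES_PER_SAMPLE = 2   # ASSUMPTION: 16-bit readings; not stated in the abstract


def payload_rate(taxels, frame_rate_hz, bytes_per_sample):
    """Return (samples per second, payload bits per second), ignoring bus overhead."""
    samples_per_s = taxels * frame_rate_hz
    bits_per_s = samples_per_s * bytes_per_sample * 8
    return samples_per_s, bits_per_s


samples_per_s, bits_per_s = payload_rate(TAXELS, FRAME_RATE_HZ, BYTES_PER_SAMPLE)
print(f"{samples_per_s} samples/s, {bits_per_s / 1e6:.1f} Mbit/s payload")
```

Under these assumptions the raw payload is 400,000 samples per second, or 6.4 Mbit/s before any framing or synchronization overhead.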

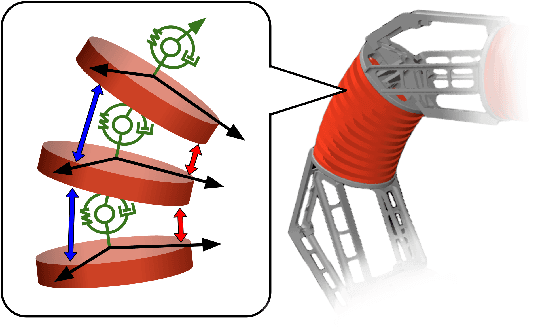

PneuDrive: An Embedded Pressure Control System and Modeling Toolkit for Large-Scale Soft Robots

Mar 31, 2025

Abstract:In this paper, we present a modular pressure control system called PneuDrive that can be used for large-scale, pneumatically-actuated soft robots. The design is particularly suited for situations which require distributed pressure control and high flow rates. Up to four embedded pressure control modules can be daisy-chained together as peripherals on a robust RS-485 bus, enabling closed-loop control of up to 16 valves with pressures ranging from 0-100 psig (0-689 kPa) over distances of more than 10 meters. The system is configured as a C++ ROS node by default. However, independent of ROS, we provide a Python interface with a scripting API for added flexibility. We demonstrate our implementation of PneuDrive through various trajectory tracking experiments for a three-joint, continuum soft robot with 12 different pressure inputs. Finally, we present a modeling toolkit with implementations of three dynamic actuation models, all suitable for real-time simulation and control. We demonstrate the use of this toolkit in customizing each model with real-world data and evaluating the performance of each model. The results serve as a reference guide for choosing between several actuation models in a principled manner. A video summarizing our results can be found here: https://bit.ly/3QkrEqO.

* Proceedings of the 2024 IEEE 7th International Conference on Soft Robotics (RoboSoft)

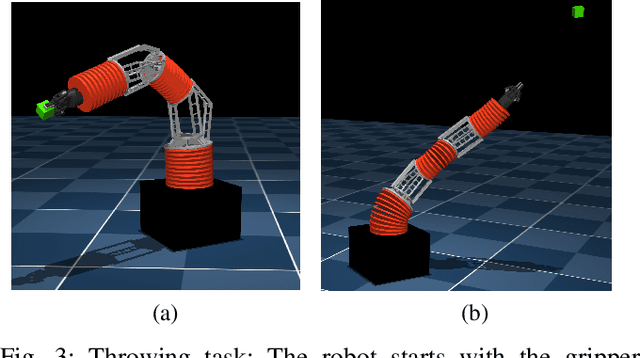

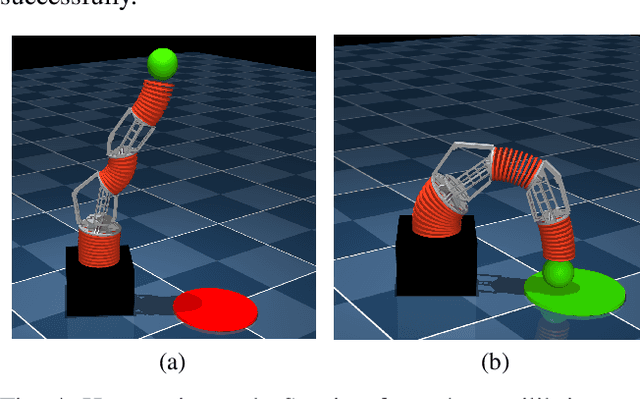

Learning Dynamic Tasks on a Large-scale Soft Robot in a Handful of Trials

Nov 13, 2024

Abstract:Soft robots offer more flexibility, compliance, and adaptability than traditional rigid robots. They are also typically lighter and cheaper to manufacture. However, their use in real-world applications is limited due to modeling challenges and difficulties in integrating effective proprioceptive sensors. Large-scale soft robots ($\approx$ two meters in length) have greater modeling complexity due to increased inertia and related effects of gravity. Common efforts to ease these modeling difficulties such as assuming simple kinematic and dynamics models also limit the general capabilities of soft robots and are not applicable in tasks requiring fast, dynamic motion like throwing and hammering. To overcome these challenges, we propose a data-efficient Bayesian optimization-based approach for learning control policies for dynamic tasks on a large-scale soft robot. Our approach optimizes the task objective function directly from commanded pressures, without requiring approximate kinematics or dynamics as an intermediate step. We demonstrate the effectiveness of our approach through both simulated and real-world experiments.

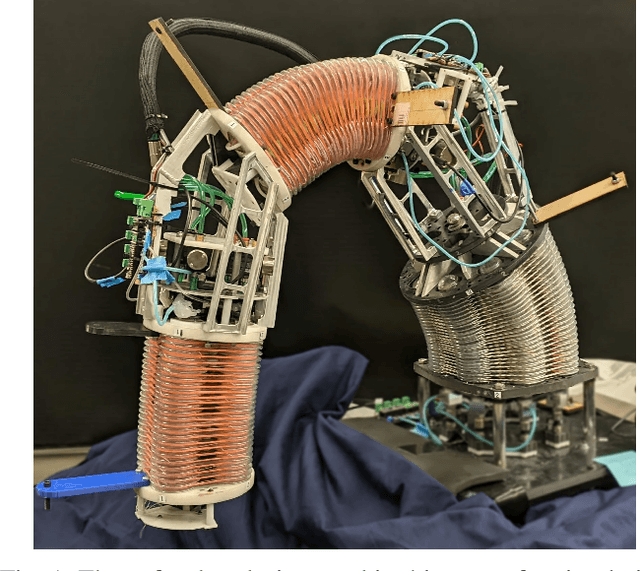

Baloo: A Large-Scale Hybrid Soft Robotic Torso for Whole-Arm Manipulation

Sep 12, 2024

Abstract:Soft robotic actuators and their inherent compliance can simplify the design of controllers when operating in contact-rich environments. With such structures we can accomplish high-impact, dynamic, and contact-rich tasks that would be difficult using conventional rigid robots which might either break the robot or the object without careful modeling and design of high bandwidth controllers. In order to explore the benefits of structural passive compliance and exploit them effectively, we present a prototype robotic torso named Baloo, designed with a hybrid rigid-soft methodology, incorporating both adaptability from soft components and strength from rigid components. Baloo consists of two meter-long, pneumatically-driven soft robot arms mounted on a rigid torso and driven vertically by a linear actuator. We explore some challenges inherent in controlling this type of robot and build on previous work with rigid robots to develop a joint-level neural-network adaptive controller to enable high performance tracking of highly nonlinear, time-varying soft robot dynamics. We also demonstrate a promising use case for the platform with several hardware experiments performing whole-body manipulation with large, heavy, and unwieldy objects. A video of our results can be viewed at https://youtu.be/eTUvBEVGKXY.

A Decomposition of Interaction Force for Multi-Agent Co-Manipulation

Aug 02, 2024

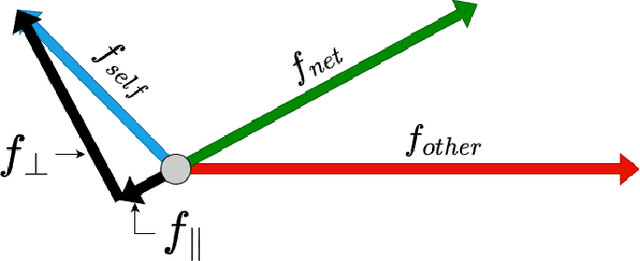

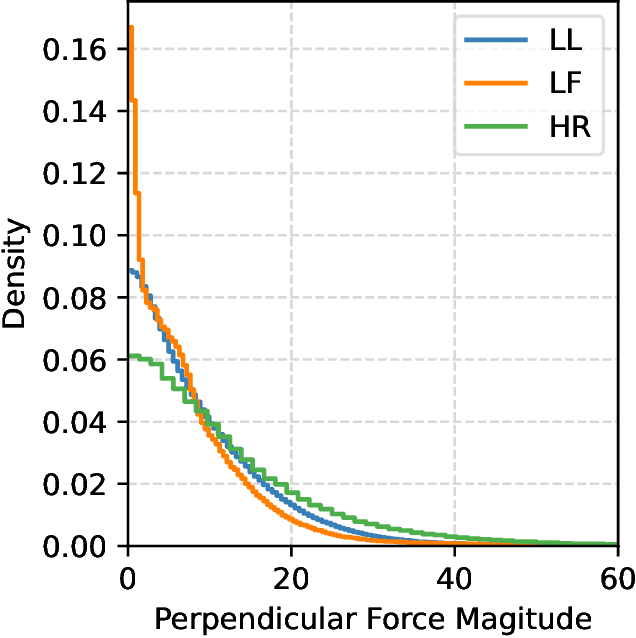

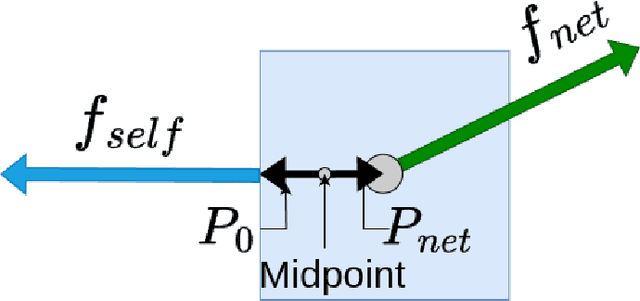

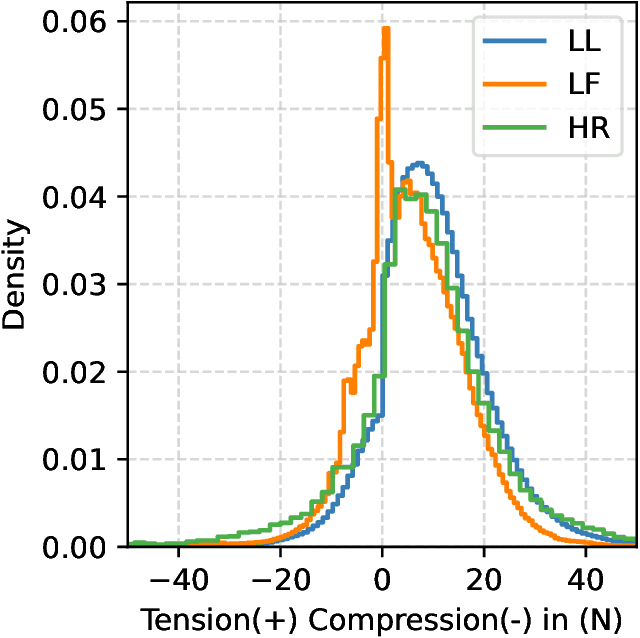

Abstract:Multi-agent human-robot co-manipulation is a poorly understood process with many inputs that potentially affect agent behavior. This paper explores one such input known as interaction force. Interaction force is potentially a primary component in communication that occurs during co-manipulation. There are, however, many different perspectives and definitions of interaction force in the literature. Therefore, a decomposition of interaction force is proposed that provides a consistent way of ascertaining the state of an agent relative to the group for multi-agent co-manipulation. This proposed method extends a current definition from one to four degrees of freedom, does not rely on a predefined object path, and is independent of the number of agents acting on the system and their locations and input wrenches (forces and torques). In addition, all of the necessary measures can be obtained by a self-contained robotic system, allowing for a more flexible and adaptive approach for future co-manipulation robot controllers.

A Versatile Multi-Robot Monte Carlo Tree Search Planner for On-Line Coverage Path Planning

Feb 11, 2020

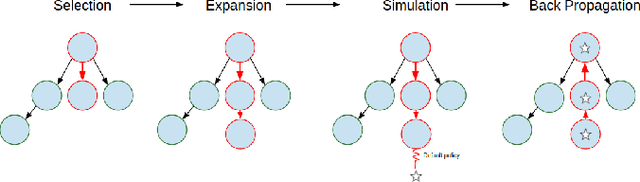

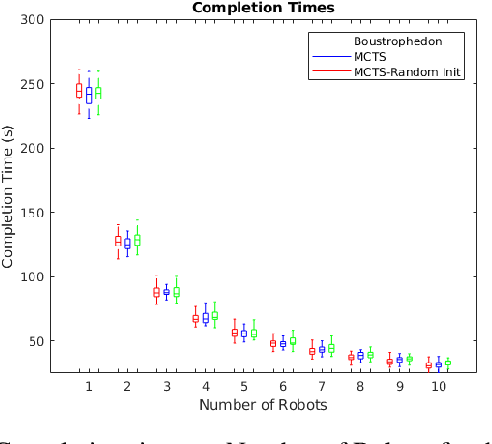

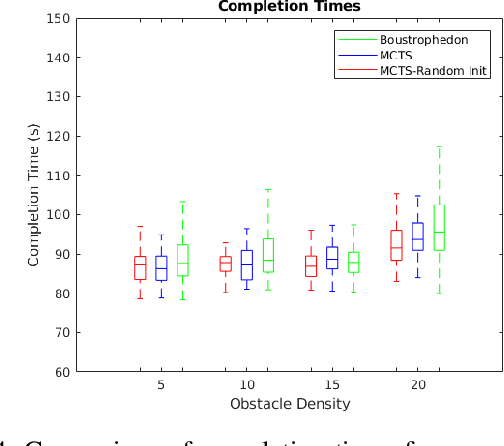

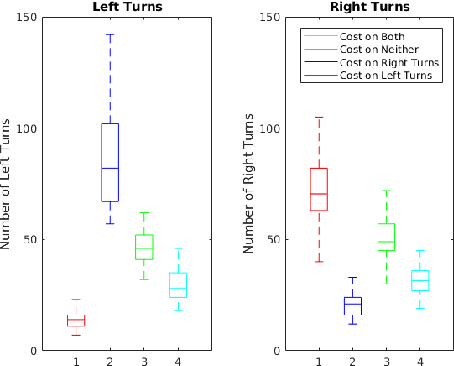

Abstract:Mobile robots hold great promise in reducing the need for humans to perform jobs such as vacuuming, seeding, harvesting, painting, search and rescue, and inspection. In practice, these tasks must often be done without an exact map of the area and could be completed more quickly through the use of multiple robots working together. The task of simultaneously covering and mapping an area with multiple robots is known as multi-robot on-line coverage and is a growing area of research. Many multi-robot on-line coverage path planning algorithms have been developed as extensions of well-established off-line coverage algorithms. In this work we introduce a novel approach to multi-robot on-line coverage path planning based on a method borrowed from game theory and machine learning: Monte Carlo Tree Search. We implement a Monte Carlo Tree Search planner and compare completion times against a Boustrophedon-based on-line multi-robot planner. The MCTS planner is shown to perform on par with the conventional Boustrophedon algorithm in simulations varying the number of robots and the density of obstacles in the map. The versatility of the MCTS planner is demonstrated by incorporating secondary objectives such as turn minimization while performing the same coverage task. The versatility of the MCTS planner suggests it is well suited to many multi-objective tasks that arise in mobile robotics.
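
For readers unfamiliar with the Boustrophedon baseline named in this abstract, the sketch below shows the basic back-and-forth sweep for a single robot on an obstacle-free grid. It is a deliberately simplified illustration: the grid representation and the function name `boustrophedon_path` are assumptions, and it is neither the multi-robot on-line planner used for comparison in the paper nor the MCTS planner itself.

```python
def boustrophedon_path(rows, cols):
    """Return grid cells in back-and-forth (lawnmower) order for an obstacle-free grid."""
    path = []
    for r in range(rows):
        # Sweep left-to-right on even rows and right-to-left on odd rows,
        # so each row starts directly below where the previous one ended.
        col_order = range(cols) if r % 2 == 0 else range(cols - 1, -1, -1)
        path.extend((r, c) for c in col_order)
    return path


path = boustrophedon_path(3, 4)
print(path)
```

Every cell is visited exactly once and consecutive cells are always adjacent, which is why this pattern is a common reference point for coverage completion time.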

Parameterized and GPU-Parallelized Real-Time Model Predictive Control for High Degree of Freedom Robots

Jan 14, 2020

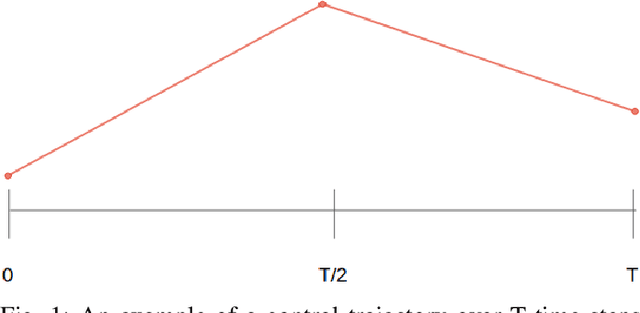

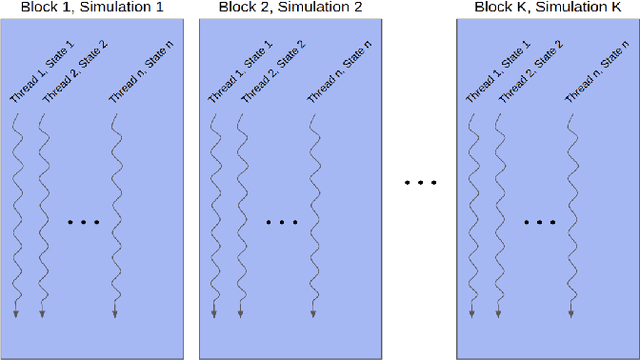

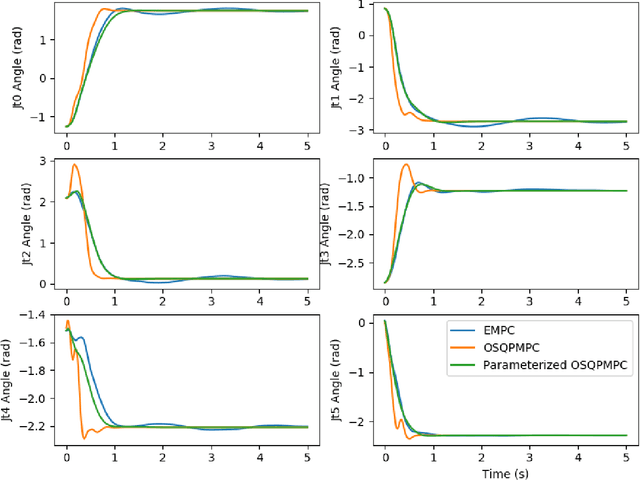

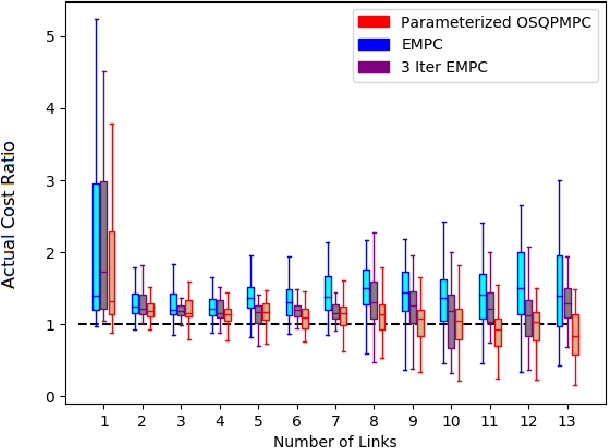

Abstract:This work presents and evaluates a novel input parameterization method which improves the tractability of model predictive control (MPC) for high degree of freedom (DoF) robots. Experimental results demonstrate that by parameterizing the input trajectory, more than three quarters of the optimization variables used in traditional MPC can be eliminated with practically no effect on system performance. This parameterization also leads to trajectories which are more conservative, producing less overshoot in underdamped systems with modeling error. In this paper we present two MPC solution methods that make use of this parameterization. The first uses a convex solver, and the second makes use of parallel computing on a graphics processing unit (GPU). We show that both approaches drastically reduce solve times for large DoF, long horizon MPC problems, allowing solutions at real-time rates. Through simulation and hardware experiments, we show that the parameterized convex solver MPC has faster solve times than traditional MPC for high DoF cases while still achieving similar performance. For the GPU-based MPC solution method, we use an evolutionary algorithm that we call Evolutionary MPC (EMPC). EMPC is shown to have even faster solve times for high DoF systems. Solve times for EMPC are shown to decrease even further through the use of a more powerful GPU. This suggests that parallelized MPC methods will become even more advantageous with the improvement and prevalence of GPU technology.
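
The variable-count reduction described in this abstract can be illustrated with a small sketch. The abstract does not say how the input trajectory is parameterized, so the version below assumes one common choice: a few knot values per input, linearly interpolated across the horizon. The horizon length, knot count, DoF count, and the helper name `expand_knots` are all hypothetical values picked for illustration.

```python
def expand_knots(knots, horizon):
    """Linearly interpolate a few knot values into one input per horizon step."""
    if len(knots) < 2 or horizon < 2:
        raise ValueError("need at least two knots and two horizon steps")
    inputs = []
    for k in range(horizon):
        # Map step k onto the knot index axis [0, len(knots) - 1].
        pos = k * (len(knots) - 1) / (horizon - 1)
        i = min(int(pos), len(knots) - 2)
        frac = pos - i
        inputs.append((1 - frac) * knots[i] + frac * knots[i + 1])
    return inputs


HORIZON = 20   # ASSUMPTION: illustrative horizon length
N_KNOTS = 4    # ASSUMPTION: illustrative number of knots per input
N_DOF = 7      # ASSUMPTION: illustrative number of actuated joints

full_vars = HORIZON * N_DOF     # one variable per input per time step
param_vars = N_KNOTS * N_DOF    # one variable per input per knot
reduction = 1 - param_vars / full_vars

u = expand_knots([0.0, 1.0, 1.0, 0.0], HORIZON)
print(len(u), f"{reduction:.0%} fewer optimization variables")
```

With these illustrative numbers the optimizer searches over 28 variables instead of 140, while the plant still receives a full 20-step input sequence per joint.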

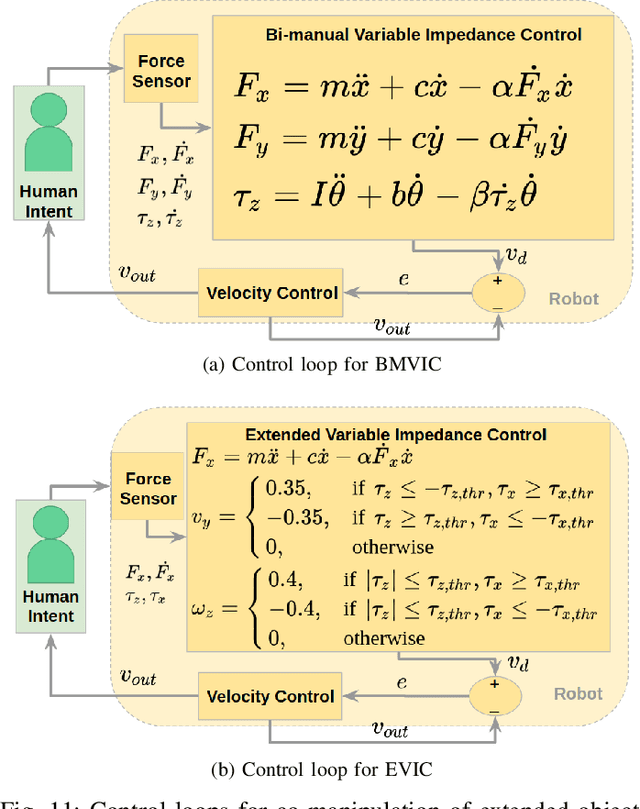

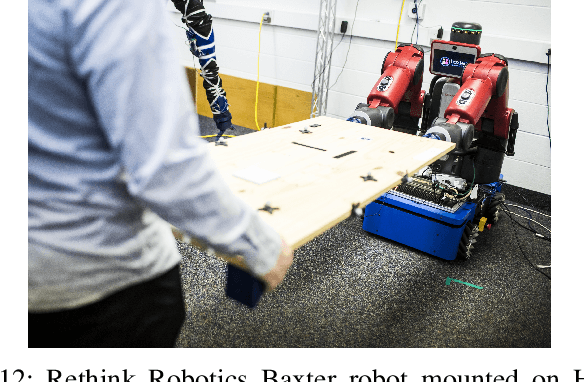

Human-robot co-manipulation of extended objects: Data-driven models and control from analysis of human-human dyads

Jan 03, 2020

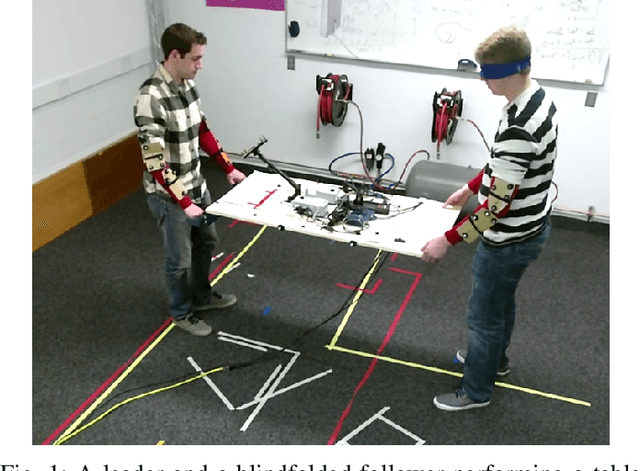

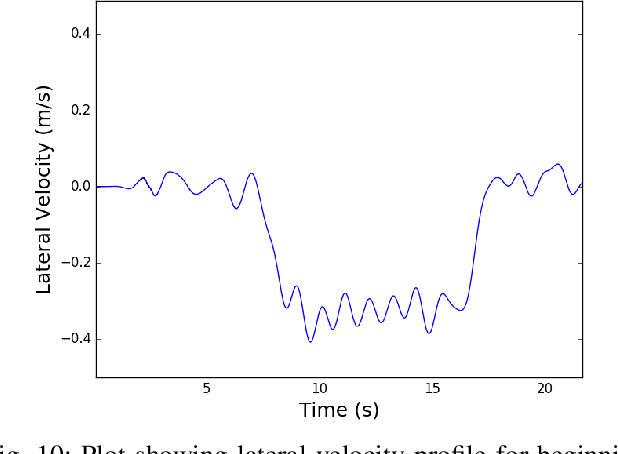

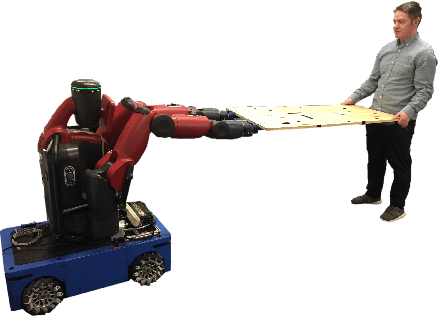

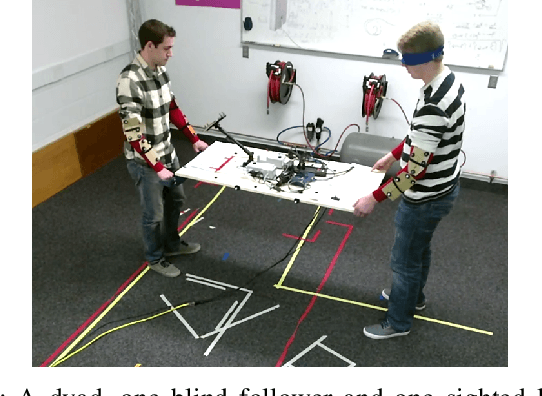

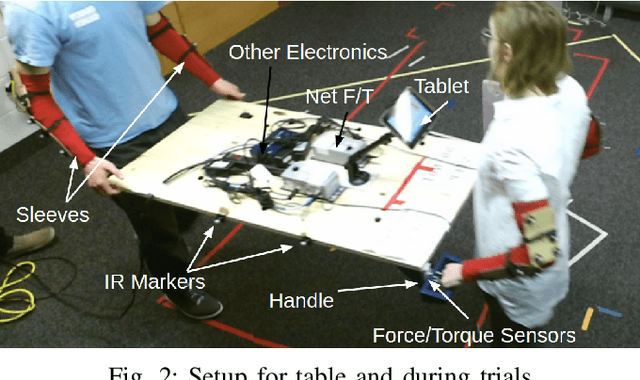

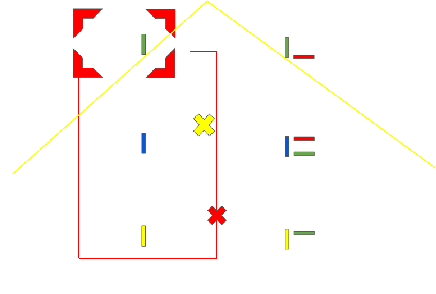

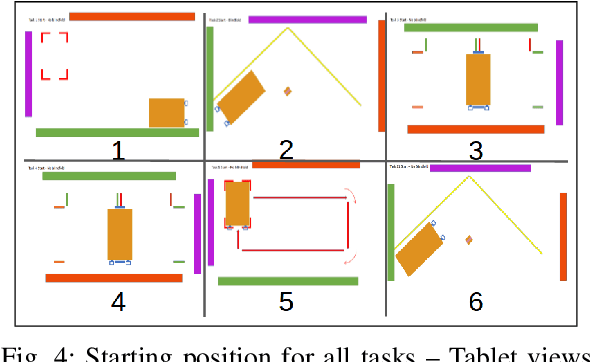

Abstract:Human teams are able to easily perform collaborative manipulation tasks. However, simultaneous manipulation of an extended object by a robot and a human is difficult using existing methods from the literature. Our approach in this paper is to use data from human-human dyad experiments to determine motion intent, which we use for a physical human-robot co-manipulation task. We first present and analyze data from human-human dyads performing co-manipulation tasks. We show that our human-human dyad data has interesting trends, including that interaction forces are non-negligible compared to the force required to accelerate an object and that the beginning of a lateral movement is characterized by distinct torque triggers from the leader of the dyad. We also examine different metrics to quantify performance of different dyads. In addition, we develop a deep neural network based on motion data from human-human trials to predict human intent based on past motion. We then show how force and motion data can be used as a basis for robot control in a human-robot dyad. Finally, we compare the performance of two controllers for human-robot co-manipulation to human-human dyad performance.

Estimating Human Intent for Physical Human-Robot Co-Manipulation

May 30, 2017

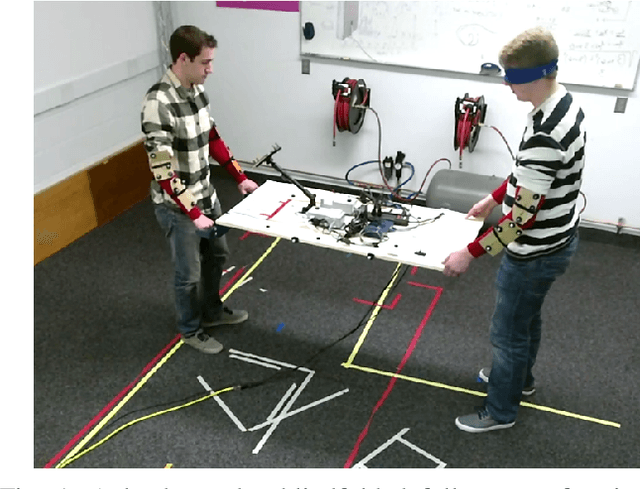

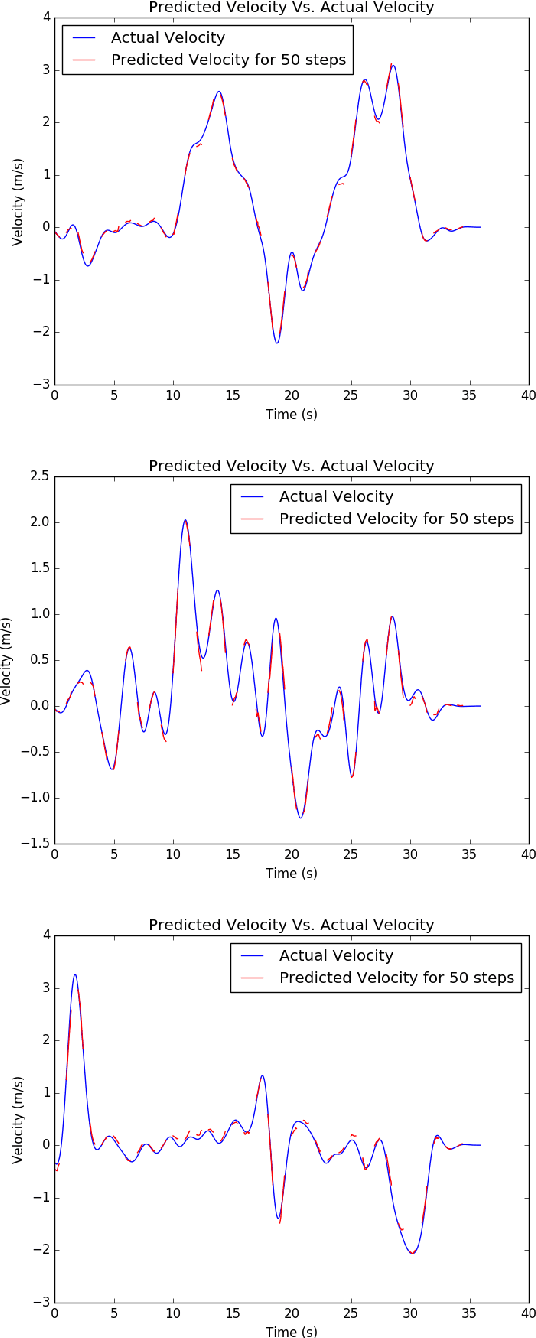

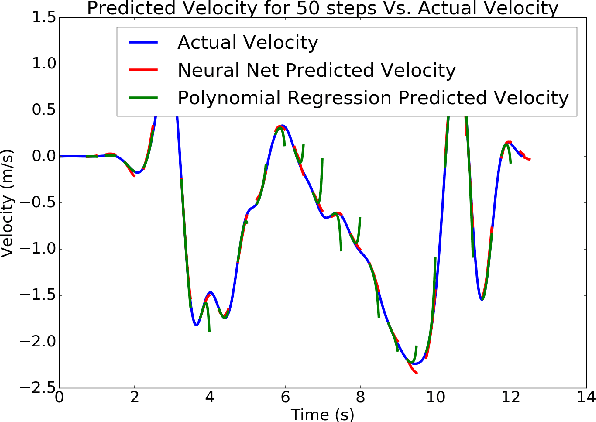

Abstract:Human teams can be exceptionally efficient at adapting and collaborating during manipulation tasks using shared mental models. However, the same shared mental models that can be used by humans to perform robust low-level force and motion control during collaborative manipulation tasks are non-existent for robots. For robots to perform collaborative tasks with people naturally and efficiently, understanding and predicting human intent is necessary. However, humans are difficult to predict and model. We have completed an exploratory study recording motion and force for 20 human dyads moving an object in tandem in order to better understand how they move and how their movement can be predicted. In this paper, we show how past motion data can be used to predict human intent. In order to predict human intent, which we equate with the human team's velocity for a short time horizon, we used a neural network. Using the previous 150 time steps at a rate of 200 Hz, human intent can be predicted for the next 50 time steps with a mean squared error of 0.02 (m/s)^2. We also show that human intent can be estimated in a human-robot dyad. This work is an important first step in enabling future work of integrating human intent estimation on a robot controller to execute a short-term collaborative trajectory.
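
The window sizes quoted in this abstract translate into 0.75 s of history (150 steps at 200 Hz) and a 0.25 s prediction horizon (50 steps). The sketch below shows one way such history/target pairs could be cut from a recorded velocity signal and scored with mean squared error. The stride of one step, the synthetic signal, and the hold-last-value baseline are assumptions for illustration; the baseline stands in for the paper's neural network, which is not reproduced here.

```python
RATE_HZ = 200        # sampling rate from the abstract
PAST_STEPS = 150     # input window length from the abstract
FUTURE_STEPS = 50    # prediction horizon from the abstract


def make_windows(signal, past=PAST_STEPS, future=FUTURE_STEPS):
    """Split a 1-D sequence into (history, target) pairs with a stride of one step."""
    pairs = []
    for start in range(len(signal) - past - future + 1):
        history = signal[start:start + past]
        target = signal[start + past:start + past + future]
        pairs.append((history, target))
    return pairs


def mean_squared_error(predicted, actual):
    """Average squared difference; units are (m/s)^2 for velocity signals."""
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)


# ASSUMPTION: synthetic ramp used only as placeholder data.
velocity = [0.001 * k for k in range(400)]
pairs = make_windows(velocity)
history, target = pairs[0]

# Naive baseline (not the paper's network): hold the last observed velocity.
baseline = [history[-1]] * FUTURE_STEPS
mse = mean_squared_error(baseline, target)

print(PAST_STEPS / RATE_HZ, FUTURE_STEPS / RATE_HZ, len(pairs))
```

A learned predictor would replace `baseline` with its own 50-step output and be scored with the same `mean_squared_error` function.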

Analysis of Rigid Extended Object Co-Manipulation by Human Dyads: Lateral Movement Characterization

Feb 02, 2017

Abstract:During co-manipulation involving humans and robots, it is necessary to base robot controllers on human behaviors to achieve comfortable and coordinated movement between the human-robot dyad. In this paper, we describe an experiment between human-human dyads and we record the force and motion data as the leader-follower dyads moved in translation and rotation. The force/motion data was then analyzed for patterns found during lateral translation only. For extended objects, lateral translation and in-place rotation are ambiguous, but this paper determines a way to characterize lateral translation triggers for future use in human-robot interaction. The study has 4 main results. First, interaction forces are apparent and necessary for co-manipulation. Second, minimum-jerk trajectories are found in the lateral direction only for lateral movement. Third, the beginning of a lateral movement is characterized by distinct force triggers by the leader. Last, there are different metrics that can be attributed to determine which dyads moved most effectively in the lateral direction.
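
The minimum-jerk trajectories mentioned in the second result are usually written, for a rest-to-rest movement, as the fifth-order polynomial x(t) = x0 + (xf - x0)(10τ³ - 15τ⁴ + 6τ⁵) with τ = t / T. The sketch below evaluates that standard profile; the start point, end point, and duration are illustrative values, not data from the study.

```python
def minimum_jerk(x0, xf, duration, t):
    """Position at time t on a rest-to-rest minimum-jerk profile from x0 to xf."""
    tau = min(max(t / duration, 0.0), 1.0)  # normalized time clamped to [0, 1]
    s = 10 * tau**3 - 15 * tau**4 + 6 * tau**5
    return x0 + (xf - x0) * s


# ASSUMPTION: a 1 m lateral move over 2 s, sampled every 0.5 s.
samples = [minimum_jerk(0.0, 1.0, 2.0, 0.5 * k) for k in range(5)]
print(samples)
```

The profile starts and ends at rest, is symmetric about its midpoint, and reaches exactly half the distance at half the duration, which makes it a convenient template to compare against measured lateral motion.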
