Mane Williams

A General-Purpose Self-Supervised Model for Computational Pathology

Aug 29, 2023

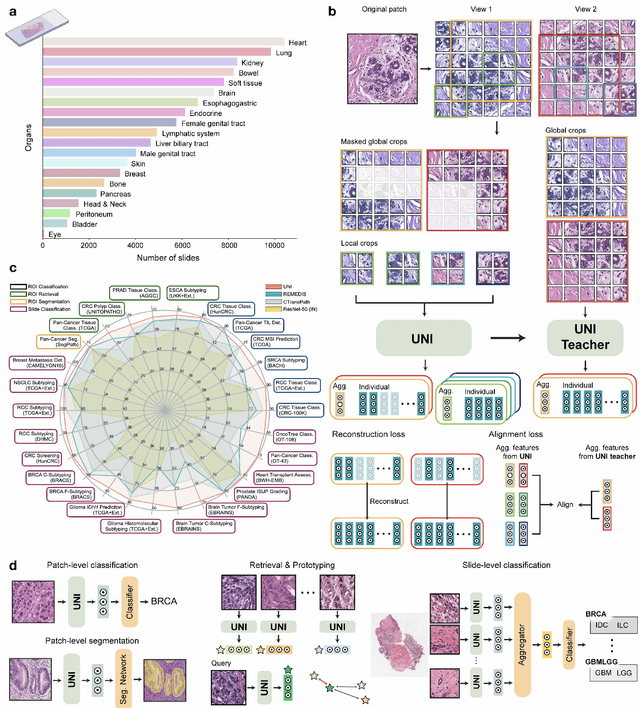

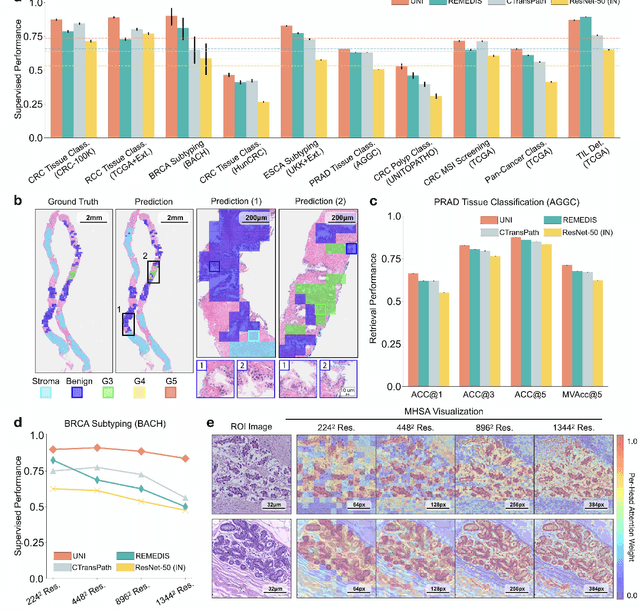

Abstract:Tissue phenotyping is a fundamental computational pathology (CPath) task in learning objective characterizations of histopathologic biomarkers in anatomic pathology. However, whole-slide imaging (WSI) poses a complex computer vision problem in which the large-scale image resolutions of WSIs and the enormous diversity of morphological phenotypes preclude large-scale data annotation. Current efforts have proposed using pretrained image encoders with either transfer learning from natural image datasets or self-supervised pretraining on publicly-available histopathology datasets, but have not been extensively developed and evaluated across diverse tissue types at scale. We introduce UNI, a general-purpose self-supervised model for pathology, pretrained using over 100 million tissue patches from over 100,000 diagnostic haematoxylin and eosin-stained WSIs across 20 major tissue types, and evaluated on 33 representative CPath clinical tasks in CPath of varying diagnostic difficulties. In addition to outperforming previous state-of-the-art models, we demonstrate new modeling capabilities in CPath such as resolution-agnostic tissue classification, slide classification using few-shot class prototypes, and disease subtyping generalization in classifying up to 108 cancer types in the OncoTree code classification system. UNI advances unsupervised representation learning at scale in CPath in terms of both pretraining data and downstream evaluation, enabling data-efficient AI models that can generalize and transfer to a gamut of diagnostically-challenging tasks and clinical workflows in anatomic pathology.

Weakly Supervised AI for Efficient Analysis of 3D Pathology Samples

Jul 27, 2023

Abstract:Human tissue and its constituent cells form a microenvironment that is fundamentally three-dimensional (3D). However, the standard-of-care in pathologic diagnosis involves selecting a few two-dimensional (2D) sections for microscopic evaluation, risking sampling bias and misdiagnosis. Diverse methods for capturing 3D tissue morphologies have been developed, but they have yet had little translation to clinical practice; manual and computational evaluations of such large 3D data have so far been impractical and/or unable to provide patient-level clinical insights. Here we present Modality-Agnostic Multiple instance learning for volumetric Block Analysis (MAMBA), a deep-learning-based platform for processing 3D tissue images from diverse imaging modalities and predicting patient outcomes. Archived prostate cancer specimens were imaged with open-top light-sheet microscopy or microcomputed tomography and the resulting 3D datasets were used to train risk-stratification networks based on 5-year biochemical recurrence outcomes via MAMBA. With the 3D block-based approach, MAMBA achieves an area under the receiver operating characteristic curve (AUC) of 0.86 and 0.74, superior to 2D traditional single-slice-based prognostication (AUC of 0.79 and 0.57), suggesting superior prognostication with 3D morphological features. Further analyses reveal that the incorporation of greater tissue volume improves prognostic performance and mitigates risk prediction variability from sampling bias, suggesting the value of capturing larger extents of heterogeneous 3D morphology. With the rapid growth and adoption of 3D spatial biology and pathology techniques by researchers and clinicians, MAMBA provides a general and efficient framework for 3D weakly supervised learning for clinical decision support and can help to reveal novel 3D morphological biomarkers for prognosis and therapeutic response.

Pan-Cancer Integrative Histology-Genomic Analysis via Interpretable Multimodal Deep Learning

Aug 04, 2021

Abstract:The rapidly emerging field of deep learning-based computational pathology has demonstrated promise in developing objective prognostic models from histology whole slide images. However, most prognostic models are either based on histology or genomics alone and do not address how histology and genomics can be integrated to develop joint image-omic prognostic models. Additionally identifying explainable morphological and molecular descriptors from these models that govern such prognosis is of interest. We used multimodal deep learning to integrate gigapixel whole slide pathology images, RNA-seq abundance, copy number variation, and mutation data from 5,720 patients across 14 major cancer types. Our interpretable, weakly-supervised, multimodal deep learning algorithm is able to fuse these heterogeneous modalities for predicting outcomes and discover prognostic features from these modalities that corroborate with poor and favorable outcomes via multimodal interpretability. We compared our model with unimodal deep learning models trained on histology slides and molecular profiles alone, and demonstrate performance increase in risk stratification on 9 out of 14 cancers. In addition, we analyze morphologic and molecular markers responsible for prognostic predictions across all cancer types. All analyzed data, including morphological and molecular correlates of patient prognosis across the 14 cancer types at a disease and patient level are presented in an interactive open-access database (http://pancancer.mahmoodlab.org) to allow for further exploration and prognostic biomarker discovery. To validate that these model explanations are prognostic, we further analyzed high attention morphological regions in WSIs, which indicates that tumor-infiltrating lymphocyte presence corroborates with favorable cancer prognosis on 9 out of 14 cancer types studied.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge