Jonathan T. C. Liu

AI-driven 3D Spatial Transcriptomics

Feb 25, 2025

Abstract: A comprehensive three-dimensional (3D) map of tissue architecture and gene expression is crucial for illuminating the complexity and heterogeneity of tissues across diverse biomedical applications. However, most spatial transcriptomics (ST) approaches remain limited to two-dimensional (2D) sections of tissue. Although current 3D ST methods hold promise, they typically require extensive tissue sectioning, are complex, are incompatible with non-destructive 3D tissue imaging technologies, and often lack scalability. Here, we present VOlumetrically Resolved Transcriptomics EXpression (VORTEX), an AI framework that leverages 3D tissue morphology and minimal 2D ST to predict volumetric 3D ST. By pretraining on diverse 3D morphology-transcriptomic pairs from heterogeneous tissue samples and then fine-tuning on minimal 2D ST data from a specific volume of interest, VORTEX learns both generic tissue-related and sample-specific morphological correlates of gene expression. This approach enables dense, high-throughput, and fast 3D ST, scaling to large tissue volumes far beyond the reach of existing 3D ST techniques. By offering a cost-effective and minimally destructive route to obtaining volumetric molecular insights, we anticipate that VORTEX will accelerate biomarker discovery and deepen our understanding of morphomolecular associations and cell states in complex tissues. Interactive 3D ST volumes can be viewed at https://vortex-demo.github.io/

Triage of 3D pathology data via 2.5D multiple-instance learning to guide pathologist assessments

Jun 11, 2024

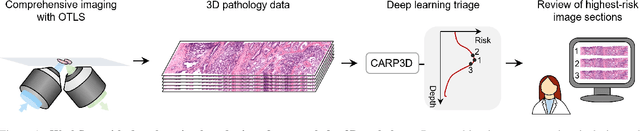

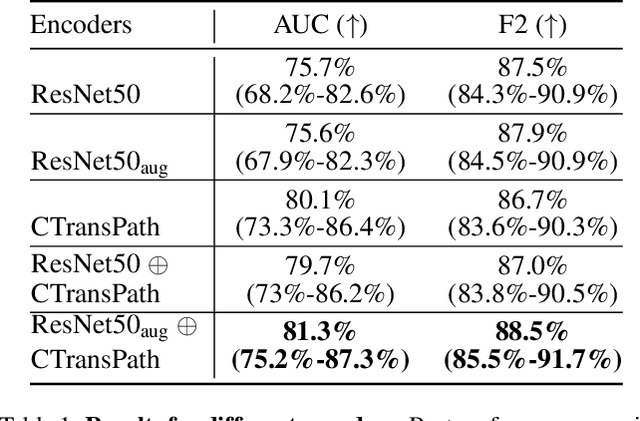

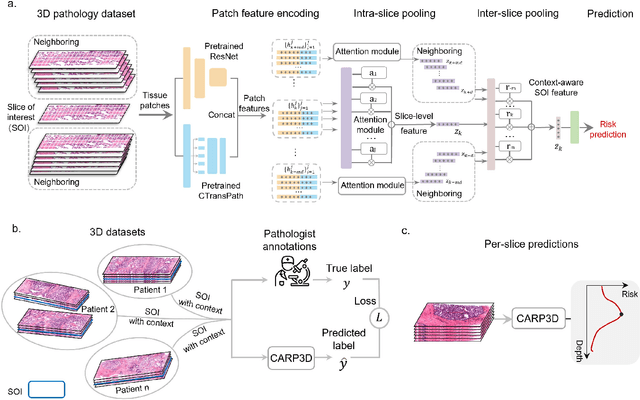

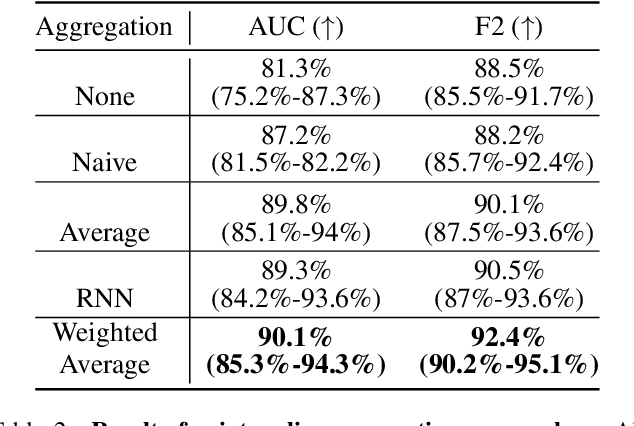

Abstract: Accurate patient diagnoses based on human tissue biopsies are hindered by current clinical practice, in which pathologists assess only a limited number of thin 2D tissue slices sectioned from 3D volumetric tissue. Recent advances in non-destructive 3D pathology, such as open-top light-sheet microscopy, enable comprehensive imaging of spatially heterogeneous tissue morphologies, making it feasible to improve diagnostic determinations. A potential early route towards clinical adoption of 3D pathology is to rely on pathologists for the final diagnosis based on viewing familiar 2D H&E-like image sections from the 3D datasets. However, manual examination of these massive 3D pathology datasets is infeasible. To address this, we present CARP3D, a deep learning triage approach that automatically identifies the highest-risk 2D slices within a 3D volumetric biopsy, enabling time-efficient review by pathologists. For a given slice in the biopsy, we estimate its risk by performing attention-based aggregation of the 2D patches within that slice, followed by pooling over neighboring slices to compute a context-aware 2.5D risk score. For prostate cancer risk stratification, CARP3D achieves an area under the curve (AUC) of 90.4% for triaging slices, outperforming methods that analyze 2D sections independently (AUC=81.3%). These results suggest that integrating additional depth context enhances the model's discriminative capability. In conclusion, CARP3D has the potential to improve pathologist diagnosis via accurate triage of high-risk slices within large-volume 3D pathology datasets.

* CVPR CVMI 2024
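The two-stage slice scoring described in the CARP3D abstract (attention-based aggregation of 2D patches within each slice, then pooling over neighboring slices) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the tanh attention form, mean-pooling of slice embeddings over `window` slices on either side, the sigmoid risk head, and all names (`attention_pool`, `slice_risks_2p5d`, `V`, `w`, `u`, `window`) are hypothetical choices for illustration. The weights are random and untrained, and the patch features stand in for embeddings from some patch encoder.

```python
import numpy as np

def attention_pool(patch_feats, V, w):
    """Attention-based MIL pooling of the patch features within one slice.

    patch_feats: (n_patches, d); V: (h, d); w: (h,). Hypothetical parameters.
    Returns the slice embedding (d,) and the attention weights (n_patches,).
    """
    scores = np.tanh(patch_feats @ V.T) @ w   # one unnormalized score per patch
    scores = scores - scores.max()            # stabilize the softmax
    attn = np.exp(scores) / np.exp(scores).sum()
    return attn @ patch_feats, attn           # attention-weighted sum of patches

def slice_risks_2p5d(slices, V, w, u, window=1):
    """Assumed 2.5D scoring: mean-pool each slice's embedding with its
    depth neighbors (up to `window` slices on each side), then apply a
    sigmoid risk head u. `slices` is a depth-ordered list of (n_i, d) arrays."""
    emb = np.stack([attention_pool(s, V, w)[0] for s in slices])  # (n_slices, d)
    n = len(emb)
    risks = np.empty(n)
    for i in range(n):
        lo, hi = max(0, i - window), min(n, i + window + 1)
        context = emb[lo:hi].mean(axis=0)                 # depth-context pooling
        risks[i] = 1.0 / (1.0 + np.exp(-(context @ u)))   # risk score in (0, 1)
    return risks

# Toy usage with random features: 10 slices, each with 5-19 patches of dim 16.
rng = np.random.default_rng(0)
d, h = 16, 8
slices = [rng.normal(size=(int(rng.integers(5, 20)), d)) for _ in range(10)]
V = 0.1 * rng.normal(size=(h, d))
w = rng.normal(size=h)
u = 0.1 * rng.normal(size=d)

risks = slice_risks_2p5d(slices, V, w, u, window=2)
top = np.argsort(risks)[::-1][:3]   # highest-risk slices to surface for review
print(top, risks[top])
```

Setting `window=0` reduces this sketch to scoring each slice independently, which corresponds to the 2D baseline the abstract compares against. A larger window lets each slice's score draw on adjacent depth context.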

Weakly Supervised AI for Efficient Analysis of 3D Pathology Samples

Jul 27, 2023

Abstract: Human tissue and its constituent cells form a microenvironment that is fundamentally three-dimensional (3D). However, the standard of care in pathologic diagnosis involves selecting a few two-dimensional (2D) sections for microscopic evaluation, risking sampling bias and misdiagnosis. Diverse methods for capturing 3D tissue morphologies have been developed, but they have so far seen little translation to clinical practice; manual and computational evaluations of such large 3D datasets have been impractical and/or unable to provide patient-level clinical insights. Here we present Modality-Agnostic Multiple instance learning for volumetric Block Analysis (MAMBA), a deep-learning-based platform for processing 3D tissue images from diverse imaging modalities and predicting patient outcomes. Archived prostate cancer specimens were imaged with open-top light-sheet microscopy or microcomputed tomography, and the resulting 3D datasets were used to train risk-stratification networks via MAMBA based on 5-year biochemical recurrence outcomes. With the 3D block-based approach, MAMBA achieves areas under the receiver operating characteristic curve (AUCs) of 0.86 and 0.74 on the two imaging modalities, exceeding traditional 2D single-slice-based prognostication (AUCs of 0.79 and 0.57), which indicates that 3D morphological features improve prognostication. Further analyses reveal that incorporating greater tissue volume improves prognostic performance and mitigates risk-prediction variability due to sampling bias, suggesting the value of capturing larger extents of heterogeneous 3D morphology. With the rapid growth and adoption of 3D spatial biology and pathology techniques by researchers and clinicians, MAMBA provides a general and efficient framework for 3D weakly supervised learning for clinical decision support and can help reveal novel 3D morphological biomarkers for prognosis and therapeutic response.