Maggie Wang

Interrogating LLM design under a fair learning doctrine

Feb 22, 2025

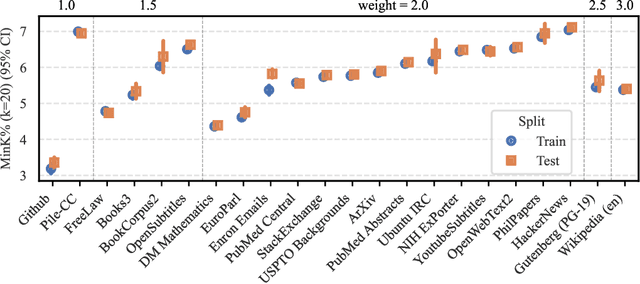

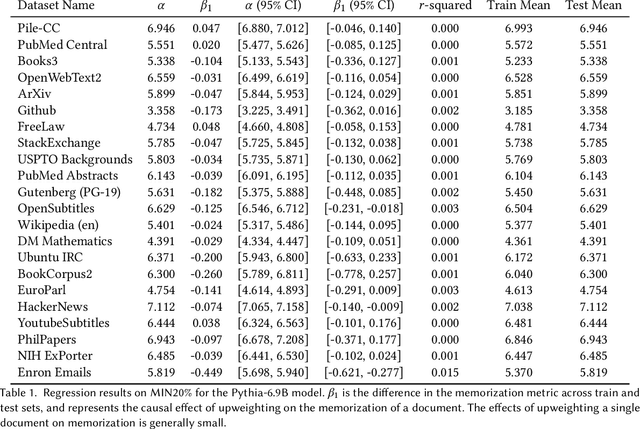

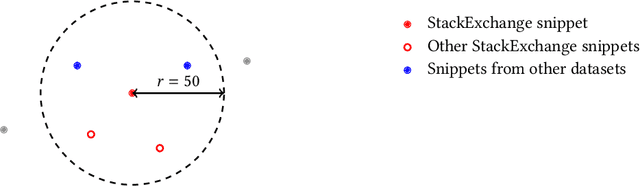

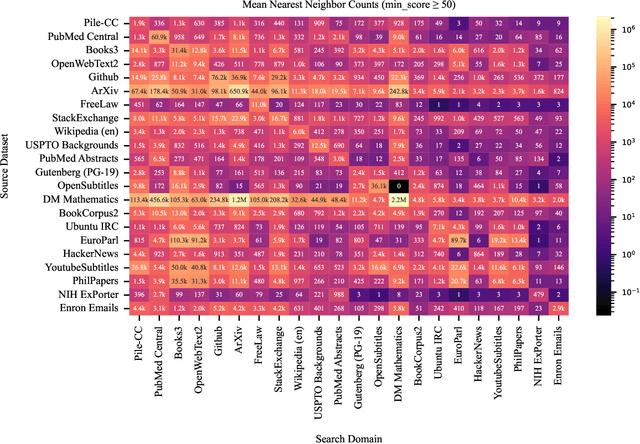

Abstract: The current discourse on large language models (LLMs) and copyright largely takes a "behavioral" perspective, focusing on model outputs and evaluating whether they are substantially similar to training data. However, substantial similarity is difficult to define algorithmically and a narrow focus on model outputs is insufficient to address all copyright risks. In this interdisciplinary work, we take a complementary "structural" perspective and shift our focus to how LLMs are trained. We operationalize a notion of "fair learning" by measuring whether any training decision substantially affected the model's memorization. As a case study, we deconstruct Pythia, an open-source LLM, and demonstrate the use of causal and correlational analyses to make factual determinations about Pythia's training decisions. By proposing a legal standard for fair learning and connecting memorization analyses to this standard, we identify how judges may advance the goals of copyright law through adjudication. Finally, we discuss how a fair learning standard might evolve to enhance its clarity by becoming more rule-like and incorporating external technical guidelines.

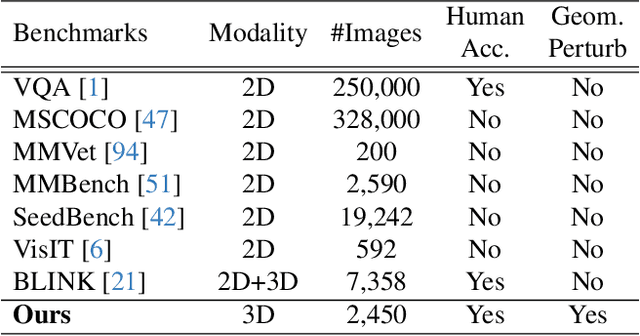

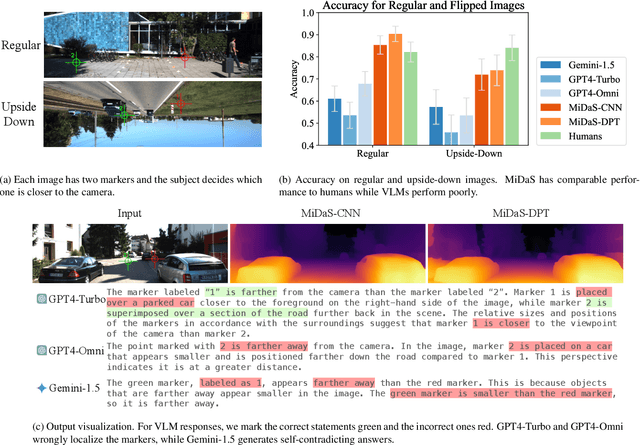

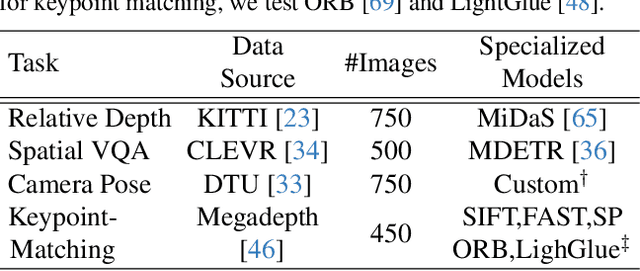

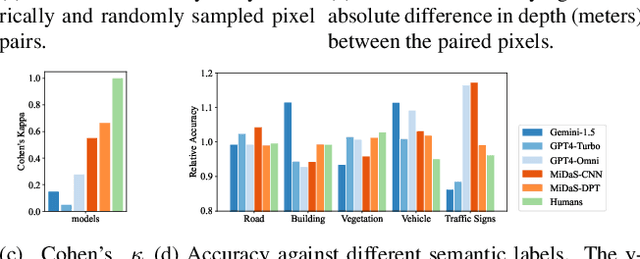

Towards Foundation Models for 3D Vision: How Close Are We?

Oct 14, 2024

Abstract: Building a foundation model for 3D vision is a complex challenge that remains unsolved. Towards that goal, it is important to understand the 3D reasoning capabilities of current models as well as identify the gaps between these models and humans. Therefore, we construct a new 3D visual understanding benchmark that covers fundamental 3D vision tasks in the Visual Question Answering (VQA) format. We evaluate state-of-the-art Vision-Language Models (VLMs), specialized models, and human subjects on it. Our results show that VLMs generally perform poorly, while the specialized models are accurate but not robust, failing under geometric perturbations. In contrast, human vision continues to be the most reliable 3D visual system. We further demonstrate that neural networks align more closely with human 3D vision mechanisms compared to classical computer vision methods, and Transformer-based networks such as ViT align more closely with human 3D vision mechanisms than CNNs. We hope our study will benefit the future development of foundation models for 3D vision.

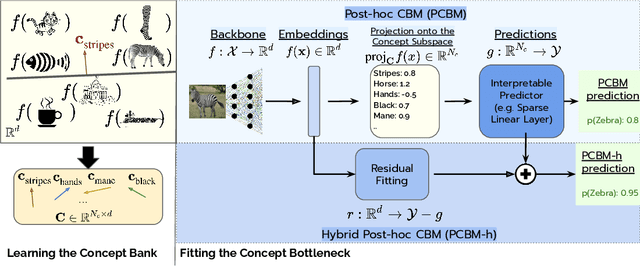

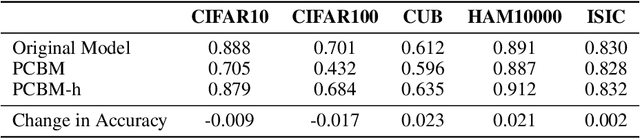

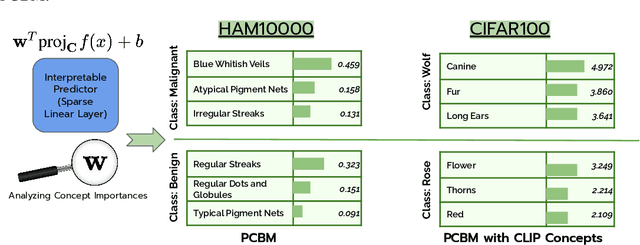

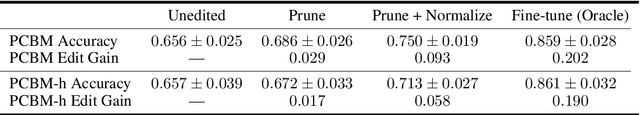

Post-hoc Concept Bottleneck Models

May 31, 2022

Abstract: Concept Bottleneck Models (CBMs) map the inputs onto a set of interpretable concepts ("the bottleneck") and use the concepts to make predictions. A concept bottleneck enhances interpretability since it can be investigated to understand what concepts the model "sees" in an input and which of these concepts are deemed important. However, CBMs are restrictive in practice as they require concept labels in the training data to learn the bottleneck and do not leverage strong pretrained models. Moreover, CBMs often do not match the accuracy of an unrestricted neural network, reducing the incentive to deploy them in practice. In this work, we address the limitations of CBMs by introducing Post-hoc Concept Bottleneck models (PCBMs). We show that we can turn any neural network into a PCBM without sacrificing model performance while still retaining interpretability benefits. When concept annotation is not available on the training data, we show that PCBM can transfer concepts from other datasets or from natural language descriptions of concepts. PCBM also enables users to quickly debug and update the model to reduce spurious correlations and improve generalization to new (potentially different) data. Through a model-editing user study, we show that editing PCBMs via concept-level feedback can provide significant performance gains without using any data from the target domain or model retraining.
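
The bottleneck idea in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation. It assumes, beyond what the abstract states, that each concept is a direction vector in a frozen backbone's embedding space, that concept scores are scaled dot products, and that the interpretable predictor is a ridge-regularised linear head. The names `project_onto_concepts`, `fit_linear_head`, and `predict`, and the random toy data, are hypothetical.

```python
import numpy as np

def project_onto_concepts(embeddings, concept_vectors):
    """Score each embedding against each (assumed) concept direction."""
    norms_sq = np.sum(concept_vectors ** 2, axis=1)    # (n_concepts,)
    return embeddings @ concept_vectors.T / norms_sq   # (n_samples, n_concepts)

def fit_linear_head(concept_scores, labels, n_classes, ridge=1e-3):
    """Ridge least-squares fit of one-hot labels on concept scores."""
    targets = np.eye(n_classes)[labels]
    n_concepts = concept_scores.shape[1]
    gram = concept_scores.T @ concept_scores + ridge * np.eye(n_concepts)
    return np.linalg.solve(gram, concept_scores.T @ targets)  # (n_concepts, n_classes)

def predict(concept_scores, weights):
    """Pick the class with the largest linear score."""
    return np.argmax(concept_scores @ weights, axis=1)

# Toy data standing in for frozen backbone embeddings (assumption).
rng = np.random.default_rng(0)
concept_vectors = rng.normal(size=(5, 16))
embeddings = rng.normal(size=(200, 16))

scores = project_onto_concepts(embeddings, concept_vectors)
labels = (scores[:, 0] > 0).astype(int)   # toy labels driven by concept 0 only
weights = fit_linear_head(scores, labels, n_classes=2)
accuracy = np.mean(predict(scores, weights) == labels)

# Concept-level editing: drop concept 3 by zeroing its weights, no retraining.
edited_weights = weights.copy()
edited_weights[3] = 0.0
edited_accuracy = np.mean(predict(scores, edited_weights) == labels)
```

Because the head is linear in the concept scores, each row of `weights` shows how much one concept contributes to each class, and an edit amounts to changing or zeroing a row.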

FlowDB a large scale precipitation, river, and flash flood dataset

Dec 21, 2020

Abstract: Flooding results in 8 billion dollars of damage annually in the US and causes the most deaths of any weather-related event. Due to climate change, scientists expect more heavy precipitation events in the future. However, no current datasets exist that contain both hourly precipitation and river flow data. We introduce a novel hourly river flow and precipitation dataset and a second subset of flash flood events with damage estimates and injury counts. Using these datasets, we create two challenges: (1) general stream flow forecasting and (2) flash flood damage estimation. We have created several publicly available benchmarks and an easy-to-use package. Additionally, in the future we aim to augment our dataset with snow pack data and soil index moisture data to improve predictions.
