Madhushanka Padmal

Elevated LiDAR based Sensing for 6G -- 3D Maps with cm Level Accuracy

Feb 22, 2021

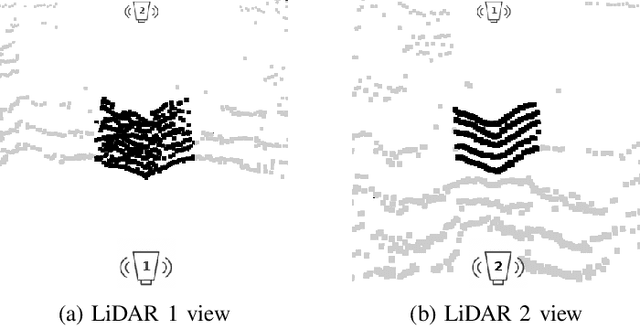

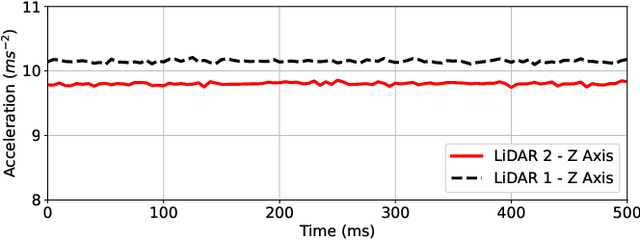

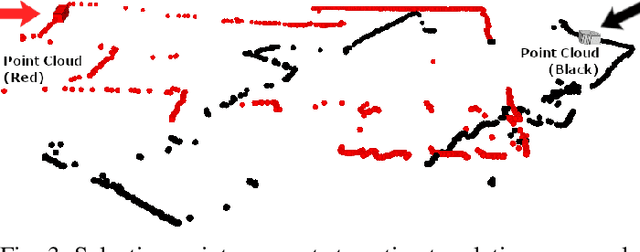

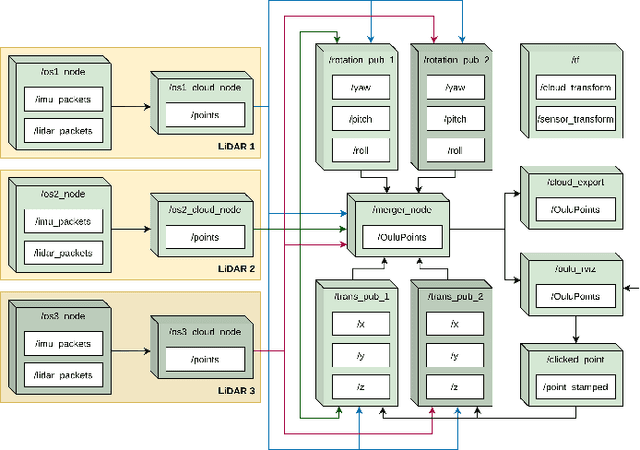

Abstract: One key vertical application that 6G will enable is process automation through the increased use of robots. As a result, sensing and localization of the surrounding environment become crucial for these robots to operate. Light detection and ranging (LiDAR) has emerged as an appropriate sensing method due to its capability of generating detail-rich information with high accuracy. However, LiDARs are power-hungry devices that generate a lot of data, and these characteristics limit their use as on-board sensors in robots. In this paper, we present a novel approach to generating an enhanced 3D map with an improved field of view using multiple LiDAR sensors. We utilize an inherent property of LiDAR point clouds, namely rings, together with data from the inertial measurement unit (IMU) embedded in the sensor, to register the point clouds. The generated 3D map has an accuracy of 10 cm when compared to real-world measurements. We also carry out a practical implementation of the proposed method using two LiDAR sensors. Furthermore, we develop an application that utilizes the generated map, in which a robot navigates through the mapped environment with minimal support from its on-board sensors. The LiDARs are fixed in the infrastructure at elevated positions, so the approach is applicable to vehicular and factory scenarios. Our results further validate the idea of using multiple elevated LiDARs as part of the infrastructure for various applications.
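To illustrate what merging two registered point clouds involves, here is a minimal sketch. It does not reproduce the ring- and IMU-based registration from the paper; it only assumes that a relative pose between the two sensors (a yaw angle and a translation) has already been estimated somehow. The function names `transform_points` and `merge_clouds` and the simplified yaw-only rotation are assumptions made for illustration.

```python
import math

def transform_points(points, yaw_rad, translation):
    """Rotate 3D points about the z-axis by yaw_rad, then translate them.

    Assumption: the relative pose is reduced to yaw plus translation,
    which is a simplification of a full 6-DoF rigid transform.
    """
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    tx, ty, tz = translation
    return [(c * x - s * y + tx, s * x + c * y + ty, z + tz)
            for x, y, z in points]

def merge_clouds(cloud_a, cloud_b, yaw_b, translation_b):
    """Express cloud_b in the frame of cloud_a and concatenate both clouds."""
    return list(cloud_a) + transform_points(cloud_b, yaw_b, translation_b)

# Hypothetical example: sensor B is rotated 90 degrees and raised 1 m
# relative to sensor A.
cloud_a = [(0.0, 0.0, 0.0)]
cloud_b = [(1.0, 0.0, 0.0)]
merged = merge_clouds(cloud_a, cloud_b, math.pi / 2, (0.0, 0.0, 1.0))
```

In a real pipeline the pose would come from the registration step, and the merged cloud would usually be downsampled afterwards to limit data volume.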

Collaborative SLAM based on Wifi Fingerprint Similarity and Motion Information

Nov 30, 2019

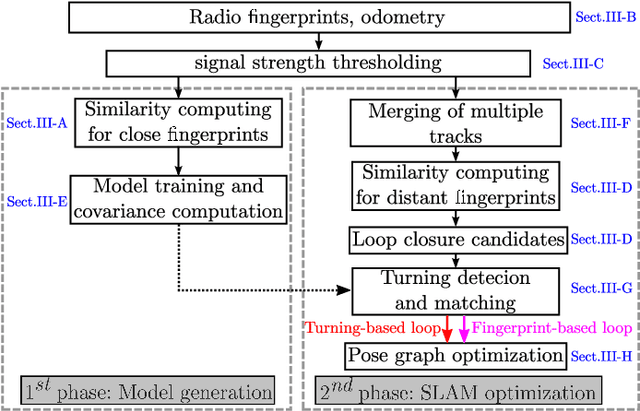

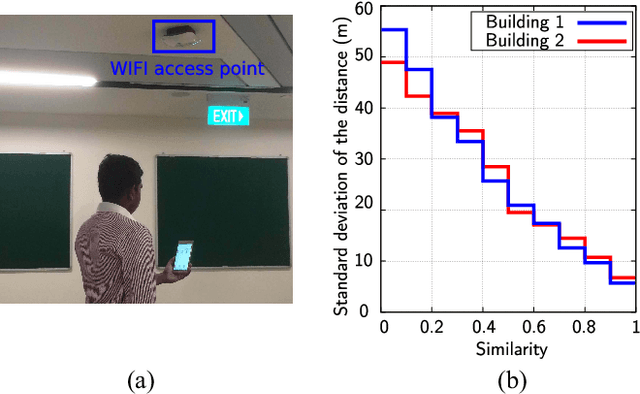

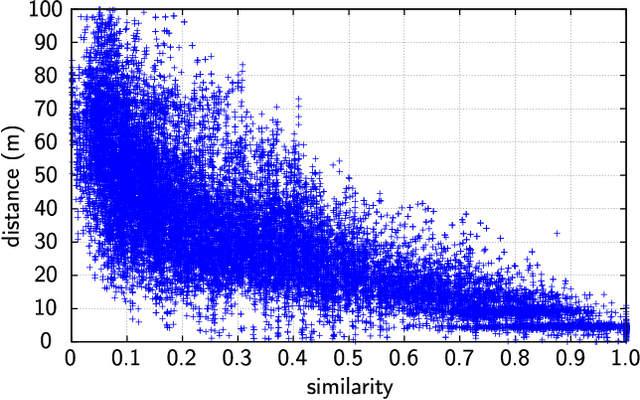

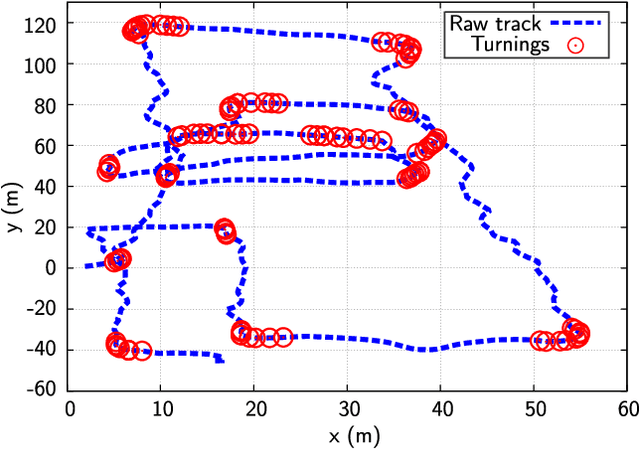

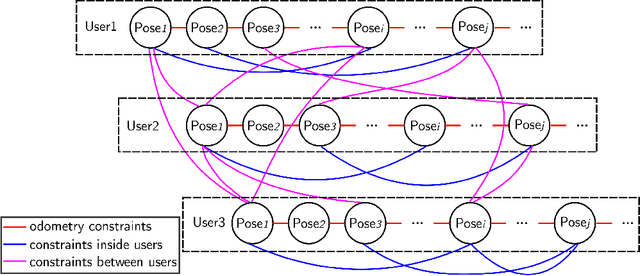

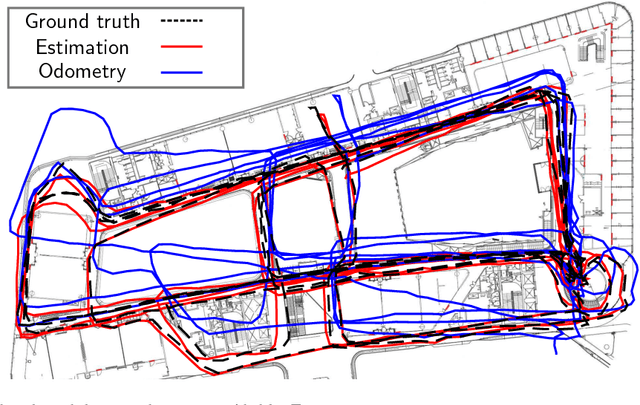

Abstract: Simultaneous localization and mapping (SLAM) has been extensively researched in past years, particularly with regard to range-based or visual-based sensors. Instead of deploying dedicated devices that use visual features, it is more pragmatic to exploit radio features for this task, due to their ubiquitous nature and the widespread deployment of Wi-Fi wireless networks. This paper presents a novel approach for collaborative simultaneous localization and radio fingerprint mapping (C-SLAM-RF) in large unknown indoor environments. The proposed system uses received signal strengths (RSS) from Wi-Fi access points (APs) in the existing infrastructure and pedestrian dead reckoning (PDR) from a smartphone, without prior knowledge of the map or of the distribution of APs in the environment. We declare a loop closure based on the similarity of two radio fingerprints. To further improve the performance, we incorporate turning motion and assign a small uncertainty value to a loop closure if a matched turn is identified. The experiment was conducted in an area of 130 meters by 70 meters, and the results show that our proposed system is capable of estimating the tracks of four users with an accuracy of 0.6 meters with Tango-based PDR and 4.76 meters with step counter-based PDR.
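The idea of declaring a loop closure from fingerprint similarity, with a tighter uncertainty when a turn also matches, can be sketched as follows. This is an illustrative sketch only: the abstract does not specify the similarity measure, so cosine similarity is swapped in here, and the threshold, the RSS floor, and the two uncertainty values are hypothetical placeholders rather than values from the paper.

```python
import math

def fingerprint_similarity(fp_a, fp_b, floor_dbm=-100.0):
    """Cosine similarity of two RSS fingerprints (dict: AP id -> RSS in dBm).

    APs missing from one fingerprint are treated as received at floor_dbm
    (an assumed sensitivity floor), which maps to 0 after shifting.
    """
    aps = sorted(set(fp_a) | set(fp_b))
    a = [max(fp_a.get(ap, floor_dbm) - floor_dbm, 0.0) for ap in aps]
    b = [max(fp_b.get(ap, floor_dbm) - floor_dbm, 0.0) for ap in aps]
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)

def loop_closure(fp_a, fp_b, turn_matched, threshold=0.9,
                 sigma_default=5.0, sigma_turn=1.0):
    """Return an uncertainty (meters) for a loop closure, or None if rejected.

    threshold, sigma_default and sigma_turn are hypothetical values.
    """
    if fingerprint_similarity(fp_a, fp_b) < threshold:
        return None
    # A matched turn is taken as extra evidence, so the constraint is tighter.
    return sigma_turn if turn_matched else sigma_default

# Hypothetical fingerprints collected by two users.
fp_user1 = {"ap1": -50.0, "ap2": -70.0}
fp_user2 = {"ap1": -50.0, "ap2": -70.0}
fp_far = {"ap9": -60.0}
```

The returned uncertainty would typically weight the corresponding constraint in a pose-graph optimizer, so that closures confirmed by a matched turn pull the trajectories together more strongly.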

Crowd-sensing Simultaneous Localization and Radio Fingerprint Mapping based on Probabilistic Similarity Models

Apr 26, 2019

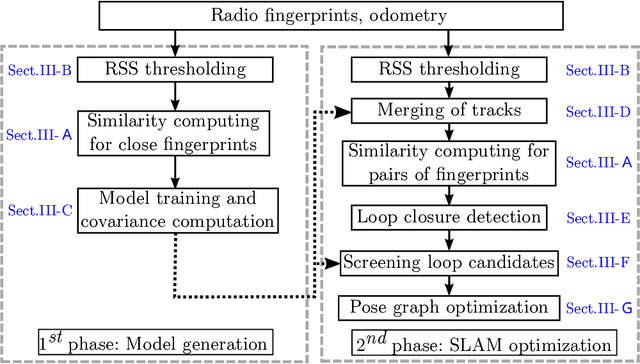

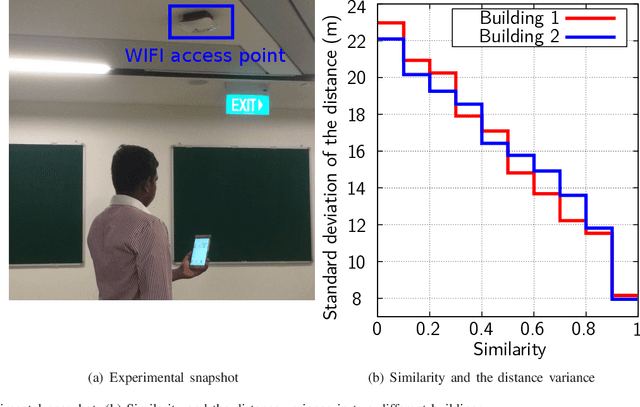

Abstract: Simultaneous localization and mapping (SLAM) has been extensively researched in past years, particularly with regard to range-based or visual-based sensors. Instead of deploying dedicated devices that use visual features, it is more pragmatic to exploit radio features for this task, due to their ubiquitous nature and the wide deployment of Wi-Fi wireless networks. In this paper, we present a novel approach for crowd-sensing simultaneous localization and radio fingerprint mapping (C-SLAM-RF) in large unknown indoor environments. The proposed system makes use of the received signal strength (RSS) from surrounding Wi-Fi access points (APs) and the motion tracking data from a smartphone (Tango as an example). These measurements are captured while multiple users walk through unknown environments, without map information or knowledge of the AP locations. The experiments were conducted in a university building with a dynamic environment, and the results show that the proposed system is capable of estimating the tracks of a group of users with an accuracy of 1.74 meters when compared to the ground truth acquired from a point cloud-based SLAM.
