Elevated LiDAR based Sensing for 6G -- 3D Maps with cm Level Accuracy

Feb 22, 2021

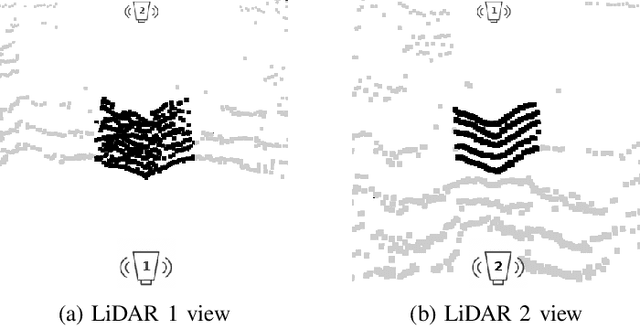

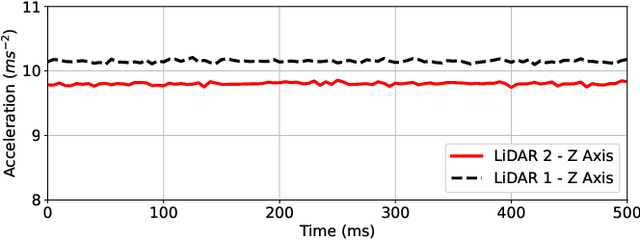

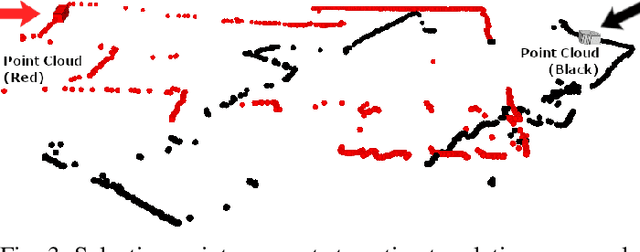

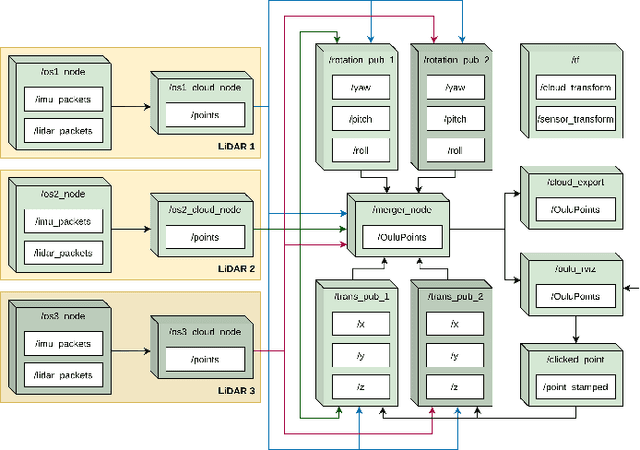

One key vertical application that 6G will enable is process automation through the increased use of robots. Sensing and localization in the surrounding environment therefore become crucial for these robots to operate. Light detection and ranging (LiDAR) has emerged as an appropriate sensing method because it generates detail-rich information with high accuracy. However, LiDARs are power-hungry devices that generate large volumes of data, and these characteristics limit their use as on-board sensors in robots. In this paper, we present a novel method for generating an enhanced 3D map with an improved field of view using multiple LiDAR sensors. To register the point clouds, we use an inherent property of LiDAR point clouds, namely rings, together with data from the inertial measurement unit (IMU) embedded in the sensor. The generated 3D map has an accuracy of 10 cm when compared with real-world measurements. We also carry out a practical implementation of the proposed method using two LiDAR sensors. Furthermore, we develop an application that uses the generated map, in which a robot navigates through the mapped environment with minimal support from its on-board sensors. The LiDARs are fixed to the infrastructure at elevated positions, so the approach is applicable to vehicular and factory scenarios. Our results further validate the idea of using multiple elevated LiDARs as part of the infrastructure for various applications.
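To make the idea of combining clouds from several fixed sensors concrete, the following is a minimal sketch, not the paper's registration method. It assumes each sensor's roll and pitch come from its IMU and that its yaw and position are already known (in the paper, the ring structure of the point cloud contributes to registration; that step is not reproduced here). The function names and all pose values are hypothetical.

```python
import numpy as np


def rotation_from_rpy(roll, pitch, yaw):
    """Build a rotation matrix R = Rz(yaw) @ Ry(pitch) @ Rx(roll), angles in radians."""
    cr, sr = np.cos(roll), np.sin(roll)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    rx = np.array([[1.0, 0.0, 0.0], [0.0, cr, -sr], [0.0, sr, cr]])
    ry = np.array([[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]])
    rz = np.array([[cy, -sy, 0.0], [sy, cy, 0.0], [0.0, 0.0, 1.0]])
    return rz @ ry @ rx


def to_common_frame(points, roll, pitch, yaw, translation):
    """Map an (N, 3) cloud from a sensor frame into the shared map frame."""
    rotation = rotation_from_rpy(roll, pitch, yaw)
    # Row-vector form of p_map = R @ p_sensor + t.
    return points @ rotation.T + np.asarray(translation, dtype=float)


def merge_clouds(clouds):
    """Stack clouds that are already expressed in the same frame."""
    return np.vstack(clouds)


# Hypothetical example: two elevated sensors, 3 m above the floor, 5 m apart.
cloud_a = np.array([[1.0, 0.0, -3.0], [2.0, 1.0, -3.0]])
cloud_b = np.array([[1.0, 0.0, -3.0]])
map_a = to_common_frame(cloud_a, 0.0, 0.0, 0.0, [0.0, 0.0, 3.0])
map_b = to_common_frame(cloud_b, 0.0, 0.0, np.pi, [5.0, 0.0, 3.0])
merged_map = merge_clouds([map_a, map_b])
```

In this sketch the IMU only supplies the tilt of each sensor; any residual misalignment would still need a refinement step, which is where a feature such as the ring structure could be used.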
