Luca Anzalone

Aspen Open Jets: Unlocking LHC Data for Foundation Models in Particle Physics

Dec 13, 2024

Abstract: Foundation models are deep learning models pre-trained on large amounts of data which are capable of generalizing to multiple datasets and/or downstream tasks. This work demonstrates how data collected by the CMS experiment at the Large Hadron Collider can be useful in pre-training foundation models for HEP. Specifically, we introduce the AspenOpenJets dataset, consisting of approximately 180M high $p_T$ jets derived from CMS 2016 Open Data. We show how pre-training the OmniJet-$\alpha$ foundation model on AspenOpenJets improves performance on generative tasks with significant domain shift: generating boosted top and QCD jets from the simulated JetClass dataset. In addition to demonstrating the power of pre-training of a jet-based foundation model on actual proton-proton collision data, we provide the ML-ready derived AspenOpenJets dataset for further public use.

Triggering Dark Showers with Conditional Dual Auto-Encoders

Jun 22, 2023

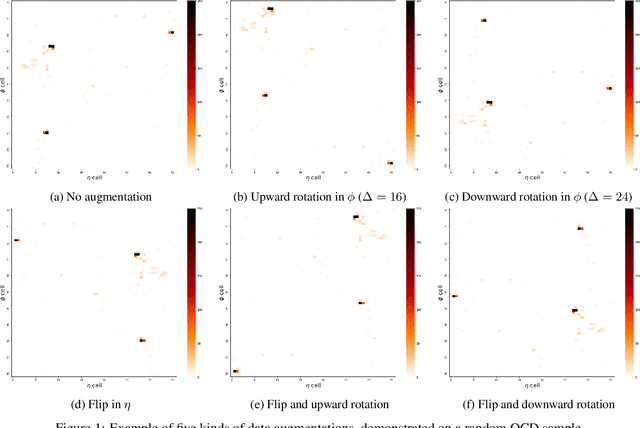

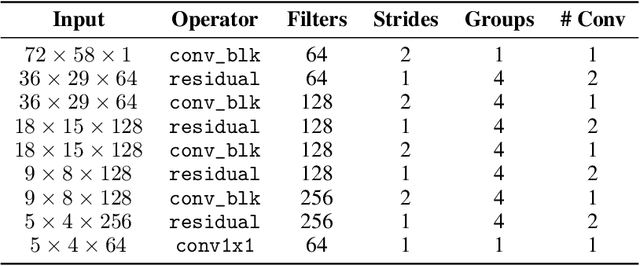

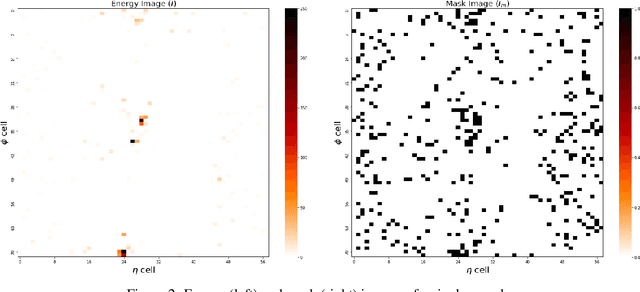

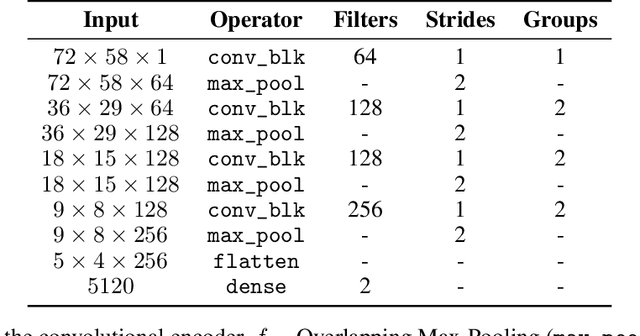

Abstract: Auto-encoders (AEs) have the potential to be effective and generic tools for new physics searches at colliders, requiring little to no model-dependent assumptions. New hypothetical physics signals can be considered anomalies that deviate from the well-known background processes generally expected to describe the whole dataset. We present a search formulated as an anomaly detection (AD) problem, using an AE to define a criterion for deciding the physics nature of an event. In this work, we perform an AD search for manifestations of a dark version of the strong force using raw detector images, which are large and very sparse, without leveraging any physics-based pre-processing or assumptions about the signals. We propose a dual-encoder design which can learn a compact latent space through conditioning. Across multiple AD metrics, we present a clear improvement over competitive baselines and prior approaches. It is the first time that an AE is shown to exhibit excellent discrimination against multiple dark shower models, illustrating the suitability of this method as a performant, model-independent algorithm to deploy, e.g., in the trigger stage of LHC experiments such as ATLAS and CMS.
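
The idea of using an auto-encoder to define an anomaly criterion can be illustrated with a toy sketch. This is not the conditional dual-encoder of the paper: it swaps in a linear auto-encoder fitted by PCA, scores events by reconstruction error, and runs on synthetic vectors rather than detector images. All names, dimensions, and the 99% threshold are assumptions made for illustration.

```python
import numpy as np

def fit_linear_autoencoder(background, latent_dim):
    """Fit a linear auto-encoder (PCA) on background-only events."""
    mean = background.mean(axis=0)
    # Rows of vt are the principal directions of the centered background.
    _, _, vt = np.linalg.svd(background - mean, full_matrices=False)
    return mean, vt[:latent_dim]

def anomaly_score(events, mean, components):
    """Mean squared reconstruction error per event (higher = more anomalous)."""
    centered = events - mean
    latent = centered @ components.T       # encode
    reconstructed = latent @ components    # decode
    return np.mean((centered - reconstructed) ** 2, axis=1)

rng = np.random.default_rng(0)
# Toy background: events close to a 2-D subspace of a 10-D feature space.
basis = rng.normal(size=(2, 10))
background = rng.normal(size=(2000, 2)) @ basis + 0.05 * rng.normal(size=(2000, 10))
# Toy "signal": isotropic events that the background model reconstructs poorly.
signal = rng.normal(size=(200, 10))

mean, components = fit_linear_autoencoder(background, latent_dim=2)
# Hypothetical working point: keep only 1% of background events.
threshold = np.quantile(anomaly_score(background, mean, components), 0.99)
flagged = anomaly_score(signal, mean, components) > threshold
```

Because the model is fitted on background only, the threshold fixes the background acceptance without reference to any signal model, which is what makes such a criterion a candidate for trigger-level use.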

Improving Parametric Neural Networks for High-Energy Physics (and Beyond)

Feb 08, 2022

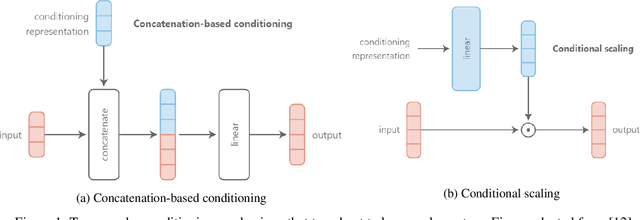

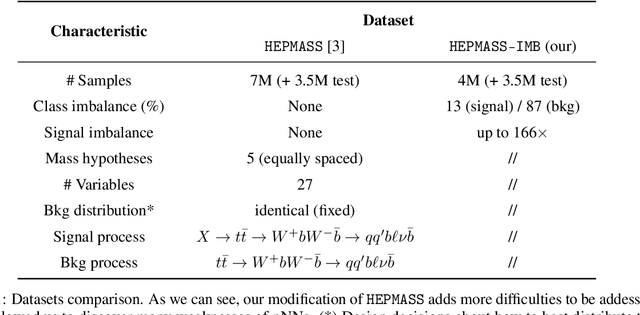

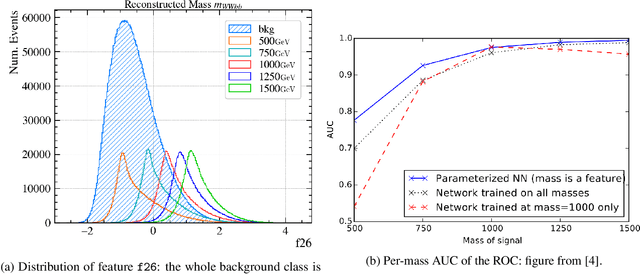

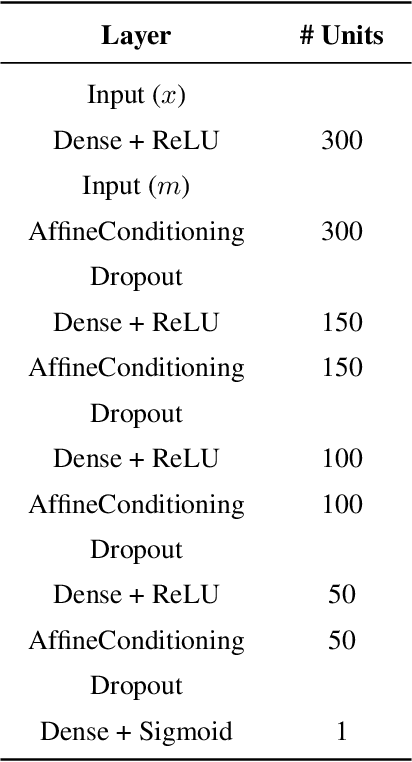

Abstract: Signal-background classification is a central problem in High-Energy Physics, which plays a major role in the discovery of new fundamental particles. A recent method, the Parametric Neural Network (pNN), leverages multiple signal mass hypotheses as an additional input feature to effectively replace a whole set of individual classifiers, each providing (in principle) the best response for a single mass hypothesis. In this work we aim at deepening the understanding of pNNs in light of real-world usage. We discovered several peculiarities of parametric networks, and provide intuition, metrics, and guidelines for them. We further propose an alternative parametrization scheme, resulting in a new parametrized neural network architecture, the AffinePNN, along with many other generally applicable improvements. Finally, we extensively evaluate our models on the HEPMASS dataset, along with its imbalanced version (called HEPMASS-IMB), which we provide here for the first time to further validate our approach. Results are reported in terms of the impact of the proposed design decisions, classification performance, and interpolation capability.
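
The pNN input scheme described above, where the mass hypothesis is appended as an extra feature, can be sketched as follows. This is a minimal illustration, not the paper's implementation: the function names are hypothetical, and the choice to assign background events a mass drawn at random from the signal mass grid is an assumption (a common convention, not stated in the abstract).

```python
import numpy as np

def build_pnn_inputs(features, is_signal, signal_mass, mass_grid, rng):
    """Append a mass-hypothesis column to the event features for training."""
    features = np.asarray(features, dtype=float)
    is_signal = np.asarray(is_signal, dtype=bool)
    # Assumption: background has no true mass, so draw one from the grid.
    mass = rng.choice(mass_grid, size=len(features)).astype(float)
    # Signal events carry the mass they were generated with.
    mass[is_signal] = np.asarray(signal_mass, dtype=float)[is_signal]
    return np.column_stack([features, mass])

def scan_mass_hypotheses(features, mass_grid):
    """At inference, present the same events under every mass hypothesis."""
    features = np.asarray(features, dtype=float)
    return {
        float(m): np.column_stack([features, np.full(len(features), float(m))])
        for m in mass_grid
    }

rng = np.random.default_rng(0)
features = rng.normal(size=(6, 3))            # toy kinematic features
is_signal = np.array([True, True, True, False, False, False])
signal_mass = np.array([500.0, 750.0, 1000.0, 0.0, 0.0, 0.0])
mass_grid = np.array([500.0, 750.0, 1000.0])  # hypothetical mass points

x_train = build_pnn_inputs(features, is_signal, signal_mass, mass_grid, rng)
x_scan = scan_mass_hypotheses(features, mass_grid)
```

A single classifier trained on `x_train` can then be evaluated on each entry of `x_scan`, which is how one network stands in for a set of per-mass classifiers.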
