Liudan Zhao

AuscultaBase: A Foundational Step Towards AI-Powered Body Sound Diagnostics

Nov 12, 2024

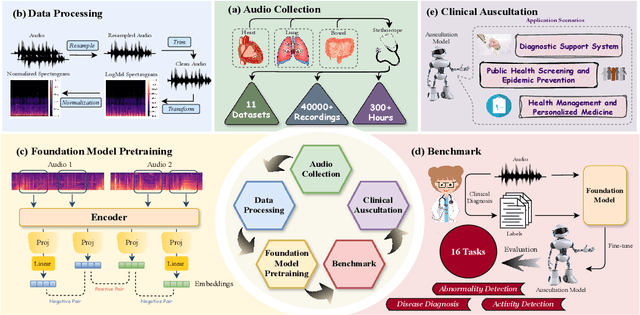

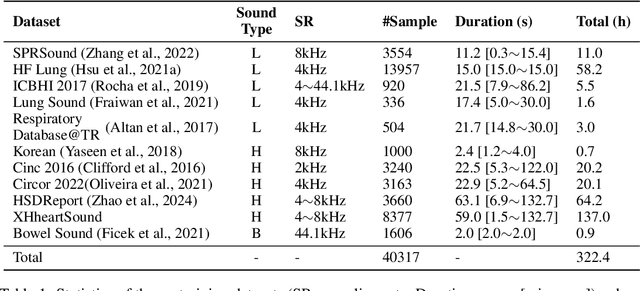

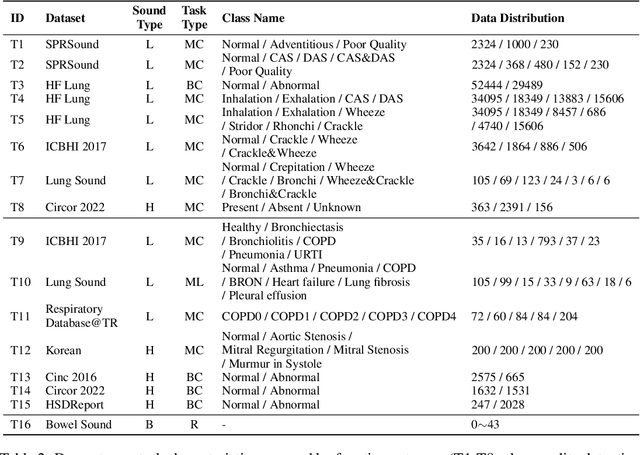

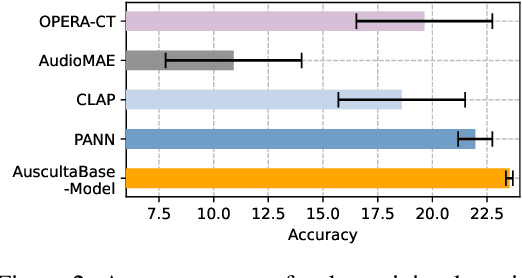

Abstract: Auscultation of internal body sounds is essential for diagnosing a range of health conditions, yet its effectiveness is often limited by clinicians' expertise and the acoustic constraints of human hearing, restricting its use across various clinical scenarios. To address these challenges, we introduce AuscultaBase, a foundational framework aimed at advancing body sound diagnostics through innovative data integration and contrastive learning techniques. Our contributions include the following: First, we compile AuscultaBase-Corpus, a large-scale, multi-source body sound database encompassing 11 datasets with 40,317 audio recordings and totaling 322.4 hours of heart, lung, and bowel sounds. Second, we develop AuscultaBase-Model, a foundational diagnostic model for body sounds, utilizing contrastive learning on the compiled corpus. Third, we establish AuscultaBase-Bench, a comprehensive benchmark containing 16 sub-tasks, assessing the performance of various open-source acoustic pre-trained models. Evaluation results indicate that our model outperforms all other open-source models in 12 out of 16 tasks, demonstrating the efficacy of our approach in advancing diagnostic capabilities for body sound analysis.

HSDreport: Heart Sound Diagnosis with Echocardiography Reports

Aug 16, 2024

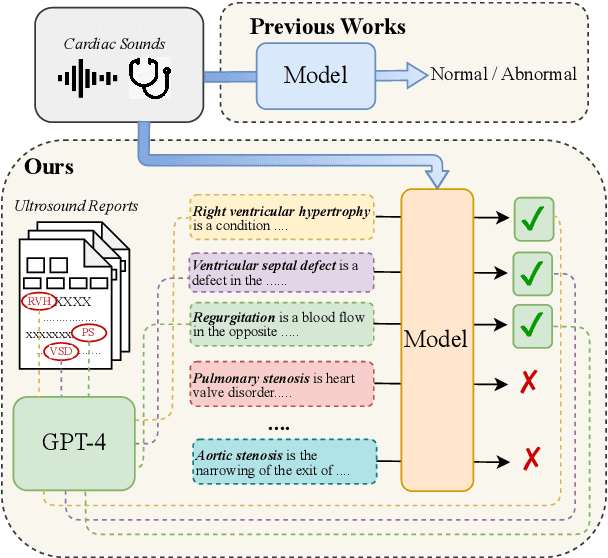

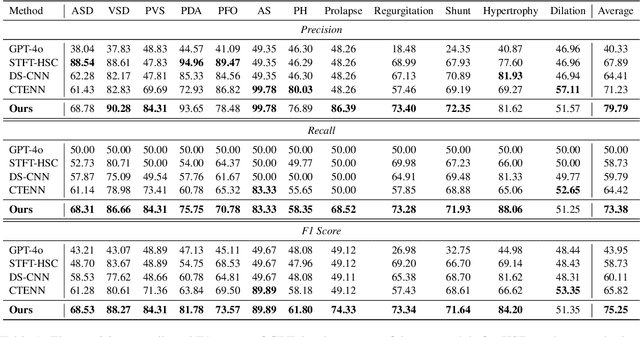

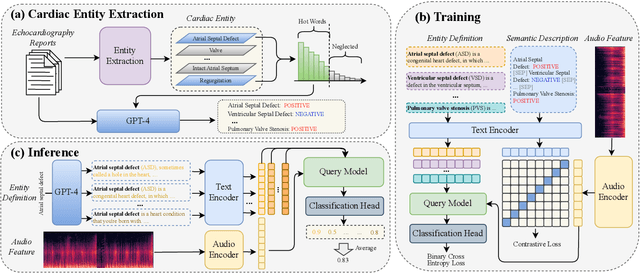

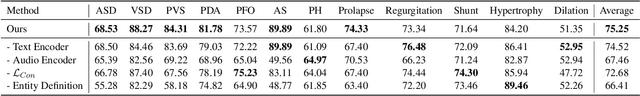

Abstract: Heart sound auscultation holds significant importance in the diagnosis of congenital heart disease. However, existing methods for Heart Sound Diagnosis (HSD) tasks are predominantly limited to a few fixed categories, framing the HSD task as a rigid classification problem that does not fully align with medical practice and offers only limited information to physicians. Besides, such methods do not utilize echocardiography reports, the gold standard in the diagnosis of related diseases. To tackle this challenge, we introduce HSDreport, a new benchmark for HSD, which mandates the direct utilization of heart sounds obtained from auscultation to predict echocardiography reports. This benchmark aims to merge the convenience of auscultation with the comprehensive nature of echocardiography reports. First, we collect a new dataset for this benchmark, comprising 2,275 heart sound samples along with their corresponding reports. Subsequently, we develop a knowledge-aware query-based transformer to handle this task. The intent is to leverage the capabilities of medically pre-trained models and the internal knowledge of large language models (LLMs) to address the task's inherent complexity and variability, thereby enhancing the robustness and scientific validity of the method. Furthermore, our experimental results indicate that our method significantly outperforms traditional HSD approaches and existing multimodal LLMs in detecting key abnormalities in heart sounds.
