Liang Ze Wong

'Generalization is hallucination' through the lens of tensor completions

Feb 24, 2025

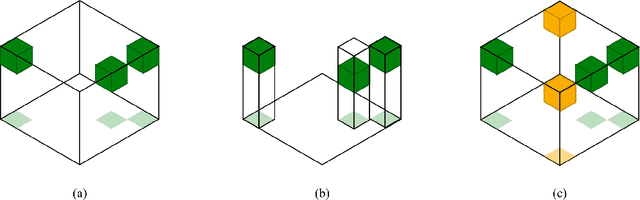

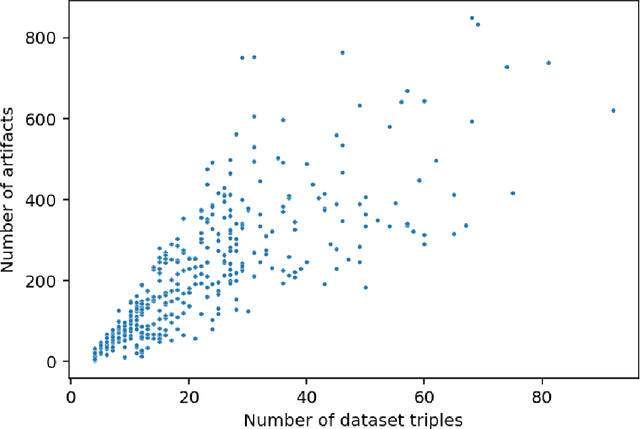

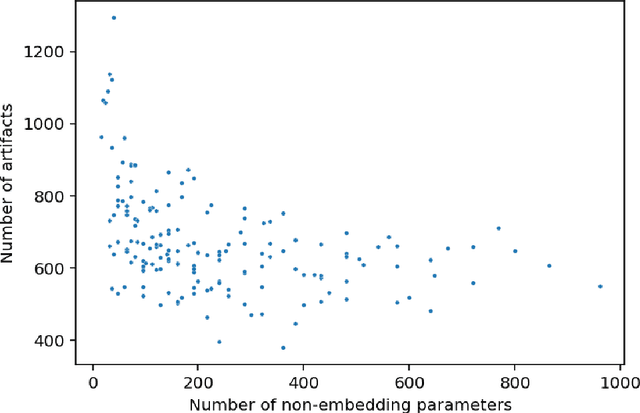

Abstract: In this short position paper, we introduce tensor completions and artifacts and make the case that they are a useful theoretical framework for understanding certain types of hallucinations and generalizations in language models.

Paying Attention to Facts: Quantifying the Knowledge Capacity of Attention Layers

Feb 07, 2025

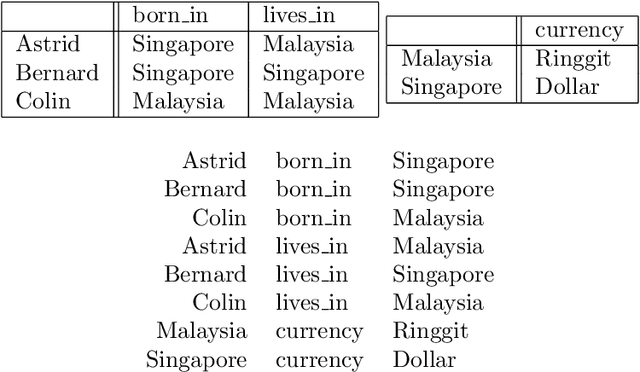

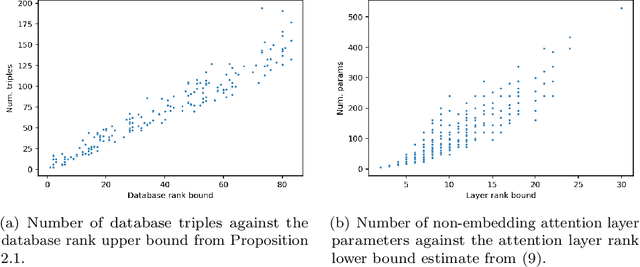

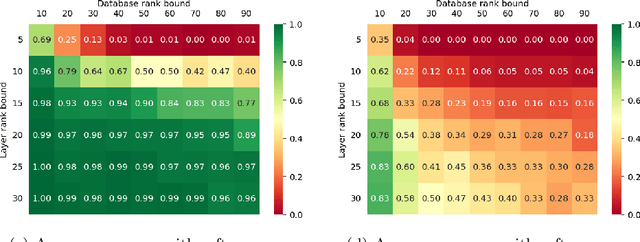

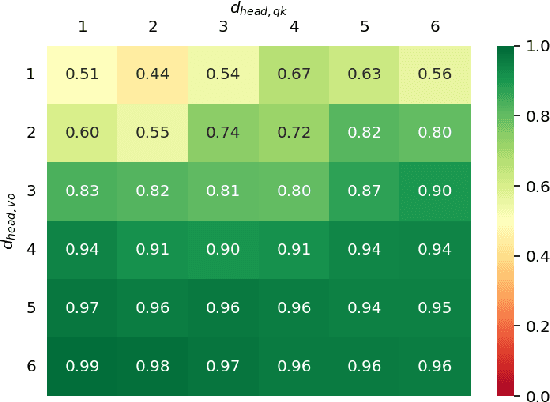

Abstract: In this paper, we investigate, from a linear-algebraic perspective, the ability of single-layer attention-only transformers (i.e., attention layers) to memorize facts contained in databases. We associate with each database a 3-tensor, propose the rank of this tensor as a measure of the size of the database, and provide bounds on the rank in terms of properties of the database. We also define a 3-tensor corresponding to an attention layer, and empirically demonstrate the relationship between its rank and database rank on a dataset of toy models and random databases. By highlighting the roles played by the value-output and query-key weights, and the effects of argmax and softmax on rank, our results shed light on the 'additive motif' of factual recall in transformers, while also suggesting a way of increasing layer capacity without increasing the number of parameters.

Rethinking stance detection: A theoretically-informed research agenda for user-level inference using language models

Feb 04, 2025

Abstract: Stance detection has emerged as a popular task in natural language processing research, enabled largely by the abundance of target-specific social media data. While there has been considerable research on the development of stance detection models, datasets, and applications, we highlight important gaps pertaining to (i) a lack of theoretical conceptualization of stance, and (ii) the treatment of stance at an individual- or user-level, as opposed to message-level. In this paper, we first review the interdisciplinary origins of stance as an individual-level construct to highlight relevant attributes (e.g., psychological features) that might be useful to incorporate in stance detection models. Further, we argue that recent pre-trained and large language models (LLMs) might offer a way to flexibly infer such user-level attributes and/or incorporate them in modelling stance. To better illustrate this, we briefly review and synthesize the emerging corpus of studies on using LLMs for inferring stance, and specifically on incorporating user attributes in such tasks. We conclude by proposing a four-point agenda for pursuing stance detection research that is theoretically informed, inclusive, and practically impactful.

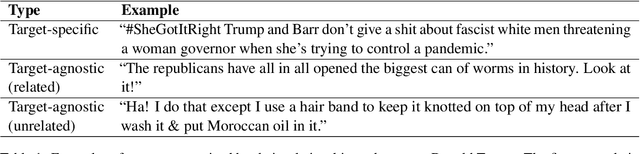

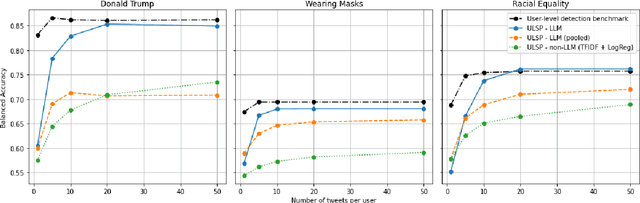

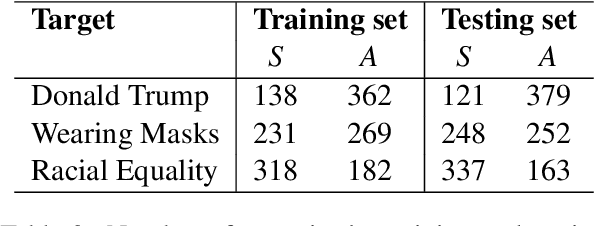

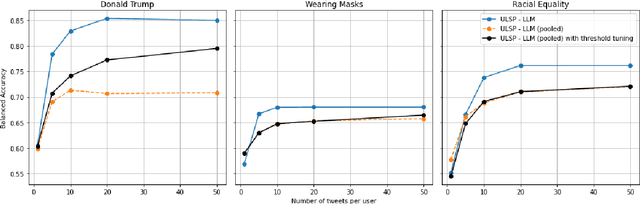

Predicting User Stances from Target-Agnostic Information using Large Language Models

Sep 22, 2024

Abstract: We investigate Large Language Models' (LLMs) ability to predict a user's stance on a target given a collection of their target-agnostic social media posts (i.e., user-level stance prediction). While we show early evidence that LLMs are capable of this task, we highlight considerable variability in the performance of the model across (i) the type of stance target, (ii) the prediction strategy, and (iii) the number of target-agnostic posts supplied. Post-hoc analyses further hint at the usefulness of target-agnostic posts in providing relevant information to LLMs through the presence of both surface-level (e.g., target-relevant keywords) and user-level features (e.g., encoding users' moral values). Overall, our findings suggest that LLMs might offer a viable method for determining public stances towards new topics based on historical and target-agnostic data. At the same time, we also call for further research to better understand LLMs' strong performance on the stance prediction task and how their effectiveness varies across task contexts.

Enhancing Stance Classification with Quantified Moral Foundations

Oct 15, 2023

Abstract: This study enhances stance detection on social media by incorporating deeper psychological attributes, specifically individuals' moral foundations. These theoretically-derived dimensions aim to provide a comprehensive profile of an individual's moral concerns, which recent work has linked to behaviour in a range of domains, including society, politics, health, and the environment. In this paper, we investigate how moral foundation dimensions can contribute to predicting an individual's stance on a given target. Specifically, we incorporate moral foundation features extracted from text, along with message semantic features, to classify stances at both message- and user-levels across a range of targets and models. Our preliminary results suggest that encoding moral foundations can enhance the performance of stance detection tasks and help illuminate the associations between specific moral foundations and online stances on target topics. The results highlight the importance of considering deeper psychological attributes in stance analysis and underscore the role of moral foundations in guiding online social behaviour.

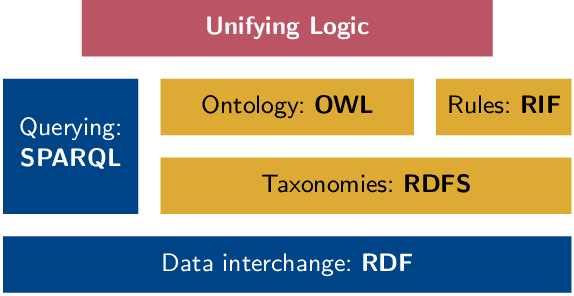

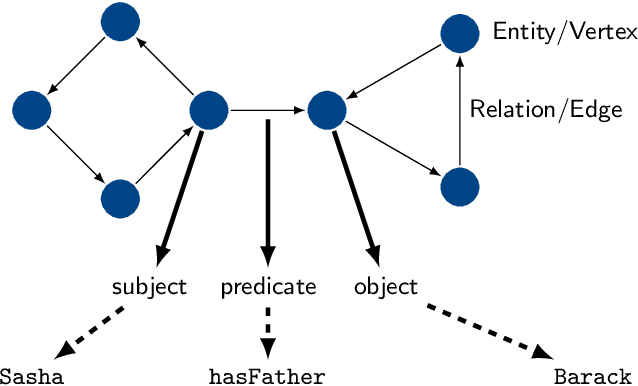

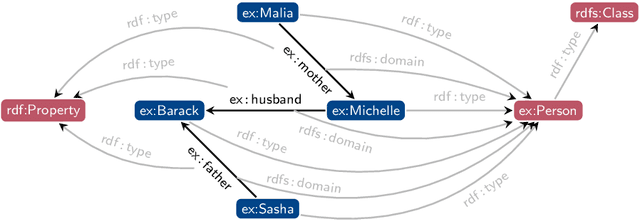

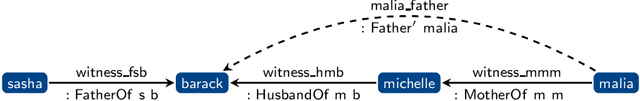

Dependently Typed Knowledge Graphs

Mar 08, 2020

Abstract: Reasoning over knowledge graphs is traditionally built upon a hierarchy of languages in the Semantic Web Stack. Starting from the Resource Description Framework (RDF) for knowledge graphs, more advanced constructs have been introduced through various syntax extensions to add reasoning capabilities to knowledge graphs. In this paper, we show how standardized semantic web technologies (RDF and its query language SPARQL) can be reproduced in a unified manner with dependent type theory. In addition to providing the basic functionalities of knowledge graphs, dependent types add expressiveness in encoding both entities and queries, explainability in answers to queries through witnesses, and compositionality and automation in the construction of witnesses. Using the Coq proof assistant, we demonstrate how to build and query dependently typed knowledge graphs as a proof of concept for future work in this direction.
