Le Zou

DAFD: Domain Adaptation via Feature Disentanglement for Image Classification

Jan 30, 2023

Abstract:A good feature representation is the key to image classification. In practice, image classifiers may be applied in scenarios different from what they have been trained on. This so-called domain shift leads to a significant performance drop in image classification. Unsupervised domain adaptation (UDA) reduces the domain shift by transferring the knowledge learned from a labeled source domain to an unlabeled target domain. We perform feature disentanglement for UDA by distilling category-relevant features and excluding category-irrelevant features from the global feature maps. This disentanglement prevents the network from overfitting to category-irrelevant information and makes it focus on information useful for classification. This reduces the difficulty of domain alignment and improves the classification accuracy on the target domain. We propose a coarse-to-fine domain adaptation method called Domain Adaptation via Feature Disentanglement~(DAFD), which has two components: (1)the Category-Relevant Feature Selection (CRFS) module, which disentangles the category-relevant features from the category-irrelevant features, and (2)the Dynamic Local Maximum Mean Discrepancy (DLMMD) module, which achieves fine-grained alignment by reducing the discrepancy within the category-relevant features from different domains. Combined with the CRFS, the DLMMD module can align the category-relevant features properly. We conduct comprehensive experiment on four standard datasets. Our results clearly demonstrate the robustness and effectiveness of our approach in domain adaptive image classification tasks and its competitiveness to the state of the art.

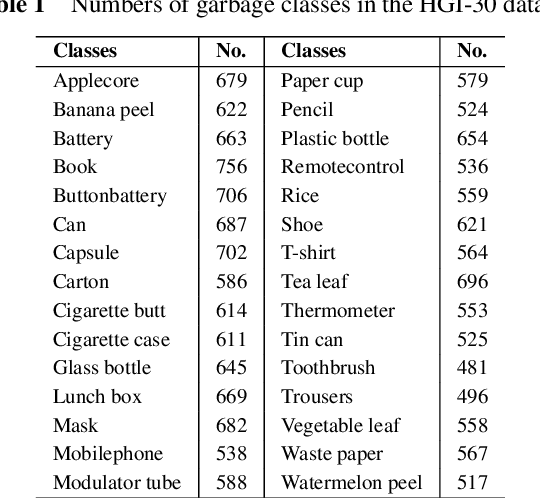

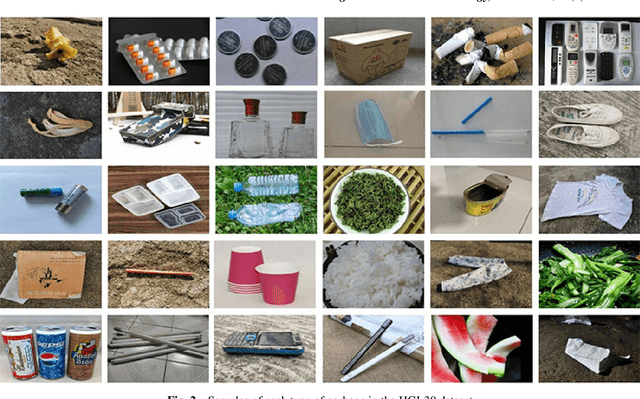

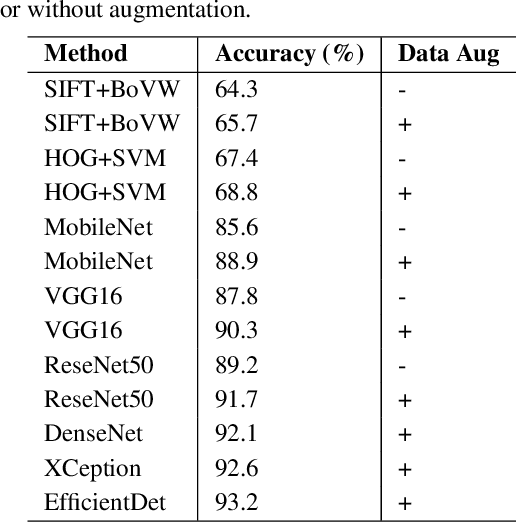

New Benchmark for Household Garbage Image Recognition

Feb 24, 2022

Abstract:Household garbage images are usually faced with complex backgrounds, variable illuminations, diverse angles, and changeable shapes, which bring a great difficulty in garbage image classification. Due to the ability to discover problem-specific features, deep learning and especially convolutional neural networks (CNNs) have been successfully and widely used for image representation learning. However, available and stable household garbage datasets are insufficient, which seriously limits the development of research and application. Besides, the state of the art in the field of garbage image classification is not entirely clear. To solve this problem, in this study, we built a new open benchmark dataset for household garbage image classification by simulating different lightings, backgrounds, angles, and shapes. This dataset is named 30 Classes of Household Garbage Images (HGI-30), which contains 18,000 images of 30 household garbage classes. The publicly available HGI-30 dataset allows researchers to develop accurate and robust methods for household garbage recognition. We also conducted experiments and performance analysis of the state-of-the-art deep CNN methods on HGI-30, which serves as baseline results on this benchmark.

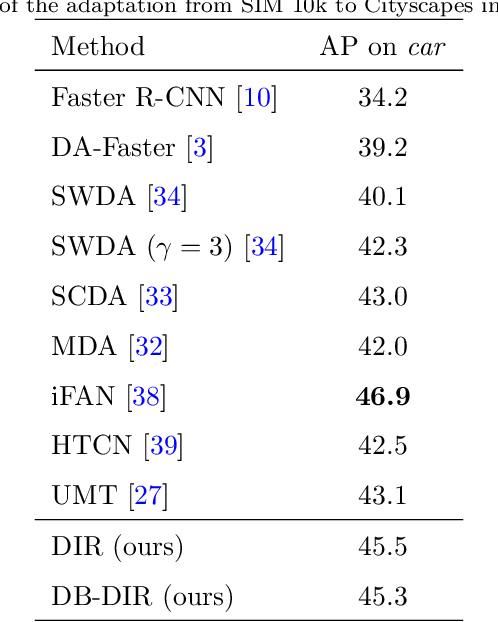

Domain-Invariant Proposals based on a Balanced Domain Classifier for Object Detection

Feb 12, 2022

Abstract:Object recognition from images means to automatically find object(s) of interest and to return their category and location information. Benefiting from research on deep learning, like convolutional neural networks~(CNNs) and generative adversarial networks, the performance in this field has been improved significantly, especially when training and test data are drawn from similar distributions. However, mismatching distributions, i.e., domain shifts, lead to a significant performance drop. In this paper, we build domain-invariant detectors by learning domain classifiers via adversarial training. Based on the previous works that align image and instance level features, we mitigate the domain shift further by introducing a domain adaptation component at the region level within Faster \mbox{R-CNN}. We embed a domain classification network in the region proposal network~(RPN) using adversarial learning. The RPN can now generate accurate region proposals in different domains by effectively aligning the features between them. To mitigate the unstable convergence during the adversarial learning, we introduce a balanced domain classifier as well as a network learning rate adjustment strategy. We conduct comprehensive experiments using four standard datasets. The results demonstrate the effectiveness and robustness of our object detection approach in domain shift scenarios.

Skeleton Based Action Recognition using a Stacked Denoising Autoencoder with Constraints of Privileged Information

Mar 12, 2020

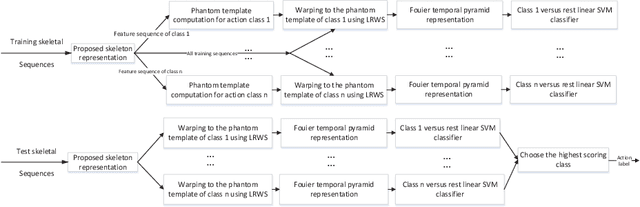

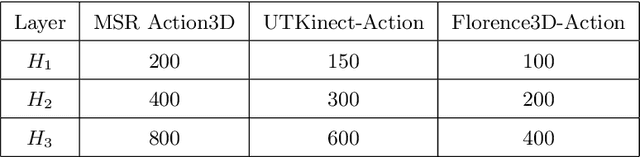

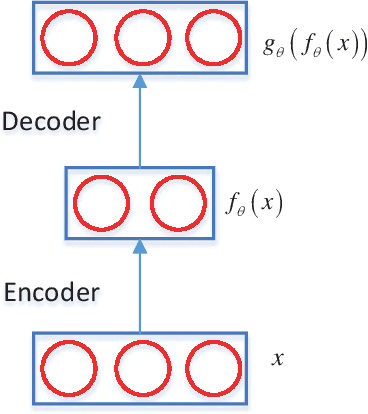

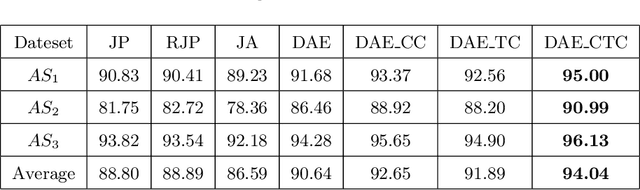

Abstract:Recently, with the availability of cost-effective depth cameras coupled with real-time skeleton estimation, the interest in skeleton-based human action recognition is renewed. Most of the existing skeletal representation approaches use either the joint location or the dynamics model. Differing from the previous studies, we propose a new method called Denoising Autoencoder with Temporal and Categorical Constraints (DAE_CTC)} to study the skeletal representation in a view of skeleton reconstruction. Based on the concept of learning under privileged information, we integrate action categories and temporal coordinates into a stacked denoising autoencoder in the training phase, to preserve category and temporal feature, while learning the hidden representation from a skeleton. Thus, we are able to improve the discriminative validity of the hidden representation. In order to mitigate the variation resulting from temporary misalignment, a new method of temporal registration, called Locally-Warped Sequence Registration (LWSR), is proposed for registering the sequences of inter- and intra-class actions. We finally represent the sequences using a Fourier Temporal Pyramid (FTP) representation and perform classification using a combination of LWSR registration, FTP representation, and a linear Support Vector Machine (SVM). The experimental results on three action data sets, namely MSR-Action3D, UTKinect-Action, and Florence3D-Action, show that our proposal performs better than many existing methods and comparably to the state of the art.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge