Kunbo Zhang

Graph and Skipped Transformer: Exploiting Spatial and Temporal Modeling Capacities for Efficient 3D Human Pose Estimation

Jul 03, 2024

Abstract: In recent years, 2D-to-3D pose uplifting in monocular 3D Human Pose Estimation (HPE) has attracted widespread research interest. GNN-based methods and Transformer-based methods have become mainstream architectures due to their advanced spatial and temporal feature learning capacities. However, existing approaches typically construct joint-wise and frame-wise attention alignments in spatial and temporal domains, resulting in dense connections that introduce considerable local redundancy and computational overhead. In this paper, we take a global approach to exploit spatio-temporal information and realise efficient 3D HPE with a concise Graph and Skipped Transformer architecture. Specifically, in the Spatial Encoding stage, coarse-grained body parts are deployed to construct a Spatial Graph Network with a fully data-driven adaptive topology, ensuring model flexibility and generalizability across various poses. In the Temporal Encoding and Decoding stages, a simple yet effective Skipped Transformer is proposed to capture long-range temporal dependencies and implement hierarchical feature aggregation. A straightforward Data Rolling strategy is also developed to introduce dynamic information into the 2D pose sequence. Extensive experiments are conducted on the Human3.6M, MPI-INF-3DHP and HumanEva benchmarks. The G-SFormer series of methods achieves superior performance compared with previous state-of-the-art methods with only around ten percent of the parameters and significantly reduced computational complexity. G-SFormer also exhibits outstanding robustness to inaccuracies in detected 2D poses.

Multiscale Dynamic Graph Representation for Biometric Recognition with Occlusions

Jul 27, 2023

Abstract: Occlusion is a common problem with biometric recognition in the wild. The generalization ability of CNNs greatly decreases due to the adverse effects of various occlusions. To this end, we propose a novel unified framework integrating the merits of both CNNs and graph models to overcome occlusion problems in biometric recognition, called multiscale dynamic graph representation (MS-DGR). More specifically, a group of deep features reflected on certain subregions is recrafted into a feature graph (FG). Each node inside the FG is deemed to characterize a specific local region of the input sample, and the edges imply the co-occurrence of non-occluded regions. By analyzing the similarities of the node representations and measuring the topological structures stored in the adjacency matrix, the proposed framework leverages dynamic graph matching to judiciously discard the nodes corresponding to the occluded parts. The multiscale strategy is further incorporated to attain more diverse nodes representing regions of various sizes. Furthermore, the proposed framework exhibits a more illustrative and reasonable inference by showing the paired nodes. Extensive experiments demonstrate the superiority of the proposed framework, which boosts the accuracy in both natural and occlusion-simulated cases by a large margin compared with that of baseline methods.

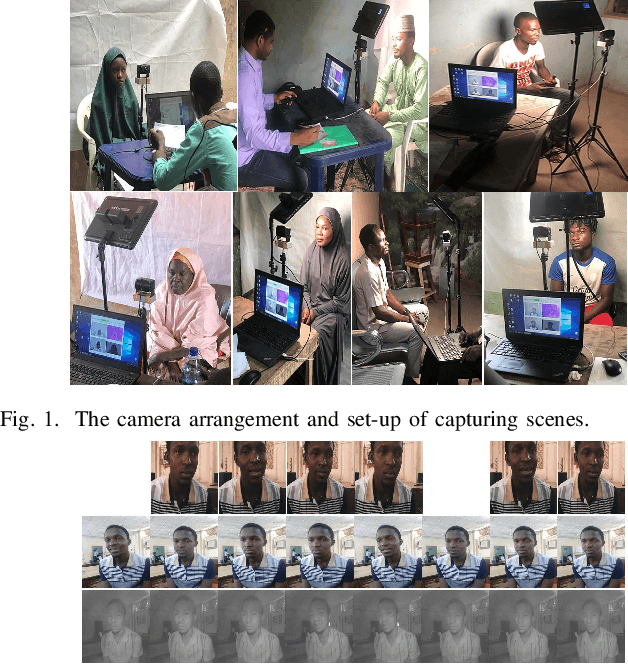

CASIA-Iris-Africa: A Large-scale African Iris Image Database

Feb 25, 2023

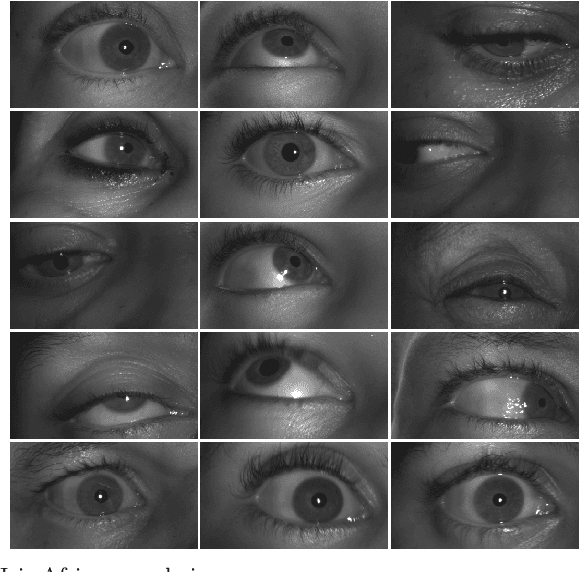

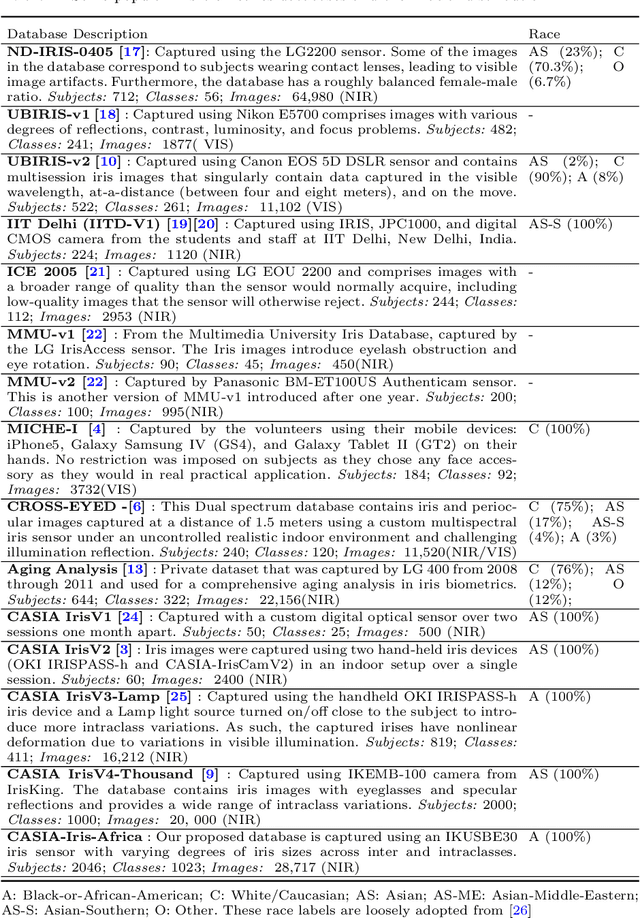

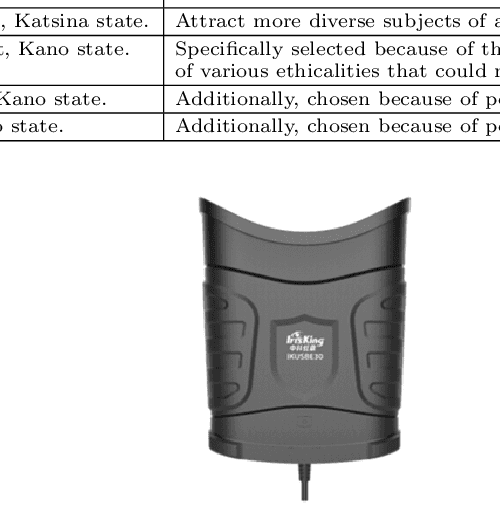

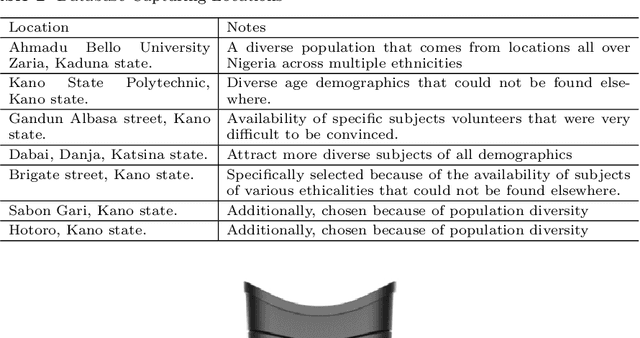

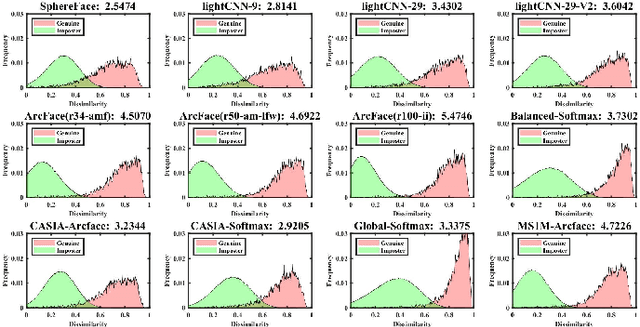

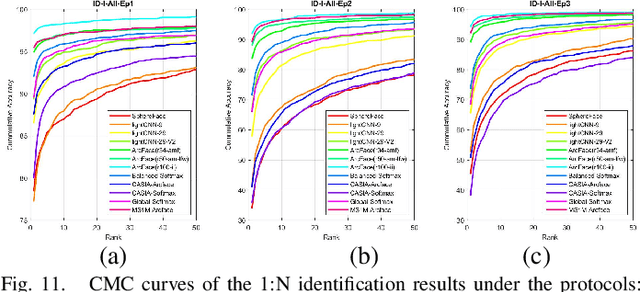

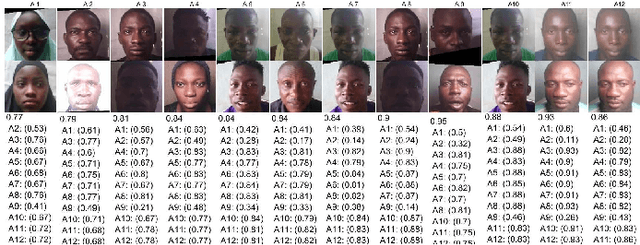

Abstract: Iris biometrics is a phenotypic biometric trait that has proven to be agnostic to human natural physiological changes. Research on iris biometrics has progressed tremendously, partly due to publicly available iris databases. Various databases have been available to researchers that address pressing iris biometric challenges such as constrained, mobile, multispectral, synthetic, long-distance, contact lens, and liveness detection scenarios. However, these databases mostly contain subjects of Caucasian and Asian descent with very few Africans. Despite many investigative studies on racial bias in face biometrics, very few studies on iris biometrics have been published, mainly due to the lack of racially diverse large-scale databases containing sufficient iris samples of Africans in the public domain. Furthermore, most of these databases contain a relatively small number of subjects and labelled images. This paper proposes a large-scale African database named CASIA-Iris-Africa that can be used as a complementary database for the iris recognition community to mitigate the effect of racial biases on Africans. The database contains 28,717 images of 1023 African subjects (2046 iris classes) with age, gender, and ethnicity attributes that can be useful in demographically sensitive studies of Africans. Sets of specific application protocols are incorporated with the database to ensure the database's variability and scalability. Performance results of some open-source SOTA algorithms on the database are presented, which will serve as baseline performances. The relatively poor performances of the baseline algorithms on the proposed database despite better performance on other databases prove that racial biases exist in these iris recognition algorithms. The database will be made available on our website: http://www.idealtest.org.

HumanDiffusion: a Coarse-to-Fine Alignment Diffusion Framework for Controllable Text-Driven Person Image Generation

Nov 11, 2022

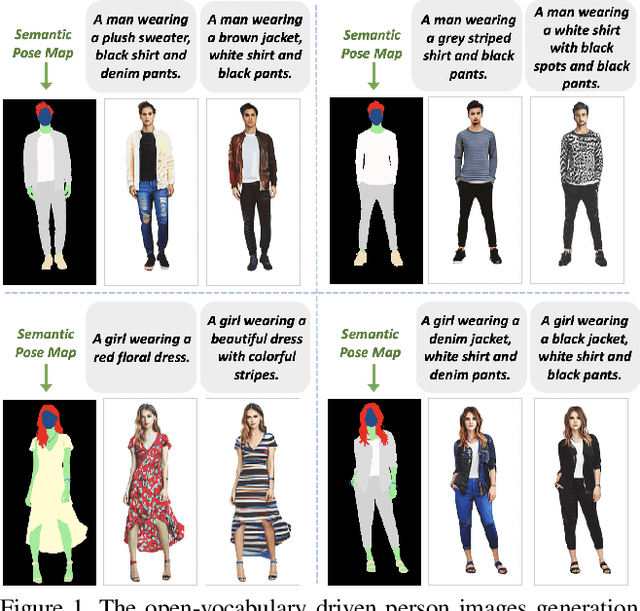

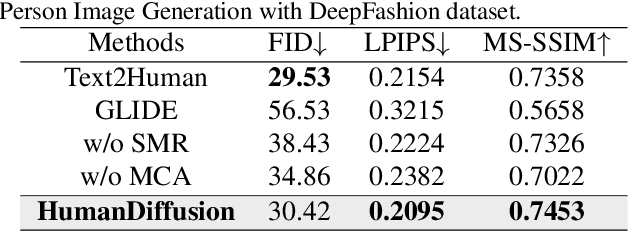

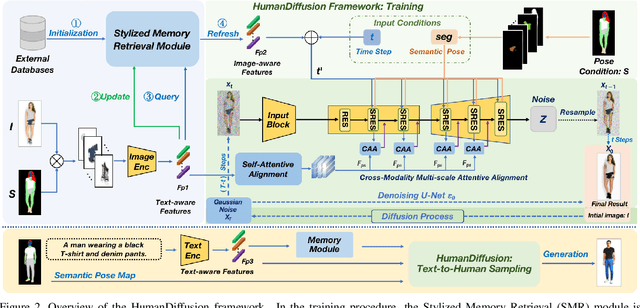

Abstract: Text-driven person image generation is an emerging and challenging task in cross-modality image generation. Controllable person image generation promotes a wide range of applications such as digital human interaction and virtual try-on. However, previous methods mostly employ single-modality information as the prior condition (e.g. pose-guided person image generation), or utilize preset words for text-driven human synthesis. Describing a person's appearance with a sentence composed of free words, together with an editable semantic pose map, is more user-friendly. In this paper, we propose HumanDiffusion, a coarse-to-fine alignment diffusion framework, for text-driven person image generation. Specifically, two collaborative modules are proposed: the Stylized Memory Retrieval (SMR) module for fine-grained feature distillation in data processing, and the Multi-scale Cross-modality Alignment (MCA) module for coarse-to-fine feature alignment in diffusion. These two modules guarantee the alignment quality of the text and image, from image-level to feature-level, from low-resolution to high-resolution. As a result, HumanDiffusion realizes open-vocabulary person image generation with desired semantic poses. Extensive experiments conducted on DeepFashion demonstrate the superiority of our method compared with previous approaches. Moreover, better results could be obtained for complicated person images with various details and uncommon poses.

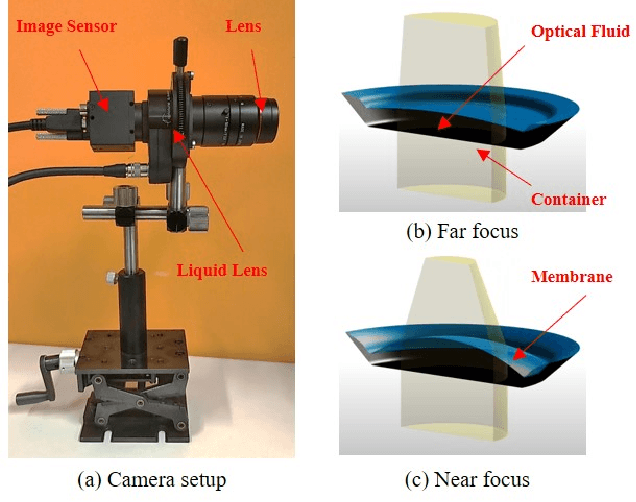

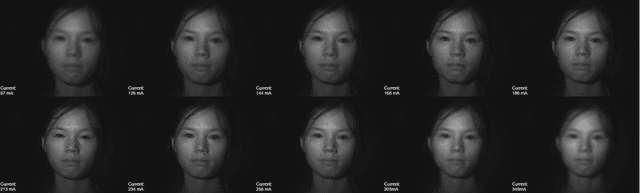

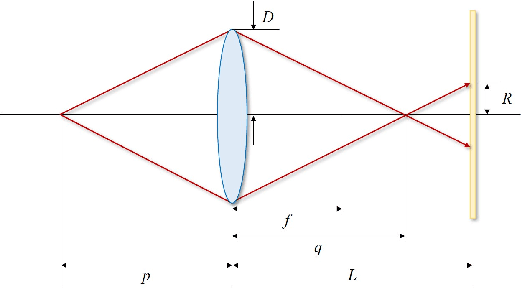

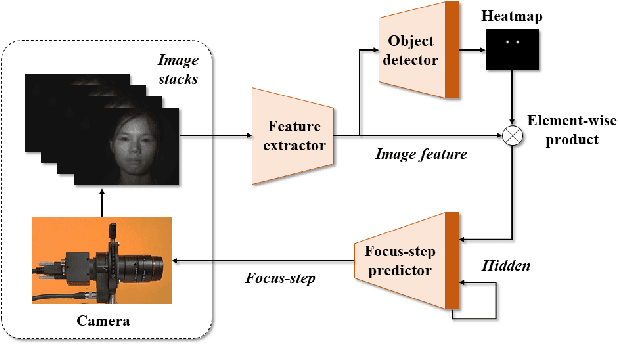

An End-to-End Autofocus Camera for Iris on the Move

Jun 29, 2021

Abstract: For distant iris recognition, a long focal length lens is generally used to ensure the resolution of iris images, which reduces the depth of field and leads to potential defocus blur. To accommodate users at different distances, it is necessary to control focus quickly and accurately. For users in motion, the correct focus on the iris area must also be maintained continuously. In this paper, we introduce a novel rapid autofocus camera for active refocusing of the iris area of moving objects using a focus-tunable lens. Our end-to-end computational algorithm can predict the best focus position from one single blurred image and generate a lens diopter control signal automatically. This scene-based active manipulation method enables real-time focus tracking of the iris area of a moving object. We built a testing bench to collect real-world focal stacks for evaluation of the autofocus methods. Our camera has reached an autofocus speed of over 50 fps. The results demonstrate the advantages of our proposed camera for biometric perception in static and dynamic scenes. The code is available at https://github.com/Debatrix/AquulaCam.

CASIA-Face-Africa: A Large-scale African Face Image Database

May 11, 2021

Abstract: Face recognition is a popular and well-studied area with wide applications in our society. However, racial bias has been proven to be inherent in most State Of The Art (SOTA) face recognition systems. Many investigative studies on face recognition algorithms have reported higher false positive rates for African subject cohorts than for other cohorts. The lack of large-scale African face image databases in the public domain is one of the main restrictions in studying the racial bias problem of face recognition. To this end, we collect a face image database named CASIA-Face-Africa, which contains 38,546 images of 1,183 African subjects. Multi-spectral cameras are utilized to capture the face images under various illumination settings. Demographic attributes and facial expressions of the subjects are also carefully recorded. For landmark detection, each face image in the database is manually labeled with 68 facial keypoints. A group of evaluation protocols is constructed according to different applications, tasks, partitions and scenarios. The performances of SOTA face recognition algorithms without re-training are reported as baselines. The proposed database, along with its face landmark annotations, evaluation protocols and preliminary results, forms a good benchmark to study the essential aspects of face biometrics for African subjects, especially face image preprocessing, face feature analysis and matching, facial expression recognition, sex/age estimation, ethnic classification, face image generation, etc. The database can be downloaded from our website: http://www.cripacsir.cn/dataset/

All-in-Focus Iris Camera With a Great Capture Volume

Nov 19, 2020

Abstract: The imaging volume of an iris recognition system has been restricting the throughput and cooperation convenience in biometric applications. Numerous attempted improvements are still impractical as replacements for the dominant fixed-focus lens in stand-off iris recognition, due to their incremental performance gains and complicated optical design. In this study, we develop a novel all-in-focus iris imaging system using a focus-tunable lens and a 2D steering mirror to greatly extend the capture volume by a spatiotemporal multiplexing method. Our iris imaging depth of field extension system requires no mechanical motion and is capable of adjusting the focal plane at extremely high speed. In addition, the motorized reflection mirror adaptively steers the light beam to extend the horizontal and vertical fields of view in an active manner. The proposed all-in-focus iris camera increases the depth of field up to 3.9 m, which is a factor of 37.5 compared with a conventional long focal length lens. We also experimentally demonstrate the capability of this 3D light beam steering imaging system in real-time multi-person iris refocusing using dynamic focal stacks and the potential of continuous iris recognition for moving participants.
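
As a rough reading of the figures quoted in this abstract, and assuming the "factor of 37.5" is the ratio of the extended depth of field to that of the conventional lens (the abstract does not state the baseline directly), the implied conventional depth of field would be:

$$
\mathrm{DoF}_{\text{conventional}} \approx \frac{\mathrm{DoF}_{\text{extended}}}{37.5} = \frac{3.9\ \text{m}}{37.5} = 0.104\ \text{m} \approx 10.4\ \text{cm}
$$

This is only back-of-the-envelope arithmetic on the two numbers given above, not a value reported in the abstract.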

Recognition Oriented Iris Image Quality Assessment in the Feature Space

Sep 27, 2020

Abstract: A large portion of iris images captured in real-world scenarios are of poor quality due to the uncontrolled environment and the non-cooperative subject. To ensure that the recognition algorithm is not affected by low-quality images, traditional methods based on hand-crafted factors discard most images, which causes system timeouts and disrupts the user experience. In this paper, we propose a recognition-oriented quality metric and assessment method for iris images to deal with the problem. The method regards the Distance in Feature Space (DFS) of the iris image embedding as the quality metric, and the prediction is based on deep neural networks with the attention mechanism. The quality metric proposed in this paper can significantly improve the performance of the recognition algorithm while reducing the number of images discarded for recognition, which is advantageous over iris quality assessment methods based on hand-crafted factors. The relationship between Image Rejection Rate (IRR) and Equal Error Rate (EER) is proposed to evaluate the performance of the quality assessment algorithm under the same image quality distribution and the same recognition algorithm. Compared with methods based on hand-crafted factors, the proposed method is an attempt to bridge the gap between image quality assessment and biometric recognition. The code is available at https://github.com/Debatrix/DFSNet.
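
The IRR/EER relationship mentioned in this abstract can be illustrated with a minimal sketch. It is not taken from the DFSNet repository: the function names, the "higher quality score is better" convention, the toy data, and the simple threshold sweep used to approximate the EER are all assumptions made for illustration. The idea is to reject the lowest-quality fraction of images (the IRR), keep only the comparison pairs whose two images both survive, and recompute the EER on what remains.

```python
def equal_error_rate(genuine, impostor):
    """Approximate EER for distance scores (smaller distance = more similar)."""
    best_gap, eer = float("inf"), 1.0
    for t in sorted(set(genuine) | set(impostor)):
        frr = sum(d > t for d in genuine) / len(genuine)      # genuine pairs rejected
        far = sum(d <= t for d in impostor) / len(impostor)   # impostor pairs accepted
        gap = abs(far - frr)
        if gap < best_gap:
            best_gap, eer = gap, (far + frr) / 2
    return eer


def eer_at_rejection_rate(quality, pairs, irr):
    """EER after discarding the lowest-quality fraction `irr` of images.

    quality: dict image_id -> score (hypothetical convention: higher is better)
    pairs:   list of (id_a, id_b, distance, is_genuine)
    """
    ranked = sorted(quality, key=quality.get)        # worst image first
    n_reject = int(round(irr * len(ranked)))
    kept = set(ranked[n_reject:])
    genuine = [d for a, b, d, g in pairs if g and a in kept and b in kept]
    impostor = [d for a, b, d, g in pairs if not g and a in kept and b in kept]
    if not genuine or not impostor:
        return None                                  # EER undefined without both kinds
    return equal_error_rate(genuine, impostor)


# Toy data (made up): two subjects "a" and "b", two low-quality images a3 and b2.
quality = {"a1": 0.9, "a2": 0.8, "a3": 0.1, "b1": 0.85, "b2": 0.2}
pairs = [
    ("a1", "a2", 0.2, True), ("a1", "a3", 0.7, True),
    ("a2", "a3", 0.8, True), ("b1", "b2", 0.6, True),
    ("a1", "b1", 0.9, False), ("a2", "b1", 0.8, False),
    ("a3", "b1", 0.5, False), ("a1", "b2", 0.55, False),
    ("a2", "b2", 0.75, False), ("a3", "b2", 0.4, False),
]

for irr in (0.0, 0.4):
    print(irr, eer_at_rejection_rate(quality, pairs, irr))
```

Plotting EER against IRR for two quality metrics, with the same images and the same matcher, gives a like-for-like comparison: the metric whose curve drops faster is discarding the images that actually hurt recognition.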

Face Anti-Spoofing by Learning Polarization Cues in a Real-World Scenario

Mar 19, 2020

Abstract: Face anti-spoofing is the key to preventing security breaches in biometric recognition applications. Existing software-based and hardware-based face liveness detection methods are effective in constrained environments or designated datasets only. Deep learning methods using RGB and infrared images demand a large amount of training data for new attacks. In this paper, we present a face anti-spoofing method for real-world scenarios that automatically learns the physical characteristics in polarization images of a real face compared to a deceptive attack. A computational framework is developed to extract and classify the unique face features using convolutional neural networks and SVM together. Our real-time polarized face anti-spoofing (PAAS) detection method uses an on-chip integrated polarization imaging sensor with optimized processing algorithms. Extensive experiments demonstrate the advantages of the PAAS technique to counter diverse face spoofing attacks (print, replay, mask) in uncontrolled indoor and outdoor conditions by learning polarized face images of 33 people. A four-directional polarized face image dataset is released to inspire future applications within the biometric anti-spoofing field.
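
For readers unfamiliar with four-directional polarization images, the sketch below shows the standard polarimetry formulas for turning intensities measured behind 0°, 45°, 90° and 135° polarizers into linear Stokes parameters, the degree of linear polarization (DoLP) and the angle of linear polarization (AoLP). The abstract does not say which polarization features the PAAS method feeds to its CNN and SVM, so this is general background under that assumption, not the authors' pipeline; the function names are hypothetical.

```python
import math


def stokes_from_four_angles(i0, i45, i90, i135):
    """Linear Stokes parameters from intensities behind 0/45/90/135 degree polarizers."""
    s0 = (i0 + i45 + i90 + i135) / 2.0   # total intensity
    s1 = i0 - i90                        # horizontal vs. vertical component
    s2 = i45 - i135                      # +45 vs. -45 degree component
    return s0, s1, s2


def dolp_aolp(i0, i45, i90, i135):
    """Degree (0..1) and angle (radians) of linear polarization for one pixel."""
    s0, s1, s2 = stokes_from_four_angles(i0, i45, i90, i135)
    dolp = math.hypot(s1, s2) / s0 if s0 > 0 else 0.0
    aolp = 0.5 * math.atan2(s2, s1)
    return dolp, aolp


# Unpolarized light: equal intensity behind every polarizer.
unpolarized = dolp_aolp(1.0, 1.0, 1.0, 1.0)
# Fully horizontally polarized light of unit intensity.
horizontal = dolp_aolp(1.0, 0.5, 0.0, 0.5)
print(unpolarized, horizontal)
```

Applied per pixel, these quantities give maps in which materials with different surface reflection properties (for example skin versus paper or a screen) may look different, which is the kind of physical cue the abstract refers to.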
